Long-context LLMs need context windows large enough for complex tasks, much as humans rely on working memory. Research has therefore focused on extending context length so that models can handle longer content, using both zero-shot methods and fine-tuning to expand this memory capacity. Yet despite these advances on the input side (up to 100,000 tokens), existing LLMs struggle to produce outputs longer than about 2,000 words, a clear capability gap. Alignment training already helps LLMs prioritize instructions and adhere to constraints such as a requested length.

Aligning LLMs to produce ultra-long outputs remains underexplored and represents a critical research gap. Prior work has mapped the limitations and potential of long-context LLMs, laying the groundwork for generating extensive, coherent text and for closing the gap between how much these models can read and how much they can write.

Current long-context LLMs can process inputs of up to 100,000 tokens but struggle to generate outputs beyond 2,000 words, which limits applications that require extensive text generation. The authors’ analysis shows that state-of-the-art models consistently fail to produce longer outputs. Meanwhile, user interaction logs indicate that over 1% of prompts request outputs exceeding 2,000 words, evidence of real demand for models that can write longer texts. The authors trace this limitation to the scarcity of long-output examples in existing supervised fine-tuning (SFT) datasets.
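How could one estimate a figure like "over 1% of prompts"? The authors’ log-analysis procedure is not described here, so the following is only a minimal sketch, assuming prompts state their desired length explicitly (e.g. "3,000-word essay" or "in 500 words"). The regex, the function names, and the default threshold are illustrative choices, not the authors’ code.

```python
import re
from typing import Iterable, Optional

# Illustrative pattern (an assumption): matches "3000 words", "3,000 words", "3,000-word".
_LENGTH_PATTERN = re.compile(r"(\d{1,3}(?:,\d{3})+|\d+)\s*-?\s*words?\b", re.IGNORECASE)


def requested_word_count(prompt: str) -> Optional[int]:
    """Return the largest explicit word count mentioned in a prompt, or None if there is none."""
    counts = [int(m.group(1).replace(",", "")) for m in _LENGTH_PATTERN.finditer(prompt)]
    return max(counts) if counts else None


def share_requesting_long_output(prompts: Iterable[str], threshold: int = 2000) -> float:
    """Fraction of prompts whose explicit length request exceeds `threshold` words."""
    prompts = list(prompts)
    if not prompts:
        return 0.0
    counts = (requested_word_count(p) for p in prompts)
    long_requests = sum(1 for n in counts if n is not None and n > threshold)
    return long_requests / len(prompts)


if __name__ == "__main__":
    logs = [
        "Write a 3,000-word essay on tides.",
        "Summarize this in 200 words.",
        "Translate this sentence into French.",
    ]
    print(share_requesting_long_output(logs))  # one of three prompts asks for > 2,000 words
```

Prompts that ask for "a long report" without a number are simply not counted, so a pattern-based estimate like this is a lower bound on real demand.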

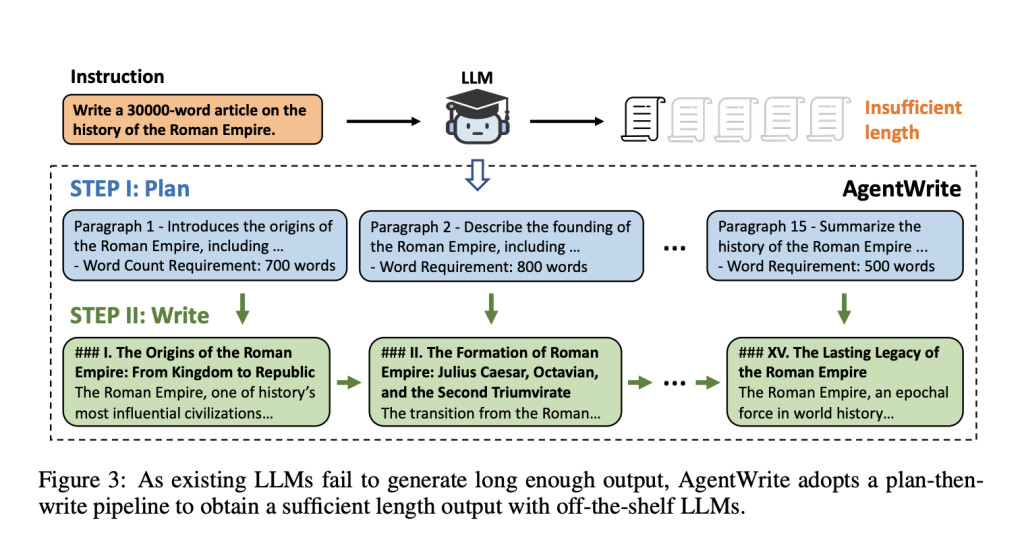

To address this, the authors propose AgentWrite, an agent-based pipeline that decomposes ultra-long generation tasks into subtasks, enabling off-the-shelf LLMs to produce coherent outputs exceeding 20,000 words. Using it, they construct LongWriter-6k, a dataset of 6,000 SFT examples with outputs ranging from 2,000 to 32,000 words. Their 9B-parameter model, further improved through DPO, achieves state-of-the-art performance on a new benchmark for ultra-long generation, showing that existing long-context LLMs already have this potential once given appropriate training data.
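The decomposition can be illustrated with a plan-then-write loop: first ask the model for an outline with a word budget per section, then write each section in order while feeding back the text produced so far. This is a minimal sketch under stated assumptions, not the authors’ implementation: the `llm` callable, the prompt wording, the outline line format, and the names `Section`, `parse_plan`, and `agent_write` are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


@dataclass
class Section:
    description: str
    target_words: int


def parse_plan(plan_text: str) -> List[Section]:
    """Parse assumed outline lines like 'Paragraph 1 - Main point: ... - Word count: 400'."""
    sections = []
    for line in plan_text.splitlines():
        match = re.search(r"Word count:\s*(\d+)", line, re.IGNORECASE)
        if match:
            description = line[: match.start()].strip(" -")
            sections.append(Section(description, int(match.group(1))))
    return sections


def agent_write(instruction: str, llm: LLM) -> str:
    # Step 1 (plan): ask for an outline with a word budget for each section.
    plan_prompt = (
        "Break the following writing task into sections. For each section, write one line "
        "'Paragraph N - Main point: <summary> - Word count: <number>'.\n\n" + instruction
    )
    sections = parse_plan(llm(plan_prompt))

    # Step 2 (write): generate sections in order, conditioning on the text written so far,
    # so each call only has to produce a short, coherent continuation.
    written: List[str] = []
    for i, section in enumerate(sections, start=1):
        write_prompt = (
            f"Task: {instruction}\n\nText so far:\n{''.join(written)}\n\n"
            f"Write section {i}: {section.description}. "
            f"Aim for about {section.target_words} words. Output only the new section."
        )
        written.append(llm(write_prompt).strip() + "\n\n")
    return "".join(written).strip()
```

Because each call only generates one section of a few hundred words, no single call has to exceed the model’s usual output length; the total length comes from the number of sections in the plan.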

The paper introduces the AgentWrite framework, designed to address the limitations of existing language models in producing lengthy text. This framework utilizes off-the-shelf LLMs to generate extended and coherent outputs. To enhance model training, the authors developed the LongWriter-6k dataset, consisting of 6,000 supervised fine-tuning data points with output lengths ranging from 2,000 to 32,000 words. They also created the LongBench-Write benchmark to evaluate the effectiveness of their approach, including diverse user writing instructions with specified output lengths.
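Since every LongBench-Write instruction specifies an output length, one natural check is how closely a response hits that target. The benchmark’s own length metric is not described in this summary, so the function below is a hypothetical, deliberately simple score (100 at the requested length, falling linearly with relative error), not the authors’ formula. It also assumes whitespace-delimited word counting, which would not suit languages such as Chinese.

```python
def word_count(text: str) -> int:
    """Whitespace-delimited word count (an assumption; CJK text needs a different tokenizer)."""
    return len(text.split())


def length_adherence(output: str, required_words: int) -> float:
    """Hypothetical 0-100 score: 100 at the required length, linear penalty on relative error."""
    if required_words <= 0:
        raise ValueError("required_words must be positive")
    relative_error = abs(word_count(output) - required_words) / required_words
    return 100.0 * max(0.0, 1.0 - relative_error)


if __name__ == "__main__":
    draft = " ".join(["word"] * 1500)
    print(length_adherence(draft, 2000))  # 1,500 of 2,000 requested words -> 75.0
```

A symmetric penalty like this treats overshooting and undershooting equally; a real benchmark might reasonably penalize falling short of a requested length more heavily.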

The methodology uses an LLM-as-a-judge approach, with GPT-4o scoring output quality across multiple dimensions such as relevance, accuracy, coherence, and reading experience. Training proceeds in two stages: existing models are first fine-tuned on the new LongWriter-6k dataset, then further trained on preference data (via DPO) to improve adherence to long writing instructions. By combining new data-generation techniques, a comprehensive evaluation benchmark, and these training strategies, the authors aim to substantially improve the long-output capabilities of LLMs, enabling them to generate high-quality content exceeding 10,000 words.
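For readers unfamiliar with DPO, the preference stage optimizes the standard Direct Preference Optimization loss. For one pair consisting of a preferred response and a rejected response, it rewards the policy for raising the chosen response’s log-probability relative to a frozen reference model more than the rejected one’s. The sketch below computes that standard per-pair loss from precomputed sequence log-probabilities; the value `beta = 0.1` is an assumed default, and how the authors built their preference pairs or set hyperparameters is not specified here.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair, given summed sequence log-probabilities.

    loss = -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) equals softplus(-x); the two branches keep exp() from overflowing.
    if logits > 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


if __name__ == "__main__":
    # Policy identical to the reference: the loss is log(2).
    print(dpo_loss(-120.0, -130.0, -120.0, -130.0))
    # Policy has shifted probability toward the chosen response: the loss drops below log(2).
    print(dpo_loss(-110.0, -140.0, -120.0, -130.0))
```

In practice the log-probabilities come from summing token log-probs of each full response under the trained policy and the reference model, and the loss is averaged over a batch of pairs.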

The AgentWrite framework extended GPT-4o’s output length from about 2,000 to approximately 20,000 words, demonstrating its effectiveness on ultra-long generation tasks. On the LongBench-Write benchmark, the model trained with the LongWriter-6k dataset showed a 5% increase in overall quality score, particularly on tasks requiring 2,000- to 4,000-word outputs. The largest improvement appeared in the “Breadth and Depth” dimension, an 18% absolute gain over the ablated model.

Ablation studies revealed that while including a writing plan before content generation didn’t significantly enhance performance, training with the LongWriter-6k dataset was crucial for achieving longer outputs without compromising quality. The LongWriter-9B model outperformed the GLM-4-9B model on the LongBench-Write benchmark, highlighting the effectiveness of the proposed methodology in enhancing existing long-context LLMs. Overall, the experiments confirmed significant improvements in both output length and quality, demonstrating the potential of the LongWriter framework for ultra-long text generation tasks.

In conclusion, this paper addresses a significant limitation of current LLMs by proposing the AgentWrite framework to extend output capacity beyond the typical 2,000-word cap. Models trained on LongWriter-6k, the dataset built with this framework, generate high-quality outputs exceeding 10,000 words because long-output data is incorporated during alignment. Extensive experiments and ablation studies support the effectiveness of this approach. The authors suggest future directions including expanding the framework, refining data quality, and addressing inference-efficiency challenges. They emphasize that existing long-context LLMs have untapped potential for larger output windows, which can be unlocked by training strategically on long-output data. This research is a significant step forward in ultra-long text generation and provides a foundation for further work in the field.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Scaling LLM Outputs: The Role of AgentWrite and the LongWriter-6k Dataset appeared first on MarkTechPost.