Data analysis has become increasingly accessible due to the development of large language models (LLMs). These models have lowered the barrier for individuals with limited programming skills, enabling them to engage in complex data analysis through conversational interfaces. LLMs have opened new avenues for extracting meaningful insights from data by simplifying the process of generating code for various analytical tasks. However, the rapid adoption of LLM-powered tools also introduces challenges, particularly in ensuring the reliability and accuracy of the analysis, which is crucial for informed decision-making.

The primary challenge in using LLMs for data analysis lies in the potential for errors and misinterpretations in the generated code. These models, while powerful, can produce subtle bugs, such as incorrect data handling or logical inconsistencies, which may go unnoticed by users. There is often a disconnect between the user’s intent and the model’s execution, leading to results that do not align with the original objectives. The problem is compounded by how difficult it is for users to verify and correct these errors, particularly for those who lack extensive programming knowledge.
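To make the idea of a "subtle bug" concrete, here is a minimal, hypothetical sketch of the kind of incorrect data handling that can slip through. The `ratings` list and both calculations are invented for illustration and do not come from the WaitGPT paper. The code runs without error in both cases, so nothing flags the problem to a user who only looks at the final number.

```python
from statistics import mean

# Hypothetical survey ratings; None marks a missing response.
ratings = [4.0, None, 5.0, None, 3.0]

# Plausible-looking but wrong: `r or 0` silently turns missing values into 0,
# which drags the average down.
buggy_mean = mean(r or 0 for r in ratings)

# What the user most likely intended: ignore missing responses entirely.
correct_mean = mean(r for r in ratings if r is not None)

print(f"buggy: {buggy_mean}, intended: {correct_mean}")
```

The two results differ substantially (2.4 versus 4.0), yet neither line raises an exception, which is why this class of error is hard to catch by reading the output alone.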

Existing methods for data analysis using LLMs generally involve generating raw code, which is then presented to the user for execution. Tools like ChatGPT Plus, Gemini Advanced, and CodeActAgent follow this approach, allowing users to input their requirements in natural language and receive a code-based response. However, these tools often focus on delivering the code without providing sufficient support for understanding the underlying logic or the data operations. This leaves users, especially those with limited coding skills, to independently navigate the complexities of code verification and error correction, increasing the risk of undetected issues in the final analysis.

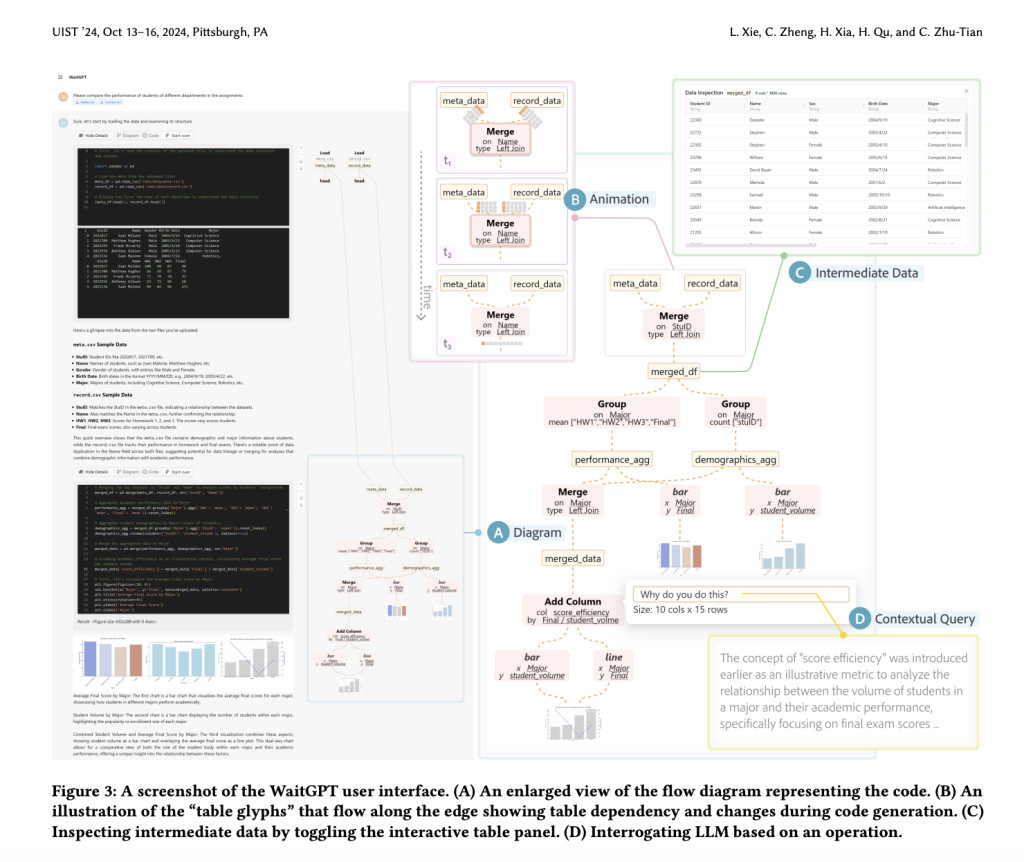

Researchers from the Hong Kong University of Science and Technology, the University of California San Diego, and the University of Minnesota introduced a tool called WaitGPT, which changes how LLM-generated code is presented and interacted with during data analysis. Instead of merely displaying raw code, WaitGPT converts the code into a visual representation that evolves in real time. This gives users a clearer understanding of each step in the data analysis process and allows for more proactive engagement, enabling them to verify and adjust the analysis as it progresses. The researchers emphasized that the tool aims to shift the user’s role from a passive observer to an active participant in the data analysis task.

WaitGPT operates by breaking down the data analysis code into individual data operations, visually represented as nodes within a dynamic flow diagram. Each node corresponds to a specific data operation, such as filtering, sorting, or merging data, and is linked to other nodes based on the execution order. The tool executes the code line by line, updating the visual diagram to reflect the current state of the data and the operations being performed. This method allows users to inspect and modify specific parts of the analysis in real time rather than waiting for the entire code to be executed before making adjustments. The tool also provides visual cues, such as changes in the number of rows or columns in a dataset, to help users identify potential issues quickly.
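The general idea of step-by-step execution with shape tracking can be sketched in a few lines. This is an illustrative toy, not WaitGPT's actual implementation: the `Node` class, the `run_pipeline` helper, and the `sales` data are all hypothetical, and a plain list of dictionaries stands in for a real data frame. Each operation becomes one node that records the row and column counts before and after it runs, which is the kind of cue the article describes.

```python
from dataclasses import dataclass

@dataclass
class Node:
    """One data operation, with the table shape before and after it ran."""
    label: str
    rows_in: int
    rows_out: int
    cols_in: int
    cols_out: int

def shape(table):
    """Return (row count, column count) for a list-of-dicts table."""
    return len(table), (len(table[0]) if table else 0)

def run_pipeline(table, steps):
    """Apply each (label, operation) pair in order, recording one Node per step."""
    nodes = []
    for label, op in steps:
        rows_in, cols_in = shape(table)
        table = op(table)
        rows_out, cols_out = shape(table)
        nodes.append(Node(label, rows_in, rows_out, cols_in, cols_out))
    return table, nodes

# Hypothetical input data.
sales = [
    {"region": "north", "units": 12, "price": 3.0},
    {"region": "south", "units": 0, "price": 4.5},
    {"region": "north", "units": 7, "price": 3.0},
    {"region": "east", "units": 5, "price": 2.0},
]

# Each step is a small, inspectable operation, in execution order.
steps = [
    ("filter units > 0", lambda t: [r for r in t if r["units"] > 0]),
    ("add revenue column", lambda t: [{**r, "revenue": r["units"] * r["price"]} for r in t]),
    ("sort by revenue (desc)", lambda t: sorted(t, key=lambda r: r["revenue"], reverse=True)),
]

result, nodes = run_pipeline(sales, steps)
for n in nodes:
    print(f"{n.label}: rows {n.rows_in} -> {n.rows_out}, cols {n.cols_in} -> {n.cols_out}")
```

In this sketch the filter step shows the row count dropping from 4 to 3 and the revenue step shows the column count rising from 3 to 4. An unexpected change at either point, such as a filter that removes nearly every row, would be visible as soon as that step ran rather than only in the final result.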

The effectiveness of WaitGPT was evaluated through a comprehensive user study involving 12 participants. The study revealed that the tool significantly improved users’ ability to detect errors in the analysis. For instance, 83% of participants successfully identified and corrected issues in the data analysis process using WaitGPT, compared to only 50% using traditional methods. The time required to spot errors was reduced by up to 50%, demonstrating the tool’s efficiency in enhancing user confidence and accuracy. The visual representation provided by WaitGPT also made it easier to comprehend the overall data analysis process, leading to a more streamlined and user-friendly experience.

In conclusion, by offering a real-time visual representation of the code and its operations, WaitGPT addresses the critical challenge of ensuring reliability and accuracy in LLM-driven data analysis. The tool enhances the user’s ability to monitor and steer the analysis process and to make informed adjustments along the way. The study’s results, including a notable improvement in error detection and reduced time spent on verification, underscore the tool’s potential to improve how data analysis is done with LLMs.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post WaitGPT: Enhancing Data Analysis Accuracy by 83% with Real-Time Visual Code Monitoring and Error Detection in LLM-Powered Tools appeared first on MarkTechPost.