Multimodal models are designed to make human-computer interaction more intuitive and natural, enabling machines to understand and respond to human inputs in ways that closely mirror human communication. This progress is crucial for advancing applications across various industries, including healthcare, education, and entertainment.

One of the main challenges in AI development is ensuring the safe and ethical use of these powerful models. As AI systems become more sophisticated, the risks associated with their misuse, such as spreading misinformation, reinforcing biases, and generating harmful content, increase. It is vital to address these issues so that AI advancements benefit society rather than worsen existing social problems. Balancing AI capabilities with the necessary safeguards is essential to prevent unintended consequences.

Existing methods to mitigate these risks include curated datasets, safety filters, and moderation tools designed to detect and block harmful content. However, these methods often fall short when dealing with the complexities of multimodal AI systems. For instance, models trained on text data may struggle to interpret audio or visual inputs and respond to them accurately. Furthermore, these approaches may only partially account for the diverse range of human interactions, such as different languages, accents, and cultural nuances, highlighting the need for more advanced solutions to ensure the safe deployment of AI technologies.

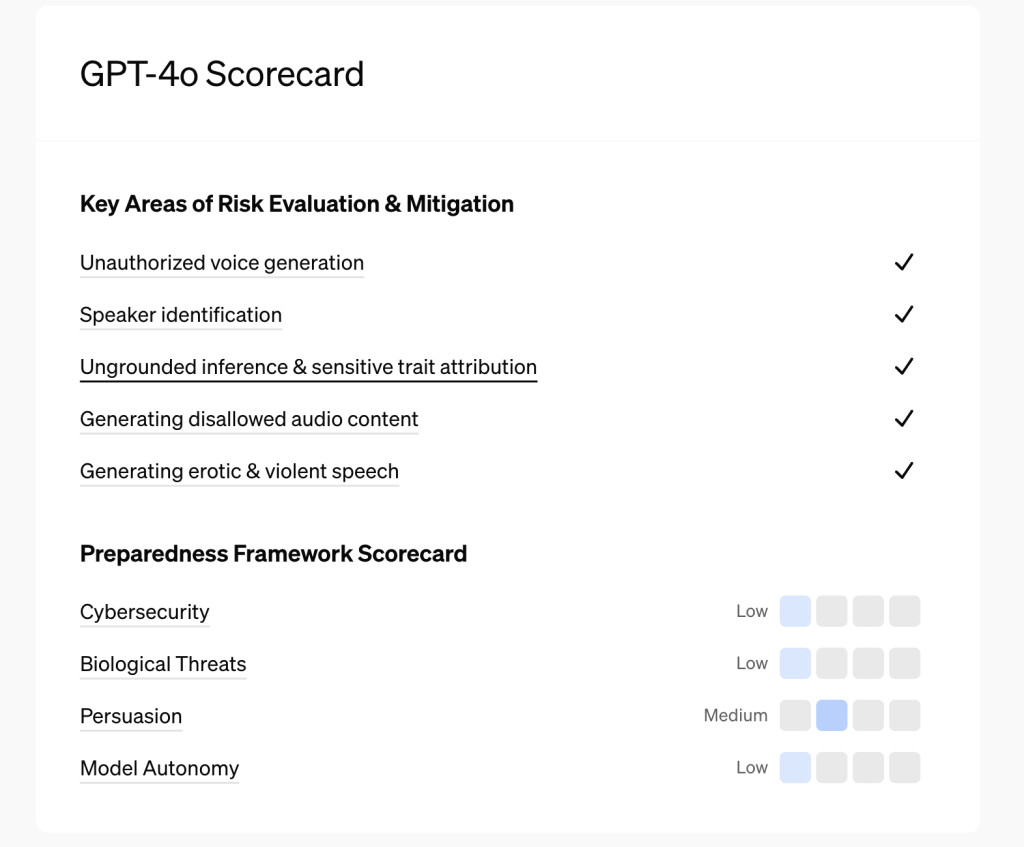

To address these challenges, OpenAI introduced the GPT-4o System Card, which offers a comprehensive overview of GPT-4o’s capabilities, limitations, and safety evaluations. The document describes how the model was assessed under OpenAI’s Preparedness Framework, including evaluations of its speech-to-speech capabilities, text and image processing, and potential societal impacts. The System Card marks a step forward in transparency and safety for AI models, providing detailed insights into the safeguards and evaluations that underpin GPT-4o’s deployment. It also serves as a guide to how GPT-4o operates and to the measures taken to align it with ethical standards and safety protocols.

The GPT-4o System Card details the model’s methodology: GPT-4o is an autoregressive model that generates outputs conditioned on a sequence of inputs, which can include text, audio, and images. The model was trained on a diverse dataset comprising public web data, proprietary data from partnerships, and multimodal data such as images and videos. This extensive training enables GPT-4o to interpret and generate data across various formats, making it particularly adept at handling complex, mixed inputs. Additionally, OpenAI implemented post-training safety filters and moderation tools to detect and block harmful content, helping keep the model’s outputs safe and aligned with human preferences. The System Card emphasizes the importance of these safety measures, particularly for managing sensitive content and preventing misuse.
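
To make "autoregressive" concrete, here is a minimal toy sketch of the general decoding loop: each new token is chosen from a distribution conditioned on the prompt plus everything generated so far. It is not GPT-4o’s implementation. The `generate` and `toy_model` functions, the placeholder tokens such as `<image:cat>`, and the use of greedy decoding are all hypothetical choices made for illustration; the toy model only looks at the last token, whereas a real model attends over the whole interleaved sequence.

```python
from typing import Callable, Dict, List

# Hypothetical interface: a "model" maps the sequence so far to a
# probability distribution over the next token.
NextTokenFn = Callable[[List[str]], Dict[str, float]]


def generate(model: NextTokenFn, prompt: List[str], max_new_tokens: int,
             eos: str = "<eos>") -> List[str]:
    """Greedy autoregressive decoding: each step conditions on the full
    prompt plus all tokens generated so far, then appends one token."""
    sequence = list(prompt)  # copy so the caller's prompt is not mutated
    for _ in range(max_new_tokens):
        probs = model(sequence)
        token = max(probs, key=probs.get)  # greedy: most probable token
        sequence.append(token)
        if token == eos:
            break
    return sequence[len(prompt):]  # return only the newly generated tokens


def toy_model(sequence: List[str]) -> Dict[str, float]:
    # Hypothetical lookup table keyed on the last token only; the
    # "<image:cat>" entry stands in for an image placed in the prompt.
    table = {
        "<image:cat>": {"A": 0.9, "<eos>": 0.1},
        "A": {"cat": 0.8, "dog": 0.2},
        "cat": {"<eos>": 1.0},
    }
    return table.get(sequence[-1], {"<eos>": 1.0})


prompt = ["<text:describe>", "<image:cat>"]
print(generate(toy_model, prompt, max_new_tokens=10))
```

Running it prints `['A', 'cat', '<eos>']`. Greedy selection keeps the sketch deterministic; real systems typically sample from the distribution instead.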

As highlighted in the System Card, GPT-4o stands out for its speed and accuracy in processing multimodal data. The model can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response times in conversation. GPT-4o also significantly improves non-English text processing compared with previous models, while matching GPT-4 Turbo on English text and code. The evaluations also revealed limits: in the cybersecurity evaluation, for example, the model completed 19% of high-school-level capture-the-flag (CTF) tasks, but its completion rates were lower on more advanced collegiate and professional-level tasks. These results highlight the model’s potential for practical applications while also indicating areas for further improvement.

The System Card also details evaluations of GPT-4o’s safety features, including its ability to refuse requests for unauthorized or harmful content. The model was trained to refuse requests for copyrighted material, including audio and music, and uses classifiers to detect and block inappropriate outputs. During testing, GPT-4o avoided generating harmful content in over 95% of evaluated cases. The model was also assessed on diverse user voices, including different accents, and showed no significant variation in performance. This consistency is crucial for deploying the model in varied real-world settings without introducing biases or disparities in service quality.
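
The exact classifier setup behind GPT-4o’s output blocking is not described here. As a rough illustration, and assuming a simple post-generation gate, the sketch below scores each candidate output with a classifier and substitutes a refusal when the score reaches a threshold. The names `moderate`, `block_rate`, and `keyword_classifier`, the 0.5 threshold, the refusal text, and the `[copyrighted-lyrics]` marker are all hypothetical; a production system would use a learned classifier rather than a keyword match.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical classifier interface: text in, harm score in [0, 1] out.
Classifier = Callable[[str], float]

# Hypothetical refusal message substituted for blocked outputs.
REFUSAL = "Sorry, I can't help with that."


@dataclass
class ModeratedOutput:
    text: str
    blocked: bool


def moderate(candidate: str, classifier: Classifier,
             threshold: float = 0.5) -> ModeratedOutput:
    """Release a candidate output only if its harm score is below the
    threshold; otherwise replace it with the refusal message."""
    if classifier(candidate) >= threshold:
        return ModeratedOutput(text=REFUSAL, blocked=True)
    return ModeratedOutput(text=candidate, blocked=False)


def block_rate(results: List[ModeratedOutput]) -> float:
    """Fraction of evaluated outputs that the gate blocked."""
    return sum(r.blocked for r in results) / len(results)


def keyword_classifier(text: str) -> float:
    # Toy stand-in for a learned classifier: flags one placeholder marker.
    return 1.0 if "[copyrighted-lyrics]" in text else 0.0


candidates = [
    "Here is a short summary of the article.",
    "Sure, here are the full [copyrighted-lyrics] you asked for.",
    "The meeting is at 3 pm.",
]
results = [moderate(c, keyword_classifier) for c in candidates]
print([r.blocked for r in results])
```

This prints `[False, True, False]`. Keeping the gate separate from generation means the classifier and threshold can be tuned or replaced without retraining the model.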

Overall, the introduction of the GPT-4o System Card represents a significant advancement in the transparency and safety of AI models. The research conducted by OpenAI underscores the importance of continuous evaluation and improvement to mitigate risks while maximizing AI’s benefits. The System Card provides a comprehensive framework for understanding and assessing GPT-4o’s capabilities, offering a more robust solution for the safe deployment of advanced AI systems. This development is a promising step toward achieving powerful and responsible AI, ensuring its benefits are widely accessible without compromising safety or ethical standards.

All credit for this research goes to the researchers of this project.

The post This AI Paper from OpenAI Introduces the GPT-4o System Card: A Framework for Safe and Responsible AI Development appeared first on MarkTechPost.