This post is the second in our three-part series, Leveraging Database Observability at MongoDB.

Welcome back to the Leveraging Database Observability at MongoDB series. In our last discussion, we explored MongoDB’s unique observability strategy using out-of-the-box tools designed to automatically monitor and optimize customer databases. These tools provide continuous feedback to answer critical questions: What is happening? Where is the issue? Why is it occurring? How do I fix it? The result is better performance, higher productivity, and less downtime.

So let’s dive into a real-life use case, illustrating how different tools in MongoDB Atlas come together to address database performance issues. Whether you’re a DBA, developer, or just a MongoDB enthusiast, our goal is to empower you to harness the full potential of your data using the MongoDB observability suite.

Why is it essential to diagnose a performance issue?

Identifying database bottlenecks and pinpointing the exact problem can be daunting and time-consuming for developers and DBAs.

When your application is slow, several questions may arise:

Have I hit my bandwidth limit?

Is my cluster under-provisioned and resource-constrained?

Does my data model need to be optimized, or is it causing inefficient data access?

Do my queries need to be more efficient, or are they missing necessary indexes?

MongoDB Atlas provides tools to zoom in, uncover insights, and detect anomalies that might otherwise go unnoticed in large volumes of data.

Let’s put it into practice

Let’s consider a hypothetical scenario to illustrate how to track down and address a performance bottleneck.

Setting the context

Imagine you run an e-commerce store selling a popular item. On average, you sell about 500 units monthly. Your application comprises several services, including user management, product search, inventory management, shopping cart, order management, and payment processing.

Recently, your store went viral online, driving significant traffic to your platform. This surge increased request latencies, and customers began reporting slow website performance.

Identifying the bottleneck

With multiple microservices, finding the service responsible for increased latencies can be challenging. Initial checks might show that inventory loads quickly, search results are prompt, and shopping cart updates are instantaneous. However, the issue might be more nuanced and time-sensitive, potentially leading to a full outage if left unaddressed.

The five-step diagnostic process

To resolve the issue, we’ll use a five-step diagnostic process:

Gather data and insights by collecting relevant metrics and data.

Generate hypotheses to formulate possible explanations for the problem.

Prioritize hypotheses by using data to identify the most likely cause.

Validate hypotheses by confirming or disproving the top hypothesis.

Implement and observe by making changes and watching the results.

Applying the five-step diagnostic process for resolution

Let’s see how this diagnostic process unfolds:

Step 1: Gather Data and Insights

Customers report that the website is slow, so we start by checking for possible culprits. Inefficient queries, resource constraints, or network issues are the primary suspects.

Step 2: Generate Hypotheses

Given the context, the application could be making inefficient queries, the database could be resource-constrained, or network congestion could be causing delays.

Step 3: Prioritize Hypotheses

We begin by examining the Metric Charts in Atlas. Since our initial check revealed no obvious issues, we will investigate further.

Step 4: Validate Hypotheses

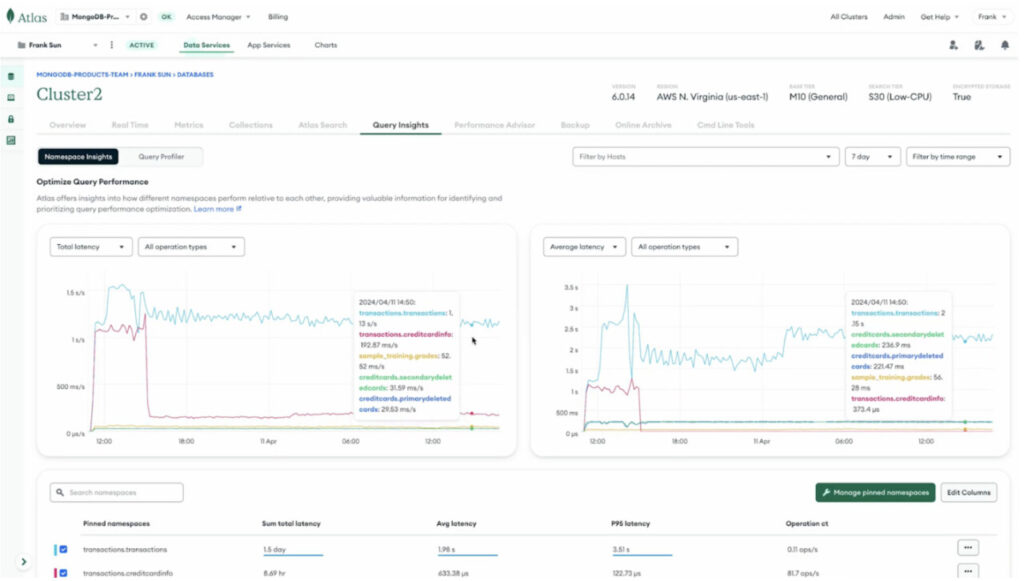

Using Atlas’ Namespace Insights, we break down the host-level measurements to get collection-level data. We notice that the transactions.transactions collection has much higher latency than the others. Increasing our lookback period to a week shows that the latency rose just over 24 hours ago, when customers began reporting slow performance. Since this collection stores details about transactions, we use the Atlas Query Profiler and find that the queries are inefficient because they scan through all of the transaction documents. This validates our hypothesis that the application slowness was due to query inefficiency.

Figure 1: New Query Insights Tab
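
To make the Query Profiler finding more concrete, here is a minimal Python sketch of how one might check an explain-style plan document for a collection scan. The field names (`queryPlanner.winningPlan`, `stage`, `inputStage`, `executionStats.totalDocsExamined`, `nReturned`) follow the classic shape of MongoDB's explain output, but the exact layout can differ between server versions. The helper names and the sample document are hypothetical illustrations, not output captured from this scenario.

```python
def plan_stages(plan):
    """Yield every stage name found in a (possibly nested) plan document."""
    if not isinstance(plan, dict):
        return
    if "stage" in plan:
        yield plan["stage"]
    # Child plans hang off "inputStage" (single) or "inputStages" (list).
    child = plan.get("inputStage")
    if child is not None:
        yield from plan_stages(child)
    for child in plan.get("inputStages", []):
        yield from plan_stages(child)


def summarize_explain(explain):
    """Report whether the plan scans the collection and how much it examines."""
    winning = explain.get("queryPlanner", {}).get("winningPlan", {})
    stats = explain.get("executionStats", {})
    examined = stats.get("totalDocsExamined", 0)
    returned = stats.get("nReturned", 0)
    return {
        "collection_scan": "COLLSCAN" in set(plan_stages(winning)),
        "docs_examined": examined,
        "docs_returned": returned,
        # Guard against division by zero when nothing is returned.
        "examined_per_returned": examined / max(returned, 1),
    }


# Hypothetical explain output for a query with no supporting index.
sample_explain = {
    "queryPlanner": {"winningPlan": {"stage": "COLLSCAN", "direction": "forward"}},
    "executionStats": {"nReturned": 12, "totalDocsExamined": 480000},
}

summary = summarize_explain(sample_explain)
print(summary)
```

A high ratio of documents examined to documents returned, combined with a `COLLSCAN` stage, is the kind of signal that points to a missing index rather than to a resource or network problem.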

Step 5: Implement and Observe

We need to create an index to resolve the collection scan issue. The Atlas Performance Advisor suggests an index on the customerID field. Adding this index enables the database to locate and retrieve transaction records for the specified customer more efficiently, reducing execution time. After creating the index, we return to our Namespace Insights page to observe the effect. We see that the latency on our transactions collection has decreased and stabilized. We can now follow up with our customers to update them on our fix and assure them that the problem has been resolved.
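
As a rough illustration of why the index helps, the sketch below models a collection as a plain Python list and an index on `customerID` as a dictionary that maps each value to the positions of matching documents. This is a toy model rather than MongoDB's actual index structure, and the sample data and function names are made up for the example. In a real deployment you would create the index through Atlas or a driver, for example with PyMongo's `create_index([("customerID", 1)])` on the collection.

```python
from collections import defaultdict


def collection_scan(docs, customer_id):
    """Find matches by checking every document, counting how many are examined."""
    examined = 0
    matches = []
    for doc in docs:
        examined += 1
        if doc["customerID"] == customer_id:
            matches.append(doc)
    return matches, examined


def build_index(docs, field):
    """Map each value of `field` to the list positions of documents holding it."""
    index = defaultdict(list)
    for position, doc in enumerate(docs):
        index[doc[field]].append(position)
    return index


def index_lookup(docs, index, customer_id):
    """Find matches through the index, touching only the matching documents."""
    positions = index.get(customer_id, [])
    return [docs[p] for p in positions], len(positions)


# Hypothetical data: 10,000 transactions spread evenly across 100 customers.
transactions = [
    {"_id": i, "customerID": i % 100, "amount": 10 + i % 7}
    for i in range(10_000)
]
customer_index = build_index(transactions, "customerID")

scan_matches, scan_examined = collection_scan(transactions, 42)
index_matches, index_examined = index_lookup(transactions, customer_index, 42)

print("collection scan examined:", scan_examined)
print("index lookup examined:", index_examined)
```

Both approaches return the same documents, but the scan touches every document while the lookup touches only the matches, which is the reduction in work that shows up as lower latency on the collection.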

Conclusion

By gathering the correct data, working iteratively, and using the MongoDB observability suite, you can quickly resolve database bottlenecks and restore your application’s performance.

In our next post in the “Leveraging Database Observability at MongoDB” series, we’ll show how to integrate MongoDB metrics seamlessly into central observability stacks and workflows. This ‘plug-and-play’ experience aligns with popular monitoring systems like Datadog, New Relic, and Prometheus, offering a unified view of application performance and deep database insights in a comprehensive dashboard.

Sign up for MongoDB Atlas, our cloud database service, to see database observability in action. For more information, see our Monitor Your Database Deployment docs page.
