Customers utilizing Amazon Relational Database Service (Amazon RDS) for SQL Server for their large mission-critical SQL Server databases are seeking ways to migrate to AWS while retaining the same database engine (homogeneous migration) with minimal downtime. Several methods exist to migrate self-managed SQL Server to Amazon RDS for SQL Server, including native backup and restore, as well as AWS Database Migration Service (AWS DMS). However, a crucial aspect of any mission-critical database migration is implementing a rollback strategy.

Customers have requested an appropriate solution to implement a rollback strategy for their SQL Server database migrations from self-managed environments to Amazon RDS for SQL Server, while meeting their recovery point objective (RPO) and recovery time objective (RTO) requirements. In this post, we discuss how to set up a rollback strategy using a fall forward approach from Amazon RDS for SQL Server transparent database encryption (TDE)- and non-TDE-enabled databases to self-managed SQL Server, utilizing SQL’s native backup and restore option.

Rollback strategy with a fall forward approach

A comprehensive rollback strategy is crucial for data migration, necessitating meticulous planning and contingency measures to mitigate risks and ensure a smooth transition. Despite careful planning, migrations can sometimes encounter unexpected issues, such as database performance degradation or application failure. A rollback strategy that allows you to swiftly revert to the previous state of your database is essential. The fall forward approach is a rollback strategy that involves replicating data from the migrated database to a third database environment without impacting the source database environment. By incorporating database rollback into your migration strategy, you ensure business continuity, mitigate risks, and navigate unexpected challenges with greater confidence. It serves as a safety net that empowers successful migrations and safeguards your valuable data.

Solution overview

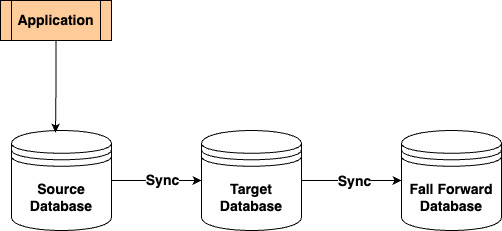

The following diagram illustrates the architecture of a fall forward approach for RDS for SQL Server using native backup and restore.

A – Self-managed SQL Server, which is the source of the migration. It could be running on an on-premises server or an Amazon Elastic Compute Cloud (Amazon EC2) instance.

B – Amazon RDS for SQL Server, which is the target of the migration.

A’ – Self-managed SQL Server, which is the fall forward target in case of migration rollback.

Migration from self-managed SQL Server to Amazon RDS for SQL Server

You can migrate a self-managed SQL Server environment to Amazon RDS for SQL Server using different methods, contingent upon the application’s recovery time objective (RTO) and recovery point objective (RPO) requirements. You have the option to use either AWS Database Migration Service (AWS DMS) or SQL Server’s native backup and restore methods to migrate both TDE- and non-TDE-enabled databases to Amazon RDS for SQL Server. The solution presented in this post assumes that you have already migrated TDE- and non-TDE-enabled databases using any of the aforementioned approaches.

Fall forward from Amazon RDS for SQL Server to self-managed SQL Server

You can implement a fall forward approach for both TDE- and non-TDE-enabled databases with the following high-level steps:

Create Amazon Simple Storage Service (Amazon S3)

Create an AWS Identity and Access Management (IAM) role to access the S3 buckets and change the bucket policy to take full and transaction log backups.

Create a symmetric AWS Key Management Service (AWS KMS)

Backup and restore a TDE certificate from Amazon RDS for SQL Server to self-managed SQL Server for a TDE-enabled database.

Backup and restore a full backup from Amazon RDS for SQL Server to a self-managed SQL Server for both TDE- and non-TDE-enabled databases.

Copy transaction logs from Amazon RDS for SQL Server and decrypt the logs using the provided Python script and apply them to the self-managed SQL Server for both TDE- and non-TDE-enabled databases to keep it in sync.

Steps for setting up the fall forward strategy for Amazon RDS for SQL Server

The solution uses an Amazon Elastic Compute Cloud (Amazon EC2) with the SQL Server database engine installed to emulate the self-managed environment as the target and Amazon RDS for SQL Server as the source for migration.

Prerequisites

The following prerequisites are needed before you begin:

An existing Amazon RDS for SQL Server instance (source) with TDE and a Backup and Restore options group enabled. Refer to Creating an Amazon RDS DB instance for how to provision an RDS SQLServer instance.

An existing Amazon EC2 instance with SQL Server installed (target) with the same version and edition as that of Amazon RDS for SQL Server that is used as a fall forward server.

Both a TDE-encrypted database (tde-demo) and a non-encrypted database (no-tde-demo) that have already been migrated from the Amazon EC2 SQL Server instance to Amazon RDS for SQL Server by following the instructions in Migrate TDE-enabled SQL Server databases to Amazon RDS for SQL Server. You will be setting up the fall forward strategy for both databases from Amazon RDS for SQL Server to the EC2 SQL Server instance.

The AWS Command Line Interface (AWS CLI) installed and configured in the EC2 instance.

Python 3.12 installed in the EC2 instance to decrypt the transactions logs. Install the modules requests, boto3, and pycryptodomex after installing Python.

Install SQL Server Management Studio (SSMS) in the EC2 instance and set up access to Amazon RDS for SQL Server instance.

Copy the following Python script into a file and name it decrypt_file.py and save it to any directory in the EC2 instance. For this example, copy it into c:temp.

For this post, we deploy all the AWS resources in the US East (N. Virginia) Region. Because this solution involves AWS resource setup and utilization, it will incur costs on your account. Refer to AWS Pricing for more information. We strongly recommend that you set this up in a non-production instance and run end-to-end validations before you implement this solution in a production environment.

Create an S3 bucket

As a security best practice, we suggest creating two S3 buckets: one for your database backups and transaction logs and another bucket for storing the TDE certificate and private key files. For this post, we create the buckets <certificate-bucket-name> and <db-backup-logs-bucket-name>. You must create these buckets in the same Region as your Amazon RDS DB instance. For instructions, refer to Creating a bucket.

The <db-backup-logs-bucket-name> bucket is used for copying the backup and transactions logs. For transaction logs, additional bucket configuration is needed. Refer to Access to transaction log backups with RDS for SQL Server and configure the following:

Change the permission to bucket owner preferred.

Add a bucket policy.

Create an IAM role and policy to access the S3 buckets

If you already have an existing IAM role, you can use that, but make sure you have the following trust relationship and permissions policy attached to it. If you want to create a new IAM role manually, refer to Creating a role to delegate permissions to an AWS service.

For this post, we create a role called rds-sqlserver-fall-forward-role and add the following trusted entity in the code block for the custom trust policy.

Next, we create a customer-managed policy using the following sample Amazon S3 permission policy listed within the IAM role itself.

Create a symmetric KMS key

Create a symmetric key in the same Region as your RDS DB instance. For instructions, refer to Creating symmetric encryption KMS keys.

Choose the following options when creating the key:

Key type – Symmetric

Key usage – Encrypt and decrypt

Alias – rds-fall-forward-key

Key administrators – Add the IAM role you created

Key usage permissions – Add the IAM role you created

Set up fall forward from Amazon RDS for SQL Server to EC2 SQL Server instance for TDE- and non-TDE-enabled databases

In this step, we set up the fall forward strategy of the TDE-enabled database (tde-demo) and the non-TDE-enabled database(no-tde-demo) from Amazon RDS for SQL Server to the EC2 SQL Server instance.

A TDE-enabled database requires the certificate to be backed up and restored to an EC2 SQL Server instance. Backup and restore of the TDE certificate isn’t required for a non-TDE-enabled database.

Backup and restore certificate for a TDE-enabled database

In this step, we take the backup of the TDE certificate in Amazon RDS for SQL Server, and restore it in the EC2 SQL Server instance. This step is only applicable to the TDE-enabled database.

Get the name of the TDE certificate for the tde-demo database in Amazon RDS for SQL Server. Use SSMS to connect to the Amazon RDS for SQL Server instance and run the following Transact-SQL (T-SQL) command.

Backup the Amazon RDS for SQL Server database certificate using the following T-SQL command.

The <RDS_Certificate_Name> is the certificate name from the above output. The <customer-managed-kms-key-arn> is the Amazon Resource Name (ARN) of the KMS key created previously (rds-fall-forward-key). Refer to Backing up a TDE certificate for additional information.

Currently, Amazon RDS for SQL Server single-Availability Zone DB instance is only supported for taking certificate backups. If you’re running Amazon RDS for SQL Server with a multi-Availability Zone DB instance, you might have to remove the multi-AZ option before backing up the certificate.

Copy the certificate files from the S3 bucket to the EC2 instance. Run the following command in the command prompt of EC2 instance.

Use the decrypt_file.py script to get the decryption password. The script does the following actions.

Gets the metadata from the S3 bucket of pvk file.

Decrypts the password using the KMS key.

The S3 metadata of the privatekey.pvk file generated in the backup step and the KMS key are used to retrieve the plain text of the data key.

The preceding command outputs the decryption password to create the TDE certificate. The sample output looks like the following:

Create the certificate in the EC2 SQL Server instance using the preceding information. Run the following T-SQL command in SSMS by connecting it to the EC2 SQL Server instance.

For detailed instructions on how to back up and restore the TDE certificate from Amazon RDS for SQL Server to EC2 SQL Server instance, refer to Backing up and restoring TDE certificates on RDS for SQL Server.

Backup and restore database and transaction logs

In this section, you complete a full database backup of the TDE- and non-TDE-enabled databases in Amazon RDS for SQL Server, along with the transaction logs, and restore and recover the database in the EC2 SQL Server instance. Because the steps are the same for both TDE- and non-TDE-enabled databases, we have included only the TDE-enabled database in this example.

Back up the Amazon RDS for SQL Server database. Run the following T-SQL command by connecting to the Amazon RDS for SQL Server.

Copy the backup from the S3 bucket to the local file system in the EC2 instance. Run the following in the command prompt of the EC2 instance.

Restore the database into the EC2 SQL Server instance by running the following T-SQL command by connecting to the EC2 SQL Server instance.

In this example, because you’re setting up the fall forward to a new target EC2 SQL Server instance, you’re using the same database name. If you’re restoring it to the original source server, you can rename the database while restoring it. After the full backup is restored, the database in the EC2 SQL Server instance goes into recovery mode.

Copy the transaction logs from the Amazon RDS for SQL Server to apply them to the above database to keep it in sync. Run the following T-SQL command in the Amazon RDS for SQL Server to list the transaction logs backup

All the backups of the transaction logs are encrypted. So, you must decrypt them before applying them to the EC2 SQL server database.

Copy the transaction logs generated in Amazon RDS for SQL Server to an S3 bucket. Set the S3 location where the transaction logs backup can be copied it to. Run the T-SQL command in the Amazon RDS for SQL Server

Run the following T-SQL command to validate the preceding S3 settings in the Amazon RDS for SQL Server.

Transaction log backups run every 5 minutes in Amazon RDS for SQL Server, and many logs are created after the full backup of the tde-demo You need to identify from which log_seq_id you need to take the copy of the transaction logs backup. Run the following T-SQL command in the EC2 SQL Server instance

Make a note of the redo_start_lsn number from the above step, which you use to identify the logs to be copied. Run the following T-SQL command in SSMS after connecting to the Amazon RDS for SQL Server instance.

Copy the transaction log backed up by the Amazon RDS for SQL Server to an S3 bucket and wait for the process to complete. Provide the starting_seq_id and ending_seq_id from the preceding output and the ARN of the customer managed KMS key (fall-forward-kms-key). Run the following T-SQL command in Amazon RDS for SQL Server.

The task copies individual transactions logs to the S3 bucket configured previously.

Call the Python program decrypt_file.py with the S3 URI of the transaction log base directory along with the starting_seq_id that the database needs to be recovered from. Transaction logs are encrypted using the customer managed key and must be decrypted. Each transaction log file, have a metadata property that must be gathered from the S3 metadata to decrypt it. Run the following command in the command prompt of EC2 SQL Server instance.

Here’s the sample output of the preceding command:

The script carries out the following steps.

Downloads the transactions logs from the specified bucket to local file system

Gets the S3 metadata information of individual transactions log

Using the metadata gets the decryption key from customer managed KMS key

Using the decryption key, decrypts the encrypted transaction log and creates a new file with the name <file_name>.out in the same directory

Apply the previously generated decrypted transaction log files one at a time into the EC2 SQL server database tde-demo to recover it. Run the T-SQL command in the EC2 SQL Server instance.

If you need to switch to the EC2 SQL Server database, copy the last transaction log and apply it with the RECOVERY option to fully recover the tde-demo. Run the T-SQL command in the EC2 SQL Server instance.

You have successfully completed setting up the fall forward strategy for Amazon RDS for SQL Server to EC2 SQL Server environment.

Cleanup

To avoid future charges, remove all the components created while testing this solution, complete the following steps:

Connect to the EC2 SQL Server instance through SSMS and delete the TDE- and non-TDE-enabled databases.

On the IAM console, select the roles and search for rds-sqlserver-fall-forward-role role and delete it.

On the AWS KMS console, select the customer managed key rds-fall-forward-key and delete it.

On the Amazon S3 console, empty the bucket that contains <certificate-bucket-name> and <db-backup-logs-bucket-name>, and then delete the bucket.

Delete the Python script and the directory where the backup and transaction logs are downloaded on the EC2 instance.

Delete the EC2 instance and the RDS for SQL Server instance should you no longer need them.

Summary

In this post, you have learned about how to set up a fall forward rollback strategy from Amazon RDS for SQL Server to a self-managed SQL server for both TDE- and non-TDE-enabled databases.

Understanding the possible rollback solutions from Amazon RDS for SQL Server is key to safeguarding your data and insuring you meet your RTO and RPO needs, as well as insuring you can recover from critical events.

Try out this solution in your RDS for SQL Server instance and if you have any comments or questions, leave them in the comments section. For more information about native backup and restore, refer to Microsoft SQL Server Native Backup and Restore Support in the Amazon RDS User Guide.

About the Authors

Raj Jayakrishnan is a Senior Database Specialist Solutions Architect with Amazon Web Services helping customers reinvent their business through the use of purpose-built database cloud solutions. Over 20 years of experience in architecting commercial & open-source database solutions in financial and logistics industry.

Vijayakumar Kesavan is a Senior Database Specialist Solutions Architect at Amazon Web Services. He works with our customers to provide database architectural guidance, best practices and technical assistance for database projects on AWS. With expertise in multiple database technologies he also support customers in database migrations and cost optimization in AWS Cloud.

Alvaro Costa-Neto is a Senior Database Specialist Solutions Architect for AWS, where he helps customers design and implement database solutions on the cloud. He has a passion for database technologies and has been working with them for more than 19 years, mostly with Microsoft SQL Server. He resides in Clermont, FL with his wife and two children, who share his love for aviation and traveling. When he is not working, he likes to host cookouts with his family and friends and explore new places.

Nirmal John is a Database Specialist Solutions Architect with Amazon Web services.He pursues building customer relationships that outlast all of us.

Source: Read More