This is a guest post by Lei Gu from Gen, co-authored by Veerendra Nayak, Saikat Banerjee, and Kien Pham from AWS.

In this post, we show you how the Gen team replayed an Oracle database workload for a mission-critical application on Amazon Aurora PostgreSQL-Compatible Edition.

By replaying the workload, the team made sure Aurora PostgreSQL-Compatible can handle the same volume and complexity of requests as the Oracle database, and identify any potential performance bottlenecks or issues before migrating to Amazon Aurora. The primary objective of this migration was to eliminate licensing costs and reduce operational overhead by transitioning from a self-managed Oracle database to Aurora PostgreSQL-Compatible with minimal downtime.

Workload characteristics

The workload migrated from Oracle was comprised of a Java application running on a self-managed Oracle database with close to 10 TB of data. The request rate was close to 1,000 queries per second (QPS) at peak, with 90% read and 10% write requests.

Why we considered this approach of capture and replay

When migrating from Oracle to Aurora, Gen faced the following challenges that had to be addressed to perform a successful migration:

Validate all tablÂes and indexes had been recreated accurately in Aurora PostgreSQL-Compatible

Confirm all data had been migrated to AWS and was kept in sync with the source database

Test the migrated application under real production conditions to confirm it could handle the same volume and complexity of requests as the Oracle database

Avoid introducing substantial code changes to the migrated application, which would introduce additional complexity and potential bugs into our existing monolithic, legacy application

In following section, we discuss how to replay production requests against the migrated application.

Solution overview

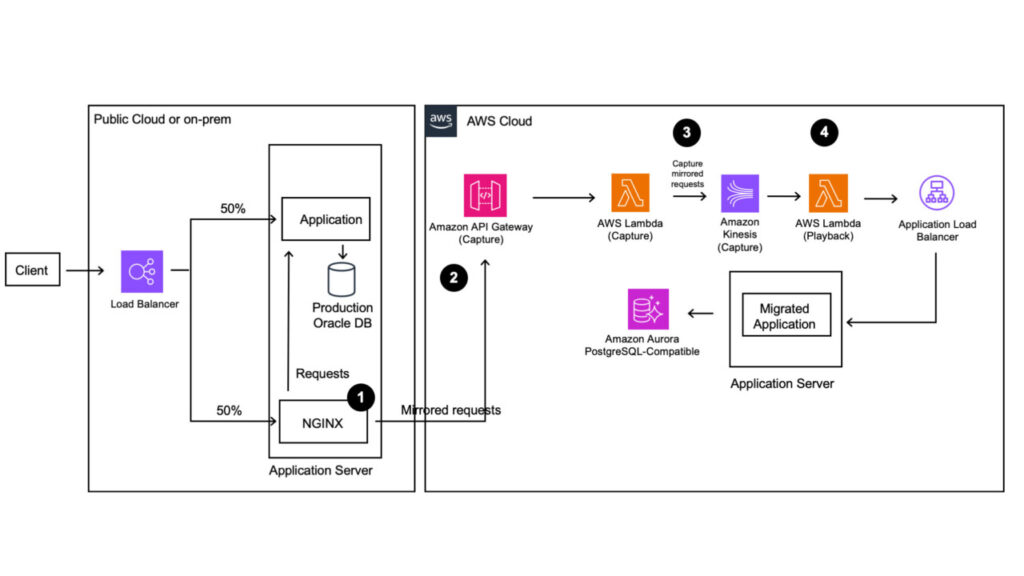

The following diagram illustrates the solution architecture.

The solution consists of four main steps to capture and replay the database:

To mirror requests to AWS, we used the NGINX module.

We used Amazon API Gateway and AWS Lambda to capture requests sent from NGINX.

We used Amazon Kinesis to stream mirrored requests.

We used Lambda to play back requests to the target application.

Let’s deep dive into each step.

Mirror requests to AWS

We installed NGINX on the same server that hosts the production application. The load balancer forwards client requests, including RESTful APIs, to NGINX, which forwards the requests to the production application and mirrors them to the capture API at the same time. Request mirroring is accomplished using the NGINX mirror module, which mirrors requests to a second URL and discards the response from the mirrored URL.

To achieve high availability and effortless rollback, we use a load balancer in front of the application, another load balancer in front of NGINX, and a third load balancer to distribute requests between the two downstream load balancers. This allows us to control the number of requests mirrored through NGINX and roll back changes in case of issues.

Next, we start with 5% mirroring requests and monitor mirrored requests end to end to make sure there is no significant performance impact on overall response time and no unexpected errors from playing back requests. Gradually, we increase the percentage of mirrored requests until we reach 100%.

Capture mirrored requests

Mirrored requests must be captured so that they can be played back against the migrated application. This can be achieved in various ways, but the key requirement is low-latency, high-throughput capture. The capture time is included in the overall production response time, and we must avoid impacting our production system due to slow capture. To address this requirement, we use API Gateway and Lambda as our capture solution. The capture Lambda function simply captures and forwards requests.

Stream mirrored requests

To save mirrored requests, we require a system with high write throughput and the ability to retrieve data from a previous point in time with native integration with Lambda. Kinesis meets these criteria and therefore is used as the destination to store all captured requests.

Request playback

Because we’re capturing read and write requests from the production application, we can choose to play back read-only or read/write requests. Also, we can decide the rate of the mirroring requests. It can be either less than the production requests or at the rate of the production requests.

As part of this migration strategy, we decided to use AWS Database Migration Service (AWS DMS) to do the initial full load from Oracle to Aurora PostgreSQL-Compatible. For ongoing replication, we had a choice: use AWS DMS to continuously replicate, or rely on replaying write requests that were captured.

Also, we had to have a plan for rollback. After cutover to Aurora PostgreSQL-Compatible, if there are issues that can’t be remediated in a certain time, we roll back to reduce business impact. For this, we had another Oracle database. Initial load was done using AWS DMS from Aurora PostgreSQL-Compatible to Oracle. For ongoing replication, we had the same two choices: either use AWS DMS or replay write requests on the Oracle instance marked for rollback.

In the next sections, we walk through both choices. If we decide to play back only read requests, then we need AWS DMS to keep ongoing replication from Oracle to Aurora PostgreSQL-Compatible. For rollback, we have another AWS DMS task to replicate from Aurora PostgreSQL-Compatible to Oracle.

Play back read-only requests

If we choose to play back read-only requests, we must keep all downstream databases in sync by replicating data from Oracle to Aurora PostgreSQL-Compatible and then back to a standby Oracle database (for rollback purposes). The following diagram illustrates this architecture.

Play back read/write requests

If we choose to play back all requests, we must break replication between the Oracle database and all downstream databases so inserts and updates can succeed without running into data consistency issues. The following diagram illustrates this architecture.

We decided to play back read-only requests for production migration, and during planning, we played back read/write requests.

Tune playback

If the playback method isn’t planned properly, we risk falling behind in the production system over time, because we won’t be able to play back requests quickly enough to keep up with the capture rate. To address this, we must make sure the playback rate matches the capture rate. We can achieve this with the following measures:

Create a dedicated Kinesis fan-out consumer and attach a playback Lambda function as a fan-out consumer. Enhanced fan-out is a Kinesis feature that enables consumers to receive records from a data stream with dedicated throughput of up to 2 MB of data per second per shard.

Maximize the number of Lambda consumers per shard (up to 10).

Monitor the Kinesis iterator age to make sure the consumer can keep up with playback.

Increase the number of shards to increase concurrent playback.

We calculate the maximum number of playback Lambda functions as follows:

max playback Lambda functions = (number of Kinesis shards) x (max number of consuming functions per shard)

For example, if we have one shard for the capture Kinesis system and 10 consumers per shard, we can have up to 10 concurrent playback Lambda functions. However, this may not produce enough playback throughput, because the number of shards is limited. To overcome this, we can artificially increase the number of Kinesis shards, not for capture write throughput but for playback load generation throughput.

By controlling the number of max consumers per shard and the number of shards, we can regulate the playback rate. Additionally, we can play back requests at a higher rate by starting playback at an early time, because the Kinesis stream will already be fully populated. The playback rate is limited by the number of consumers per shard and the total number of shards.

Summary

The approach of mirroring and replaying database load allowed Gen to mitigate any potential performance issues by simulating 100% of production requests on Aurora PostgreSQL-Compatible before cutover.

This framework of capturing and replaying production load can be used for several other use cases, such as:

Right-sizing – By capturing and replaying production load, you can validate that your database instance sizes are appropriate for your workload, and make adjustments as needed to achieve optimal performance and cost-efficiency.

Understanding impact of major version upgrades – When upgrading to a new major version of a database, it’s important to understand how the upgrade will impact performance and scalability. By capturing and replaying the production load, you can compare the performance of the old and new versions, and identify any areas where performance may have degraded or improved.

Identifying performance bottlenecks – By replaying the production load, you can identify areas where performance may be bottlenecked, such as slow queries, locked rows, or network issues. This information can be used to optimize database configuration, indexing, and query optimization to improve overall performance.

Leave a comment if you have any questions regarding this solution. You can learn more about our database services by visiting AWS Database Services.

About the Authors

Lei Gu is a Senior Technical Director/Architect with Gen Digital.

Saikat Banerjee is a Database Specialist Solutions Architect with Amazon Web Services.

Veerendra Nayak is a Principal Database Solutions Architect based in the Bay Area, California. He works with customers to share best practices on database migrations, resiliency, and integrating operational data with analytics and AI services.

Kien Pham is Principal Solutions Architect based in the Irvine, California, supporting DNB customers.

Source: Read More