Few-shot Generative Domain Adaptation (GDA) is a machine learning concept that addresses the challenge of adapting a generative model trained on a source domain so that it performs well on a target domain, using only a few examples from that target domain. The technique is particularly useful when obtaining a large amount of training data from the target domain is expensive or impractical.

Existing solutions for GDA mainly focus on improving a “generator,” the model that creates new data samples, so that it produces samples resembling the target domain even when only a few examples are available. Techniques such as consistency losses and GAN inversion help the generator produce high-quality and diverse data, preserving the correspondences between source and target samples while still capturing the differences between the domains. However, these methods struggle when the source and target domains differ significantly: in such cases, getting the generator to adapt while generating data that stays consistent across both domains remains a considerable challenge.

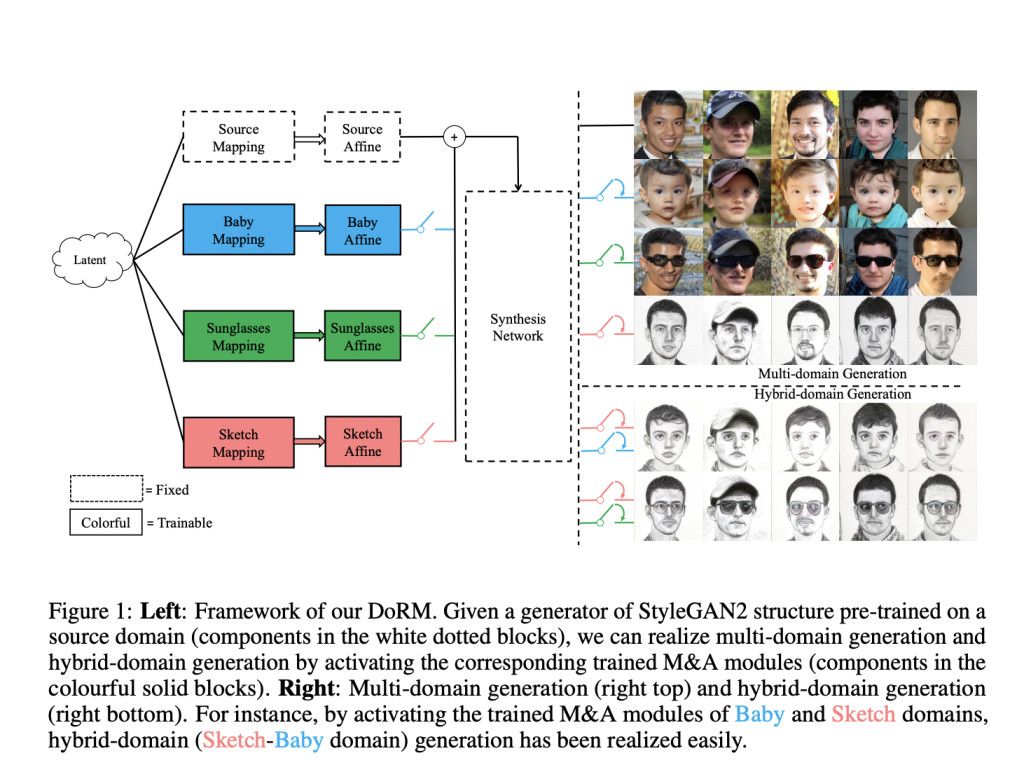

To address these challenges, a recent paper presented at NeurIPS introduces Domain Re-Modulation (DoRM) for GDA. Unlike prior methods, DoRM improves image synthesis quality, diversity, and cross-domain consistency together, while adding memory and domain association capabilities inspired by human learning. By modifying the style space through new mapping and affine modules, DoRM can generate high-fidelity images across multiple domains, including hybrid domains not seen during training. The authors also introduce a novel similarity-based structure loss for better cross-domain alignment and report superior performance over existing approaches in their experiments.

Concretely, DoRM enhances the generator’s capabilities for GDA by introducing several key innovations:

1. Source Generator Preparation: The method starts from a pre-trained StyleGAN2 generator, which serves as the foundation for all subsequent adaptations.

2. Introducing M&A Modules: To adapt to a new target domain, the source generator is frozen and new Mapping and Affine (M&A) modules are added. These modules capture the attributes specific to the target domain. By selectively activating them, the generator can adjust its output to match the nuances of different domains.

3. Style Space Adjustment: DoRM transforms the source domain’s latent code into a new style space tailored to the visual style of the target domain. This adjustment lets the generator synthesize outputs that accurately reflect the characteristics of the target domain.

4. Linear Domain Shift: Using multiple M&A modules, DoRM produces a linearly combinable domain shift in the generator’s style space. Each module adjusts the style for a specific domain, which lets the generator synthesize images across diverse domains and blend attributes from multiple training domains.

5. Cross-Domain Consistency Enhancement: DoRM introduces a novel similarity-based structure loss (Lss) to ensure consistency across domains. This loss leverages CLIP image encoder tokens to align auto-correlation maps between source and target images, preserving structural coherence and fidelity to the target domain’s characteristics in the generated outputs.

6. Training Framework: During training, DoRM optimizes a combined objective consisting of StyleGAN2’s original adversarial loss plus Lss for both the generator and the discriminator. According to the authors, this setup keeps training stable and adapts robustly to complex domain shifts.
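The M&A idea and the linear domain shift from items 2 to 4 can be illustrated with a toy NumPy sketch. It assumes an additive re-modulation, where each domain’s M&A module produces a style offset that is scaled by a weight and added to the frozen source style. The article does not give the exact formula, so this additive form, the toy dimensions, the one-layer leaky-ReLU mapping, and all names (`StyleBranch`, `remodulated_style`, the “sketch” and “baby” branches) are hypothetical. Real StyleGAN2 mapping networks are much deeper, and the M&A weights would be learned rather than random.

```python
import numpy as np

rng = np.random.default_rng(0)
Z_DIM, W_DIM, S_DIM = 16, 16, 32  # toy sizes, far smaller than StyleGAN2's


class StyleBranch:
    """Hypothetical mapping network + affine layer that turns z into a style vector."""

    def __init__(self) -> None:
        # Random weights stand in for learned (or frozen, pre-trained) parameters.
        self.w_map = rng.standard_normal((Z_DIM, W_DIM)) / np.sqrt(Z_DIM)
        self.w_aff = rng.standard_normal((W_DIM, S_DIM)) / np.sqrt(W_DIM)
        self.b_aff = np.zeros(S_DIM)

    def __call__(self, z: np.ndarray) -> np.ndarray:
        h = z @ self.w_map
        w = np.where(h > 0, h, 0.2 * h)  # leaky-ReLU mapping (one layer only)
        return w @ self.w_aff + self.b_aff  # affine projection into style space


def remodulated_style(source, ma_modules, alphas, z):
    """Assumed additive form: s(z) = s_src(z) + sum_i alpha_i * delta_i(z)."""
    style = source(z)  # frozen source branch, never updated
    for module, alpha in zip(ma_modules, alphas):
        style = style + alpha * module(z)  # domain-specific offset from one M&A module
    return style


source = StyleBranch()                       # stands in for the frozen source branch
sketch, baby = StyleBranch(), StyleBranch()  # one hypothetical M&A module per domain
z = rng.standard_normal(Z_DIM)

s_source = remodulated_style(source, [sketch, baby], [0.0, 0.0], z)
s_sketch = remodulated_style(source, [sketch, baby], [1.0, 0.0], z)
s_baby = remodulated_style(source, [sketch, baby], [0.0, 1.0], z)
s_hybrid = remodulated_style(source, [sketch, baby], [0.5, 0.5], z)

# Under the additive assumption, the hybrid style is exactly the midpoint of the two domains.
print(np.allclose(s_hybrid, 0.5 * (s_sketch + s_baby)))  # True
```

Keeping the source branch fixed and expressing each domain as a separate offset is what makes hybrids cheap in this sketch: mixing domains only means choosing new weights, with no retraining.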
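The similarity-based structure loss from item 5 and the combined objective from item 6 can be sketched as follows. This is a minimal NumPy illustration, not the authors’ code: it assumes the CLIP image-encoder tokens have already been extracted as an `(N, d)` array per image, builds each auto-correlation map from cosine similarities between tokens, and compares the source and target maps with a mean absolute difference. The distance measure, the weight `lam`, and the function names are assumptions.

```python
import numpy as np


def autocorrelation(tokens: np.ndarray) -> np.ndarray:
    """N x N cosine-similarity map between every pair of tokens of one image."""
    norms = np.linalg.norm(tokens, axis=-1, keepdims=True)
    unit = tokens / np.maximum(norms, 1e-8)  # avoid division by zero
    return unit @ unit.T


def similarity_structure_loss(src_tokens: np.ndarray, tgt_tokens: np.ndarray) -> float:
    """Assumed form of Lss: mean absolute gap between the two auto-correlation maps."""
    return float(np.mean(np.abs(autocorrelation(src_tokens) - autocorrelation(tgt_tokens))))


def generator_objective(adv_loss: float, src_tokens, tgt_tokens, lam: float = 1.0) -> float:
    """Hypothetical combined objective: adversarial loss + lam * Lss."""
    return adv_loss + lam * similarity_structure_loss(src_tokens, tgt_tokens)


rng = np.random.default_rng(0)
src = rng.standard_normal((50, 64))  # stand-in for the tokens of a source image
rotation, _ = np.linalg.qr(rng.standard_normal((64, 64)))  # random orthogonal matrix

# Rotating every token changes the raw features but keeps all pairwise similarities,
# so the structure loss stays (numerically) zero.
print(round(similarity_structure_loss(src, src @ rotation), 6))  # 0.0
# Unrelated tokens have a different internal structure, so the loss is clearly positive.
print(similarity_structure_loss(src, rng.standard_normal((50, 64))) > 0.01)  # True
```

Because the loss compares similarities within each image rather than the features themselves, the target image is free to change its appearance as long as its internal layout matches the source, which is the kind of cross-domain consistency item 5 describes.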

The research team evaluated DoRM starting from a StyleGAN2 model pre-trained on the Flickr-Faces-HQ (FFHQ) dataset, which provides a stable starting point for training in the 10-shot GDA setting. DoRM achieved better synthesis quality and cross-domain consistency than competing methods, particularly on target domains such as Sketches and FFHQ-Babies. Quantitative metrics, namely Fréchet Inception Distance (FID) and identity similarity, consistently favored DoRM. The method also performed well in multi-domain and hybrid-domain generation, combining several domains and synthesizing novel hybrid outputs efficiently. Ablation studies confirmed the effectiveness of DoRM’s generator structure across various experimental setups.
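FID, one of the metrics mentioned above, fits a Gaussian to the features of real images and another to the features of generated images and measures the distance between them, with lower values meaning closer distributions. The NumPy sketch below computes the standard formula from two feature matrices. In practice the features come from an Inception-v3 network; here random arrays stand in for them, and the helper names are illustrative.

```python
import numpy as np


def sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # clear tiny negative eigenvalues from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """FID = ||mu_a - mu_b||^2 + Tr(cov_a + cov_b - 2 (cov_a cov_b)^(1/2))."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals Tr((A cov_b A)^(1/2)) with A = cov_a^(1/2);
    # the second matrix is symmetric PSD, so eigh-based square roots suffice.
    root_a = sqrtm_psd(cov_a)
    cross = sqrtm_psd(root_a @ cov_b @ root_a)
    mean_term = np.sum((mu_a - mu_b) ** 2)
    fid = mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * np.trace(cross)
    return max(0.0, float(fid))  # clamp tiny negative round-off


rng = np.random.default_rng(0)
real = rng.standard_normal((500, 8))  # stand-in for Inception features of real images
shift = np.full(8, 0.5)
fake = real + shift                   # same covariance, means differ by `shift`

print(round(frechet_distance(real, real), 6))  # 0.0 (identical distributions)
print(round(frechet_distance(real, fake), 6))  # 2.0 (= 8 * 0.5**2, only the mean term)
```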

To conclude, the research team introduces DoRM, a streamlined generator structure for GDA, together with a novel similarity-based structure loss that ensures robust cross-domain consistency. In their evaluations, the method shows better synthesis quality, diversity, and cross-domain consistency than existing approaches. Like the human brain, DoRM integrates knowledge across domains, which enables it to generate images in novel hybrid domains not encountered during training.


The post DoRM: A Brain-Inspired Approach to Generative Domain Adaptation appeared first on MarkTechPost.
