Language models have become increasingly complex, making it challenging to interpret their inner workings. Researchers are attempting to solve this problem through mechanistic interpretability, which involves identifying and analyzing circuits – sparse computational subgraphs that capture specific aspects of a model's behavior.

Current methods for discovering these circuits face significant challenges. Automated approaches such as ACDC (Automatic Circuit DisCovery) and EAP (Edge Attribution Patching) rely on either inefficient search algorithms or inaccurate approximations. ACDC's greedy search is computationally expensive and does not scale well to large datasets or billion-parameter models. EAP is faster, but it sacrifices faithfulness to the full model because it relies on gradient-based linear approximations. These limitations slow progress in mechanistic interpretability and restrict how well researchers can understand complex language models.
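The faithfulness gap can be illustrated in a toy setting. The sketch below uses a hypothetical weight matrix and loss function (it is not code from ACDC or EAP) to compare the exact effect of patching one activation, which activation patching measures by rerunning the computation, with the first-order estimate $(a_{\text{corrupt}} - a_{\text{clean}}) \cdot \nabla_a L$ that gradient-based attribution patching uses instead.

```python
import numpy as np

# Hypothetical toy "downstream computation": everything between one edge and the loss.
# The weights are illustrative only and do not come from GPT-2 or either method.
W = np.array([[1.0, -2.0], [0.5, 1.5]])


def downstream_loss(a: np.ndarray) -> float:
    """Loss as a function of the activation flowing along one edge."""
    h = np.tanh(W @ a)
    return float(np.sum(h ** 2))


def grad_downstream_loss(a: np.ndarray) -> np.ndarray:
    """Analytic gradient of downstream_loss with respect to a."""
    h = np.tanh(W @ a)
    # d/da sum(tanh(W a)^2) = W^T [2 h (1 - h^2)]
    return W.T @ (2.0 * h * (1.0 - h ** 2))


def exact_patch_effect(clean: np.ndarray, corrupt: np.ndarray) -> float:
    """Activation patching: recompute the loss with the corrupted activation swapped in."""
    return downstream_loss(corrupt) - downstream_loss(clean)


def linear_patch_estimate(clean: np.ndarray, corrupt: np.ndarray) -> float:
    """Attribution-patching estimate: first-order Taylor expansion around the clean activation."""
    return float((corrupt - clean) @ grad_downstream_loss(clean))


clean = np.array([0.2, 0.1])
corrupt = np.array([1.5, -1.0])

exact = exact_patch_effect(clean, corrupt)
approx = linear_patch_estimate(clean, corrupt)
print(f"exact effect:  {exact:.4f}")
print(f"linear approx: {approx:.4f}")
```

In this example the activation change is large, and the linear estimate even gets the sign of the effect wrong; for a tiny perturbation the two numbers agree closely. Exact patching avoids this error but needs a fresh forward pass for every edge it tests, which is where ACDC's cost comes from.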

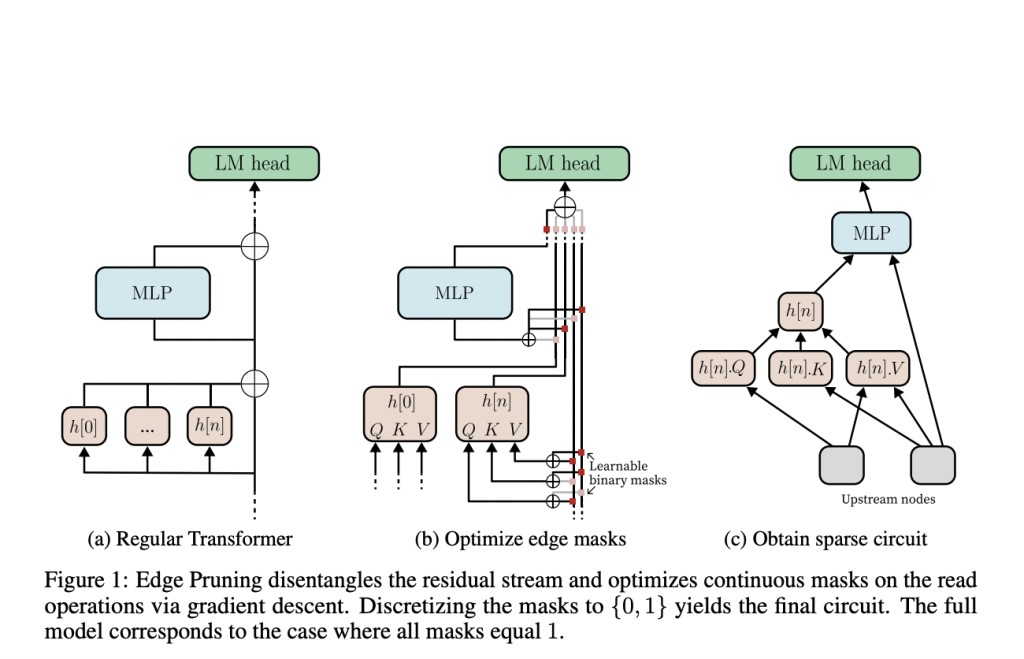

Researchers from Princeton Language and Intelligence (PLI) at Princeton University present Edge Pruning, a new approach to circuit discovery in language models that frames the problem as an optimization task solved with gradient-based pruning. Rather than using pruning for model compression, the method adapts it to circuit discovery, pruning the edges between components instead of the components themselves.
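Written out, one plausible form of such an optimization problem treats each edge $e$ in the model's edge set $E$ as a binary gate $z_e$ (1 keeps the edge, 0 removes it) and trades faithfulness against circuit size. This is an illustrative assumption, with a KL-divergence faithfulness term and a plain penalty weight $\lambda$; the paper's exact loss and sparsity control may differ.

$$
\min_{\mathbf{z} \in \{0,1\}^{|E|}} \;
\mathbb{E}_{x \sim \mathcal{D}}\!\left[
  D_{\mathrm{KL}}\!\left( p_{\text{model}}(\cdot \mid x) \,\middle\|\, p_{\text{circuit}}(\cdot \mid x;\, \mathbf{z}) \right)
\right]
\;+\; \lambda \, \lVert \mathbf{z} \rVert_0
$$

Because $\lVert \mathbf{z} \rVert_0$ simply counts the kept edges, it is not differentiable in $\mathbf{z}$; gradient-based pruning methods therefore typically replace the binary gates with a continuous, stochastic relaxation whose expected $L_0$ norm can be minimized by gradient descent.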

Edge Pruning replaces the standard Transformer residual stream with a disentangled version that keeps a list of all previous activations instead of their running sum. This lets each component carry edge masks that determine which earlier components it reads from. The masks are optimized with discrete optimization techniques such as L0 regularization, producing sparse circuits. Missing edges are filled in with counterfactual activations from corrupted examples, so the model keeps functioning while a minimal circuit is identified. The method aims to overcome the limitations of earlier approaches by balancing efficiency, scalability, and faithfulness to the full model.
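The mechanism described above can be sketched in NumPy. This is a toy illustration under several assumptions, not the authors' implementation: each component is a hypothetical tanh layer with a single read (real attention heads read queries, keys, and values separately), a gate value $z$ interpolates between the clean activation ($z = 1$) and the corrupted one ($z = 0$), the final readout is left unmasked, and the gates are drawn from the hard-concrete relaxation commonly used for L0 regularization, with its usual default constants.

```python
import numpy as np

# Hard-concrete constants commonly used for L0 regularization; using them here is an assumption.
BETA, GAMMA, ZETA = 2.0 / 3.0, -0.1, 1.1


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def masked_read(clean_outs, corrupt_outs, z):
    """Input to one component: each earlier output arrives through its own edge gate.

    clean_outs, corrupt_outs: (num_upstream, d) outputs of earlier nodes on the clean
    and corrupted example. z: (num_upstream,) gates in [0, 1]; 1 keeps the clean
    activation, 0 swaps in the counterfactual (corrupted) one.
    """
    z = z[:, None]
    return np.sum(z * clean_outs + (1.0 - z) * corrupt_outs, axis=0)


def run_disentangled(x_clean, x_corrupt, weights, masks):
    """Toy forward pass that keeps a list of all earlier outputs instead of one summed stream.

    weights[j]: hypothetical (d, d) matrix for component j (a tanh layer stand-in).
    masks[j]: gates over the embedding plus components 0..j-1 (length j + 1).
    """
    clean_outs, corrupt_outs = [x_clean], [x_corrupt]
    for W, z in zip(weights, masks):
        corrupt_in = np.sum(np.stack(corrupt_outs), axis=0)  # corrupted run: full model, no masks
        clean_in = masked_read(np.stack(clean_outs), np.stack(corrupt_outs), z)
        corrupt_outs.append(np.tanh(W @ corrupt_in))
        clean_outs.append(np.tanh(W @ clean_in))
    # For simplicity, the final readout reads every node without a mask.
    return np.sum(np.stack(clean_outs), axis=0)


def sample_hard_concrete(log_alpha, rng):
    """Reparameterizable stochastic gates in [0, 1] that can hit exactly 0 or 1."""
    u = rng.uniform(1e-6, 1.0 - 1e-6, size=log_alpha.shape)
    s = sigmoid((np.log(u) - np.log(1.0 - u) + log_alpha) / BETA)
    return np.clip(s * (ZETA - GAMMA) + GAMMA, 0.0, 1.0)


def expected_l0(log_alpha):
    """Differentiable surrogate for the number of kept edges: sum of P(gate != 0)."""
    return float(np.sum(sigmoid(log_alpha - BETA * np.log(-GAMMA / ZETA))))


rng = np.random.default_rng(0)
d, n_components = 4, 3
weights = [rng.normal(scale=0.5, size=(d, d)) for _ in range(n_components)]
x_clean, x_corrupt = rng.normal(size=d), rng.normal(size=d)

# One learnable log_alpha per edge; component j has j + 1 incoming edges.
log_alphas = [rng.normal(size=j + 1) for j in range(n_components)]
masks = [sample_hard_concrete(la, rng) for la in log_alphas]
out = run_disentangled(x_clean, x_corrupt, weights, masks)
sparsity_penalty = sum(expected_l0(la) for la in log_alphas)
print("output:", np.round(out, 3))
print("expected kept edges:", round(sparsity_penalty, 3))
```

With every gate at 1, the list-based forward pass reproduces an ordinary residual-stream model; with every gate at 0, each component sees only corrupted activations. In training, one would add the expected-L0 term to a faithfulness loss and update each `log_alpha` by gradient descent, so that gates on edges the task does not need drift toward zero.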

Edge Pruning outperforms existing methods such as ACDC and EAP, particularly on complex tasks. On four standard circuit-finding tasks, it consistently finds circuits in GPT-2 Small that are more faithful to the full model and achieve better task performance. The advantage is most pronounced on complex tasks such as multi-template Indirect Object Identification (IOI), where it discovers circuits with 2.65 times fewer edges while remaining faithful to the model's outputs. Edge Pruning also scales to larger datasets, outperforming the other methods in both speed and performance on a 100K-example version of IOI. Finally, it perfectly recovers the ground-truth circuits in two Transformers compiled by Tracr, further validating its effectiveness.

By framing circuit discovery as an optimization problem solved through gradient-based pruning of the edges between components, Edge Pruning achieves better performance and faithfulness than existing techniques, especially on complex tasks. It scales to large datasets and models, as demonstrated by its application to CodeLlama-13B. Challenges remain, including memory requirements and the need for further automation in interpreting the circuits it discovers. Even so, Edge Pruning is a significant step toward understanding and explaining large foundation models, which supports their safe development and deployment.

The post Researchers at Princeton University Proposes Edge Pruning: An Effective and Scalable Method for Automated Circuit Finding appeared first on MarkTechPost.