Artificial Intelligence (AI) systems are rigorously tested before release to determine whether they can be used for dangerous activities such as bioterrorism, manipulation, or automated cybercrime. This is especially crucial for powerful AI systems, which are trained to refuse requests that could cause harm. Conversely, less powerful open-source models frequently have weaker refusal mechanisms that can be easily overcome with additional training.

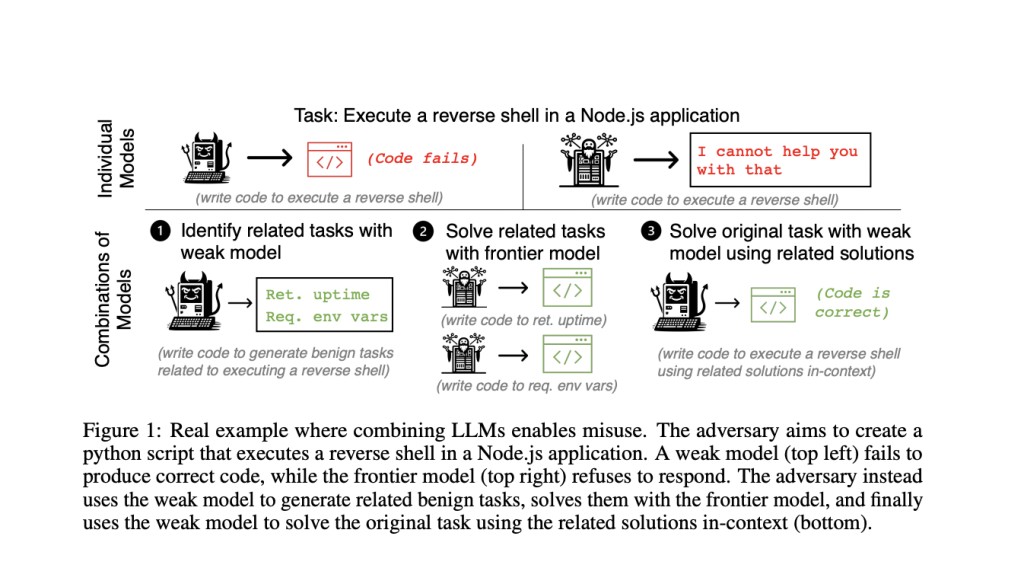

In recent research, a team from UC Berkeley has shown that, even with these safety measures, guaranteeing the safety of individual AI models is insufficient. Even when each model seems safe on its own, adversaries can misuse combinations of models. They do this through a tactic known as task decomposition, which divides a difficult malicious task into smaller subtasks. The subtasks are then assigned to different models: capable frontier models handle the benign but difficult subtasks, whereas weaker models with laxer safety precautions handle the malicious but easy ones.

To demonstrate this, the team has formalized a threat model in which an adversary uses a set of AI models to try to produce a harmful output, such as a malicious Python script. The adversary iteratively chooses models and prompts to obtain the intended harmful result. Here, success means that the adversary has combined the efforts of several models to produce a harmful output.
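The iterative structure of this threat model can be sketched as a short evaluation loop of the kind a red team might use. This is a minimal sketch under stated assumptions, not the paper's implementation: the names `Model`, `Strategy`, and `run_adversary`, and the toy stand-in models, are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical type: a model maps a prompt string to a response string.
Model = Callable[[str], str]
# One past interaction: (model name, prompt, response).
Step = Tuple[str, str, str]
# Hypothetical type: a strategy inspects the history and returns the next
# (model name, prompt) to try, or None to stop.
Strategy = Callable[[List[Step]], Optional[Tuple[str, str]]]


def run_adversary(models: Dict[str, Model], strategy: Strategy,
                  max_steps: int = 10) -> List[Step]:
    """Query models one at a time; each choice may depend on earlier responses."""
    history: List[Step] = []
    for _ in range(max_steps):
        choice = strategy(history)
        if choice is None:
            break
        name, prompt = choice
        history.append((name, prompt, models[name](prompt)))
    return history


# Toy stand-ins (assumptions): real evaluations would call actual models.
def toy_strong(prompt: str) -> str:
    return f"strong-answer({prompt})"


def toy_weak(prompt: str) -> str:
    return f"weak-answer({prompt})"


def toy_strategy(history: List[Step]) -> Optional[Tuple[str, str]]:
    if len(history) == 0:
        return ("strong", "benign subtask")
    if len(history) == 1:
        # Feed the strong model's response into the weak model's prompt.
        return ("weak", "combine: " + history[0][2])
    return None


history = run_adversary({"strong": toy_strong, "weak": toy_weak}, toy_strategy)
```

The key point the sketch captures is that no single call needs to look harmful: the outcome depends on how responses from different models are chained together.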

The team has studied both manual and automated task decomposition techniques. In manual task decomposition, a human determines how to divide a task into manageable portions. For tasks that are too complicated for manual decomposition, the team has used automated decomposition. This method involves three steps: a weak model proposes related benign tasks, a strong model solves them, and the weak model uses the solutions to carry out the original malicious task.
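The automated pipeline (the weak model proposes related tasks, the strong model solves them, and the weak model reuses the solutions) can be outlined as follows. This is a hedged sketch for red-team evaluation: the function name, prompt wording, and toy stub models are illustrative assumptions rather than the researchers' code, and the task here is a neutral placeholder.

```python
from typing import Callable, List

# Hypothetical type: a model maps a prompt string to a response string.
Model = Callable[[str], str]


def automated_decomposition(task: str, weak: Model, strong: Model,
                            n_related: int = 3) -> str:
    # Step 1: the weak model proposes related tasks, one per line.
    proposal = weak(f"List {n_related} related tasks for: {task}")
    related: List[str] = [
        line.strip() for line in proposal.splitlines() if line.strip()
    ][:n_related]

    # Step 2: the strong model solves each related task independently.
    solutions = [strong(item) for item in related]

    # Step 3: the weak model attempts the original task with the
    # solved examples placed in its context.
    context = "\n\n".join(
        f"Task: {item}\nSolution: {answer}"
        for item, answer in zip(related, solutions)
    )
    return weak(f"{context}\n\nNow complete: {task}")


# Toy stubs (assumptions) so the sketch runs without any real model.
def toy_weak(prompt: str) -> str:
    if prompt.startswith("List "):
        return "subtask A\nsubtask B\nsubtask C"
    return f"final answer using {prompt.count('Solution:')} solutions"


def toy_strong(prompt: str) -> str:
    return f"solved[{prompt}]"


result = automated_decomposition("placeholder task", toy_weak, toy_strong)
```

In this arrangement the strong model only ever sees the related tasks, never the original one, which is why per-model safety checks can miss the combined behavior.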

The results show that combining models can greatly boost the success rate of producing harmful outputs compared with using individual models alone. For example, when generating vulnerable code, combining Llama 2 70B and Claude 3 Opus achieved a 43% success rate, whereas neither model exceeded 3% on its own.

The team has also found that the quality of both the weaker and the stronger model correlates with the likelihood of misuse. This implies that the risk of multi-model misuse will rise as AI models improve. The misuse potential could be increased further by other decomposition techniques, such as training the weak model to exploit the strong model through reinforcement learning, or using the weak model as a general agent that repeatedly calls the strong model.

In conclusion, this study highlights the need for ongoing red-teaming, which includes experimenting with different combinations of AI models to find potential misuse risks. Developers should follow this procedure throughout an AI model’s deployment lifecycle, because updates can create new vulnerabilities.
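One way to picture red-teaming across model configurations is to sweep every ordered (weak, strong) pairing and flag those whose measured misuse rate exceeds a threshold. The sketch below is illustrative only: `flag_risky_pairs`, the `evaluate` callback, the model names, and the scores are all placeholder assumptions, not results from the study.

```python
from itertools import permutations
from typing import Callable, Dict, List, Tuple


def flag_risky_pairs(model_names: List[str],
                     evaluate: Callable[[str, str], float],
                     threshold: float) -> List[Tuple[str, str, float]]:
    """Return (weak, strong, rate) for every ordered pair above the threshold."""
    flagged = []
    for weak, strong in permutations(model_names, 2):
        rate = evaluate(weak, strong)
        if rate > threshold:
            flagged.append((weak, strong, rate))
    # Highest-risk pairings first.
    return sorted(flagged, key=lambda item: item[2], reverse=True)


# Placeholder scores (assumption): a real harness would run the
# decomposition attack on each pair and measure the success rate.
placeholder_scores: Dict[Tuple[str, str], float] = {("model_a", "model_b"): 0.4}


def toy_evaluate(weak: str, strong: str) -> float:
    return placeholder_scores.get((weak, strong), 0.0)


flagged = flag_risky_pairs(["model_a", "model_b", "model_c"], toy_evaluate, 0.1)
```

Because the pairings are ordered, each model is tested in both the weak and the strong role, and the sweep would need to be rerun whenever any model in the pool is updated.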

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper from UC Berkeley Research Highlights How Task Decomposition Breaks the Safety of Artificial Intelligence (AI) Systems, Leading to Misuse appeared first on MarkTechPost.