When customers migrate from commercial databases SQL Server to Babelfish for Aurora PostgreSQL, currently the T-SQL global temporary tables (##table_name) are not supported. Babelfish for Aurora PostgreSQL currently only supports local temporary tables, which are dropped after the session ends and do not allow cross-session data access. Review your code to see if you can use local temporary tables instead of global temporary tables. If not, refer to this post where we cover how to implement T-SQL global temporary table behavior in Babelfish for Aurora PostgreSQL using permanent table.

This workaround involves changing existing T-SQL code that utilizes global temporary tables. However, it would not provide all the capabilities of global temporary tables. In particular, the automatic drop behavior of global temporary tables is not replicated. This would need to be handled manually, for example by adding explicit drop statements.

Overview of T-SQL global temporary tables

Before we dive into the solution, let’s first discuss what a T-SQL global temporary table is. In SQL Server, temporary tables are used to store and process intermediate data. There are two types of temp tables: local and global. A local temporary table (#local_table) is only visible to the connection that created it; a global temp table is visible across all connections and can even be used in other sessions. T-SQL Global temporary tables are automatically dropped when the session that created the table ends and the last active T-SQL statement (not session) referencing the table in other sessions ends.

A T-SQL global temporary table has a table name prefixed with a double hash sign (##table_name). There are two ways to create a global temporary table:

Use CREATE statement:

Use SELECT INTO, where you create a temporary table from an existing permanent table:

The following are few examples of use cases for global temporary tables:

If you have multiple sessions performing processing on the same dataset, you can use global temporary tables for maintaining status as well as sharing the data between sessions

Global temporary tables can be used for debugging long-running database sessions without permanently storing data. For example, one session could process business logic while a separate debugging session manipulates data temporarily for analysis, without affecting permanent tables.

Prerequisites

To follow along with this post, make sure you have the following prerequisites in place:

A SQL Server database with the Northwind sample database

An Aurora PostgreSQL cluster that supports Babelfish, Babelfish is an option available with Aurora PostgreSQL version 13.4 and higher releases. We recommend to go with latest supported version of Aurora PostgreSQL.

SQL Server implementation of T-SQL global temporary tables

This section shows an example of how a global temporary table works in T-SQL. The process requires two active sessions (session 1 and session 2) with different user IDs.

Session 1

Use session 1 to perform the following steps:

Create a global temporary table:

Insert data into this global temporary table. For this post, we use the table Customers from the publicly available Northwind schema:

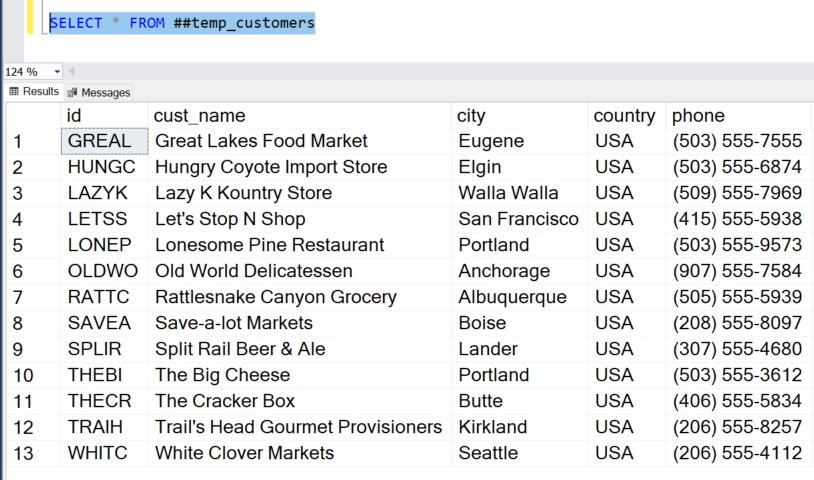

Verify that the data is in the global temporary table:

It should fetch 13 rows.

Update a row:

Session 2

Connect to session 2 while the session 1 is still active and complete the following steps:

Query the global temporary table to see the results:

Disconnect from session 1 and try to query from session 2 again.

Upon verifying the table, you will discover that it no longer exists as expected, with an error message confirming that the object name is invalid.

Babelfish for Aurora PostgreSQL cluster workaround

We’ve observed some customers use global temporary tables for debugging purposes within their workloads. For example, one long-running user session might be processing business logic while simultaneously, from a separate session, a user is carrying out live debugging or data manipulation activities without storing the data permanently in the database. In this section, we demonstrate how you can implement a similar solution in Babelfish for Aurora PostgreSQL.

The solution consists of the following steps:

Before you create the table, make sure you don’t already have a table with the same name. If it exists, delete the table:

The following is a reference dynamic SQL code to generate a table with a session ID appended in the name. Change the logic according to your business requirement.

Start the application workload (session 1). During this step, a table with the suffix session ID is created in the database and populated according to the business logic.

Use the following query to get the list of all the sessions in the database (session 2). Then identify the relevant session based on the login_name, host_name and login_time.

After you identify the session ID, use the following query to find the table. Replace the session ID with the one you obtained from the previous query:

Query the table and perform the necessary debugging and capturing of statistics for the problematic workloads.

To perform cleanup, drop the table that you created for debugging by using one of the following methods. Note that if another session is currently accessing the table, dropping may be delayed until exclusive access can be obtained.

Add the drop statement in the application logic as shown. Replace the session ID with the one you used to create the table:

Alternatively, periodically use the following code to clean up the tables from the database. This procedure looks into Babelfish catalog tables and active sessions and deletes the tables that belong to inactive sessions:

Conclusion

Global temporary tables are useful when multiple sessions share the same temporary data, for example in collaborative tasks. To mimic SQL Server global temporary tables in Babelfish, you can create a permanent table with a session ID and later use a stored procedure for cleanup as shown in this post.

The AWS Babelfish team is making continuous improvements to the product and adding new features on a regular basis. Check out the Babelfish for Aurora PostgreSQL updates with each quarterly release for the latest improvements.

If you have any questions or suggestions, leave your feedback in the comments section.

About the Authors

Vanshika Nigam is a Solutions Architect on the Database Migration Accelerator team at Amazon Web Services. With over 6 years of experience working with Amazon RDS, she serves as an Amazon DMA Advisor helping AWS customers accelerate the migrations of their on-premises, commercial databases to AWS Cloud database solutions.

Amit Arora is a Solutions Architect with a focus on database and analytics at AWS. He works with our financial technology and global energy customers and AWS certified partners to provide technical assistance and design customer solutions on cloud migration projects, helping customers migrate and modernize their existing databases to the AWS Cloud.

Source: Read More