Neural networks, despite their theoretical capability to fit training sets with as many samples as they have parameters, often fall short in practice due to limitations in training procedures. This gap between theoretical potential and practical performance poses significant challenges for applications requiring precise data fitting, such as medical diagnosis, autonomous driving, and large-scale language models. Understanding and overcoming these limitations is crucial for advancing AI research and improving the efficiency and effectiveness of neural networks in real-world tasks.

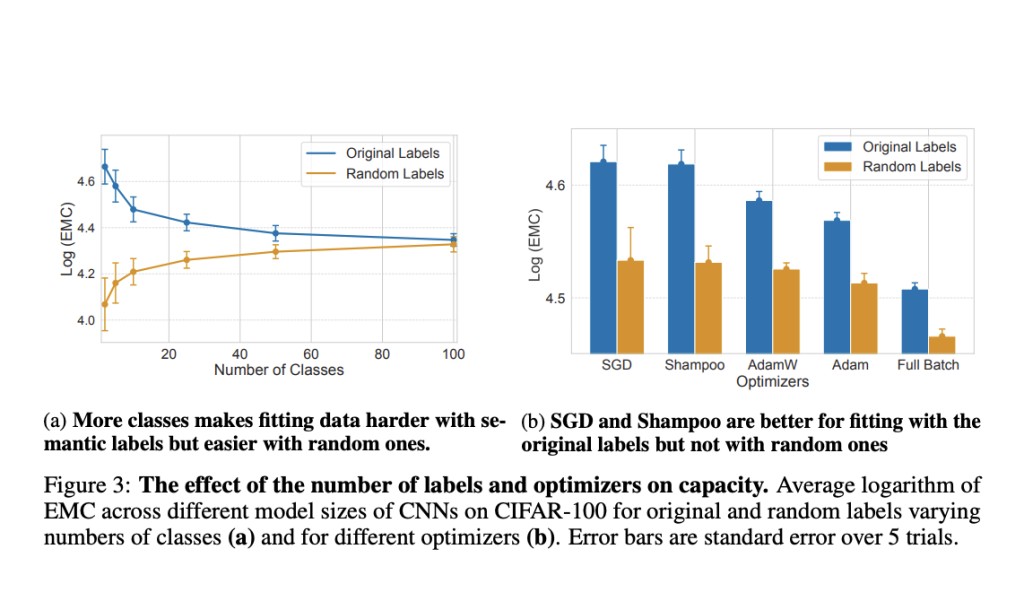

Current methods to address neural network flexibility involve overparameterization, convolutional architectures, various optimizers, and activation functions like ReLU. However, these methods have notable limitations. Overparameterized models, although theoretically capable of universal function approximation, often fail to reach optimal minima in practice due to limitations in training algorithms. Convolutional networks, while more parameter-efficient than MLPs and ViTs, do not fully leverage their potential on randomly labeled data. Optimizers like SGD and Adam are traditionally thought to regularise, but they may actually restrict the network’s capacity to fit data. Additionally, activation functions designed to prevent vanishing and exploding gradients inadvertently limit data-fitting capabilities.

A team of researchers from New York University, the University of Maryland, and Capital One proposes a comprehensive empirical examination of neural networks’ data-fitting capacity using the Effective Model Complexity (EMC) metric. This novel approach measures the largest sample size a model can perfectly fit, considering realistic training loops and various data types. By systematically evaluating the effects of architectures, optimizers, and activation functions, the proposed methods offer a new understanding of neural network flexibility. The innovation lies in the empirical approach to measuring capacity and identifying factors that truly influence data fitting, thus providing insights beyond theoretical approximation bounds.

The EMC metric is calculated through an iterative approach, starting with a small training set and incrementally increasing it until the model fails to achieve 100% training accuracy. This method is applied across multiple datasets, including MNIST, CIFAR-10, CIFAR-100, and ImageNet, as well as tabular datasets like Forest Cover Type and Adult Income. Key technical aspects include the use of various neural network architectures (MLPs, CNNs, ViTs) and optimizers (SGD, Adam, AdamW, Shampoo). The study ensures that each training run reaches a minimum of the loss function by checking gradient norms, training loss stability, and the absence of negative eigenvalues in the loss Hessian.

The study reveals significant insights: standard optimizers limit data-fitting capacity, while CNNs are more parameter-efficient even on random data. ReLU activation functions enable better data fitting compared to sigmoidal activations. Convolutional networks (CNNs) demonstrated a superior capacity to fit training data over multi-layer perceptrons (MLPs) and Vision Transformers (ViTs), particularly on datasets with semantically coherent labels. Furthermore, CNNs trained with stochastic gradient descent (SGD) fit more training samples than those trained with full-batch gradient descent, and this ability was predictive of better generalization. The effectiveness of CNNs was especially evident in their ability to fit more correctly labeled samples compared to incorrectly labeled ones, which is indicative of their generalization capability.

In conclusion, the proposed methods provide a comprehensive empirical evaluation of neural network flexibility, challenging conventional wisdom on their data-fitting capacity. The study introduces the EMC metric to measure practical capacity, revealing that CNNs are more parameter-efficient than previously thought and that optimizers and activation functions significantly influence data fitting. These insights have substantial implications for improving neural network training and architecture design, advancing the field by addressing a critical challenge in AI research.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.Â

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 45k+ ML SubReddit

The post Rethinking Neural Network Efficiency: Beyond Parameter Counting to Practical Data Fitting appeared first on MarkTechPost.

Source: Read MoreÂ