The release of the latest version of the Salesforce Embedding Model (SFR-embedding-v2) marks a significant milestone in NLP. This new model has reclaimed the top-1 position on the HuggingFace MTEB benchmark, demonstrating Salesforce’s continued commitment to advancing AI technologies.

Key Highlights of the SFR-embedding-v2 model release:

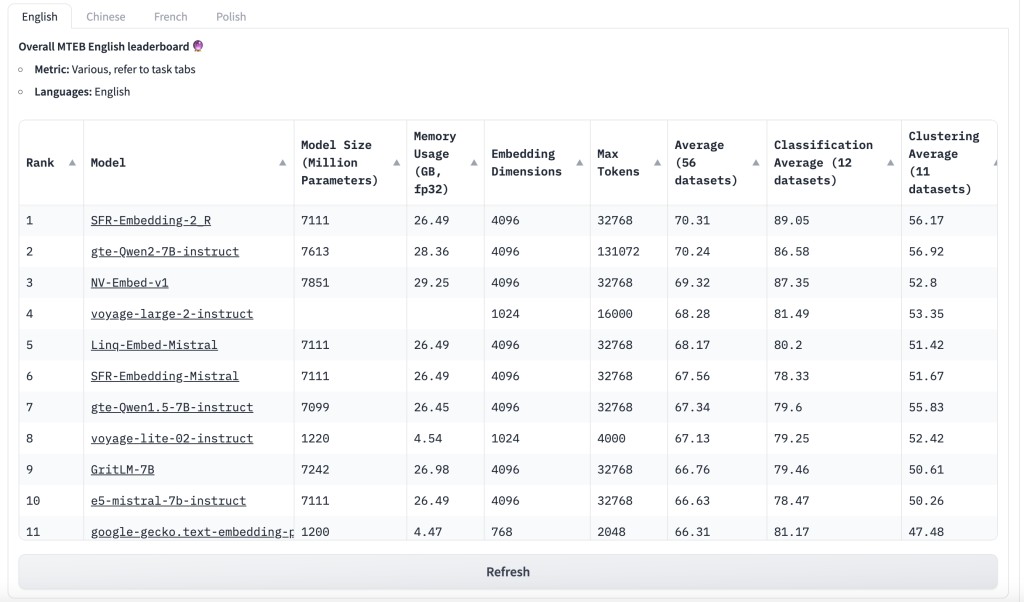

Top Performance on MTEB Benchmark: The SFR-embedding-v2 model is the second model to score above 70 on the MTEB benchmark. This accomplishment is a testament to its advanced capabilities and the rigorous development process undertaken by the Salesforce research team.

Enhanced Multitasking Capabilities: The model features a new multi-stage training recipe designed to enhance its multitasking capabilities, so that a single model can handle a range of tasks rather than specializing in one, making it more versatile. The multi-stage training process involves multiple phases in which the model is fine-tuned for specific tasks, improving overall performance.

Improvements in Classification and Clustering: Significant improvements have been made in classification and clustering tasks. These enhancements enable the model to understand and categorize data better, making it more effective for various applications. Whether sorting through large datasets or identifying patterns within data, SFR-embedding-v2 delivers accurate and reliable results.

Strong Performance in Retrieval and Other Areas: In addition to classification and clustering, the model maintains strong performance in retrieval tasks. This means it can efficiently find and return relevant information from large datasets, a crucial feature for many AI-driven applications. The model’s robust retrieval capabilities ensure that users can quickly access the necessary information, even from extensive and complex datasets.
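To make the retrieval idea concrete, here is a minimal sketch of how embedding-based retrieval is commonly done: documents and a query are each represented as vectors, and documents are ranked by cosine similarity to the query. The three-dimensional vectors below are hand-written placeholders, not output from SFR-embedding-v2, and the helper names `cosine_similarity` and `rank_documents` are hypothetical, chosen only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return document indices ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), idx) for idx, vec in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored]


# Placeholder vectors (assumption): a real embedding model would produce
# much higher-dimensional vectors from text.
doc_vecs = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.2],
    [0.1, 0.0, 1.0],
]
query_vec = [0.8, 0.2, 0.1]

ranking = rank_documents(query_vec, doc_vecs)
print(ranking)
```

In a real system, the placeholder vectors would be replaced by embeddings computed from text, and a vector index would typically stand in for the linear scan over `doc_vecs`.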

Technical Specifications: The SFR-embedding-v2 model has 7.11 billion parameters and uses the BF16 tensor type. These technical specifications contribute to its high performance and ability to handle complex tasks. The model’s architecture and underlying technology reflect Salesforce’s innovative approach to developing state-of-the-art AI models.
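As a rough illustration of what these figures imply, the weights alone can be sized with simple arithmetic: BF16 stores each value in 2 bytes, so 7.11 billion parameters occupy about 14.22 GB. The sketch below covers only the weights; activations, framework overhead, and any batching are not included, and the function name `weight_memory_bytes` is hypothetical.

```python
PARAM_COUNT = 7.11e9       # parameter count stated in the article
BYTES_PER_BF16 = 2         # bfloat16 is a 16-bit format, i.e. 2 bytes per value


def weight_memory_bytes(param_count, bytes_per_param):
    """Approximate storage needed for the weights alone."""
    return param_count * bytes_per_param


total_bytes = weight_memory_bytes(PARAM_COUNT, BYTES_PER_BF16)
total_gb = total_bytes / 1e9        # decimal gigabytes
total_gib = total_bytes / 2**30     # binary gibibytes

print(f"{total_gb:.2f} GB, about {total_gib:.2f} GiB")
```

This is an estimate of storage for the parameters only; actual memory use at inference time depends on the runtime and input sizes.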

Community and Collaboration: The development of SFR-embedding-v2 has been a collaborative effort involving a dedicated team of Salesforce researchers. The team includes prominent contributors like Rui Meng, Ye Liu, Tong Niu, Shafiq Rayhan Joty, Caiming Xiong, Yingbo Zhou, and Semih Yavuz. Their combined expertise and innovative approaches have been instrumental in the success of this project.

While the current model is impressive, the Salesforce research team continues to explore new directions and enhancements. Future updates and improvements are expected to further push the boundaries of what AI models can achieve. The ongoing research aims to address current limitations and expand the model’s capabilities, ensuring it remains at the forefront of AI development.

The practical applications of SFR-embedding-v2 are vast and varied. As an embedding model, it can be used for feature extraction and natural language understanding, and its embeddings can supply retrieved context to text generation systems. Its ability to handle diverse tasks makes it suitable for industries ranging from healthcare to finance, where accurate and efficient data processing is crucial.

In conclusion, releasing the Salesforce Embedding Model (SFR-embedding-v2) is a significant advancement in AI technology. Its top performance on the HuggingFace MTEB benchmark, enhanced multitasking capabilities, and improvements in classification and clustering tasks highlight its potential to revolutionize various applications. The model’s robust technical specifications and the dedicated effort of the Salesforce research team ensure that it will continue to be a leading force in the AI community.

Sources

https://huggingface.co/Salesforce/SFR-Embedding-2_R

https://huggingface.co/spaces/mteb/leaderboard

The post Salesforce AI Unveils SFR-Embedding-v2: Reclaiming Top Spot on HuggingFace MTEB Benchmark with Advanced Multitasking and Enhanced Performance in AI appeared first on MarkTechPost.
