Machine learning methods, particularly deep neural networks (DNNs), are widely known to be vulnerable to adversarial attacks. In image classification, even tiny additive perturbations to the input images can drastically reduce the classification accuracy of a pre-trained model. Because such perturbations can be applied in real-world scenarios, they raise significant security concerns for critical applications of DNNs across many domains. These concerns underscore the importance of understanding and mitigating adversarial attacks.

Adversarial attacks are classified into white-box and black-box attacks. White-box attacks require comprehensive knowledge of the target machine-learning model, such as its architecture and parameters, which makes them impractical in many real-world scenarios. Black-box attacks, on the other hand, are more realistic because they do not require detailed knowledge of the target model. Black-box attacks can be divided into transfer-based attacks, score-based attacks (or soft-label attacks), and decision-based attacks (or hard-label attacks). Decision-based attacks are particularly stealthy because they rely solely on the hard label (the top-1 predicted class) returned by the target model to create adversarial examples.
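To make the difference between the two query-based black-box settings concrete, here is a minimal Python sketch. The three-class linear model and its weights are hypothetical, chosen only for illustration: a score-based attacker would see the probability vector returned by `soft_label_query`, while a decision-based attacker would see only the class index returned by `hard_label_query`.

```python
import math
from typing import List, Sequence


def logits(x: Sequence[float]) -> List[float]:
    """Hypothetical 3-class linear model: one weight row per class."""
    weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def soft_label_query(x: Sequence[float]) -> List[float]:
    """What a score-based attacker sees: the full softmax probability vector."""
    z = logits(x)
    m = max(z)  # subtract the max logit for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def hard_label_query(x: Sequence[float]) -> int:
    """What a decision-based attacker sees: only the top-1 class index."""
    z = logits(x)
    return max(range(len(z)), key=z.__getitem__)
```

For `x = [2.0, 1.0]` and a nudged input `x = [2.0, 1.9]`, `hard_label_query` returns class `0` both times, while the class-1 probability from `soft_label_query` rises. A score-based attacker can see that the nudge moved the input toward a decision boundary; a decision-based attacker learns nothing from it.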

Researchers focus on decision-based attacks because of their general applicability and effectiveness in real-world adversarial situations. These attacks aim to deceive the target model while adhering to constraints such as generating adversarial examples with as few queries as possible and keeping the perturbation strength within a predefined threshold. Violating these constraints makes the attack easier to detect or causes it to fail. The challenge for attackers is significant: because they lack detailed knowledge of the target model and have no access to its output scores, it is difficult to determine the decision boundary and optimize the perturbation direction.
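The two constraints above, a query budget and a perturbation threshold, can be expressed as a small Python sketch. The wrapper class, the choice of the L2 norm, and the `budget` and `epsilon` parameters are assumptions for illustration, not details taken from the paper.

```python
import math
from typing import Callable, Sequence


class QueryBudgetExceeded(Exception):
    """Raised when the attacker has used up its allotted queries."""


class HardLabelOracle:
    """Hypothetical wrapper: exposes only the top-1 label and counts every query."""

    def __init__(self, classify: Callable[[Sequence[float]], int], budget: int) -> None:
        self._classify = classify
        self.budget = budget
        self.queries = 0

    def label(self, x: Sequence[float]) -> int:
        if self.queries >= self.budget:
            raise QueryBudgetExceeded(f"all {self.budget} queries used")
        self.queries += 1
        return self._classify(x)


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(t * t for t in v))


def is_successful(oracle: HardLabelOracle, x: Sequence[float], delta: Sequence[float],
                  true_label: int, epsilon: float) -> bool:
    """Success requires ||delta||_2 <= epsilon AND a flipped label."""
    if l2_norm(delta) > epsilon:
        return False  # perturbation too strong: rejected without spending a query
    x_adv = [xi + di for xi, di in zip(x, delta)]
    return oracle.label(x_adv) != true_label
```

Checking the norm before querying means that a perturbation exceeding the threshold costs no queries; only candidates that satisfy the strength constraint consume the budget.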

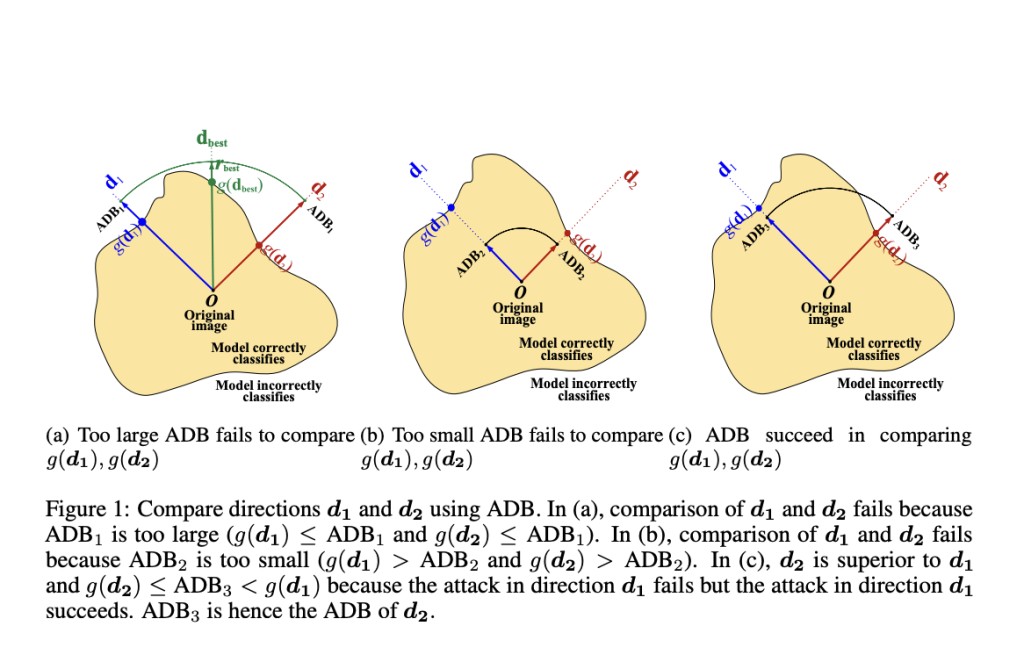

Existing decision-based attacks can be divided into random search, gradient estimation, and geometric modeling attacks. In this research, the team focuses on random search attacks, which aim to find the perturbation direction whose decision boundary lies closest to the original input, that is, the direction that requires the smallest perturbation. Query-intensive exact search techniques such as binary search are typically used to locate the decision boundary along each candidate perturbation direction. However, binary search demands many queries per direction, resulting in poor query efficiency.
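A minimal Python sketch of that binary search follows. It assumes a hard-label function `label(x)`, a unit-norm `direction`, and an upper radius `r_high` at which the input is already misclassified; these names are hypothetical. It also assumes the input stays misclassified beyond the boundary along the direction. Each halving step costs one query, so reaching tolerance `tol` takes about ⌈log2(r_high / tol)⌉ queries for every direction tried.

```python
from typing import Callable, Sequence, Tuple


def binary_search_boundary(
    label: Callable[[Sequence[float]], int],
    x: Sequence[float],
    direction: Sequence[float],
    true_label: int,
    r_high: float,
    tol: float = 1e-3,
) -> Tuple[float, int]:
    """Locate the boundary radius along `direction` to within `tol`.

    Assumes x + r_high * direction is already misclassified.
    Returns (radius, queries_used); the returned radius is adversarial.
    """
    r_low, queries = 0.0, 0
    while r_high - r_low > tol:
        mid = (r_low + r_high) / 2
        queries += 1  # one hard-label query per halving step
        point = [xi + mid * di for xi, di in zip(x, direction)]
        if label(point) != true_label:
            r_high = mid  # still adversarial: boundary is at or below mid
        else:
            r_low = mid   # still correctly classified: boundary lies above mid
    return r_high, queries
```

For a hypothetical linear classifier `lambda x: 1 if sum(x) > 0 else 0`, with `x = [1.0, 1.0]`, direction `[-1/√2, -1/√2]`, `r_high = 4.0`, and `tol = 1e-3`, the search returns a radius less than 0.001 above √2 ≈ 1.414 after 12 queries. A random search attack pays roughly this cost for every candidate direction it evaluates.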

The primary issue with random search attacks is the high number of queries needed to identify the decision boundary and optimize the perturbation direction. This increases the likelihood of detection and reduces the attack’s success rate. Enhancing attack efficiency and minimizing the number of queries are essential for improving decision-based attacks. Various strategies have been proposed to improve query efficiency, including optimizing the search process and employing more sophisticated algorithms to estimate the decision boundary more accurately and with fewer queries.
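One generic way to cut queries in a random search attack is sketched below in Python. It is not the ADBA method from the paper; it is a common query-saving idea used here only as an illustration. Before running a full binary search on a new random direction, the attack spends a single query at the current best radius. If the input is not yet misclassified there, the direction is skipped, because (assuming the input stays misclassified beyond the boundary along each direction) its boundary must lie farther away. The names `random_search_attack`, `r_max`, `tol`, and `seed` are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Label = Callable[[Sequence[float]], int]


def random_unit_vector(dim: int, rng: random.Random) -> List[float]:
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    n = math.sqrt(sum(t * t for t in v))
    return [t / n for t in v]


def is_adv(label: Label, x: Sequence[float], d: Sequence[float], r: float, true_label: int) -> bool:
    return label([xi + r * di for xi, di in zip(x, d)]) != true_label


def boundary_radius(label: Label, x, d, true_label: int, r_high: float, tol: float) -> Tuple[float, int]:
    """Binary search on [0, r_high]; assumes x + r_high * d is adversarial."""
    r_low, queries = 0.0, 0
    while r_high - r_low > tol:
        mid = (r_low + r_high) / 2
        queries += 1
        if is_adv(label, x, d, mid, true_label):
            r_high = mid
        else:
            r_low = mid
    return r_high, queries


def random_search_attack(label: Label, x: Sequence[float], true_label: int, n_directions: int,
                         r_max: float = 10.0, tol: float = 1e-3,
                         seed: int = 0) -> Tuple[float, Optional[List[float]], int]:
    """Return (best_radius, best_direction, total_queries)."""
    rng = random.Random(seed)
    best_r, best_d, queries = math.inf, None, 0
    for _ in range(n_directions):
        d = random_unit_vector(len(x), rng)
        r_check = min(best_r, r_max)
        queries += 1  # the single pre-check query
        if not is_adv(label, x, d, r_check, true_label):
            continue  # boundary along d is no closer than the current best: skip it
        r, q = boundary_radius(label, x, d, true_label, r_check, tol)
        queries += q
        if r < best_r:
            best_r, best_d = r, d
    return best_r, best_d, queries
```

Without the pre-check, every direction would cost about ⌈log2(r_max / tol)⌉ queries (14 with the defaults above). With it, directions that cannot beat the current best cost only one query, and the binary search that does run is confined to the shrinking interval [0, best radius].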

Improving the efficiency of decision-based attacks involves a delicate balance between minimizing the number of queries and maintaining effective perturbation strategies. The researchers suggest that future studies continue to explore innovative methods to enhance the efficiency and effectiveness of these attacks. Such work would help ensure that DNNs can be robustly tested and secured against potential adversarial threats, addressing the growing concerns over their vulnerabilities in critical applications.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post This AI Paper Proposes Approximation Decision Boundary ADBA: An AI Approach for Black-Box Adversarial Attacks appeared first on MarkTechPost.