Large language models (LLMs) built on the transformer architecture are typically pre-trained with a fixed context window, such as 4K tokens. However, many applications require processing much longer contexts, up to 256K tokens. Extending the context length of these models is challenging, particularly in making effective use of information in the middle of the context, a weakness often referred to as the “Lost-in-the-Middle” problem. Existing methods that extend context length often need extensive fine-tuning at the target length and still struggle to use information from the middle of the context.

Researchers from the Beijing Institute for General Artificial Intelligence (BIGAI) and the National Key Laboratory of General Artificial Intelligence, both in Beijing, China, introduce CREAM (Continuity-Relativity indExing with gAussian Middle) to address these challenges in extending the context window of pre-trained LLMs. Current approaches include positional encoding (PE)-based methods, which rely on interpolated positional encodings but require fine-tuning on the target context length, resulting in high computational overhead. Other methods, such as efficient transformers and memory augmentation, modify the model architecture or add supplementary modules, complicating implementation and adaptation.
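To make the PE-based baseline concrete, here is a minimal sketch of linear position interpolation, one well-known interpolation scheme: the position indices of a longer input are rescaled so that they stay inside the pre-trained range. This is background for comparison, not CREAM itself; the function name and the 4K-to-16K numbers are illustrative assumptions.

```python
def interpolate_positions(seq_len: int, pretrained_len: int) -> list[float]:
    """Linearly rescale positions 0..seq_len-1 into [0, pretrained_len)."""
    if seq_len <= pretrained_len:
        # Short inputs already fit; keep the original integer positions.
        return [float(i) for i in range(seq_len)]
    # Compress every position by the same factor (e.g. 4096 / 16384 = 0.25).
    scale = pretrained_len / seq_len
    return [i * scale for i in range(seq_len)]


positions = interpolate_positions(16384, 4096)
print(positions[:3])  # [0.0, 0.25, 0.5]
print(positions[-1])  # 4095.75
```

Every rescaled position stays inside the pre-trained range, but the model is still fine-tuned on full-length sequences (here 16K tokens), which is the computational overhead described above.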

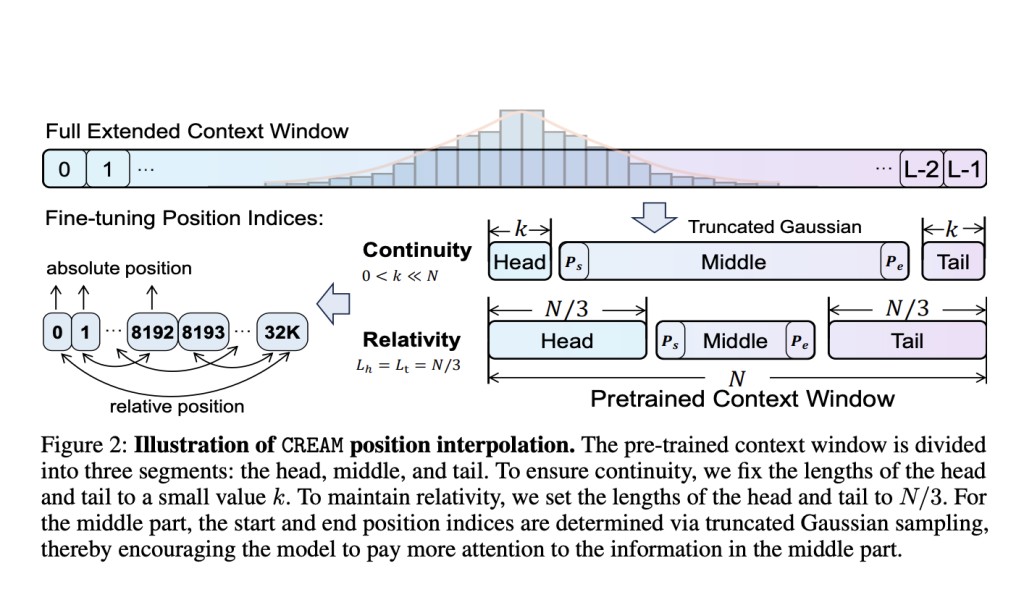

In contrast, CREAM is designed to extend LLMs to much longer contexts efficiently. It manipulates position indices to interpolate positional encodings within the pre-trained context window size, and it introduces a truncated Gaussian sampling method that focuses fine-tuning on the middle part of the context. This allows the model to be fine-tuned within its pre-trained window size while still performing effectively on extended contexts of up to 256K tokens.
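The index manipulation and middle-focused sampling can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the head and tail are given fixed lengths, and the truncated Gaussian is centred on the midpoint of the allowed range and implemented by rejection sampling. The paper's actual segment lengths and Gaussian parameters are not stated in this article. A training sequence of `window` tokens (the pre-trained length) receives position ids that reach all the way to `target` (the extended length), with the head and tail pinned to the two ends and a contiguous middle block placed by the sampler.

```python
import random


def truncated_gaussian_int(low: int, high: int, sigma: float,
                           rng: random.Random) -> int:
    """Draw an integer in [low, high] from a Gaussian centred on the midpoint,
    rejecting draws that fall outside the range (a truncated Gaussian)."""
    if low == high:
        return low
    mu = (low + high) / 2
    while True:
        x = round(rng.gauss(mu, sigma))
        if low <= x <= high:
            return x


def cream_style_position_ids(window: int, target: int, head: int, tail: int,
                             sigma: float, rng: random.Random) -> list[int]:
    """Hypothetical sketch: `window` position ids that together span [0, target).

    head   -> 0 .. head-1                (beginning of the long context)
    middle -> start .. start+mid-1       (one contiguous block; start is sampled)
    tail   -> target-tail .. target-1    (end of the long context)
    """
    mid = window - head - tail
    if mid <= 0 or window > target:
        raise ValueError("segments do not fit")
    # The middle block may start anywhere after the head, as long as it ends
    # before the tail begins; the Gaussian favours starts near the centre.
    start = truncated_gaussian_int(head, target - tail - mid, sigma, rng)
    return (list(range(head))
            + list(range(start, start + mid))
            + list(range(target - tail, target)))


rng = random.Random(0)
ids = cream_style_position_ids(window=4096, target=32768, head=1365,
                               tail=1365, sigma=3000.0, rng=rng)
print(len(ids), ids[0], ids[-1])  # 4096 0 32767
```

Because each training sequence still has only `window` tokens, fine-tuning stays within the pre-trained window, while the model sees relative distances of up to `target - 1` positions.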

CREAM’s methodology involves two main strategies: ensuring continuity and ensuring relativity in positional encoding. For continuity, CREAM manipulates position indices to generate shorter sequences within the pre-trained context window while keeping the position indices densely connected. For relativity, it leverages rotary positional encoding (RoPE) to learn the relative positions between token pairs. In addition, CREAM divides the pre-trained context window into three segments (head, middle, and tail) and uses a truncated Gaussian function to prioritize the middle segment during fine-tuning.
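The relativity property that CREAM relies on can be checked numerically. The sketch below is a plain-Python RoPE written for illustration (the function names and example vectors are assumptions, not from the paper): it rotates each pair of dimensions by an angle proportional to the position, so the query-key dot product depends only on the offset between the two positions. Adjacent dimensions are paired here; some implementations pair dimension i with i + d/2 instead, which preserves the same property.

```python
import math


def rope(vec: list[float], pos: float, base: float = 10000.0) -> list[float]:
    """Rotate pairs (x0, x1), (x2, x3), ... by angle pos * theta_i,
    where theta_i = base ** (-2i / d)."""
    d = len(vec)
    if d % 2:
        raise ValueError("dimension must be even")
    out: list[float] = []
    for i in range(d // 2):
        angle = pos * base ** (-2 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[2 * i], vec[2 * i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

# Score for a query at position 10 and a key at position 3 (offset 7) ...
s1 = dot(rope(q, 10), rope(k, 3))
# ... equals the score when both are shifted by 1000 (offset still 7).
s2 = dot(rope(q, 1010), rope(k, 1003))
print(math.isclose(s1, s2, rel_tol=1e-9, abs_tol=1e-9))  # True
```

This is why shifting a whole block of position ids by a constant, as in the head/middle/tail layout, leaves the attention scores between tokens inside that block unchanged.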

Experiments with the Llama-2-7B and Llama-2-7B-Chat models demonstrated CREAM’s efficiency and effectiveness. CREAM extended the context window from 4K up to 256K tokens and showed superior performance on long-context understanding tasks. Specifically, CREAM outperformed existing methods at retrieving information from long contexts and at alleviating the “Lost-in-the-Middle” issue. It also achieved promising results on long-context question-answering and summarization tasks, outperforming strong baselines with only a small number of fine-tuning steps.

In conclusion, CREAM addresses the limitations of current methods by efficiently extending the context length of LLMs while focusing on information in the middle of the context. The method balances continuity and relativity in positional encoding and uses truncated Gaussian sampling to improve understanding of middle-context content. Experimental results validate CREAM’s effectiveness in extending context windows and improving performance in long-context scenarios, offering a practical solution to the “Lost-in-the-Middle” problem.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper from China Proposes Continuity-Relativity indExing with gAussian Middle (CREAM): A Simple yet Effective AI Method to Extend the Context of Large Language Models appeared first on MarkTechPost.
