Artificial intelligence (AI) focuses on developing systems capable of performing tasks that typically require human intelligence, such as learning, reasoning, problem-solving, perception, and language understanding. These technologies have applications across many industries, including healthcare, finance, transportation, and entertainment, making AI a vital area of research and development.

A significant challenge in AI is optimizing models to perform tasks efficiently and accurately. This involves finding methods that enhance model performance and maintain computational efficiency. Researchers aim to create models that generalize well across diverse datasets and tasks, which is essential for practical applications with limited resources and high task variability.

Existing research includes various frameworks and models for optimizing AI performance. Common methods involve supervised fine-tuning on large datasets and using preference datasets to refine model responses. The objective functions examined in this study include Dynamic Blended Adaptive Quantile Loss, Performance Adaptive Decay Logistic Loss, Adaptive Quantile Loss, and Adaptive Quantile Feedback Loss. Such methods aim to balance reward accuracy and computational efficiency so that models remain robust and versatile in real-world applications.

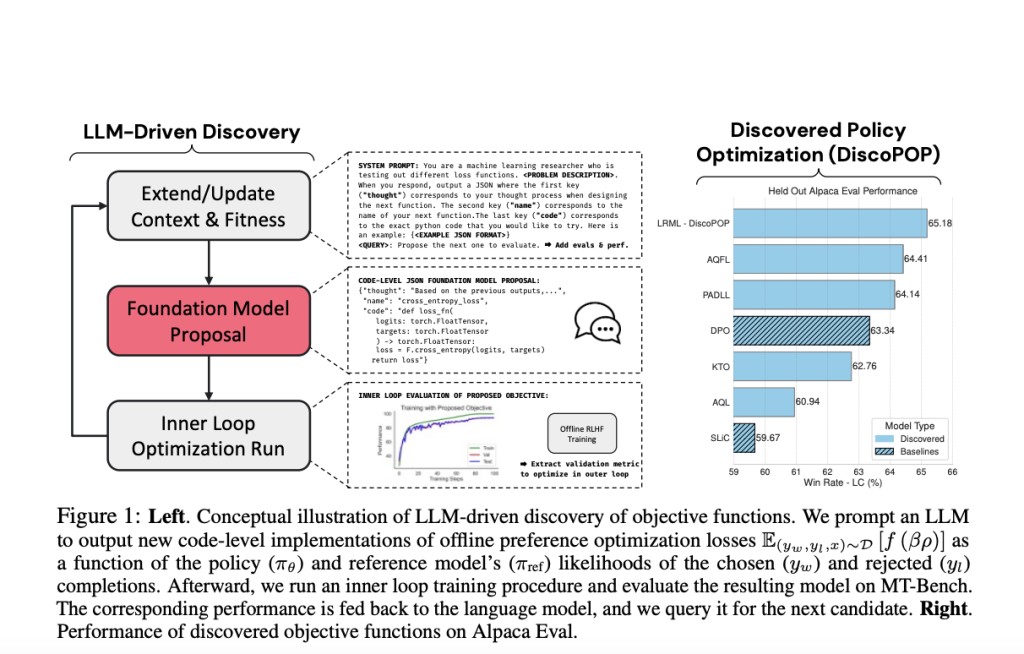

Researchers from Sakana AI and FLAIR, the University of Cambridge, and the University of Oxford have introduced several novel objective functions designed to improve the performance of language models in preference-based tasks. These new loss functions were created to enhance how models respond in multi-turn dialogues and other complex scenarios. The researchers aimed to discover objective functions that lead to better model performance, focusing on methods that balance reward accuracy and loss metrics effectively.
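To make the idea of a preference-based objective concrete, here is a minimal sketch of the widely used DPO-style logistic loss, a common baseline for this kind of training. It is not one of the newly introduced functions, whose exact formulas this article does not give. The function names and the `beta` value are illustrative assumptions.

```python
import math


def log_ratio_difference(logp_chosen, logp_rejected,
                         ref_logp_chosen, ref_logp_rejected):
    """How much more the policy favors the chosen response than the reference does.

    rho = [log pi(y_w) - log pi_ref(y_w)] - [log pi(y_l) - log pi_ref(y_l)]
    """
    return (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)


def logistic_preference_loss(rho, beta=0.1):
    """Baseline logistic loss: -log(sigmoid(beta * rho)).

    Computed as softplus(-beta * rho) in a numerically stable form.
    beta=0.1 is an assumed value, not one taken from the article.
    """
    z = -beta * rho
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


# A pair where the policy already prefers the chosen response gets a lower loss
# than a pair where it prefers the rejected one.
good = log_ratio_difference(-10.0, -14.0, -11.0, -12.0)  # rho = 3.0
bad = log_ratio_difference(-14.0, -10.0, -12.0, -11.0)   # rho = -3.0
print(logistic_preference_loss(good) < logistic_preference_loss(bad))
```

A new objective function in this setting would replace `logistic_preference_loss` with a different function of the same quantity `rho`, leaving the rest of the training loop unchanged.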

The proposed methodology used a large language model (LLM) as a judge to evaluate the quality of responses generated by different objective functions. Training began with a supervised fine-tuned model containing over 7 billion parameters. This model was then further trained using a preference dataset, which included high-quality chosen responses from various datasets. The researchers employed fixed hyperparameters, such as a learning rate of 5e-7 and a per-device batch size of two, across all training runs. They used the AdamW optimization algorithm and trained the models on eight NVIDIA A100 GPUs. Each training session took approximately 30 minutes, and the models were evaluated on benchmarks like MT-Bench to measure performance.
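The fixed training settings described above can be collected into a small configuration object. This is only a sketch: the class and field names are hypothetical, and the gradient accumulation setting is an assumption, since the article does not state it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferenceTrainingConfig:
    """Hyperparameters reported in the article (names are illustrative)."""
    learning_rate: float = 5e-7
    per_device_batch_size: int = 2
    num_gpus: int = 8                      # eight NVIDIA A100 GPUs
    optimizer: str = "adamw"
    # Assumption: the article does not mention gradient accumulation.
    gradient_accumulation_steps: int = 1

    def effective_batch_size(self) -> int:
        """Examples consumed per optimizer step across all devices."""
        return (self.per_device_batch_size
                * self.num_gpus
                * self.gradient_accumulation_steps)


config = PreferenceTrainingConfig()
print(config.effective_batch_size())  # 16 under the assumption above
```

Keeping these values fixed across runs means that differences in benchmark scores can be attributed to the objective function rather than to tuning.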

Results from the study indicated significant improvements with certain objective functions. The Dynamic Blended Adaptive Quantile Loss achieved an MT-Bench score of 7.978, demonstrating superior performance in generating accurate and helpful responses. The Performance Adaptive Decay Logistic Loss scored 7.941, highlighting its effectiveness. The Adaptive Quantile Loss had an MT-Bench score of 7.953, while the Adaptive Quantile Feedback Loss and Combined Exponential + Logistic Loss scored 7.931 and 7.925, respectively. These functions achieved higher scores on benchmarks, showing improvements in reward accuracy and maintaining low KL-divergence, which is crucial for model stability.
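The two diagnostics mentioned here, reward accuracy and KL-divergence, are commonly computed as in the sketch below. The exact definitions used in the study are not given in this article, so the implicit-reward formulation, the sample-based KL estimate, and all names and numbers are assumptions for illustration.

```python
def reward_accuracy(pairs, beta=0.1):
    """Fraction of preference pairs where the chosen response gets the higher
    implicit reward, taken here as beta * (log pi - log pi_ref).

    pairs: list of (chosen_log_ratio, rejected_log_ratio) tuples.
    """
    correct = sum(1 for chosen, rejected in pairs if beta * chosen > beta * rejected)
    return correct / len(pairs)


def mean_kl_estimate(policy_logps, ref_logps):
    """Monte-Carlo estimate of KL(pi || pi_ref) from responses sampled from
    the policy: the average of log pi(y) - log pi_ref(y)."""
    diffs = [p - q for p, q in zip(policy_logps, ref_logps)]
    return sum(diffs) / len(diffs)


# Made-up numbers for illustration only.
pairs = [(1.0, 0.5), (0.2, 0.4), (0.0, -1.0), (0.3, 0.1)]
print(reward_accuracy(pairs))                          # 0.75
print(mean_kl_estimate([-1.0, -2.0], [-1.5, -2.5]))    # 0.5
```

A low KL estimate indicates that the fine-tuned model stays close to the reference model, which is why it is read as a sign of stability.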

The researchers tested their objective functions on additional tasks such as text summarization and sentiment analysis to further validate their findings. For the Reddit TL;DR summarization task, models fine-tuned with the new loss functions performed well, suggesting that these functions generalize effectively to other tasks beyond dialogue generation. In the IMDb positive text generation task, models trained with the proposed loss functions also showed improved sentiment generation capabilities, with specific functions achieving higher rewards and better divergence metrics.

In conclusion, this research addresses the problem of optimizing AI models for better performance in preference-based tasks. By introducing new loss functions and leveraging LLM evaluations, the team has demonstrated methods to improve model accuracy and generalization and provided valuable insights into AI optimization. These findings underscore the potential of carefully designed objective functions to enhance model performance across various applications.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

The post Optimizing for Choice: Novel Loss Functions Enhance AI Model Generalizability and Performance appeared first on MarkTechPost.
