Brain-computer interfaces (BCIs) focus on creating direct communication pathways between the brain and external devices. This technology has applications in medical, entertainment, and communication sectors, enabling tasks such as controlling prosthetic limbs, interacting with virtual environments, and decoding complex cognitive states from brain activity. BCIs are particularly impactful in assisting individuals with disabilities, enhancing human-computer interaction, and advancing our understanding of neural mechanisms.

Decoding intricate auditory information, such as music, from non-invasive brain signals presents significant challenges. Traditional methods often require complex data processing or invasive procedures, which makes real-time applications and broader use difficult. The core problem is recovering music’s detailed, multifaceted nature, with its various instruments, voices, and effects, from comparatively simple brainwave recordings. This complexity calls for advanced modeling techniques to reconstruct music from brain signals accurately.

Existing approaches to decoding music from brain signals include fMRI and ECoG, which, while effective, are either impractical for real-time use or invasive. Non-invasive EEG methods have been explored, but they often require manual data preprocessing and focus on simpler auditory stimuli. For instance, previous studies have successfully decoded music from EEG signals, but they were limited to simpler, monophonic tunes and required extensive data cleaning and manual channel selection.

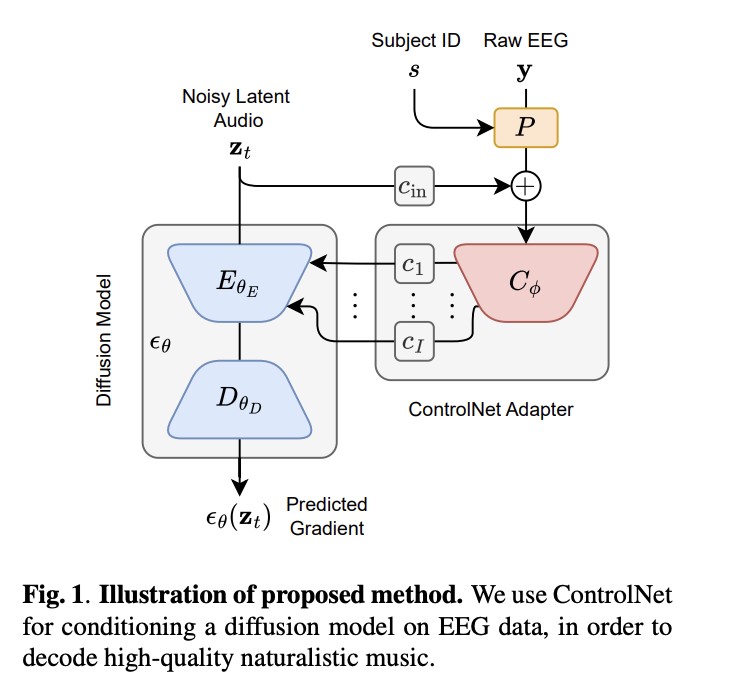

Researchers from Ca’ Foscari University of Venice, Sapienza University of Rome, and Sony CSL have introduced a novel method using latent diffusion models to decode naturalistic music from EEG data. This approach aims to improve the quality and complexity of the decoded music without extensive data preprocessing. By leveraging ControlNet, a parameter-efficient fine-tuning method for diffusion models, the researchers conditioned a pre-trained diffusion model on raw EEG signals. This innovative approach seeks to overcome the limitations of previous methods by handling complex, polyphonic music and reducing the need for manual data handling.
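The idea behind ControlNet-style conditioning can be illustrated with a toy example. The sketch below is not the researchers' architecture: it uses plain NumPy matrices in place of a diffusion model, and every name and size (`W_frozen`, `E_proj`, `Z_out`, `LATENT_DIM`, `EEG_DIM`) is a hypothetical chosen for illustration. It shows only the zero-initialization trick used by ControlNet, where a trainable branch is attached to a frozen layer through a projection that starts at zero, so the pre-trained behavior is untouched before fine-tuning begins.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, for illustration only.
LATENT_DIM, EEG_DIM = 8, 4

# Frozen "pre-trained" layer: its weights are never updated.
W_frozen = rng.normal(size=(LATENT_DIM, LATENT_DIM))

# Trainable branch: a copy of the frozen weights, a projection that brings
# EEG features into the latent space, and an output projection set to zero.
W_copy = W_frozen.copy()
E_proj = rng.normal(size=(EEG_DIM, LATENT_DIM))
Z_out = np.zeros((LATENT_DIM, LATENT_DIM))


def frozen_layer(x):
    """Output of the pre-trained layer without any conditioning."""
    return np.tanh(x @ W_frozen)


def controlled_layer(x, eeg_features, z_out=Z_out):
    """Frozen output plus the conditioning branch, scaled through z_out."""
    base = frozen_layer(x)
    control = np.tanh((x + eeg_features @ E_proj) @ W_copy)
    return base + control @ z_out


x = rng.normal(size=(2, LATENT_DIM))
eeg = rng.normal(size=(2, EEG_DIM))

# With z_out still at zero, the conditioned layer reproduces the frozen one.
unchanged_at_init = np.allclose(controlled_layer(x, eeg), frozen_layer(x))
```

Only the branch parameters would be trained in such a setup, which is what makes this kind of fine-tuning parameter-efficient relative to retraining the whole model.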

The proposed method employs ControlNet to condition a pre-trained diffusion model on raw EEG signals, so that brainwave patterns steer the generation of complex audio. Preprocessing is kept minimal: a robust scaler and standard deviation clamping, with no extensive manual intervention. A convolutional encoder maps the EEG signals to latent representations, which then guide the diffusion process to produce naturalistic music tracks. The researchers also use neural embedding-based metrics to assess the quality of the generated music.
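As a rough illustration of what such minimal preprocessing might look like, here is a NumPy sketch of robust scaling followed by standard deviation clamping. The details are assumptions, not taken from the paper: scaling is done per channel with the median and interquartile range, clamping is symmetric around zero, and the threshold `n_std=5.0` is a placeholder value.

```python
import numpy as np


def robust_scale(eeg):
    """Center each channel on its median and divide by its interquartile range.

    eeg has shape (channels, samples).
    """
    median = np.median(eeg, axis=1, keepdims=True)
    q75, q25 = np.percentile(eeg, [75, 25], axis=1, keepdims=True)
    iqr = q75 - q25
    iqr = np.where(iqr == 0, 1.0, iqr)  # avoid dividing by zero on flat channels
    return (eeg - median) / iqr


def clamp_to_std(eeg, n_std=5.0):
    """Clip each channel to +/- n_std of its own standard deviation.

    n_std is an assumed threshold; the article does not state the value used.
    """
    std = eeg.std(axis=1, keepdims=True)
    return np.clip(eeg, -n_std * std, n_std * std)


# Synthetic example: 4 channels of noise with one large artifact spike.
rng = np.random.default_rng(0)
raw = rng.normal(scale=50.0, size=(4, 1000))
raw[0, 10] = 1e4

scaled = robust_scale(raw)
clean = clamp_to_std(scaled, n_std=5.0)
```

The median and interquartile range are less sensitive to outliers than the mean and standard deviation, which is why a robust scaler suits raw EEG that has not been manually cleaned; the clamp then bounds whatever extreme values remain.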

The new method was evaluated using several neural embedding-based metrics. The researchers report that their model significantly outperformed a baseline convolutional network at reconstructing music from EEG data. For instance, the ControlNet-2 model achieved a CLAP Score of 0.60, while the baseline convolutional network scored significantly lower. On the Fréchet Audio Distance (FAD), where lower is better, the proposed method scored 0.36 compared to 1.09 for the baseline. The mean square error (MSE) was reduced to 148.59 with the proposed method. The Pearson coefficient for the ControlNet-2 model was 0.018, which the study presents as a closer match between the generated and ground-truth tracks, although the value is small in absolute terms.
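For readers unfamiliar with these metrics, the sketch below shows how MSE, the Pearson coefficient, and a Fréchet distance between two sets of embeddings can be computed with NumPy. It is a generic illustration, not the researchers' evaluation code: the article does not say which representation MSE and Pearson were computed on, and a real FAD would use embeddings from a pre-trained audio model rather than the random placeholder arrays used here. The function names are hypothetical.

```python
import numpy as np


def mse(a, b):
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))


def pearson(a, b):
    """Pearson correlation coefficient between two flattened arrays."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])


def frechet_distance(emb_real, emb_gen):
    """Frechet distance between Gaussians fitted to two embedding sets.

    Each input has shape (n_items, embedding_dim). The formula is
    ||mu_r - mu_g||^2 + Tr(C_r + C_g - 2 (C_r C_g)^(1/2)).
    """
    mu_r, mu_g = emb_real.mean(axis=0), emb_gen.mean(axis=0)
    cov_r = np.cov(emb_real, rowvar=False)
    cov_g = np.cov(emb_gen, rowvar=False)
    # Tr((C_r C_g)^(1/2)) equals the sum of square roots of the eigenvalues
    # of C_r C_g; small negative values from round-off are clipped to zero.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_sqrt)


# Placeholder embeddings standing in for real audio-model embeddings.
rng = np.random.default_rng(0)
real = rng.normal(size=(200, 4))
generated = real + 1.0  # same spread, shifted mean

fd_same = frechet_distance(real, real)
fd_shifted = frechet_distance(real, generated)
```

Lower MSE and Fréchet distance mean the generated audio is closer to the reference, while a Pearson coefficient closer to 1 means a stronger linear relationship.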

In conclusion, the research addresses the challenge of decoding complex music from non-invasive brain signals by introducing a novel method that requires only minimal preprocessing. The proposed diffusion model shows promising results in reconstructing naturalistic music, marking a notable advance in brain-computer interfaces and auditory decoding. The method’s ability to handle complex, polyphonic music without extensive manual preprocessing sets a new benchmark in EEG-based music reconstruction and paves the way for future developments in non-invasive BCIs and their applications.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post This AI Paper Discusses How Latent Diffusion Models Improve Music Decoding from Brain Waves appeared first on MarkTechPost.
