At Amazon Web Services (AWS), we have been making steady progress towards bringing our zero-extract, transform, and load (ETL) vision to life. With Amazon Aurora zero-ETL integration to Amazon Redshift, you can bring together the transactional data of Amazon Aurora with the analytics capabilities of Amazon Redshift. The integration helps you derive holistic insights across many applications, break data silos in your organization, and gain significant cost savings and operational efficiencies. At AWS re:Invent 2023, AWS announced four new zero-ETL integrations to make data access and analysis faster and effortless across data stores.

Customers across industries today are looking to increase revenue and customer engagement by implementing near real-time analytics use cases such as personalization strategies, fraud detection, inventory monitoring, and many more. The zero-ETL integrations enable you to unlock all such use cases.

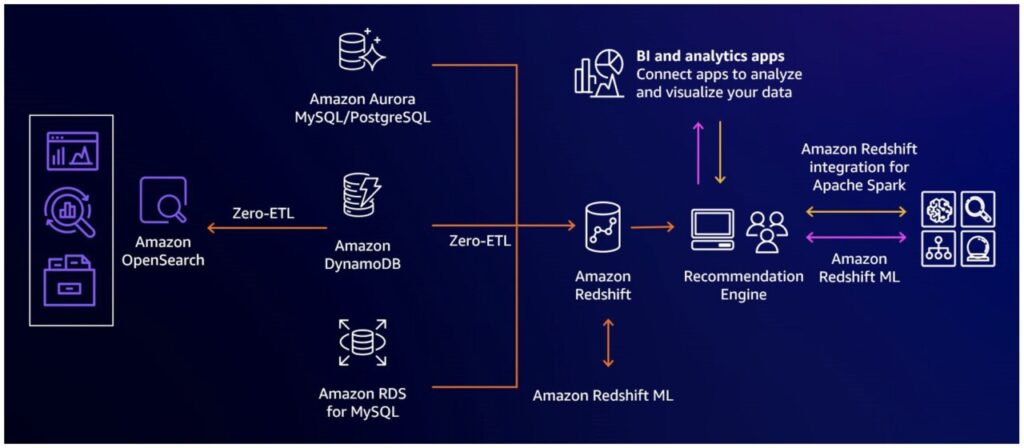

As of the writing of this post, Aurora zero-ETL integration with Amazon Redshift is generally available for Amazon Aurora MySQL, under public preview for Amazon Aurora PostgreSQL and Amazon RDS for MySQL, and under private preview for Amazon DynamoDB. For more information, see Working with Aurora zero-ETL integrations with Amazon Redshift. The following figure shows currently offered zero-ETL integrations.

In this post, we show you how to handle tables without primary keys while creating Amazon Aurora PostgreSQL zero-ETL integrations with Amazon Redshift. A similar strategy for Aurora MySQL and RDS for MySQL has been explained in Handle tables without primary keys while creating Amazon Aurora MySQL or Amazon RDS for MySQL zero-ETL integrations with Amazon Redshift.

Challenges of tables without primary keys

A primary key is an attribute that uniquely identifies a record (row) in a table. It’s defined as a combination of one or more columns, where none of the columns are nullable, and the combination of column values is unique across the table.

Relational databases significantly benefit from having a primary key defined, which helps them with data organization and increasing performance of queries which can use the indexes. A primary key also allows a simplified mechanism to consume database change logs by using the primary key as a mechanism to tie each change log event to the row being modified, and so is required to support zero-ETL integrations.

Zero-ETL integrations with a PostgreSQL source use logical replication as one of the building blocks. For a successful zero-ETL integration, it’s essential to have primary keys as a replica identity on your Aurora PostgreSQL tables so that it can successfully sync rows on an Amazon Redshift target for update and delete transactions. Therefore, Aurora PostgreSQL tables without primary keys can’t be replicated to the Amazon Redshift target data warehouse. To learn more, see the prerequisites and general limitations for zero-ETL Integrations.

Even though it’s important to consider using primary keys during schema design, there could be scenarios where a table on Aurora PostgreSQL doesn’t have a primary key. In this post, we show you approaches to handle tables without a primary key.

Prerequisites

Before getting started with solutions, ensure you have the following pre-requisites in place.

Aurora PostgreSQL (preview) provisioned or serverless cluster.

Amazon Redshift provisioned or Redshift Serverless data warehouse.

Aurora PostgreSQL zero-ETL integration with Amazon Redshift (preview) Active

Solution overview

If you have active zero-ETL integrations for public preview Aurora PostgreSQL to Amazon Redshift, you will notice that tables without primary keys fail to replicate to Amazon Redshift. The monitoring dashboard in Amazon Redshift displays their status as Failed, and the status of the overall zero-ETL integration changes to Needs attention.Â

If you encounter such a situation, you can use the following potential solutions:

1) Evaluate if any existing columns, keys, or unique Indexes can be used as a primary key

The simplest and most straightforward option is to find a unique or composite natural key that can easily become a primary key in your source Aurora PostgreSQL relational tables. If there is one, you should identify which columns can become the primary key of the table. When a primary key is placed on a partitioned table, it requires all columns in the partition key to be part of the primary key to incorporate unique constraint restrictions. The following are some ways you can identify potential primary keys for a table:

Check the table for unique indexes. These can be good primary key candidates.

If a unique index cannot be identified, check the pg_stats catalog view to see if the n_distinct column can be used to help identify a unique column.

After you identify a primary key, run the ALTER TABLE command to populate the primary key for the table as shown:

Altering large PostgreSQL tables to add a primary key might occasionally block all queries on that table during the index creation phase. It’s not always possible to predict the duration of these blocks, but they can impact workload. Adding a primary key directly is an acceptable choice only when the database system admins performing the task are positive that the workload won’t be impacted by this operation, or they have ensured that the operation is planned and performed with caution outside business hours.

As a workaround to reduce the blocking duration, you can first create a unique index concurrently on the specified column, followed by adding a primary key constraint on the unique index.

Creating a primary constraint on an existing unique index is notably quicker and will reduce the performance overhead of directly adding a primary key. This is a recommended approach on Aurora and Amazon Relational Database Service (Amazon RDS) databases if the database instance is serving a production or business critical workload and the table in question has significantly high read/write activity.

NOTE: Before running these commands in a production setup, it’s recommended to test them in a non-production environment to gauge run times and understand their behavior.

2) Add a synthetic primary key

If no column can serve as an effective primary key, you can create a synthetic column populated by a sequence number. Adding a sequence number to an existing table, and backfilling missing key values is a non-trivial exercise and requires proper planning, especially in a production environments.

2.1) Adding an identity column to an empty (or small) table

An identity column is a number generator and was introduced in PostgreSQL version 10 and is a SQL Standard Compliant variant of serial columns. Identity columns have an implicit sequence attached to them and a column in new rows will automatically have values from the sequence assigned to it. It’s recommended to use identity columns instead of serial columns.

If a table is very small in size or if there’s no data in the table, anticipated downtime is minimal so you can simply add an identity column as a primary key. The following sample code demonstrates the process of adding an identity column to an existing table on PostgreSQL.

PostgreSQL sample code:

The specific Data Definition Language (DDL) changes associated with this operation can be time and resource intensive and can result in downtime. Depending on the size of the table, this can take anywhere from seconds to hours and so is not recommended without due diligence. It might be an acceptable choice for smaller or empty tables which might require seconds of exclusive lock, but adding a column to a larger table is a highly intrusive operation that might have hours of downtime.Â

2.2) Adding a primary key to existing large table

Adding a new identity column to large transactional tables might cause extended downtime due to exclusive locking on the table, which can last from a few seconds to hundreds of hours. Also, adding an identity column requires the entire table and its indexes to be rewritten, which requires a lot of unused storage space. So directly adding an identity column to a large table in PostgreSQL might be unacceptable for production systems. To avoid running into storage related issues and to minimize blocking, you can build a solution using the following steps.

Add a new column to an existing table as Nullable with no default value. This will neither cause any blocking nor will it rewrite the entire table.

Create a sequence manually and reset its current value to more than the row count of the existing table. Be aware that getting a count(*) is an expensive operation. Using a hardcoded high value with estimated current row count from the view pg_stat_all_tables using its n_live_tup column, plus a good enough buffer for ongoing transactions, can also be used to start the sequence for onward transactions.

Associate the sequence created to be owned by the new column created in step 1. Let the value for all onward transactions be fetched from this sequence automatically.

Alternatively, you can also create a trigger that maps the new column to nextval of the sequence for all onward transactions. Using a trigger and function before every insert to the table might add some latency for each transaction. Use this strategy only after considering table size and latency requirements.

Backfill existing rows in batches. You can start with a backfill sequence starting from 1 and let it increment by 1 until existing rows are backfilled for the new column. This process requires row exclusive lock (locks a few rows at a time) and would take typically hours if the table is large. Therefore, doing this in batches is recommended. Date filters make suitable batches, other low cardinality columns—like department for an employee table—can also be considered. An evenly distributed acceptable row count batch is important. Plan ahead and position the updates strategically to cover larger batches when workloads are light.

Before you can make this new column a primary key, you must enforce the not null constraint. There are couple of ways you can achieve this.

Create a unique index with a concurrently clause, which allows the index to be built without an exclusive lock on the table. This will expedite the primary key creation phase, which requires exclusive lock to enforce not null and primary key constraints, but it will still perform relatively better as it will skip the index-creation phase of the process.

Alternatively, you can also add unique constraint by using CHECK with NOT VALID and later on VALIDATE the constraint. The validation phase doesn’t acquire an ACCESS EXCLUSIVE but only a SHARE UPDATE EXCLUSIVE lock so read/writes can continue.

PostgreSQL sample code:

There are other strategies to add an identity column to an existing large table in PostgreSQL such as cloning, duplicating, or copying existing table data to a new table with a primary key and using a combination of sequences or triggers for onward transactions plus a batch update of existing data to sync both tables. However, it might prove to be a complex operation because there might be foreign key implications in the existing table, which could be a difficult problem to solve. Other challenges like keeping the updated batches in sync between the two tables and handling in-flight transactions during cut-over to sync the two tables need to be dealt with as well.

Confirming a successful zero-ETL integration

From the zero-ETL integration point of view, adding a primary key to an existing table should automatically result in a re-synchronization of the table. The integration status will turn from Needs attention to Active, and the table status should transition from Failed to Resync Initiated (complete resync in preview), and finally to Synced when re-synchronization is complete. It might take a few minutes for the table to re-synchronize.

Regardless of the strategy you choose, it’s essential to test your approach using the preceding sample code or other alternatives that meet your use case in a non-production environment before making final changes in a production environment. To ensure minimum downtime, efficient planning and testing is always key.

Conclusion

In this post, we explained in detail how you can continue to use Aurora PostgreSQL zero-ETL integration to Amazon Redshift (preview) for scenarios where your source tables don’t have a primary key defined. We also provided a general guidance, strategies and perspective on how to handle primary key creation and modification in general in your Aurora PostgreSQL environment.

Get started using zero-ETL integration feature to efficiently run analytics on transactional or operational datasets.

About the authors

Rohit Vashishtha is a Senior Redshift Specialist Solutions Architect at AWS based in Dallas, Texas. He has over 17 years of experience architecting, building, leading, and maintaining big data platforms. Rohit helps customers modernize their analytic workloads using the breadth of AWS services and ensures that customers get the best price/performance with utmost security and data governance.

Sikandra Chaudhary is a Sr Database Specialist Solutions Architect at AWS. Sikandra helps AWS customers with their architecture design and provides efficient solutions to migrate and run their database workloads on AWS. He has been working with Microsoft SQL Server deployments since 2008.

Abhinav Dhandh is a Senior Product Manager – Technical for Amazon Aurora, and he leads all zero-ETL and change data capture (CDC) streaming product vision and roadmap for Aurora MySQL and PostgreSQL databases within AWS. He also leads the Aurora MySQL product management and has launched multiple capabilities around version currency, upgrades, migrations, availability, scaling, and performance.

Kanishka Chaturvedi is a Principal Engineer for Amazon Aurora, leading the technical direction of ZeroETL initiatives for Aurora engines. His background revolves around databases, having incubated other Amazon database services like Amazon DocumentDB and Amazon Timestream.

Gopal Paliwal is a Principal Engineer for Amazon Redshift, leading the software development of ZeroETL initiatives for Amazon Redshift.

Source: Read More