In the rapidly advancing field of computer vision, models that can learn and adapt with minimal human intervention have opened new avenues for research and application. A pivotal line of work uses machine learning to let models switch between tasks efficiently, making them more flexible and applicable across a wider range of scenarios.

Computer vision systems have traditionally required exhaustive datasets tailored to each task to function effectively. This need for vast amounts of task-specific data poses a significant challenge, limiting the speed and adaptability of model deployment in dynamic environments. Recent work has introduced in-context learning models that adapt to new tasks using only a few contextual examples. This approach simplifies the training process and reduces the dependency on large datasets.

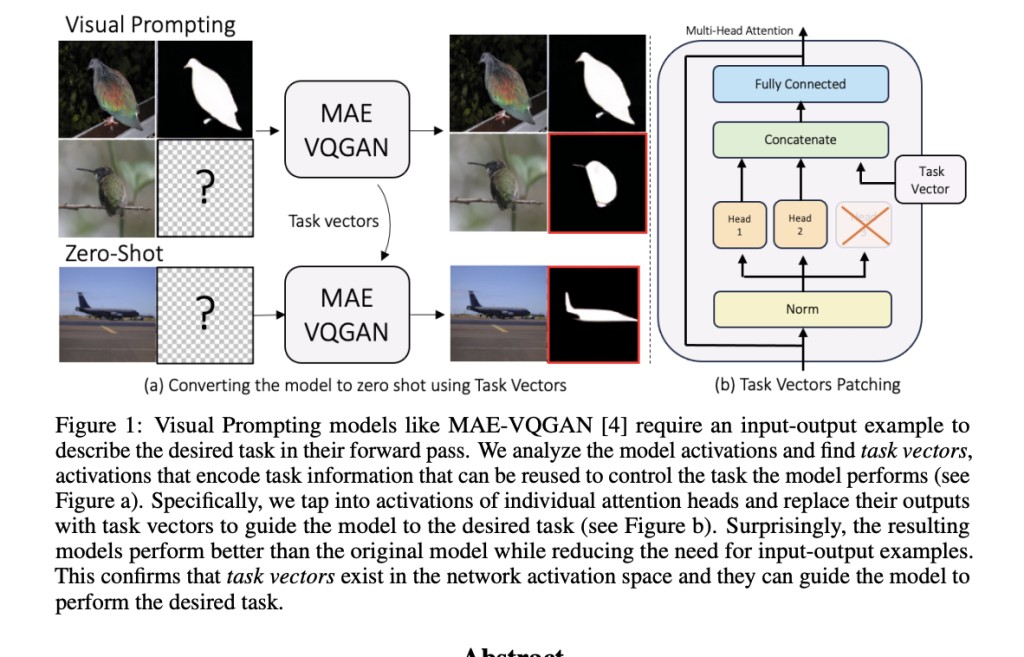

Researchers from UC Berkeley and Tel Aviv University present a breakthrough in task adaptability without requiring input-output examples. Their research focuses on identifying and utilizing ‘task vectors’, specific patterns of activations within a model’s neural network that encode task-related information. These vectors can be manipulated to direct the model’s focus, enabling it to switch tasks with minimal external input.
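
To make the idea of a task vector concrete, here is a minimal sketch in plain Python. It assumes, as an illustration only, that a task vector is obtained by averaging the activations recorded at one position of the network over several examples of the same task, and that "patching" means overwriting the activation at that position during a forward pass. The function names `mean_task_vector` and `patch_activations`, and the `(layer, head)` keys, are hypothetical and are not taken from the paper's code.

```python
from statistics import fmean


def mean_task_vector(activations):
    """Average per-example activation vectors (lists of floats) into one vector.

    Assumption: each inner list is the activation recorded at the same
    network position for a different example of the same task.
    """
    if not activations:
        raise ValueError("need at least one activation vector")
    dim = len(activations[0])
    if any(len(a) != dim for a in activations):
        raise ValueError("all activation vectors must have the same length")
    return [fmean([a[i] for a in activations]) for i in range(dim)]


def patch_activations(layer_outputs, task_vectors):
    """Return a copy of layer_outputs with selected positions overwritten.

    layer_outputs: dict mapping a hypothetical (layer, head) position
                   to its activation vector.
    task_vectors:  dict mapping a subset of those positions to the
                   replacement (task) vectors.
    """
    patched = {pos: list(vec) for pos, vec in layer_outputs.items()}
    for pos, vec in task_vectors.items():
        if pos not in patched:
            raise KeyError(f"unknown position {pos}")
        patched[pos] = list(vec)  # overwrite this position with the task vector
    return patched
```

In a real model the dictionary of activations would be replaced by hooks on the network's intermediate layers; the sketch only shows the bookkeeping.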

The researchers’ methodology involves analyzing the activation patterns of the MAE-VQGAN model, a prominent visual prompting model. By scrutinizing these activations, the team identified specific vectors that consistently encoded information relevant to various visual tasks. Utilizing the REINFORCE algorithm, they strategically searched for and modified these task vectors to optimize the model’s performance across multiple tasks.
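
The search step can be illustrated with a small, self-contained REINFORCE loop. This is a sketch under stated assumptions, not the authors' implementation: it treats the choice of which positions to patch as a binary mask, samples masks from independent Bernoulli distributions, and nudges each distribution toward masks that earn a higher reward. The function `reinforce_mask_search`, its parameters, and the toy reward are hypothetical; in the setting described above, the reward would presumably be the negative task loss of the patched model.

```python
import math
import random


def reinforce_mask_search(reward_fn, n_positions, steps=300, samples=16,
                          lr=0.5, seed=0):
    """Learn per-position patch probabilities with REINFORCE.

    reward_fn: callable taking a list of 0/1 ints (the mask) and returning
               a float; higher is better. Hypothetical stand-in for
               "negative loss of the patched model".
    Returns the learned probability of patching each position.
    """
    rng = random.Random(seed)
    logits = [0.0] * n_positions  # start at probability 0.5 everywhere
    for _ in range(steps):
        probs = [1.0 / (1.0 + math.exp(-z)) for z in logits]
        masks = [[1 if rng.random() < p else 0 for p in probs]
                 for _ in range(samples)]
        rewards = [reward_fn(m) for m in masks]
        baseline = sum(rewards) / samples  # mean reward reduces variance
        for i in range(n_positions):
            # For a Bernoulli with p = sigmoid(logit), the derivative of
            # log-probability of the sampled bit w.r.t. the logit is (bit - p).
            grad = sum((r - baseline) * (m[i] - probs[i])
                       for m, r in zip(masks, rewards)) / samples
            logits[i] += lr * grad  # gradient ascent on expected reward
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]
```

With a toy reward such as the negative Hamming distance to a hidden target mask, the learned probabilities drift toward 1 at the target's selected positions and toward 0 elsewhere. Positions whose final probability exceeds 0.5 would then be the ones to patch.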

By employing task vectors, the modified model reduced its computational demands by 22.5% while maintaining high accuracy. The experiments also showed improved task performance, with the patched model achieving better results than the original setup on several benchmarks. For instance, the model demonstrated improved mean intersection over union (mIOU) and lower mean squared error (MSE) in tasks like image segmentation and color enhancement.
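
For readers unfamiliar with the two metrics, the following sketch shows how mIOU and MSE are commonly computed. It is a generic illustration on flat Python lists, not the evaluation code used in the study; the function names and the convention of skipping classes absent from both prediction and ground truth are assumptions.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union for flat lists of integer class labels.

    Classes that appear in neither pred nor target are skipped
    (one common convention; others exist).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


def mse(pred, target):
    """Mean squared error between two equal-length lists of numbers."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
```

Higher mIOU means predicted regions overlap the ground truth more closely, while lower MSE means predicted pixel values are closer to the reference image.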

By harnessing capabilities already present within neural networks to identify and adjust task-specific vectors, the researchers have demonstrated a method to enhance a model’s adaptability and efficiency. The implications are broad: future models could be designed with an inherent ability to adapt on the fly to new tasks, which would widen their use in real-world applications.

In conclusion, the study effectively addresses the limitations of traditional computer vision models, which depend heavily on extensive task-specific datasets, by introducing an innovative method utilizing internal ‘task vectors.’ These vectors, specific activation patterns within the MAE-VQGAN model’s neural network, are identified and manipulated to enhance task adaptability without traditional training datasets. The results are significant: a 22.5% reduction in computational demands and improved performance across various tasks, highlighted by better mIOU and lower MSE scores.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This Study by UC Berkeley and Tel Aviv University Enhances Task Adaptability in Computer Vision Models Using Internal Network Task Vectors appeared first on MarkTechPost.
