Cohere, an emerging leader in the field of artificial intelligence, has announced the release of Rerank 3, its latest foundation model designed specifically for improving enterprise search and Retrieval Augmented Generation (RAG) systems. According to Cohere, the model is a significant upgrade over its predecessors in accuracy, efficiency, and cost-effectiveness for enterprise data management systems.

The primary advantage of Rerank 3 lies in its ability to process complex, semi-structured data across diverse formats like emails, invoices, JSON documents, code, and tables. Enterprises dealing with vast arrays of multi-aspect data can now enjoy streamlined search functionalities with Rerank 3. Its refined handling of various metadata fields, including recency, ensures highly relevant search results.
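To make the idea of multi-aspect data concrete, here is a minimal sketch of how a semi-structured record (an email with subject, date, and body) might be flattened into text for a reranker, keeping only the fields that should influence relevance. The helper `to_rerank_text`, the field names, and the sample record are all hypothetical illustrations; this is not a description of how Rerank 3 processes such data internally.

```python
import json
from datetime import date


def to_rerank_text(record: dict, fields: list[str]) -> str:
    """Render the chosen fields of a record as 'name: value' lines, in order.

    Hypothetical helper for illustration only; not part of any Cohere SDK.
    """
    lines = []
    for name in fields:
        if name not in record:
            continue  # skip fields that are missing from this record
        value = record[name]
        if isinstance(value, (dict, list)):
            # Nested structures are serialized as compact, stable JSON.
            value = json.dumps(value, sort_keys=True)
        elif isinstance(value, date):
            # Dates are written in ISO format so recency stays readable.
            value = value.isoformat()
        lines.append(f"{name}: {value}")
    return "\n".join(lines)


# Made-up sample record; "internal_id" is deliberately left out of ranking.
email = {
    "subject": "Q3 invoice overdue",
    "sent": date(2024, 4, 2),
    "from": "billing@example.com",
    "body": "Invoice 1047 is 30 days past due.",
    "internal_id": 99812,
}

text = to_rerank_text(email, ["subject", "sent", "body"])
print(text)
```

Keeping the field names next to their values means a metadata field such as the send date remains identifiable in the text that gets ranked.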

One of Rerank 3’s standout features is its capability to support over 100 languages, addressing the needs of global organizations that manage multilingual data sources. This enhancement not only simplifies data retrieval across different languages but also promotes inclusivity and accessibility for non-English-speaking users.

Moreover, Rerank 3 is designed to slot into existing search systems or legacy applications with minimal effort: according to Cohere, a single line of code is all that is needed. The model also handles longer documents (a context length of up to 4k tokens), which reduces the need to chunk data and helps preserve the context of the documents being searched.
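The integration pattern described here is a second-stage reranking step: an existing search system returns candidates, and one extra call reorders them before they are shown or passed to a generator. The sketch below illustrates that shape under stated assumptions. The `rerank` function and the `overlap_score` scorer are hypothetical; the scorer is a toy word-overlap measure standing in for a real reranking model, and it is not Rerank 3 or Cohere's API.

```python
from typing import Callable, Sequence


def rerank(
    query: str,
    candidates: Sequence[str],
    score_fn: Callable[[str, str], float],
    top_n: int = 3,
) -> list[tuple[int, float]]:
    """Return (original_index, score) pairs for the top_n candidates.

    score_fn is pluggable: in a real system it would call a reranking model.
    """
    scored = [(i, score_fn(query, doc)) for i, doc in enumerate(candidates)]
    # Highest score first; Python's sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


def overlap_score(query: str, doc: str) -> float:
    """Toy stand-in scorer: fraction of query words that appear in the doc."""
    q = set(query.lower().split())
    d = set(doc.lower().split())
    return len(q & d) / len(q) if q else 0.0


# Candidates as they might come back from an existing first-stage search.
candidates = [
    "Shipping times for international orders",
    "How to reset your account password",
    "Password requirements and account security tips",
]

# The one added step: reorder the first-stage results.
results = rerank("reset account password", candidates, overlap_score, top_n=2)
for index, score in results:
    print(f"{score:.2f}  {candidates[index]}")
```

Because the scorer is passed in as a parameter, the surrounding search code does not change when the toy function is swapped for a call to a hosted model.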

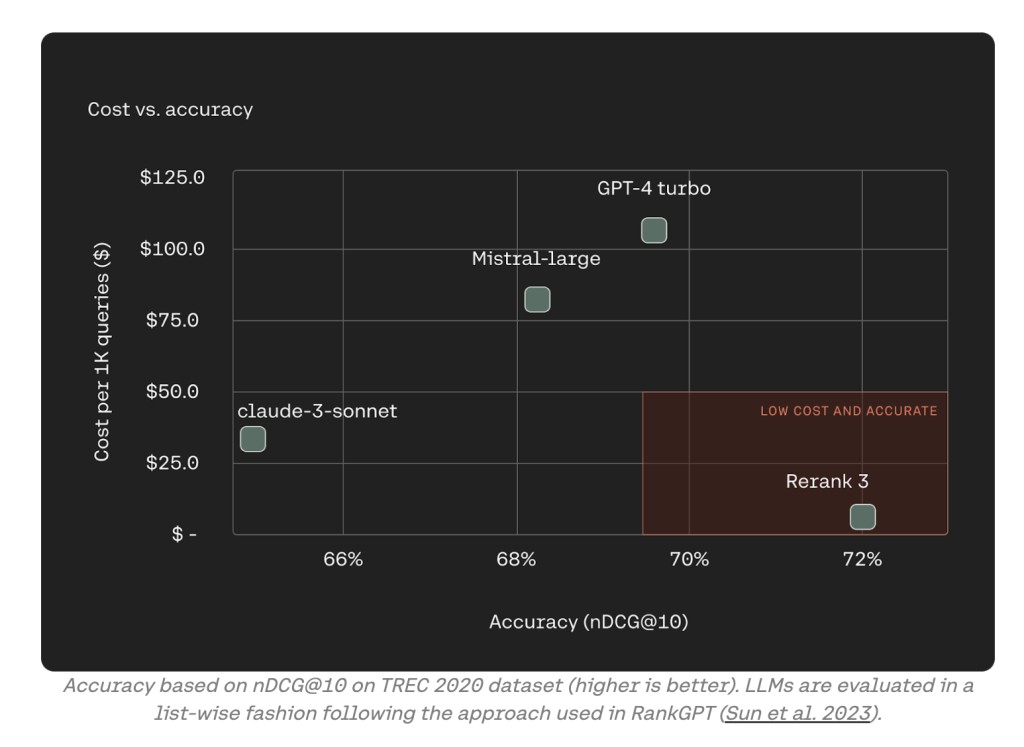

In terms of performance, Cohere reports a significant leap forward. Rerank 3 achieves up to three times lower latency in search operations than the previous version, making it well suited to time-sensitive applications in domains like e-commerce and customer service. Furthermore, Cohere states that pairing Rerank 3 with its Command R series models for RAG applications cuts total cost of ownership (TCO) by up to 98% compared with other generative large language models (LLMs), without sacrificing quality.

The enhanced capabilities of Rerank 3 do not just stop at improving search efficiency and accuracy. They also extend to special data types like JSON and tabular data in databases, CSVs, and Excel sheets, which are crucial for enterprises but traditionally challenging for retrieval models.
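As a rough illustration of what searching tabular data involves, the sketch below turns CSV rows into one document per row, keeping the column headers attached to each value so a reranker can tell a price from a product code. The functions `rows_to_documents` and `row_to_text`, the column names, and the sample data are hypothetical; the article does not describe how Rerank 3 ingests tables, so this is only one plausible way to prepare them.

```python
import csv
import io


def rows_to_documents(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into one dict per row, keyed by the header names."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [dict(row) for row in reader]


def row_to_text(row: dict[str, str]) -> str:
    """Render a row as 'column: value' pairs so each value keeps its label."""
    return " | ".join(f"{column}: {value}" for column, value in row.items())


# Made-up sample table for illustration.
sample = "sku,name,price\nA-100,Desk lamp,24.99\nB-200,Office chair,129.00\n"

docs = rows_to_documents(sample)
texts = [row_to_text(row) for row in docs]
for line in texts:
    print(line)
```

The same per-row dictionaries could be built from a database query or a spreadsheet export, since the step only needs column names and values.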

As we move forward, Cohere’s Rerank 3 model represents a key development in the AI landscape, particularly for enterprise search solutions. Its comprehensive approach to handling diverse and complex data structures and its cost-efficiency and multilingual support position Rerank 3 as an essential tool for any data-driven organization looking to optimize its information retrieval processes.

Key Takeaways:

Enhanced Efficiency: Rerank 3 handles complex data and supports multilingual retrieval, improving search efficiency across varied enterprise data formats.

Reduced Costs: Integration with Cohere’s Command R models drastically reduces the TCO for RAG systems, by up to 98% compared with other LLMs.

Improved Accuracy and Lower Latency: The model delivers enhanced accuracy with lower latency, crucial for businesses requiring fast and reliable search results.

Ease of Integration: Rerank 3 can be seamlessly integrated into existing systems with just a single line of code, facilitating smooth adoption and upgrade paths for enterprises.

Multifaceted Data Handling: The model’s capability to process and search through semi-structured data like JSON and tabular data broadens the scope of searchable enterprise data, enhancing retrieval capabilities.

Developers and businesses can access Rerank 3 on both Cohere’s hosted API and Amazon SageMaker. The model is also available directly through the inference API in Elasticsearch, where it can perform semantic reranking on an existing Elasticsearch index.

The post Cohere AI Unveils Rerank 3: A Cutting-Edge Foundation Model Designed to Optimize Enterprise Search and RAG (Retrieval Augmented Generation) Systems appeared first on MarkTechPost.