Artificial intelligence has advanced rapidly, with large language models (LLMs) emerging as foundational tools across a wide range of applications. However, the high computational cost of training these models has created barriers to entry, limiting accessibility and hindering wider development. Open-source initiatives such as BLOOM, StarCoder, StarCoder-2, Pythia, and OLMo aim to democratize access to pretrained LLMs, stimulating innovation and allowing researchers and developers to build on existing advances. Despite these contributions, several challenges persist in open-source LLM development.

First, numerous studies have shown that LLMs struggle with non-English text, particularly in low- and extremely low-resource languages, because their training data consists predominantly of English. Developing multilingual models is therefore essential to democratizing LLMs and narrowing performance gaps across languages. Second, continual pretraining (further updating a pretrained model on a new data distribution to extend its capabilities) often leads to catastrophic forgetting, in which the model loses previously acquired knowledge. This problem is exacerbated when continual pretraining spans languages with diverse grammatical and lexical structures. Finally, compliance with recent regulations mandating safe and secure AI development is another critical aspect that open-source LLM development often overlooks, especially for multilingual models.

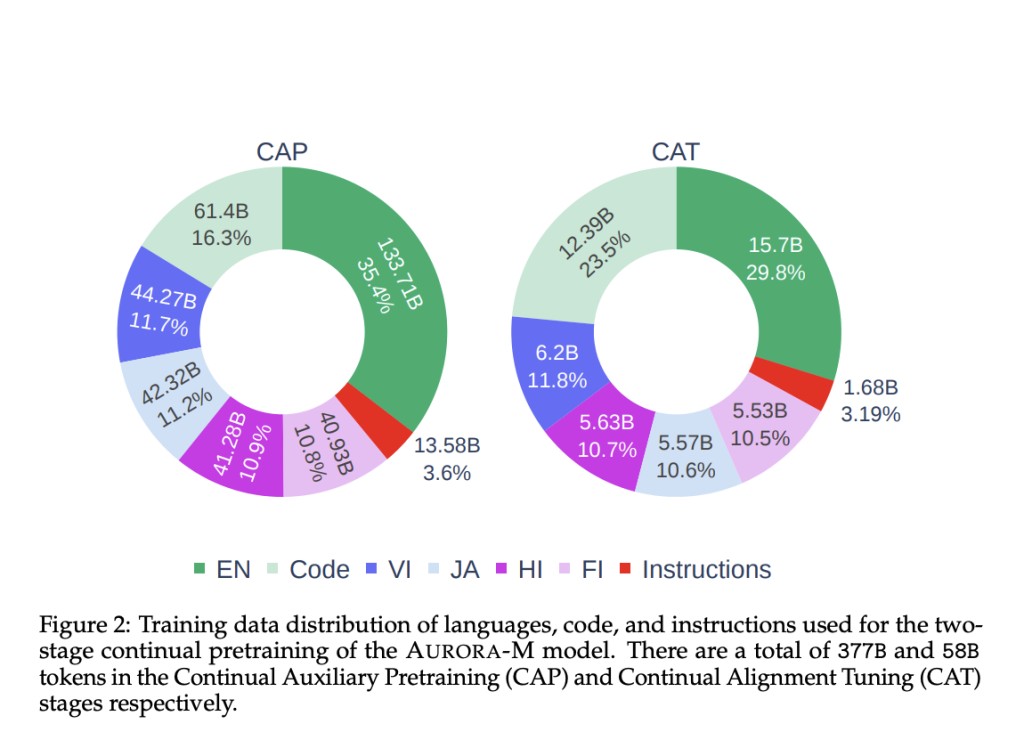

To address these challenges, the researchers developed AURORA-M, an open-source multilingual LLM with 15 billion parameters. AURORA-M is tailored to six languages: five natural languages (English, Finnish, Hindi, Japanese, and Vietnamese) plus code. Starting from the StarCoderPlus model, AURORA-M underwent continual pretraining on an additional 435 billion tokens, bringing its total training token count, including the tokens StarCoderPlus had already seen, to 2 trillion.

This extended pretraining exposes AURORA-M to a broad mix of languages and code. Safety was also a core design principle: AURORA-M is the first open-source multilingual LLM to be fine-tuned on a collection of human-reviewed safety instructions addressing concerns outlined in the Biden-Harris Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

The researchers curated an extensive dataset of instruction-response pairs to strengthen AURORA-M's safety and robustness. The dataset targets key concerns in the Executive Order, including harm prevention, cyberattacks, illegal activities, privacy infringement, and attempts to circumvent safety controls. By fine-tuning AURORA-M on this dataset, the researchers aimed to align the model with legal standards and responsible AI development practices.

To evaluate AURORA-M, the researchers ran assessments across a range of tasks spanning multiple domains and languages. The results show that AURORA-M avoids catastrophic forgetting on English and coding tasks while achieving competitive performance on multilingual benchmarks. Safety evaluations support the model's adherence to responsible AI development practices.

In summary, the paper presents AURORA-M as a significant step toward democratizing access to multilingual and safe LLMs. By addressing accessibility, language diversity, continual learning, and legal compliance, the model opens new possibilities for researchers and developers worldwide. Although AURORA-M prioritizes safety and responsible development, users should still exercise caution and assess the potential implications of generated content.

The post AURORA-M: A 15B Parameter Multilingual Open-Source AI Model Trained in English, Finnish, Hindi, Japanese, Vietnamese, and Code appeared first on MarkTechPost.