As digital art becomes increasingly intertwined with machine learning, generative models have changed how graphic designers and artists conceive and realize their creative visions. Models such as Stable Diffusion and DALL-E stand out for their ability to distill vast troves of online imagery into distinct artistic styles. This capability, while remarkable, raises a difficult question: does a piece of generated art merely mimic the style of existing works, or is it a unique creation?

Researchers from New York University, ELLIS Institute, and the University of Maryland have delved deeper into the nuances of style replication by generative models. Their Contrastive Style Descriptors (CSD) model analyzes images’ artistic styles by emphasizing stylistic over semantic attributes. Developed through self-supervised learning and refined with a unique dataset, LAION-Styles, the model identifies and quantifies the stylistic nuances between images. Their study also led to the development of a framework aimed at dissecting and understanding the stylistic DNA of images. Unlike earlier methods prioritizing semantic similarity, this approach is distinctive for its focus on the subjective attributes of style, encompassing elements such as color palettes, texture, and form.

The cornerstone of this research is a specialized dataset, LAION-Styles, designed to bridge the gap between the subjective nature of style and the objective goals of the study. The dataset is the foundation for a multi-label contrastive learning scheme that quantifies the stylistic correlations between generated images and their potential inspirations. This methodology aims to capture style as humans perceive it, while acknowledging the complexity and subjectivity inherent in artistic work.
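To make the idea of a multi-label contrastive scheme more concrete, here is a minimal sketch in NumPy. It is not the authors' implementation: the function name `multilabel_contrastive_loss`, the `temperature` value, and the rule that two images count as a positive pair when they share at least one style tag are all assumptions made for illustration. The loss is a generic supervised contrastive objective adapted to multi-hot labels.

```python
import numpy as np


def multilabel_contrastive_loss(embeddings, labels, temperature=0.1):
    """Generic multi-label contrastive loss (illustrative, hypothetical helper).

    embeddings: (n, d) float array of image descriptors, n >= 2.
    labels:     (n, k) multi-hot array; labels[i, j] = 1 if image i has style tag j.
    Two images are treated as a positive pair when they share at least one tag
    (an assumption for this sketch, not a detail taken from the paper).
    """
    embeddings = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)

    # L2-normalise so that dot products are cosine similarities.
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    logits = z @ z.T / temperature

    n = z.shape[0]
    self_mask = np.eye(n, dtype=bool)
    positives = (labels @ labels.T > 0) & ~self_mask

    # Log-softmax over every other sample in the batch (self excluded).
    logits = np.where(self_mask, -np.inf, logits)
    row_max = logits.max(axis=1, keepdims=True)
    log_denominator = row_max + np.log(
        np.exp(logits - row_max).sum(axis=1, keepdims=True)
    )
    log_prob = logits - log_denominator

    # Average the log-probability of the positives for each anchor that has any.
    pos_counts = positives.sum(axis=1)
    has_pos = pos_counts > 0
    if not has_pos.any():
        return 0.0
    pos_log_prob = np.where(positives, log_prob, 0.0).sum(axis=1)
    per_anchor = -pos_log_prob[has_pos] / pos_counts[has_pos]
    return float(per_anchor.mean())


# Toy batch: images 0 and 1 point one way, images 2 and 3 another.
toy_embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
matching_tags = np.array([[1, 0], [1, 0], [0, 1], [0, 1]])
mismatched_tags = np.array([[1, 0], [0, 1], [1, 0], [0, 1]])

loss_matching = multilabel_contrastive_loss(toy_embeddings, matching_tags)
loss_mismatched = multilabel_contrastive_loss(toy_embeddings, mismatched_tags)
```

In this toy batch the loss is lower when the style tags agree with the geometry of the embeddings than when they do not, which is the behavior a contrastive objective is meant to reward.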

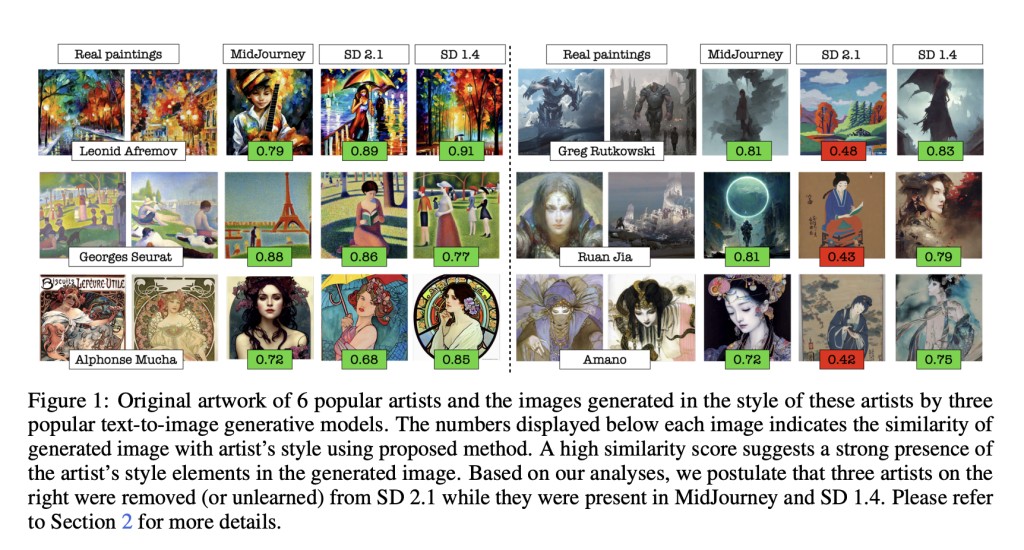

Applying the framework to Stable Diffusion yields intriguing insights into the model’s ability to replicate the styles of various artists. The research reveals a spectrum of fidelity in style replication, ranging from near-perfect mimicry to looser interpretations. This variability underscores the critical role of training datasets in shaping the output of generative models, suggesting that the model favors certain styles depending on how well they are represented in the dataset.

The research also sheds light on the quantitative aspects of style replication. For instance, the methodology’s application to Stable Diffusion highlights how the model scores on style similarity metrics, offering a granular view of its capabilities and limitations. These findings are pivotal not only for artists vigilant about the integrity of their stylistic signatures but also for users seeking to understand the origins and authenticity of their generated artworks.
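As a rough illustration of what a style similarity score could look like in practice, the sketch below compares a generated image's style descriptor against a set of reference descriptors using cosine similarity and returns the closest matches. It assumes the descriptors have already been extracted by some style model; the helper names `style_similarity` and `top_k_matches` and the toy vectors are hypothetical and are not taken from the paper or its code release.

```python
import numpy as np


def style_similarity(query, references):
    """Cosine similarity between one descriptor and each row of `references`."""
    query = np.asarray(query, dtype=float)
    references = np.asarray(references, dtype=float)
    q = query / np.linalg.norm(query)
    r = references / np.linalg.norm(references, axis=1, keepdims=True)
    return r @ q


def top_k_matches(query, references, k=3):
    """Return (index, score) pairs for the k most similar references."""
    scores = style_similarity(query, references)
    order = np.argsort(-scores)[:k]  # indices sorted by descending similarity
    return [(int(i), float(scores[i])) for i in order]


# Toy descriptors standing in for real style embeddings (hypothetical data).
reference_descriptors = np.array(
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]
)
generated_descriptor = np.array([1.0, 0.0, 0.0])

matches = top_k_matches(generated_descriptor, reference_descriptors, k=2)
```

A score near 1 would indicate that the generated image sits very close to a reference in descriptor space, while lower scores would correspond to the looser stylistic resemblance described above.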

The framework prompts a reevaluation of how generative models interact with diverse styles. It posits that these models may exhibit preferences for certain styles over others, influenced heavily by the dominance of those styles in their training data. This phenomenon raises pertinent questions about the inclusivity and diversity of styles that generative models can faithfully emulate, spotlighting the nuanced interplay between input data and artistic output.

In conclusion, the study addresses a pivotal challenge of generative art: quantifying the extent to which models like Stable Diffusion replicate the styles of training data images. By devising a framework that emphasizes stylistic over semantic elements, grounded in the LAION-Styles dataset and a multi-label contrastive learning scheme, the researchers offer insights into the mechanics of style replication. Their findings quantify style similarities and highlight the critical influence of training datasets on the outputs of generative models.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.

The post Researchers from NYU and the University of Maryland Unveil an Artificial Intelligence Framework for Understanding and Extracting Style Descriptors from Images appeared first on MarkTechPost.
