Robotics has advanced significantly. For many years, there have been expectations of human-like robots that can navigate our environments, perform complex tasks, and work alongside humans. Examples include robots conducting precise surgical procedures, building intricate structures, assisting in disaster response, and cooperating efficiently with humans in settings such as factories, offices, and homes. However, actual progress toward such robots has historically been limited.

Researchers from NVIDIA, Carnegie Mellon University, UC Berkeley, UT Austin, and UC San Diego introduced HOVER, a unified neural controller aimed at enhancing humanoid robot capabilities. This research proposes a multi-mode policy distillation framework, integrating different control strategies into one cohesive policy, thereby making a notable advancement in humanoid robotics.

The Achilles Heel of Humanoid Robotics: The Control Conundrum

Imagine a robot that can execute a perfect backflip but then struggles to grasp a doorknob.

The problem? Specialization.

Humanoid robots are incredibly versatile platforms, capable of supporting a wide range of tasks, including bimanual manipulation, bipedal locomotion, and complex whole-body control. However, despite impressive advances in these areas, researchers have typically employed different control formulations designed for specific scenarios.

- Some controllers excel at locomotion, using “root velocity tracking” to guide movement. This approach focuses on controlling the robot’s overall movement through space.

- Others prioritize manipulation, relying on “joint angle tracking” for precise movements. This approach allows for fine-grained control of the robot’s limbs.

- Still others use “kinematic tracking” of key points for teleoperation. This method lets a human operator control the robot by having it track key points on the operator’s own body.

Each speaks a different control language, creating a fragmented landscape where robots are masters of one task and inept at others. Switching between tasks has been clunky, inefficient, and often impossible. This specialization creates practical limitations. For example, a robot designed for bipedal locomotion on uneven terrain using root velocity tracking would struggle to transition smoothly to precise bimanual manipulation tasks that require joint angle or end-effector tracking.

In addition, many pre-trained manipulation policies operate in different configuration spaces, such as joint angles and end-effector positions. These constraints highlight the need for a unified low-level humanoid controller that can adapt to diverse control modes.

HOVER: The Unified Field Theory of Robotic Control

HOVER is a paradigm shift. It’s a “generalist policy”: a single neural network that harmonizes diverse control modes, enabling seamless transitions and unprecedented versatility. It supports over 15 control configurations useful for real-world applications on a 19-DOF humanoid robot, a command space that encompasses most of the modes used in previous research.

- Learning from the Masters: Human Motion Imitation

HOVER’s brilliance lies in its foundation: learning from human movement itself. By training an “oracle motion imitator” on a massive dataset of human motion capture (MoCap) data, HOVER absorbs the fundamental principles of balance, coordination, and efficient movement. This approach exploits the natural adaptability and efficiency of human movement, giving the policy rich motor priors that can be reused across multiple control modes.

The researchers ground the training process in human-like motion, allowing the policy to develop a deeper understanding of balance, coordination, and motion control, crucial elements for effective whole-body humanoid behavior.

- From Oracle to Prodigy: Policy Distillation

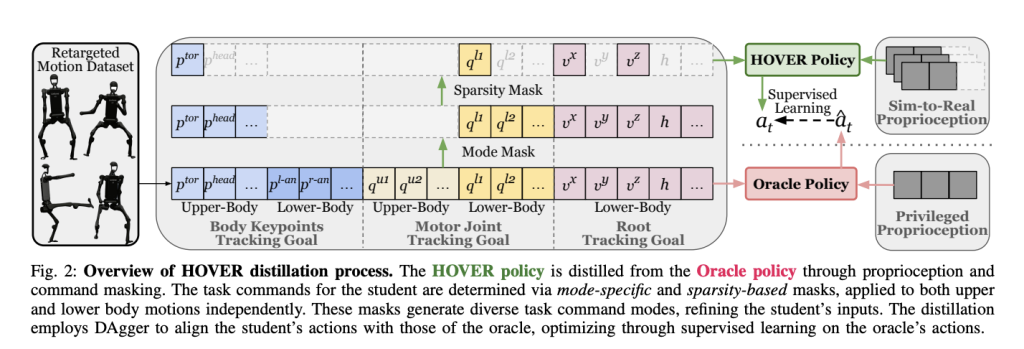

The magic truly happens through “policy distillation.” The oracle policy, the master imitator, teaches its skills to a “student policy” (HOVER). Through command masking and a DAgger framework, HOVER learns diverse control modes, from kinematic position tracking to joint angle control and root tracking. The result is a “generalist” that handles all of these control scenarios with a single policy.

Through policy distillation, these motor skills are transferred from the oracle policy into a single “generalist policy” capable of handling multiple control modes. The resulting multi-mode policy supports diverse control inputs and outperforms policies trained individually for each mode. The researchers hypothesize that this superior performance stems from the policy using physical knowledge shared across modes, such as maintaining balance, producing human-like motion, and controlling limbs precisely. These shared skills improve generalization and lead to better performance across all modes, whereas single-mode policies often overfit to specific reward structures and training environments.

HOVER’s implementation involves training an oracle policy and then distilling its knowledge into a versatile controller. The oracle policy processes proprioceptive information (body positions, orientations, velocities, and previous actions) alongside reference poses to generate target motions. The oracle achieves robust motion imitation using a carefully designed reward with penalty, regularization, and task components. The student policy then learns from this oracle through a DAgger framework, incorporating mode-based and sparsity-based masking techniques that allow selective tracking of different body parts. This distillation process minimizes the action difference between teacher and student, yielding a unified controller capable of handling diverse control scenarios.
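The teacher-student loop described above can be sketched in a few lines. This is a minimal illustration under heavy assumptions, not HOVER’s implementation: the oracle is replaced by a fixed linear map, the student is a linear policy fit by least squares instead of a neural network trained by gradient descent, the dynamics are a made-up toy, and the command layout (`MODES`) and masking probabilities are hypothetical. It does follow the DAgger pattern: the student’s own rollouts decide which states are visited, the oracle labels every visited state with its action, and the student is refit on the aggregated dataset to minimize the squared action difference.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS_DIM, CMD_DIM, ACT_DIM, HORIZON = 8, 6, 4, 50

# Hypothetical command layout: entries of the command vector owned by each mode.
MODES = {"root": [0, 1], "hands": [2, 3], "joints": [4, 5]}

# Hypothetical stand-in for the trained oracle: a fixed linear map from
# (proprioception, full command) to target joint positions.
W_ORACLE = rng.normal(size=(ACT_DIM, OBS_DIM + CMD_DIM))


def oracle_action(obs, cmd):
    return W_ORACLE @ np.concatenate([obs, cmd])


def sample_mask():
    # Mode mask: keep the command entries of a random subset of modes.
    mask = np.zeros(CMD_DIM)
    for idx in MODES.values():
        if rng.random() < 0.5:
            mask[idx] = 1.0
    # Sparsity mask: additionally drop each kept entry with probability 0.2.
    return mask * (rng.random(CMD_DIM) > 0.2)


def student_input(obs, cmd, mask):
    # The student sees proprioception, the masked command, and the mask itself.
    return np.concatenate([obs, cmd * mask, mask])


def toy_dynamics(obs, action):
    # Placeholder dynamics so that visited states depend on the executed actions.
    return 0.9 * obs + 0.1 * np.tanh(np.resize(action, OBS_DIM))


def dagger(iterations=5):
    W = np.zeros((ACT_DIM, OBS_DIM + 2 * CMD_DIM))
    X, Y = [], []
    for i in range(iterations):
        obs = rng.normal(size=OBS_DIM)
        cmd, mask = rng.normal(size=CMD_DIM), sample_mask()
        for _ in range(HORIZON):
            x = student_input(obs, cmd, mask)
            X.append(x)
            Y.append(oracle_action(obs, cmd))  # oracle labels every visited state
            # Iteration 0 executes the oracle; later iterations execute the student.
            act = Y[-1] if i == 0 else W @ x
            obs = toy_dynamics(obs, act)
        # Refit on all aggregated data to minimize the squared action difference.
        sol, *_ = np.linalg.lstsq(np.array(X), np.array(Y), rcond=None)
        W = sol.T
    return W, np.array(X), np.array(Y)


W, X, Y = dagger()
mse = np.mean((X @ W.T - Y) ** 2)
print(f"student/oracle action MSE on aggregated data: {mse:.4f}")
```

In this sketch the mask is passed to the student alongside the masked command, an assumed design choice that lets one network distinguish “this target is zero” from “this target is not commanded.”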

The researchers formulate humanoid control as a goal-conditioned reinforcement learning task where the policy is trained to track real-time human motion. The state includes the robot’s proprioception and a unified target goal state. Using these inputs, they define a reward function for policy optimization. The actions represent target joint positions that are fed into a PD controller. The system employs Proximal Policy Optimization (PPO) to maximize cumulative discounted rewards, essentially training the humanoid to follow target commands at each timestep.
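Two pieces of this formulation are easy to make concrete. The sketch below assumes a standard PD law with zero target joint velocity and uses made-up gains (`kp`, `kd`); HOVER’s actual gains are not given in this article. The second function computes the discounted return that PPO maximizes in expectation; it is not PPO itself, which additionally needs a clipped surrogate objective, a value function, and advantage estimation.

```python
import numpy as np


def pd_torque(q_target, q, dq, kp, kd):
    # PD law assumed here: pull each joint toward the policy's target position
    # and damp its velocity (target joint velocity taken as zero).
    return kp * (q_target - q) - kd * dq


def discounted_return(rewards, gamma=0.99):
    # sum_t gamma**t * r_t, accumulated backwards in time.
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical gains and joint states for a 2-joint example.
tau = pd_torque(q_target=np.array([0.2, 0.3]), q=np.array([0.0, 0.5]),
                dq=np.array([0.1, -0.2]), kp=100.0, kd=2.0)
print(tau)  # approximately [19.8, -19.6]
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
```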

The research methodology uses motion retargeting to create feasible humanoid movements from human motion datasets. The three-step process consists of computing the robot’s keypoint positions through forward kinematics, fitting the SMPL body model to align with these keypoints, and retargeting the AMASS dataset by matching corresponding points between the two models using gradient descent. A “sim-to-data” procedure converts the large-scale human motion dataset into feasible humanoid motions, establishing a strong foundation for training the controller.
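The retargeting step, matching keypoints between models by gradient descent, can be illustrated on a toy problem. This sketch assumes a hypothetical planar two-link arm in place of the humanoid and synthetic target keypoints in place of an SMPL fit to AMASS; only the core idea carries over: compute keypoints with forward kinematics, then adjust joint angles by gradient descent to shrink the squared distance to the target keypoints.

```python
import numpy as np

LINK1, LINK2 = 1.0, 1.0  # hypothetical link lengths of a toy planar 2-link arm


def forward_kinematics(q):
    # Keypoints of the toy "robot": elbow and hand positions in the plane.
    elbow = LINK1 * np.array([np.cos(q[0]), np.sin(q[0])])
    hand = elbow + LINK2 * np.array([np.cos(q[0] + q[1]), np.sin(q[0] + q[1])])
    return elbow, hand


def loss_and_grad(q, elbow_target, hand_target):
    elbow, hand = forward_kinematics(q)
    e_err, h_err = elbow - elbow_target, hand - hand_target
    # Analytic derivatives of each keypoint with respect to the joint angles.
    d_upper = LINK1 * np.array([-np.sin(q[0]), np.cos(q[0])])
    d_lower = LINK2 * np.array([-np.sin(q[0] + q[1]), np.cos(q[0] + q[1])])
    J_elbow = np.column_stack([d_upper, np.zeros(2)])
    J_hand = np.column_stack([d_upper + d_lower, d_lower])
    loss = e_err @ e_err + h_err @ h_err
    grad = 2.0 * (J_elbow.T @ e_err + J_hand.T @ h_err)
    return loss, grad


def retarget(elbow_target, hand_target, lr=0.05, steps=3000):
    q = np.zeros(2)  # start from the zero pose
    for _ in range(steps):
        _, grad = loss_and_grad(q, elbow_target, hand_target)
        q -= lr * grad  # plain gradient descent on the keypoint-matching loss
    return q, loss_and_grad(q, elbow_target, hand_target)[0]


# Synthetic target keypoints; the real pipeline derives them from an SMPL fit to AMASS.
elbow_t, hand_t = forward_kinematics(np.array([0.7, -0.4]))
q_fit, final_loss = retarget(elbow_t, hand_t)
print(q_fit, final_loss)
```

A full-body version would also need 3D rotations, joint limits, and optimization over whole motion sequences; the hand-derived Jacobians here only keep the example dependency-free.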

The research team designed a comprehensive command space for humanoid control that overcomes the limitations of previous approaches. Their unified framework accommodates multiple control modes simultaneously, including kinematic position tracking, joint angle tracking, and root tracking. This design satisfies key criteria of generality (supporting various input devices) and atomicity (enabling arbitrary combinations of control options).
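One way to picture a command space that is both general and atomic is a single fixed-layout vector plus a binary mask marking which entries are active. The slot names, keypoint set, and layout below are hypothetical and do not reproduce HOVER’s actual command definition; only the 19 joint-angle slots follow the 19-DOF robot mentioned in this article.

```python
import numpy as np

NUM_JOINTS = 19  # matches the 19-DOF Unitree H1 described in the article
KEYPOINTS = ("head", "left_hand", "right_hand")  # hypothetical keypoint set

# Hypothetical unified layout: slot name -> number of entries, in a fixed order.
SLOTS = {"root_velocity": 3, "joint_angles": NUM_JOINTS}
SLOTS.update({f"kp_{name}": 3 for name in KEYPOINTS})

# Precompute where each slot lives in the flat command vector.
OFFSETS, start = {}, 0
for slot, size in SLOTS.items():
    OFFSETS[slot] = slice(start, start + size)
    start += size
CMD_DIM = start


def build_command(**targets):
    """Pack any subset of slots into one command vector plus a binary mask."""
    cmd, mask = np.zeros(CMD_DIM), np.zeros(CMD_DIM)
    for slot, value in targets.items():
        sl = OFFSETS[slot]  # unknown slot names raise KeyError
        cmd[sl] = np.asarray(value, dtype=float)
        mask[sl] = 1.0
    return cmd, mask


# Locomotion-style mode: root velocity only.
cmd_a, mask_a = build_command(root_velocity=[0.5, 0.0, 0.0])
# Teleoperation-style mode: head and both hands, plus root velocity.
cmd_b, mask_b = build_command(root_velocity=[0.0, 0.0, 0.2],
                              kp_head=[0.0, 0.0, 1.6],
                              kp_left_hand=[0.3, 0.2, 1.0],
                              kp_right_hand=[0.3, -0.2, 1.0])
print(CMD_DIM, int(mask_a.sum()), int(mask_b.sum()))  # 31 3 12
```

Because every mode is just a different mask over the same vector, any combination of slots can be expressed without a new controller, which is the atomicity property the researchers describe.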

HOVER Unleashed: Performance That Redefines Robotics

HOVER’s capabilities are demonstrated through rigorous testing:

- Dominating the Specialists:

HOVER outperforms specialized controllers. The research team evaluated HOVER against specialist policies and alternative multi-mode training approaches through comprehensive tests in both IsaacGym simulation and real-world experiments on the Unitree H1 robot.

To test whether HOVER could outperform specialized policies, they compared it against several specialists, including ExBody, HumanPlus, H2O, and OmniH2O, each designed for a different tracking objective such as joint angles, root velocity, or specific key points.

In evaluations using the retargeted AMASS dataset, HOVER consistently demonstrated superior generalization, outperforming specialists in at least 7 out of 12 metrics in every command mode. HOVER performed better than specialists trained for specific useful control modes like left-hand, right-hand, two-hand, and head tracking.

- Multi-Mode Mastery: A Clean Sweep

To compare HOVER with other multi-mode training methods, the researchers implemented a baseline that uses the same masking process but is trained from scratch with reinforcement learning. Radar charts of tracking errors show HOVER achieving lower errors across all 32 metrics spanning eight distinct control modes. This result underscores the effectiveness of distilling knowledge from an oracle policy that tracks full-body kinematics rather than training with reinforcement learning from scratch.

- From Simulation to Reality: Real-World Validation

HOVER’s prowess is not confined to the digital world. The experimental setup included motion tracking evaluations on the retargeted AMASS dataset in simulation and 20 standing motion sequences for real-world tests on the 19-DOF Unitree H1 platform, which weighs 51.5 kg and stands 1.8 m tall. The experiments were structured to answer three key questions about HOVER’s generalizability, comparative performance, and real-world transferability.

On the H1, HOVER accurately tracked complex standing motions and dynamic running movements, and it transitioned smoothly between control modes during locomotion and teleoperation. Experiments in both simulation and on the physical robot show that HOVER switches seamlessly between control modes and delivers better multi-mode control than the baseline approaches.

HOVER: The Future of Humanoid Potential

HOVER unlocks the vast potential of humanoid robots. Its multi-mode generalist policy enables seamless transitions between modes, making it robust and versatile.

Imagine a future where humanoids:

- Perform intricate surgery with unparalleled precision.

- Construct complex structures with human-like dexterity.

- Respond to disasters with agility and resilience.

- Collaborate seamlessly with humans in factories, offices, and homes.

The age of truly versatile, capable, and intelligent humanoids is on the horizon, and HOVER is leading the way. Together, the researchers’ evaluations illustrate HOVER’s ability to handle diverse real-world control modes with better performance than specialist policies.

Sources:

- https://arxiv.org/pdf/2410.21229

- https://github.com/NVlabs/HOVER/tree/main

Thanks to the NVIDIA team for the thought leadership and resources for this article. The NVIDIA team has supported and sponsored this content.

The post NVIDIA AI Releases HOVER: A Breakthrough AI for Versatile Humanoid Control in Robotics appeared first on MarkTechPost.
