Customers need better accuracy to take generative AI applications into production. In a world where decisions are increasingly data-driven, the integrity and reliability of information are paramount. To address this, customers often begin by enhancing generative AI accuracy through vector-based retrieval systems and the Retrieval Augmented Generation (RAG) architectural pattern, which integrates dense embeddings to ground AI outputs in relevant context. When even greater precision and contextual fidelity are required, the solution evolves to graph-enhanced RAG (GraphRAG), where graph structures provide enhanced reasoning and relationship modeling capabilities.

Lettria, an AWS Partner, demonstrated that integrating graph-based structures into RAG workflows improves answer precision by up to 35% compared to vector-only retrieval methods. This enhancement is achieved by using the graph’s ability to model complex relationships and dependencies between data points, providing a more nuanced and contextually accurate foundation for generative AI outputs.

In this post, we explore why GraphRAG is more comprehensive and explainable than vector RAG alone, and how you can implement this approach using AWS services and Lettria.

How graphs make RAG more accurate

In this section, we discuss how graphs make RAG more accurate.

Capturing complex human queries with graphs

Human questions are inherently complex, often requiring the connection of multiple pieces of information. Traditional data representations struggle to accommodate this complexity without losing context. Graphs, however, are designed to mirror the way humans naturally think and ask questions. They represent data in a machine-readable format that preserves the rich relationships between entities.

By modeling data as a graph, you capture more of the context and intent. This means your RAG application can access and interpret data in a way that aligns closely with human thought processes. The result is a more accurate and relevant answer to complex queries.

Avoiding loss of context in data representation

When you rely solely on vector similarity for information retrieval, you miss out on the nuanced relationships that exist within the data. Translating natural language into vectors reduces the richness of the information, potentially leading to less accurate answers. Also, end-user queries are not always semantically aligned with the useful information in the provided documents, so vector search can exclude key data points needed to build an accurate answer.

Graphs maintain the natural structure of the data, allowing for a more precise mapping between questions and answers. They enable the RAG system to understand and navigate the intricate connections within the data, leading to improved accuracy.

Lettria demonstrated an improvement in answer correctness from 50% with traditional RAG to more than 80% using GraphRAG within a hybrid approach. The testing covered datasets from finance (Amazon financial reports), healthcare (scientific studies on COVID-19 vaccines), industry (technical specifications for aeronautical construction materials), and law (European Union directives on environmental regulations).

Proving that graphs are more accurate

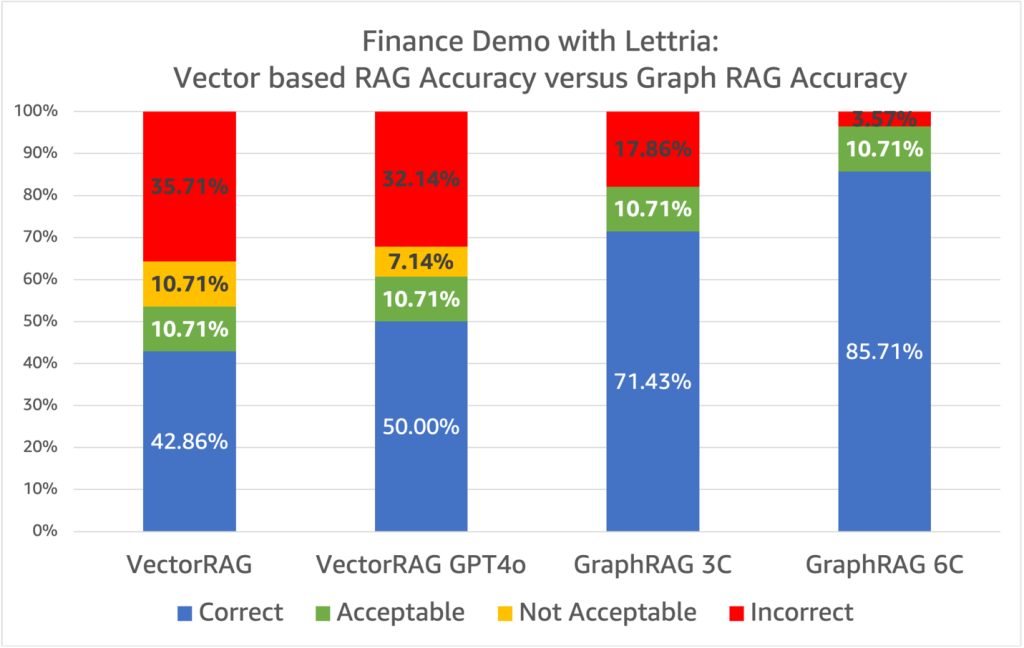

To substantiate the accuracy improvements of graph-enhanced RAG, Lettria conducted a series of benchmarks comparing their GraphRAG solution—a hybrid RAG using both vector and graph stores—with a baseline vector-only RAG reference.

Lettria’s hybrid methodology to RAG

Lettria’s hybrid approach to question answering combines the best of vector similarity and graph searches to optimize the performance of RAG applications on complex documents. By integrating these two retrieval systems, Lettria draws on both the structured precision of graphs and the semantic flexibility of vectors when handling intricate queries.

GraphRAG specializes in using fine-grained, contextual data, ideal for answering questions that require explicit connections between entities. In contrast, vector RAG excels at retrieving semantically relevant information, offering broader contextual insights. This dual system is further reinforced by a fallback mechanism: when one system struggles to provide relevant data, the other compensates. For example, GraphRAG pinpoints explicit relationships when available, whereas vector RAG fills in relational gaps or enhances context when structure is missing.
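To make the fallback idea concrete, here is a minimal sketch of hybrid retrieval in plain Python. It is not Lettria's implementation: the in-memory triples, the toy two-dimensional embeddings, the `min_graph_facts` threshold, and the entity names are all hypothetical. Graph facts that mention a query entity are preferred; when the graph has nothing, the sketch falls back on cosine-similarity vector search, and otherwise it appends vector passages as extra context.

```python
import math
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    embedding: list[float]  # toy embedding; a real system would use a model


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_search(query_emb, passages, k=2):
    """Return the texts of the k passages most similar to the query embedding."""
    ranked = sorted(passages, key=lambda p: cosine(query_emb, p.embedding), reverse=True)
    return [p.text for p in ranked[:k]]


def graph_search(query_entities, triples):
    """Return explicit facts for triples whose subject or object is a query entity."""
    return [f"{s} {r} {o}" for s, r, o in triples
            if s in query_entities or o in query_entities]


def hybrid_retrieve(query_entities, query_emb, triples, passages, k=2, min_graph_facts=1):
    """Prefer graph facts; fall back on (or enrich with) vector results."""
    graph_facts = graph_search(query_entities, triples)
    vector_ctx = vector_search(query_emb, passages, k)
    if len(graph_facts) < min_graph_facts:
        # No usable structure for this query: rely on semantic retrieval only.
        return vector_ctx
    # Graph facts first, then vector passages that add context not already present.
    return graph_facts + [t for t in vector_ctx if t not in graph_facts]
```

For example, with the hypothetical triple `("Vaccine X", "uses_formula", "mRNA-LNP")`, a query about "Vaccine X" returns that explicit fact followed by the closest passage, while a query naming an entity missing from the graph returns only the vector results.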

The benchmarking process

To demonstrate the value of this hybrid method, Lettria conducted extensive benchmarks across datasets from various industries. Using their solution, they compared their hybrid GraphRAG pipeline against Verba by Weaviate, a leading open source RAG package that served as a baseline reference relying solely on vector stores. The datasets included Amazon financial reports, scientific texts on COVID-19 vaccines, technical specifications from aeronautics, and European environmental directives, providing a diverse and representative test bed.

The evaluation tackled real-world complexity by focusing on six distinct question types, including fact-based, multi-hop, numerical, tabular, temporal, and multi-constraint queries. The questions ranged from simple fact-finding, like identifying vaccine formulas, to multi-layered reasoning tasks, such as comparing revenue figures across different timeframes. An example multi-hop query in finance is “Compare the oldest booked Amazon revenue to the most recent.”
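A multi-hop query like the finance example needs several traversals: from the company to each report, then from each report to its fiscal year and revenue, and finally a comparison of the extremes. The following minimal sketch walks a toy in-memory graph to show those hops. The company name, relation names, and revenue figures are hypothetical placeholders, not real filings; in a graph database such as Neptune, the same traversal would typically be expressed as an openCypher or Gremlin query.

```python
from collections import defaultdict

# Hypothetical toy graph; names and figures are placeholders, not real data.
EDGES = [
    ("ExampleCorp", "FILED", "Report-2019"),
    ("ExampleCorp", "FILED", "Report-2023"),
    ("ExampleCorp", "FILED", "Report-2021"),
    ("Report-2019", "FISCAL_YEAR", 2019),
    ("Report-2021", "FISCAL_YEAR", 2021),
    ("Report-2023", "FISCAL_YEAR", 2023),
    ("Report-2019", "REVENUE_USD_M", 100.0),
    ("Report-2021", "REVENUE_USD_M", 150.0),
    ("Report-2023", "REVENUE_USD_M", 210.0),
]


def build_index(edges):
    """Map (subject, relation) to the list of objects, so each hop is a lookup."""
    index = defaultdict(list)
    for s, r, o in edges:
        index[(s, r)].append(o)
    return index


def compare_oldest_to_latest(company, index):
    """Hop 1: company -> reports. Hop 2: report -> fiscal year and revenue."""
    rows = []
    for report in index[(company, "FILED")]:
        year = index[(report, "FISCAL_YEAR")][0]
        revenue = index[(report, "REVENUE_USD_M")][0]
        rows.append((year, revenue))
    rows.sort()  # chronological order by fiscal year
    (first_year, first_rev), (last_year, last_rev) = rows[0], rows[-1]
    return {
        "oldest": (first_year, first_rev),
        "latest": (last_year, last_rev),
        "change_pct": round(100 * (last_rev - first_rev) / first_rev, 1),
    }
```

The point of the sketch is that every intermediate fact is an explicit edge, so the answer can be traced hop by hop, whereas a vector search would have to retrieve all the relevant report chunks by similarity alone.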

Lettria’s in-house team manually assessed the answers with a detailed evaluation grid, categorizing results as correct, partially correct (acceptable or not), or incorrect. This process measured how much the hybrid GraphRAG approach outperformed the baseline, particularly on multi-dimensional queries that required combining structured relationships with semantic breadth. By using the strengths of both vector and graph-based retrieval, Lettria’s system demonstrated its ability to handle the nuanced demands of diverse industries with precision and flexibility.
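The evaluation grid can be turned into the two headline metrics reported in the results: the share of correct answers, and the share of correct plus acceptable answers. The following sketch assumes that "acceptable" means counting partially correct answers graded acceptable alongside fully correct ones; that mapping is our reading of the grid, and the sample grades in the test are hypothetical, not Lettria's data.

```python
from collections import Counter
from enum import Enum


class Grade(Enum):
    CORRECT = "correct"
    PARTIAL_ACCEPTABLE = "partially correct (acceptable)"
    PARTIAL_UNACCEPTABLE = "partially correct (not acceptable)"
    INCORRECT = "incorrect"


def score(grades):
    """Return (% correct, % correct or acceptable) for a list of Grade values."""
    if not grades:
        raise ValueError("no graded answers")
    counts = Counter(grades)
    n = len(grades)
    correct = counts[Grade.CORRECT]
    # Acceptable partial answers count toward the second metric only.
    acceptable = correct + counts[Grade.PARTIAL_ACCEPTABLE]
    return round(100 * correct / n, 2), round(100 * acceptable / n, 2)
```

For example, eight graded answers with four correct, two acceptable partial answers, one unacceptable partial answer, and one incorrect answer score 50% correct and 75% correct or acceptable.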

The benchmarking results

The results were significant and compelling. GraphRAG achieved 80% correct answers, compared to 50.83% with traditional RAG. When including acceptable answers, GraphRAG’s accuracy rose to nearly 90%, whereas the vector approach reached 67.5%.

The following graph shows the results for vector RAG and GraphRAG.

In the industry sector, dealing with complex technical specifications, GraphRAG provided 90.63% correct answers, almost doubling vector RAG’s 46.88%. These figures highlight how GraphRAG offers substantial advantages over the vector-only approach, particularly for clients focused on structuring complex data.

GraphRAG’s overall reliability and superior handling of intricate queries allow customers to make more informed decisions with confidence. By delivering up to 35% more accurate answers, it significantly boosts efficiency and reduces the time spent sifting through unstructured data. These results demonstrate that incorporating graphs into the RAG workflow not only enhances accuracy but is also essential for tackling the complexity of real-world questions.

Using AWS and Lettria for enhanced RAG applications

In this section, we discuss how you can use AWS and Lettria for enhanced RAG applications.

AWS: A robust foundation for generative AI

AWS offers a comprehensive suite of tools and services to build and deploy generative AI applications. With AWS, you have access to scalable infrastructure and advanced services like Amazon Neptune, a fully managed graph database service. Neptune allows you to efficiently model and navigate complex relationships within your data, making it an ideal choice for implementing graph-based RAG systems.

Implementing GraphRAG from scratch usually requires a process similar to the one shown in the following diagram.

The process can be broken down as follows:

- Based on a domain definition, the large language model (LLM) identifies the entities and relationships contained in the unstructured data, which are then stored in a graph database such as Neptune.

- At query time, user intent is turned into an efficient graph query, based on the domain definition, to retrieve the relevant entities and relationships.

- Results are then used to augment the prompt and generate a more accurate response compared to standard vector-based RAG.
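The three steps above can be sketched end to end in a few dozen lines. This is a toy illustration under heavy assumptions, not the GraphRAG Toolkit or any AWS API: `extract_triples` is a hypothetical stand-in for the LLM extraction step that matches fixed relation phrases from a hypothetical `DOMAIN` definition, `TripleStore` is an in-memory substitute for a graph database such as Neptune, and substring matching of entity names stands in for translating user intent into a graph query.

```python
import re

# Hypothetical domain definition: relation type -> phrase that signals it.
DOMAIN = {"MADE_OF": "is made of", "CERTIFIED_BY": "is certified by"}


def extract_triples(text, domain=DOMAIN):
    """Stand-in for LLM extraction: one (subject, relation, object) per matching sentence."""
    triples = []
    for sentence in re.split(r"(?<=\.)\s+", text.strip()):
        sentence = sentence.rstrip(".")
        for rel, phrase in domain.items():
            if f" {phrase} " in sentence:
                subj, obj = sentence.split(f" {phrase} ", 1)
                triples.append((subj.strip(), rel, obj.strip()))
    return triples


class TripleStore:
    """Toy in-memory graph standing in for a graph database."""

    def __init__(self):
        self.triples = set()

    def add(self, triples):
        self.triples.update(triples)

    def neighbors(self, entity):
        """All triples in which the entity appears as subject or object."""
        return sorted(t for t in self.triples if entity in (t[0], t[2]))


def build_prompt(question, store, hops=2):
    """Find entities named in the question, expand them for `hops` steps,
    and prepend the collected relationships to the prompt as context."""
    frontier = {e for s, _, o in store.triples for e in (s, o) if e in question}
    seen, facts = set(frontier), set()
    for _ in range(hops):
        next_frontier = set()
        for entity in frontier:
            for s, r, o in store.neighbors(entity):
                facts.add((s, r, o))
                next_frontier.update({s, o} - seen)
        seen |= next_frontier
        frontier = next_frontier
    context = "\n".join(f"- {s} {r} {o}" for s, r, o in sorted(facts))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

With the text "Panel A is made of Alloy 7075. Alloy 7075 is certified by Agency Z." ingested, the question "Who certifies the material Panel A is made of?" only names Panel A, but the second hop pulls in the certification fact, which is the kind of relationship a pure similarity search can miss. In practice, each of these stand-ins is where the iteration on extraction and graph lookup happens.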

Implementing such a process requires teams to develop specific skills in topics such as graph modeling, graph queries, prompt engineering, and LLM workflow maintenance. AWS released an open source GraphRAG Toolkit to make it simple for customers who want to build and customize their GraphRAG workflows. Expect to iterate on the extraction process and graph lookup to achieve accuracy improvements.

Managed GraphRAG implementations

There are two solutions for managed GraphRAG with AWS: Lettria’s solution, soon available on AWS Marketplace, and Amazon Bedrock integrated GraphRAG support with Neptune. Lettria provides an accessible way to integrate GraphRAG into your applications. By combining Lettria’s expertise in natural language processing (NLP) and graph technology with the scalable and managed AWS infrastructure, you can develop RAG solutions that deliver more accurate and reliable results.

The following are key benefits of Lettria on AWS:

- Simple integration – Lettria’s solution simplifies the ingestion and processing of complex datasets

- Improved accuracy – You can achieve up to 35% better performance in question-answering tasks

- Scalability – You can use scalable AWS services to handle growing data volumes and user demands

- Flexibility – The hybrid approach combines the strengths of vector and graph representations

In addition to Lettria’s solution, Amazon Bedrock introduced managed GraphRAG support on December 4, 2024, integrating directly with Neptune. GraphRAG with Neptune is built into Amazon Bedrock Knowledge Bases, offering an integrated experience with no additional setup or additional charges beyond the underlying services. GraphRAG is available in AWS Regions where Amazon Bedrock Knowledge Bases and Amazon Neptune Analytics are both available (see the current list of supported Regions). To learn more, see Retrieve data and generate AI responses with Amazon Bedrock Knowledge Bases.
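Once a knowledge base exists, querying it goes through the Amazon Bedrock agent runtime. The following sketch assumes you have already created a knowledge base that uses Neptune Analytics as its graph store; as we understand it, the GraphRAG-specific configuration happens at creation time, and queries use the same `RetrieveAndGenerate` API as other knowledge bases. The knowledge base ID, model ARN, and Region are placeholders you must supply, and the code assumes boto3 is installed and AWS credentials are configured.

```python
def build_rag_request(question, knowledge_base_id, model_arn):
    """Build keyword arguments for the bedrock-agent-runtime RetrieveAndGenerate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,  # placeholder, from your KB
                "modelArn": model_arn,  # placeholder, a model available in your Region
            },
        },
    }


def ask(question, knowledge_base_id, model_arn, region):
    """Query the knowledge base and return the generated answer text."""
    import boto3  # imported lazily so the request builder works without the SDK

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn)
    )
    return response["output"]["text"]
```

Keeping the request builder separate from the network call makes the payload easy to inspect or test before any request is sent; the response also contains a `citations` field you can use to show which retrieved sources grounded the answer.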

Conclusion

Data accuracy is a critical concern for enterprises adopting generative AI applications. By incorporating graphs into your RAG workflow, you can significantly enhance the accuracy of your systems. Graphs provide a richer, more nuanced representation of data, capturing the complexity of human queries and preserving context.

GraphRAG is a key option to consider for organizations seeking to unlock the full potential of their data. With the combined power of AWS and Lettria, you can build advanced RAG applications that help meet the demanding needs of today’s data-driven enterprises and achieve up to 35% improvement in accuracy.

Explore how you can implement GraphRAG on AWS in your generative AI application:

- Understanding GraphRAG with Lettria

- Amazon Bedrock Knowledge Bases now supports GraphRAG (preview)

- Using knowledge graphs to build GraphRAG applications with Amazon Bedrock and Amazon Neptune

- GraphRAG Toolkit

- Create a Neo4j GraphRAG Workflow Using LangChain and LangGraph

About the Authors

Denise Gosnell is a Principal Product Manager for Amazon Neptune, focusing on generative AI infrastructure and graph data applications that enable scalable, cutting-edge solutions across industry verticals.

Vivien de Saint Pern is a Startup Solutions Architect working with AI/ML startups in France, focusing on generative AI workloads.
