Organizations rely on real-time analytics to gain insights into their core business drivers, enhance operational efficiency, and maintain a competitive edge. Traditionally, this has involved the use of databases, data warehouses, and complex extract, transform, and load (ETL) pipelines. AWS Database Migration Service (AWS DMS) is built to help you migrate workloads between databases, but you can also use AWS DMS or self-managed pipelines for ongoing change data capture (CDC) replication. Building and managing ETL pipelines can require significant resources and expertise, often resulting in undifferentiated heavy lifting to monitor, update, and troubleshoot these pipelines that detracts from an organization’s ability to innovate.

The zero-ETL integrations for Amazon Redshift are designed to automate data movement into Amazon Redshift, eliminating the need for traditional ETL pipelines. With zero-ETL integrations, you can reduce operational overhead, lower costs, and accelerate your data-driven initiatives. This enables organizations to focus more on deriving actionable insights and less on managing the complexities of data integration.

In this post, we discuss the best practices for migrating your ETL pipeline from AWS DMS to zero-ETL integrations for Amazon Redshift.

Why switch to zero-ETL?

Transitioning to zero-ETL integration with Amazon Redshift for ongoing CDC replication offers several significant advantages:

- Reduced costs – Zero-ETL integration is provided at no additional charge. You don’t need to provision a separate ETL pipeline that would have resulted in extra costs for provisioning and maintaining it.

- Reduced end-to-end latency – In mixed workload settings comprised of

INSERTs,UPDATEs,DELETEs, and DDLs, zero-ETL integration performance is in near real time and can result in lower latency compared to AWS DMS. You can access transactional data from Amazon Aurora in Amazon Redshift within seconds with zero-ETL integration. - Simplified data replication – Zero-ETL integration simplifies the data replication process by eliminating the need for provisioning AWS DMS replication instances in your virtual private cloud (VPC). Zero-ETL takes care of managing the ETL for you by automating the creation and management of data replication from the data source to the Redshift cluster or Amazon Redshift Serverless

- Streamlined operations – Zero-ETL integration automates data movement, minimizing operational overhead. This streamlining of operations enables your organization to allocate resources more effectively and improve overall efficiency. This reduces the complexity of your end-to-end architecture and allows your team to focus on higher-value activities.

- Minimize impact on source and destination databases – Zero-ETL integration reduces production systems impact from CDC replication. Zero-ETL integration reduces computational load in Amazon Redshift because it avoids the concurrent transaction of a SQL

COPY. Traditional ETL solutions for Amazon Redshift migrate data using the SQLCOPYcommand after data is temporarily stored in Amazon Simple Storage Service (Amazon S3), whereas zero-ETL uses storage-level movement of data between target and source. Whereas AWS DMS was originally designed for one-time migrations and added CDC features, zero-ETL integrations were designed specifically for ongoing CDC comprisingDELETEs andUPDATEs.

Key considerations for migrating to zero-ETL

Make sure to review Considerations when using zero-ETL integrations with Amazon Redshift. Not all configurations and use cases are best supported by zero-ETL integration. For those situations, we recommend continuing to use AWS DMS. For example, external sources such as Microsoft Azure SQL databases, Google Cloud for MySQL/PostgreSQL, SAP ASE, or MongoDB, or proprietary databases such as Oracle database and IBM Db2 are not available with zero-ETL integration (for a list of compatible source endpoints, see Sources for AWS DMS). Zero-ETL integrations are an area of active development with additional features in the near-term roadmap, so we recommend reviewing the latest announcements to understand the latest developments. For the current list of zero-ETL integrations with Amazon Redshift, see Zero-ETL integrations.

Solution overview

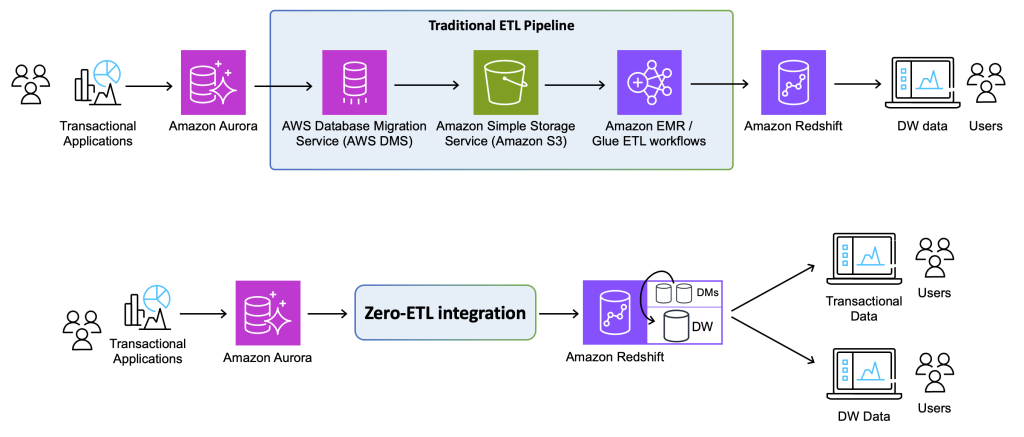

The following figure compares the traditional ETL pipeline migration plan with zero-ETL integration with Amazon Redshift.

Your goal is to transition from AWS DMS to zero-ETL integration while minimizing operational challenges and maintaining a seamless data integration experience. How can we achieve this? To make sure the data is fully migrated and there is no loss in replication, we don’t recommend disabling your AWS DMS connection until the zero-ETL integration is set up and you’ve verified that the data in Amazon Redshift is complete and consistent from your source databases. For a limited period of time, you can configure parallel CDC integrations between Aurora and Redshift. Due to the nature of how AWS DMS and zero-ETL integrations function, explained in more detail later in this post, the same source tables will be replicated by the two pipelines into distinct target tables and databases in Amazon Redshift.

After your zero-ETL integration is set up, you can perform data quality checks and confirm satisfactory performance of zero-ETL replication. When you’re satisfied with the query performance and quality checks on the target tables, your new integration is available to use in Amazon Redshift after you update your consumer connections to point to the new zero-ETL database. Finally, after all data consumer applications are verified to function properly, you can disable the AWS DMS replication pipeline.

Prerequisites

For this post, we assume you already have AWS DMS set up and performing CDC replication to your Redshift data warehouse. As always, ensure you are following the Redshift and Aurora security best practices.

Set up zero-ETL integration between Aurora and Redshift

It’s out of scope for this post to walk through how to configure zero-ETL integrations. For instructions, refer to Getting started guide for near-real time operational analytics using Amazon Aurora zero-ETL integration with Amazon Redshift, Working with Aurora zero-ETL integrations with Amazon Redshift, and Getting started with zero-ETL integrations. In this post, we assume you have already set up zero-ETL integrations between an Amazon Aurora MySQL-Compatible Edition database and Amazon Redshift. We use both services in serverless mode, but in the context of zero-ETL integrations, there is no difference for provisioned deployments.

As mentioned previously, one of the differences between AWS DMS and zero-ETL integrations for Amazon Redshift is the way data arrives to Amazon Redshift. With AWS DMS, data is loaded through an intermediate storage in Amazon S3, typically arriving in Amazon Redshift using the COPY command. AWS DMS targets in Amazon Redshift are normal tables with full Amazon Redshift functionality. With zero-ETL integrations, data is loaded directly into Amazon Redshift at the storage layer, making the application of DELETEs and UPDATEs very efficient. Another notable difference between AWS DMS and zero-ETL integration is the zero-ETL target table is configured as read-only. Mutating zero-ETL integration target tables in place is not possible.

We discuss the implications of this later in this post. The main takeaway is that you have two replication target databases in Amazon Redshift: one from AWS DMS and one from zero-ETL integration.

For this demonstration, we configure Aurora with the database and tables shown in the workshop The Zero-ETL Approaches. We have also configured an Amazon EventBridge rule and AWS Lambda function to continuously add transactions to the orders database.

Monitor and confirm replication in zero-ETL

After you have configured zero-ETL integration with Amazon Redshift, you now have two CDC pipelines replicating from the same source to two distinct target databases in Redshift. It’s a best practice to make sure all data is synced, data replication is occurring efficiently and in a timely manner, and there are no failed replication jobs.

Zero-ETL integration includes a built-in checkpointing functionality to make sure data moved from the source is complete and transactionally consistent. This means data movement will include all transactions or nothing for a given checkpoint. It also includes a smart replication tracking mechanism where the data will be replicated from the last checkpoint in case the Redshift cluster was paused and resumed or in case there are other transactional events on the source.

Amazon Redshift exposes Amazon CloudWatch metrics and system tables to help make sure your zero-ETL integration is healthy. In the following example, we use Query Editor v2 to review the system tables and the information exposed. Notably, the SVV_INTEGRATION system table allows you to monitor the number of tables replicated and the state of replication, which is useful for making sure there are no errors during CDC replication process. In addition, SYS_INTEGRATION_ACTIVITY allows you to monitor the zero-ETL’s checkpointing process, whereas SVV_INTEGRATION_TABLE_STATE describes the state of each table within an integration, and SYS_INTEGRATION_TABLE_STATE_CHANGE displays table state change logs for an integration.

The following queries and screenshot responses demonstrate the type of information available in these system tables.

We recommend following monitoring best practices by automating alerts using Amazon CloudWatch to make sure your ETL pipeline state is healthy. The CloudWatch metrics exposed through zero-ETL integrations are shown in the following screenshot. These metrics are identifiable by filtering CloudWatch metrics with the IntegrationID, which is found on the Amazon Redshift console on the Zero-ETL integrations page. Depending on your use case, setting threshold alarms on these metrics will be useful to help maintain real-time data replication for downstream consumers.

Validate replicated data

After you have validated that the zero-ETL integration is functioning properly, we recommend performing data validation to provide business assurance, such as validating sales transactions totals to the same sums over multiple historical and near-term periods. Your unique business requirements will dictate the data validation needed, and we strongly recommend performing comprehensive validation at this point before moving to the next step.

To perform data validation, a simple method is to check the row counts of the tables being replicated across the two CDC pipelines, as shown in the following screenshot. These counts may not be exactly equal at all times, because at that moment one CDC method will be leading or lagging compared to the other, but results should be very close. The following screenshot demonstrates querying for the row counts of target tables populated by DMS and zero-ETL in our demo.

Migrate data consumer tools connections

After you have validated the replicated data, the next step is to make sure the downstream consumers of this replicated data can access it. Target databases for zero-ETL integration are different from a standard AWS DMS replication target table, in that zero-ETL target tables are read-only. To make sure your downstream consumers can still access the replicated data from zero-ETL integration, you need to update the consumer connections to point to the zero-ETL integration database. Complete the following steps:

- Identify the existing data consumer tools connections that need to be updated.

- Update the connection strings of the identified data consumers to point to the new database wherever needed.

- Make sure the connection parameters (hostname, port, database name, user credentials) for Amazon Redshift remain unchanged. The transition to zero-ETL doesn’t alter these details, so your data consumer tools will connect to Amazon Redshift as they did before.

- After you transition to zero-ETL, thoroughly test all data consumer tool connections to make sure they’re functioning correctly. Perform queries and data retrieval operations to validate the integrity and performance of the connections.

Update or create views

If you have any views or materialized views created on the tables replicated by AWS DMS, you can either update the view definitions to point to the new table created by the zero-ETL integration, or you can create new views on top of the new target table.

Disable AWS DMS replication

After you have configured the consumer tools and confirmed that they can read from the data replicated by zero-ETL integrations, you can disable the AWS DMS replication. Complete the following steps:

- Stop and delete the AWS DMS CDC replication task.

- Delete the AWS DMS replication instance being used for this replication task.

- If they’re no longer needed, drop the tables in Amazon Redshift that were being replicated by this AWS DMS CDC replication task.

Conclusion

In this post, we demonstrated how to plan and implement the migration from a traditional ETL approach using AWS DMS to a more efficient, cost-effective zero-ETL integration for your Redshift data warehouse. Following the best practices and considerations discussed in this post, you can achieve a smooth transition from AWS DMS to zero-ETL with no data loss.

AWS DMS remains our recommended service for migrating your data into AWS databases, whereas zero-ETL integration is better suited for CDC and ETL within AWS. As always, we recommend using the right tool for the job, and both AWS DMS and zero-ETL can coexist in an overall data strategy. If you’re unsure which tool is right for you, reach out to your AWS Solutions Architect, AWS Account Team contact, or submit a general AWS Support question requesting guidance.

About the Authors

Ekta Ahuja is an Analytics Specialist Solutions Architect at AWS. She is passionate about helping customers build scalable and robust data and analytics solutions. Before AWS, she worked in several different data engineering and analytics roles. Outside of work, she enjoys landscape photography, traveling, and board games.

Wallace Printz is a Principal Solutions Architect at AWS, supporting global customers underpinning the entire telecommunications and internet ecosystem. Before AWS, he led a computational research team in semiconductor manufacturing and worked with startups using machine learning. Outside of work, he enjoys cooking, shuttling his teenagers, and playing with his German Shepherd, Chloe.

Wallace Printz is a Principal Solutions Architect at AWS, supporting global customers underpinning the entire telecommunications and internet ecosystem. Before AWS, he led a computational research team in semiconductor manufacturing and worked with startups using machine learning. Outside of work, he enjoys cooking, shuttling his teenagers, and playing with his German Shepherd, Chloe.

Sudipta Bagchi is a Specialist Solutions Architect at AWS. He has over 14 years of experience in data and analytics, and helps customers design and build scalable and high-performant analytics solutions. Outside of work, he loves running, traveling, and playing cricket.

Sudipta Bagchi is a Specialist Solutions Architect at AWS. He has over 14 years of experience in data and analytics, and helps customers design and build scalable and high-performant analytics solutions. Outside of work, he loves running, traveling, and playing cricket.

Source: Read More