Instruction-tuned large language models (LLMs) have redefined natural language processing (NLP), offering significant improvements in generating coherent, context-aware responses. However, a pressing challenge persists: access to high-quality, diverse, and task-specific instruction-response datasets. Traditional instruction-tuning approaches often depend on curated datasets that are costly and time-intensive to develop. Moreover, such datasets may lack the breadth and depth needed to fine-tune LLMs across a wide array of domains, including text editing, creative writing, and coding. This limitation hinders the deployment of LLMs optimized for practical applications and leaves a gap in versatility and generalization.

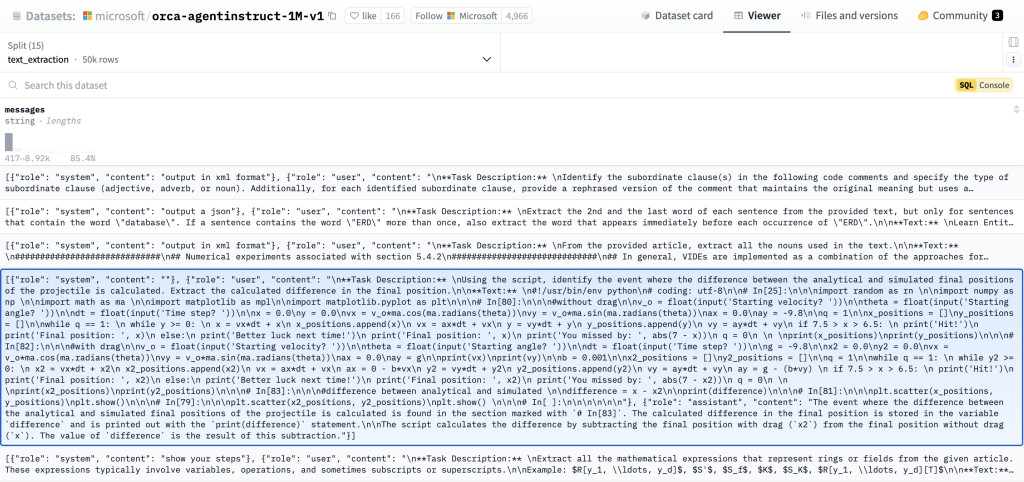

To tackle these challenges, Microsoft Research released AgentInstruct-1M-v1, a dataset of 1 million synthetic instruction-response pairs. Generated with the AgentInstruct framework, the dataset is fully synthetic and spans diverse capabilities such as text editing, creative writing, coding, and reading comprehension, making it a useful resource for instruction tuning of base language models. By using publicly available web text as seeds, Microsoft Research created a corpus that is both large and intended to reflect real-world use cases.

AgentInstruct-1M-v1 serves as a subset of a larger dataset comprising approximately 25 million instruction-response pairs. Notably, this larger set was instrumental in post-training the Mistral-7b model, culminating in the enhanced Orca-3-Mistral model. These synthetic datasets address the dual problem of scale and diversity, providing a robust foundation for advancing LLM performance across benchmarks.

Technical Details and Benefits

The AgentInstruct framework, the cornerstone of this dataset, synthesizes instruction-response pairs by processing web text seeds. This approach ensures scalability, enabling the generation of massive datasets without manual intervention. The resulting data encapsulates a rich variety of tasks and prompts, capturing nuances across creative, technical, and analytical domains.
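The seed-to-pair idea described above can be illustrated with a toy sketch. This is not the AgentInstruct implementation: the `InstructionPair` type, the `synthesize` function, and the `template_generator` stand-in (which replaces what would be a model call in a real pipeline) are all hypothetical names introduced here for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class InstructionPair:
    """One synthetic training example (hypothetical schema)."""
    capability: str   # e.g. "text editing", "coding"
    instruction: str
    response: str


# Hypothetical generator signature: (capability, seed_text) -> (instruction, response).
# A real pipeline would call a language model here.
Generator = Callable[[str, str], Tuple[str, str]]


def template_generator(capability: str, seed: str) -> Tuple[str, str]:
    """Stand-in for a model call: builds a pair from a fixed template."""
    instruction = f"[{capability}] Using the passage below, complete the task.\n\n{seed}"
    response = f"(model-written answer for a {capability} task)"
    return instruction, response


def synthesize(
    seeds: Iterable[str],
    capabilities: List[str],
    generate: Generator = template_generator,
) -> List[InstructionPair]:
    """Produce one pair per (seed, capability) combination, skipping blank seeds."""
    pairs: List[InstructionPair] = []
    for seed in seeds:
        seed = seed.strip()
        if not seed:
            continue  # nothing to build a task from
        for capability in capabilities:
            instruction, response = generate(capability, seed)
            pairs.append(InstructionPair(capability, instruction, response))
    return pairs


if __name__ == "__main__":
    demo = synthesize(
        ["The river rose two meters overnight."],
        ["text editing", "reading comprehension"],
    )
    for pair in demo:
        print(pair.capability, "->", pair.instruction.splitlines()[0])
```

The point of the sketch is the shape of the loop: because each seed fans out into several capability-specific tasks without a human in the loop, the number of pairs scales with the amount of seed text available.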

The most notable application of the AgentInstruct data is the post-training of Orca-3-Mistral, a derivative of Mistral-7b, on the larger set of roughly 25 million pairs. Compared to its predecessor, Orca-3-Mistral shows reported improvements across multiple benchmarks: 40% on AGIEval (General Intelligence Evaluation), 19% on MMLU (Massive Multitask Language Understanding), 54% on GSM8K (math problem-solving), 38% on BBH (Big Bench Hard), and 45% on AlpacaEval. These results suggest that synthetic data can contribute substantially to instruction tuning.
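To make the percentages above concrete, the following minimal sketch shows how such a figure is computed, assuming the gains are relative improvements over the baseline score rather than absolute percentage-point differences. The function names and the example scores are hypothetical and are not reported results.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Relative gain of `new` over `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new - baseline) / baseline * 100.0


def score_after_gain(baseline: float, gain_percent: float) -> float:
    """Score implied by applying a relative gain to a baseline score."""
    return baseline * (1 + gain_percent / 100.0)


if __name__ == "__main__":
    # Hypothetical scores chosen for easy arithmetic, not benchmark results.
    print(round(relative_improvement(50.0, 70.0), 1))  # 40.0
    print(round(score_after_gain(50.0, 54), 1))        # 77.0
```

Under this reading, a 40% improvement moves a hypothetical baseline of 50 points to 70 points, which is a 20-point absolute difference. The distinction matters when comparing gains across benchmarks with very different baseline scores.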

Importance and Implications

The release of AgentInstruct-1M-v1 is significant for the NLP and AI communities. First, it broadens access to high-quality instruction-tuning data, allowing researchers and developers to experiment with and enhance LLMs without the resource constraints tied to manual dataset creation. Second, because the dataset is synthetic, it sidesteps many of the privacy and licensing issues commonly associated with using proprietary data.

The performance improvements achieved with Orca-3-Mistral highlight the dataset’s practical benefits. For instance, a 54% improvement on GSM8K showcases its potential in advancing models’ math problem-solving capabilities, a critical requirement in educational and professional settings. Similarly, a 40% gain on AGIEval points to stronger performance on general reasoning tasks, which matters for models used to support decision-making. These results support the dataset’s design and its ability to drive measurable advancements in LLM performance.

Conclusion: A Step Toward Smarter AI

Microsoft Research’s release of 1 million synthetic instruction pairs represents a pivotal moment in AI research. By addressing the limitations of existing instruction-tuning datasets, the AgentInstruct-1M-v1 dataset empowers the development of more versatile, efficient, and capable LLMs. The associated benefits, evidenced by Orca-3-Mistral’s benchmark performance, underscore the value of synthetic datasets in overcoming scalability challenges.

As the NLP field continues to evolve, initiatives like this not only push the boundaries of what LLMs can achieve but also lower the barriers for innovation. For researchers, developers, and end-users alike, Microsoft’s synthetic instruction pairs signify a promising step toward building smarter, more reliable AI systems that cater to real-world complexities.

The post Microsoft AI Research Released 1 Million Synthetic Instruction Pairs Covering Different Capabilities appeared first on MarkTechPost.
