In this post, we show you how to use Amazon ElastiCache as a write-through cache for an application that uses an Amazon Keyspaces (for Apache Cassandra) table to store data about book awards. We use a Cassandra Python client driver to access Amazon Keyspaces programmatically and a Redis client to connect to the ElastiCache cluster.

Amazon Keyspaces is a scalable, highly available, and fully managed Cassandra-compatible database service that offers single-digit millisecond read and write response times at any scale. Because Amazon Keyspaces is serverless, instead of deploying, managing, and maintaining storage and compute resources for your workload through nodes in a cluster, Amazon Keyspaces allocates storage and read/write throughput resources directly to tables.

For most workloads, the single-digit millisecond response times provided by Amazon Keyspaces are sufficient, and you don’t need to cache results returned by Amazon Keyspaces. However, there may be cases where applications need submillisecond response times for read operations, the application is read-intensive but also cost-sensitive, or the application needs repeated reads such that reads per partition consume over 3,000 read capacity units (RCUs) per second. Amazon ElastiCache is a fully managed, highly available, distributed, fast in-memory data store that can be used as a cache for Amazon Keyspaces tables to decrease read latency to submillisecond values, increase read throughput, and scale for higher loads without increasing the costs for your backend database. Amazon ElastiCache is compatible with Valkey and Redis OSS. The approach and code discussed in this post work with both of these engines.

This post uses the write-through caching strategy and lazy loading. Write-through caching improves the initial response times and keeps cached data up to date with the underlying database. With lazy loading, data is loaded into the cache only when there is a cache miss, decreasing resource usage on the cache. For more details on caching strategies, refer to Caching patterns and Caching strategies.

Prerequisites

The prerequisites for connecting to Amazon Keyspaces include downloading the TLS certificate and configuring the Python driver to use TLS, downloading relevant Python packages, and setting up a connection with your keyspace and table.

The following is the boilerplate code to connect to an Amazon Keyspaces table using the Amazon Keyspaces SigV4 authentication plugin.

    # Explicit imports instead of a wildcard import
    from cassandra import ConsistencyLevel
    from cassandra.cluster import Cluster
    from ssl import SSLContext, PROTOCOL_TLSv1_2, CERT_REQUIRED
    # PlainTextAuthProvider is only needed for service-specific credentials
    from cassandra.auth import PlainTextAuthProvider
    from cassandra_sigv4.auth import SigV4AuthProvider
    from cassandra.query import SimpleStatement
    import time
    import boto3

    # Configure TLS with the downloaded certificate
    ssl_context = SSLContext(PROTOCOL_TLSv1_2)
    ssl_context.load_verify_locations('/home/ec2-user/sf-class2-root.crt')
    ssl_context.verify_mode = CERT_REQUIRED

    # Authenticate with SigV4 using the default boto3 session
    boto_session = boto3.Session()
    auth_provider = SigV4AuthProvider(boto_session)

    cluster = Cluster(['cassandra.us-east-1.amazonaws.com'],
                      ssl_context=ssl_context,
                      auth_provider=auth_provider,
                      port=9142)
    session = cluster.connect()

Using the SigV4 plugin is the recommended security best practice. However, you can alternatively connect to Amazon Keyspaces using service-specific credentials, which provide backward compatibility with the traditional username and password that Cassandra uses for authentication and access management.

For instructions to connect to your ElastiCache cluster, refer to Connecting to ElastiCache. The following is the boilerplate code to connect to an ElastiCache cluster:

    from rediscluster import RedisCluster
    import logging

    # INFO level so that the connection message below is actually emitted
    logging.basicConfig(level=logging.INFO)

    redis = RedisCluster(startup_nodes=[{"host": "keyspaces-cache.eeeccc.clustercfg.use1.cache.amazonaws.com",
                                         "port": "6379"}],
                         decode_responses=True,
                         skip_full_coverage_check=True)

    if redis.ping():
        logging.info("Connected to Redis")

Amazon Keyspaces table schema

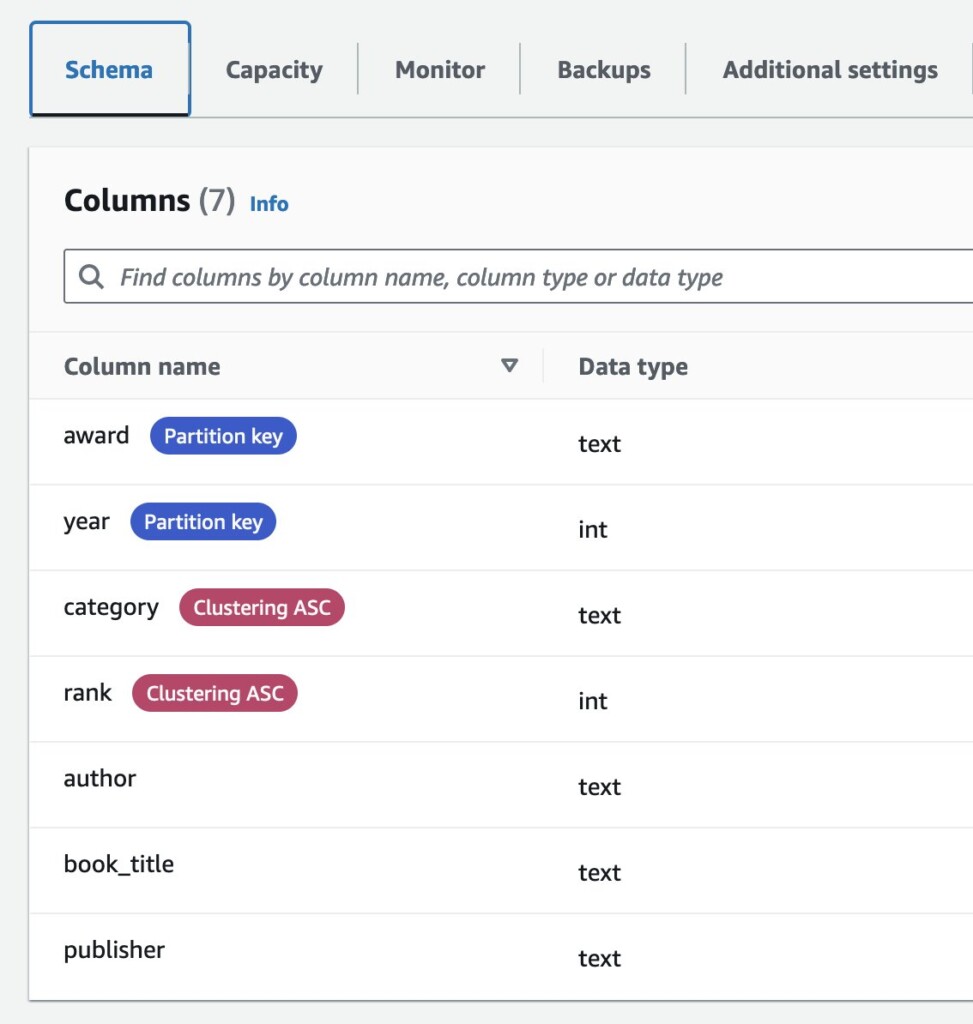

In this example, the Amazon Keyspaces table called book_awards is used to store data about book awards in a keyspace called catalog. The partition key consists of the columns award and year. The table has two clustering key columns, category and rank, as shown in the following screenshot. This key schema provides an even distribution of data across the partitions and caters to the access patterns that we explore in this post. For best practices on data modeling for Amazon Keyspaces, refer to Data modeling best practices: recommendations for designing data models.

The following screenshot shows a few sample rows from this table.

The next section explores the sample code snippets for Amazon Keyspaces operations and the caching results of these operations. The code snippets in this post are for reference only; input validation and error handling are not included with the samples.

Single-row INSERT and DELETE operations

With the write-through caching process, the cache is proactively updated immediately following the primary database update. The fundamental logic can be summarized as follows:

- The application inserts or deletes a row in Amazon Keyspaces

- Immediately afterward, the row is also inserted or deleted in the cache

The following figure illustrates the write-through caching strategy:

The following sample code shows how to implement the write-through strategy for INSERT and DELETE operations on the book_awards data:

    #Global variables
    keyspace_name="catalog"
    table_name="book_awards"

    #Write a row: database first, then cache
    def write_award(book_award):
        write_award_to_keyspaces(book_award)
        write_award_to_cache(book_award)

    #write row to the Keyspaces table
    def write_award_to_keyspaces(book_award):
        stmt=SimpleStatement(f"INSERT INTO {keyspace_name}.{table_name} (award, year, category, rank, author, book_title, publisher) VALUES (%s, %s, %s, %s, %s, %s, %s)",
                             consistency_level=ConsistencyLevel.LOCAL_QUORUM)
        session.execute(stmt,(book_award["award"],
                              book_award["year"],
                              book_award["category"],
                              book_award["rank"],
                              book_award["author"],
                              book_award["book_title"],
                              book_award["publisher"]))

    #write row to the cache
    def write_award_to_cache(book_award):
        #construct Redis key name
        key_name=book_award["award"]+str(book_award["year"])+book_award["category"]+str(book_award["rank"])
        #write to cache using Redis set, ex=300 sets TTL for this row to 300 seconds
        redis.set(key_name, str(book_award), ex=300)

    #Delete a row: database first, then cache
    def delete_award(award, year, category, rank):
        delete_award_from_keyspaces(award, year, category, rank)
        delete_award_from_cache(award, year, category, rank)

    #delete row from Keyspaces table
    def delete_award_from_keyspaces(award, year, category, rank):
        stmt = SimpleStatement(f"DELETE FROM {keyspace_name}.{table_name} WHERE award=%s AND year=%s AND category=%s AND rank=%s;",
                               consistency_level=ConsistencyLevel.LOCAL_QUORUM)
        session.execute(stmt, (award, int(year), category, int(rank)))

    #delete row from cache
    def delete_award_from_cache(award, year, category, rank):
        #construct Redis key name
        key_name=award+str(year)+category+str(rank)
        #delete is a no-op if the key does not exist, so no prior get is needed
        redis.delete(key_name)
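The samples above build the Redis key by concatenating the primary key column values directly. One thing to watch for with this approach is that, without a separator, two different primary keys can produce the same string (for example, award `Top1` with year `2021` and award `Top` with year `12021`). The following is a minimal sketch of one way to avoid that. The helper name `make_cache_key`, the `:` separator, and the `book_awards` prefix are illustrative assumptions and are not part of the original sample.

```python
def make_cache_key(award, year, category, rank, prefix="book_awards"):
    """Build a delimited cache key from the primary key columns.

    Hypothetical helper: the ":" separator and the prefix are
    illustrative choices, not something the sample code defines.
    """
    parts = [prefix, award, year, category, rank]
    # Escape backslashes and separators inside values so that two
    # different sets of column values can never yield the same key.
    escaped = (str(p).replace("\\", "\\\\").replace(":", "\\:") for p in parts)
    return ":".join(escaped)


print(make_cache_key("Golden Read", 2021, "sci-fi", 2))
```

If you adopt a helper like this, the same function would need to be used on every write, read, and delete path so that all of them agree on the key format.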

Retrieve a single book award by primary key

The fundamental data retrieval logic with lazy loading can be summarized as follows:

- When your application needs to read data from the database, it checks the cache first to determine whether the data is available. If the data is available (a cache hit), the cached data is returned, and the response is issued to the caller

- If the data isn’t available (a cache miss):

- The database is queried for the data

- The cache is populated with the data that is retrieved from the database, and the data is returned to the caller
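The steps above can be sketched without any external services. The following is a minimal, self-contained illustration that uses an in-memory stand-in for the cache and a plain function as a stand-in for the database. The names `TTLCache` and `lazy_load` are hypothetical and exist only for this sketch. The sample code later in this section uses the real Redis client and Amazon Keyspaces session instead.

```python
import time


class TTLCache:
    """Tiny in-memory stand-in for a cache with per-key expiry."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # The entry has expired: drop it and report a miss.
            del self._data[key]
            return None
        return value

    def set(self, key, value, ex):
        # ex is the time to live in seconds.
        self._data[key] = (value, self._clock() + ex)


def lazy_load(cache, key, load_from_db, ttl_seconds=300):
    """Return (value, source) following the lazy loading steps."""
    value = cache.get(key)
    if value is not None:
        return value, "cache"  # cache hit
    value = load_from_db(key)  # cache miss: query the database
    if value is not None:
        cache.set(key, value, ex=ttl_seconds)  # populate the cache
    return value, "database"
```

The injectable `clock` argument is a design choice for this sketch: it lets you simulate expiry in a test without sleeping for the full TTL.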

The following figure illustrates the data retrieval logic:

One of the most common access patterns assumed in this use case is to request a book award when all the primary key columns are known. With the lazy loading strategy, the application first tries to retrieve the requested data from the cache. If the row is not found in the cache, it fetches the row from the database and caches it for future use. Time to live (TTL) is an integer value that specifies the time until the key expires. Valkey and Redis OSS let you specify this value in seconds or milliseconds. The TTL value is set to 300 seconds in this example, but you can configure it according to your application needs. Additionally, we can use the Python time library to compare the round-trip response time between fetching results from the database and from the cache.

    #Global variables
    keyspace_name="catalog"
    table_name="book_awards"

    #Read a row
    def get_award(award, year, category, rank):
        #construct Redis key name from parameters
        key_name=award+str(year)+category+str(rank)
        start=time.time()
        book_award=redis.get(key_name)
        end=time.time()
        elapsed=(end - start)*1000
        #if row not in cache, fetch it from Keyspaces table
        if not book_award:
            print("Fetched from Cache: ", book_award)
            stmt = SimpleStatement(f"SELECT * FROM {keyspace_name}.{table_name} WHERE award=%s AND year=%s AND category=%s AND rank=%s;")
            start=time.time()
            res=session.execute(stmt, (award, int(year), category, int(rank)))
            end=time.time()
            elapsed=(end - start)*1000
            if not res.current_rows:
                print("Fetched from Database: None")
                return None
            else:
                #lazy-load into cache
                redis.set(key_name, str(res.current_rows), ex=300)
                print("Fetched from Database in: ", elapsed, "ms")
                return res.current_rows
        else:
            print("Fetched from Cache in: ", elapsed, "ms")
            return book_award
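In the sample above, values are cached with `str(...)`, so a cache hit returns a plain string while a database read returns row objects. If your application needs the same structured data on both paths, one option is to serialize to JSON before caching and parse it on the way out. The following is a minimal sketch of that idea. The helper names `award_to_json` and `award_from_json` are hypothetical, and the sketch assumes rows are namedtuple-style objects that expose `_asdict()`, which is the case with the Python driver's default row factory.

```python
import json
from collections import namedtuple


def award_to_json(award):
    """Serialize a dict or a namedtuple-style row to a JSON string."""
    if hasattr(award, "_asdict"):
        award = award._asdict()  # namedtuple rows expose _asdict()
    return json.dumps(award, sort_keys=True)


def award_from_json(payload):
    """Turn a cached JSON string back into a dict; None stays None."""
    return None if payload is None else json.loads(payload)


# Stand-in for a driver row, used only to make this sketch runnable.
Row = namedtuple("Row", ["year", "award", "category", "rank"])
cached = award_to_json(Row(2021, "Golden Read", "sci-fi", 2))
print(award_from_json(cached))
```

With helpers like these, the write path would cache `award_to_json(book_award)` and the read path would return `award_from_json(redis.get(key_name))`. Column types that JSON can't represent directly (for example, timestamps) would need their own conversion.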

Retrieve a result set based on multiple parameters

One of the other common access patterns here is assumed to be “Fetch top n ranks for an award in year x and category y.” For this post, we concatenate the request parameters and use this concatenated string as the Redis key for the list of awards matching the request parameters:

    #Global variables
    keyspace_name="catalog"
    table_name="book_awards"

    #Read one or more rows based on parameters
    def get_awards(parameters):
        #construct key name from parameters
        key_name=""
        for p in parameters:
            key_name=key_name+str(p)
        start=time.time()
        book_awards=redis.lrange(key_name, 0, -1)
        end=time.time()
        elapsed=(end - start)*1000
        #if result set not in cache, fetch it from Keyspaces table
        if not book_awards:
            print("Fetched from Cache: ", book_awards)
            stmt = SimpleStatement(f"SELECT * FROM {keyspace_name}.{table_name} WHERE award=%s AND year=%s AND category=%s AND rank<=%s;")
            start = time.time()
            res=session.execute(stmt, parameters)
            end=time.time()
            elapsed=(end - start)*1000
            if not res.current_rows:
                print("Fetched from Database: None")
                return None
            else:
                #lazy-load into cache
                redis.rpush(key_name, str(res.current_rows))
                redis.expire(key_name, 300)
                print("Fetched from Database in: ", elapsed, "ms")
                return res.current_rows
        else:
            print("Fetched from Cache: ", elapsed, "ms")
            return book_awards
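Note that the sample above pushes the whole result set onto the Redis list as a single string, so a cache hit returns a one-element list containing that string. If you would rather get one list element per row, a possible variation is to serialize each row separately. The following is a minimal sketch under that assumption. The helper names `rows_to_items` and `items_to_rows` are hypothetical, and rows are assumed to be dicts or namedtuple-style objects.

```python
import json
from collections import namedtuple


def rows_to_items(rows):
    """Serialize each row (namedtuple-style or dict) to its own JSON string."""
    items = []
    for row in rows:
        record = row._asdict() if hasattr(row, "_asdict") else dict(row)
        items.append(json.dumps(record))
    return items


def items_to_rows(items):
    """Rebuild a list of dicts from the strings returned by a list read."""
    return [json.loads(item) for item in items]


# Stand-in rows, used only to make this sketch runnable.
Row = namedtuple("Row", ["award", "rank"])
items = rows_to_items([Row("Must Read", 1), Row("Must Read", 2)])
print(items_to_rows(items))

# Assumed usage with the Redis client from the sample (not executed here):
#   redis.rpush(key_name, *rows_to_items(res.current_rows))
#   redis.expire(key_name, 300)
#   awards = items_to_rows(redis.lrange(key_name, 0, -1))
```

Storing one element per row also makes it possible to read only part of the cached result with a narrower `lrange` range, if that suits your access pattern.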

Test cases

The first test case validates caching and lazy load behavior on single-row data insertion, cache hit, cache miss, and data deletion scenarios.

    def test_case_1():
        book_award={"award": "Golden Read",
                    "year": 2021,
                    "category": "sci-fi",
                    "rank": 2,
                    "author": "John Doe",
                    "book_title": "Tomorrow is here",
                    "publisher": "Ostrich books"}

        #insert an award to the DB and cache
        write_award(book_award)
        print("Test Case 1:")
        print("New book award inserted.")

        #cache hit - get award from cache
        print("\n")
        print("Verify cache hit:")
        res=get_award(book_award["award"],
                      book_award["year"],
                      book_award["category"],
                      book_award["rank"])
        print(res)

        #let the cache entry expire
        print("\n")
        print("Waiting for cached entry to expire, sleeping for 300 seconds...")
        time.sleep(300)

        #cache miss - get award from DB and lazy load to cache
        print("\n")
        print("Entry expired in cache, award expected to be fetched from DB:")
        res=get_award(book_award["award"],
                      book_award["year"],
                      book_award["category"],
                      book_award["rank"])
        print(res)

        #cache hit - get award from cache
        print("\n")
        print("Verify that award is lazy loaded into cache:")
        res=get_award(book_award["award"],
                      book_award["year"],
                      book_award["category"],
                      book_award["rank"])
        print(res)

        #delete the award from cache and DB
        print("\n")
        print("Deleting book award.")
        delete_award(book_award["award"],
                     book_award["year"],
                     book_award["category"],
                     book_award["rank"])

        #confirm the award was deleted from cache and DB
        print("\n")
        print("Verify that the award was deleted from cache and DB:")
        res=get_award(book_award["award"],
                      book_award["year"],
                      book_award["category"],
                      book_award["rank"])
        if res:
            print(res)

Running this test case generates the following output, as expected. We can observe submillisecond round-trip latency for data fetched from cache, and millisecond latency can be observed for a round-trip to the database.

Test Case 1:

New book award inserted.

Verify cache hit:

Fetched from Cache in: 0.3809928894042969 ms

{'award': 'Golden Read', 'year': 2021, 'category': 'sci-fi', 'rank': 2, 'author': 'John Doe', 'book_title': 'Tomorrow is here', 'publisher': 'Ostrich books'}

Waiting for cached entry to expire, sleeping for 300 seconds...

Entry expired in cache, award expected to be fetched from DB:

Fetched from Cache: None

Fetched from Database in: 14.202594757080078 ms

[Row(year=2021, award='Golden Read', category='sci-fi', rank=2, author='John Doe', book_title='Tomorrow is here', publisher='Ostrich books')]

Verify that award is lazy loaded into cache:

Fetched from Cache in: 0.4191398620605469 ms

[Row(year=2021, award='Golden Read', category='sci-fi', rank=2, author='John Doe', book_title='Tomorrow is here', publisher='Ostrich books')]

Deleting book award.

Verify that the award was deleted from cache and DB:

Fetched from Cache: None

Fetched from Database: None
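The latencies in this output were measured by wrapping each call with `time.time()`. As an optional variation, the timing logic can be factored into a small helper that uses `time.perf_counter()`, which is intended for measuring short durations. The helper name `timed_ms` is hypothetical and is not part of the original sample.

```python
import time


def timed_ms(func, *args, **kwargs):
    """Call func and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms


# Example with a stand-in function. In the sample, this could wrap
# redis.get(key_name) or session.execute(stmt, params) instead.
result, elapsed_ms = timed_ms(sum, [1, 2, 3])
print(f"result={result}, elapsed={elapsed_ms:.3f} ms")
```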

The second test case validates caching and lazy load behavior for fetching a result set based on multiple parameters. We have some book award data preloaded in the Amazon Keyspaces table, as described in the Amazon Keyspaces table schema section of this post. This test case deals with the preloaded data instead of inserting new rows in the database.

    def test_case_2():
        print("Test Case 2:")
        print("Get top 3 Must Read book awards for year 2021 in the Sci-Fi category")
        print("\n")
        res=get_awards(["Must Read", 2021, "Sci-Fi", 3])
        print(res)

        #cache-hit - get awards from cache
        print("\n")
        print("Verify cache hit on subsequent query with same parameters:")
        res=get_awards(["Must Read", 2021, "Sci-Fi", 3])
        print(res)

        #let the cache entry expire
        print("\n")
        print("Waiting for cached entry to expire, sleeping for 300 seconds...")
        time.sleep(300)

        #cache miss - get award from DB and lazy load to cache
        print("\n")
        print("Entry expired in cache, awards expected to be fetched from DB.")
        res=get_awards(["Must Read", 2021, "Sci-Fi", 3])
        print(res)

        #cache hit - get award from cache
        print("\n")
        print("Verify that awards are lazy loaded into cache:")
        res=get_awards(["Must Read", 2021, "Sci-Fi", 3])
        print(res)

You can observe the workflow for lazy loading and caching in action. The first call does not find the result in the cache, so it fetches data from the Amazon Keyspaces table underlying the cache and loads it into the cache. The second call fetches the results from the cache. After the cached result expires, results are fetched again from the database and lazy loaded into the cache for fast retrieval on subsequent get_awards calls with the same parameters. Submillisecond round-trip latency can be observed for data fetched from the cache, and millisecond latency can be observed for a round trip to the database.

Test Case 2:

Get top 3 Must Read book awards for year 2021 in the Sci-Fi category

Fetched from Cache: []

Fetched from Database in: 21.03400230407715 ms

[Row(year=2021, award='Must Read', category='Sci-Fi', rank=1, author='Polly Gon', book_title='The mystery of the 7th dimension', publisher='PublishGo'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=2, author='Kai K', book_title='Time travellers guide', publisher='Publish123'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=3, author='Mick Key', book_title='Key to the teleporter', publisher='Penguins')]

Verify cache hit on subsequent query with same parameters:

Fetched from Cache: 0.36835670471191406 ms

["[Row(year=2021, award='Must Read', category='Sci-Fi', rank=1, author='Polly Gon', book_title='The mystery of the 7th dimension', publisher='PublishGo'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=2, author='Kai K', book_title='Time travellers guide', publisher='Publish123'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=3, author='Mick Key', book_title='Key to the teleporter', publisher='Penguins')]"]

Waiting for cached entry to expire, sleeping for 300 seconds...

Entry expired in cache, awards expected to be fetched from DB.

Fetched from Cache: []

Fetched from Database in: 32.64594078063965 ms

[Row(year=2021, award='Must Read', category='Sci-Fi', rank=1, author='Polly Gon', book_title='The mystery of the 7th dimension', publisher='PublishGo'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=2, author='Kai K', book_title='Time travellers guide', publisher='Publish123'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=3, author='Mick Key', book_title='Key to the teleporter', publisher='Penguins')]

Verify that awards are lazy loaded into cache:

Fetched from Cache: 0.3757476806640625 ms

["[Row(year=2021, award='Must Read', category='Sci-Fi', rank=1, author='Polly Gon', book_title='The mystery of the 7th dimension', publisher='PublishGo'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=2, author='Kai K', book_title='Time travellers guide', publisher='Publish123'), Row(year=2021, award='Must Read', category='Sci-Fi', rank=3, author='Mick Key', book_title='Key to the teleporter', publisher='Penguins')]"]

Example script

You can find the sample script and test functions in this GitHub repository.

Considerations

This post shows you a basic implementation to cache results of the two most common access patterns against the book awards data. There are more options to cache data based on the nature of your access patterns:

- Cache results based on partition key values only (filtering on the client side) – Rather than handling all request parameters in the application, you may choose to cache the result set based on partition key columns only (for example, based on award and year). All the other filtering is handled on the client side. This may be useful when only a handful of rows with this partition key value need to be discarded from the result set across different queries. You cache the result set only once and handle different clustering column values or filter criteria on the client side.

- Sort all key parameters in an order (for example, ascending) and hash them – You can use the hash value as the key for the cached result. When the same key parameters are requested again, even in a different order, the hash value is the same and the cache returns the needed result set. This option may be helpful when queries are dynamic and key conditions may be out of order across different queries.

- Sort all query parameters (key conditions and filters) in an order (for example, ascending) and hash them – You can use the hash value as the key for the cached result. When the same query parameters are requested in a different order, the hash value is the same and the cache returns the needed result set. This option may be helpful when query parameters and filters are dynamic and when parameters are out of order across different queries.
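The sort-and-hash options can be sketched as follows. This is a minimal illustration rather than a definitive implementation: the helper name `hashed_cache_key`, the `book_awards` prefix, the use of JSON as the canonical form, and the choice of SHA-256 are all assumptions made for this example.

```python
import hashlib
import json


def hashed_cache_key(params, prefix="book_awards"):
    """Build a cache key that does not depend on parameter order.

    params is a dict that maps query parameter names to values.
    """
    # sort_keys=True yields the same JSON text regardless of the order
    # in which the parameters were supplied.
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"), default=str)
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return f"{prefix}:{digest}"


a = hashed_cache_key({"award": "Must Read", "year": 2021, "category": "Sci-Fi"})
b = hashed_cache_key({"category": "Sci-Fi", "year": 2021, "award": "Must Read"})
print(a == b)
```

Because the key is a fixed-length digest, it stays compact even when queries carry many parameters or long filter values. The trade-off is that the key is no longer human-readable when you inspect the cache.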

Conclusion

In this post, we showed you how to use Amazon ElastiCache as a cache for read-intensive and cost-sensitive applications that store data in Amazon Keyspaces and that may need submillisecond read response times. Amazon ElastiCache gives you flexibility to design your custom caching strategy based on the nature of your queries. You can also set up your own cache hydration and eviction logic along with suitable TTL values.

For more information, refer to Designing and managing your own ElastiCache cluster.

About the Author

Juhi Patil is a London-based NoSQL Specialist Solutions Architect with a background in big data technologies. In her current role, she helps customers design, evaluate, and optimize their Amazon Keyspaces and Amazon DynamoDB based solutions.
