Generative artificial intelligence (AI) chatbots use advanced models such as transformers to generate real-time, context-aware responses, making them highly effective for applications such as customer service and personal assistants. Unlike traditional rule-based chatbots, generative AI chatbots can draw on previous interactions, such as stored chat history, to improve the accuracy and relevance of their responses over time.

An effective chatbot system requires several key components: real-time response generation, scalability to handle varying loads, efficient data retrieval for quick access to past interactions, and the ability to store and manage user-specific metadata. These requirements make sure that the chatbot can deliver accurate, personalized, and timely responses.

Amazon DynamoDB is ideal for storing chat history and metadata due to its scalability and low latency. DynamoDB can efficiently store chat history, allowing quick access to past interactions. User-specific metadata, such as preferences and session information, can be stored to personalize responses and manage active sessions, enhancing the overall chatbot experience.

In this post, we explore how to design an optimal schema for chatbots, whether you’re building a small proof of concept application or deploying a large-scale production system.

Access pattern definitions

Defining your access patterns before designing a data model for DynamoDB is crucial because it makes sure your database schema is optimized for the specific queries your application will perform. By understanding how your chatbot will access and use data (for example, retrieving chat history, updating user profiles, or managing sessions), you can design a schema that minimizes latency and maximizes throughput. This approach makes sure your chatbot continues to perform efficiently as usage scales.

The following table summarizes the access patterns and APIs we discuss in this post.

| API | Description | Request Type | Estimated RPS |
| --- | --- | --- | --- |
| ListUserChats | Get a list of chat sessions for a user | Read | 100 |
| GetChatMessages | Get messages for a conversation, with messages ordered in ascending order | Read | 200 |
| CreateChat | Create a new conversation | Write | 10 |
| PutMessage | Add a message to a chat, from both user and bot | Write | 1000 |
| EditMessage | Edit a message, user-only messages | Write | 5 |
| DeleteMessage | Delete a message, user-only messages | Write | 4 |
| DeleteChat | Remove an entire conversation | Write | 10 |

Data modeling

Our use case will need to store two entities: conversation metadata and a list of conversation messages. In relational database systems, you might choose to store these two entities in different tables and join them during requests. However, with DynamoDB, we model the data in such a way that we store data relevant to a single request in the same table, in a denormalized fashion. This approach maintains the high performance and responsiveness expected from a chatbot system.

We use NoSQL Workbench to design our data model, which offers an intuitive interface for schema design, tools for visualizing and optimizing access patterns, and the ability to simulate interactions before deployment. This makes sure our DynamoDB schema is optimized for performance and scalability, tailored to our chatbot’s specific needs.

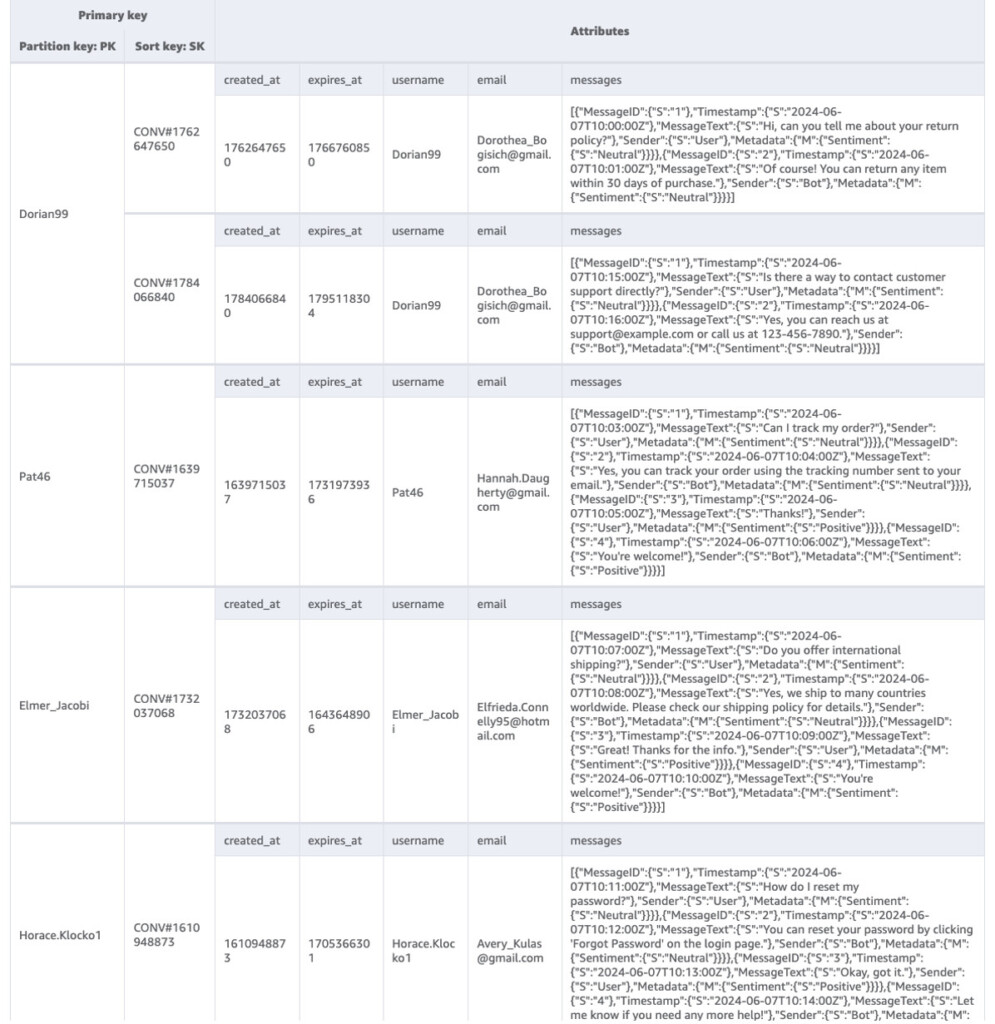

The following table illustrates the data model in NoSQL Workbench that would support the preceding access patterns list and APIs.

Let’s break down the preceding data model with sample data and each item in DynamoDB.

The following is the ChatApp schema:

Our initial data model uses username as the partition key (PK) and created_at as the sort key (SK). This structure makes sure interactions from a specific user are stored in chronological order, facilitating efficient query operations. Each conversation is stored as a single item in the table, with a list attribute that holds the messages exchanged during the conversation. This approach allows us to efficiently retrieve and manage entire conversation histories, making sure context is maintained and accessible for each user interaction. This pattern can also fulfill our access pattern requirements.

However, although this data model may seem convenient initially, it doesn’t scale well for two main reasons:

- There is a risk of exceeding the maximum item size for DynamoDB, which is 400 KB, because the list of messages per conversation is potentially unbounded. As users continue to interact with the chatbot, the accumulated messages can quickly surpass this limit.

- Each additional message increases the item’s size, making subsequent writes increasingly costly. As the item grows, the cost of updating the item with each new message also grows, impacting both performance and expense. For example, if you have a conversation item that is 300 KB in size, and you send the chatbot a 2-byte message such as “hi”, the write consumes about 300 WCUs, because a write is billed at 1 WCU per 1 KB of the entire item, not just the bytes that changed.
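The write-cost comparison in the second point can be worked through with a short sketch. It assumes standard (non-transactional) writes billed at 1 WCU per 1 KB of item size, rounded up, with 1 KB taken as 1,024 bytes. The function name and the 200-byte size of a standalone message item are illustrative assumptions.

```python
import math

WCU_UNIT_BYTES = 1024  # assumed billing unit: 1 WCU per 1 KB, rounded up


def write_capacity_units(item_size_bytes: int) -> int:
    """Estimate the WCUs consumed by one standard write of an item."""
    return max(1, math.ceil(item_size_bytes / WCU_UNIT_BYTES))


# Appending "hi" to a conversation stored as one 300 KB item rewrites the whole item.
single_item_cost = write_capacity_units(300 * 1024)

# Writing "hi" as its own small item (keys plus a few attributes, assumed ~200 bytes).
separate_item_cost = write_capacity_units(200)

print(f"single large item: {single_item_cost} WCU")
print(f"one item per message: {separate_item_cost} WCU")
```

At the estimated 1,000 PutMessage requests per second, the gap between these two numbers is multiplied on every write, which is why the single-item layout becomes expensive quickly.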

Vertical partitioning

To address the issue of large items, you can use vertical partitioning by breaking them into smaller chunks of data and associating relevant pieces using the same partition key. You use a sort key string to identify and organize the related information. This approach allows you to group multiple items under a single partition key, effectively creating an item collection.

This data model also uses username as the partition key, so the relationship remains the same, and one user has many conversations. In the following table, user Dorian99 has two conversations, one highlighted in green, the other in orange.

Our new data model now has two distinct entities, a metadata item and a message item.

The following is the conversation metadata item:

The following is the conversation message item:

With this data model, each message is stored as its own item, so you can store up to 400 KB per message. If you exceed this limit, consider techniques discussed in Best practices for storing large items and attributes, such as compression or offloading the item to Amazon Simple Storage Service (Amazon S3) while keeping the metadata in DynamoDB. Storing messages individually also means you pay only for the item you write, avoiding the costs associated with updating potentially large items. For example, writing a simple “hi” message to the chatbot will cost just 1 WCU, regardless of the total data size of the entire conversation, because the conversation is distributed across multiple smaller items.

To better satisfy our access patterns, we chose to prepend the conversation message item sort key with CHAT# instead of CONV#, which we used for the metadata item. This distinction was necessary to efficiently support our primary access pattern of listing conversations for a user. If we had used CONV# for both conversation metadata and message items, querying for user conversations would have returned both types of items, resulting in unnecessary data retrieval.
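The effect of the two prefixes can be illustrated without touching DynamoDB. The following sketch assumes sort keys of the form `CONV#<conversation_id>` for metadata and `CHAT#<conversation_id>#<message_id>` for messages; the exact layout after each prefix, the helper names, and the sample IDs are assumptions made for illustration. The in-memory `begins_with` helper only mimics the DynamoDB key condition of the same name.

```python
def conversation_sk(conversation_id: str) -> str:
    """Sort key for a conversation metadata item (assumed format)."""
    return f"CONV#{conversation_id}"


def message_sk(conversation_id: str, message_id: str) -> str:
    """Sort key for a conversation message item (assumed format)."""
    return f"CHAT#{conversation_id}#{message_id}"


def begins_with(sort_keys, prefix):
    """In-memory stand-in for a begins_with key condition on the sort key."""
    return [sk for sk in sort_keys if sk.startswith(prefix)]


# A toy item collection for one user, kept in sort-key order as DynamoDB would.
item_collection = sorted([
    conversation_sk("c1"),
    message_sk("c1", "01A"),
    message_sk("c1", "01B"),
    conversation_sk("c2"),
    message_sk("c2", "01C"),
])

# Listing conversations touches only metadata items.
conversations = begins_with(item_collection, "CONV#")

# Fetching one conversation touches only its messages; the trailing '#'
# keeps conversation "c1" from also matching a conversation named "c10".
c1_messages = begins_with(item_collection, "CHAT#c1#")

print(conversations)
print(c1_messages)
```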

One important decision for the conversation message item was using a Universally Unique Lexicographically Sortable Identifier (ULID) for the messageId. A ULID is a 128-bit identifier, compatible with UUID, that combines a 48-bit millisecond timestamp with 80 bits of randomness (about 1.21e+24 unique IDs per millisecond). It is encoded as a compact 26-character string in Crockford’s base32, which is case insensitive and URL safe, and it sorts lexicographically by creation time, with a monotonic sort order to handle IDs generated in the same millisecond. This allows you to render messages in ascending order on the chatbot frontend while making sure no two messages share the same ID.
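To make the structure of a ULID concrete, here is a minimal generator using only the Python standard library. It is a sketch rather than a full implementation: it encodes a 48-bit millisecond timestamp and 80 random bits in Crockford's base32, but it does not implement the monotonic increment for IDs created within the same millisecond. The function names are illustrative; a production system would more likely use a maintained ULID library.

```python
import os
import time

# Crockford's base32 alphabet: no I, L, O, or U, and already in ASCII order,
# so comparing encoded strings matches comparing the underlying numbers.
CROCKFORD_ALPHABET = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"


def encode_base32(value: int, length: int) -> str:
    """Encode a non-negative integer as a fixed-length Crockford base32 string."""
    chars = []
    for _ in range(length):
        chars.append(CROCKFORD_ALPHABET[value & 0x1F])
        value >>= 5
    return "".join(reversed(chars))


def new_ulid(timestamp_ms=None) -> str:
    """Return a 26-character ULID: 10 timestamp characters + 16 random characters."""
    if timestamp_ms is None:
        timestamp_ms = time.time_ns() // 1_000_000
    randomness = int.from_bytes(os.urandom(10), "big")  # 80 random bits
    return encode_base32(timestamp_ms, 10) + encode_base32(randomness, 16)


earlier = new_ulid(timestamp_ms=1_700_000_000_000)
later = new_ulid(timestamp_ms=1_700_000_000_001)
print(earlier, later, earlier < later)
```

Because the timestamp occupies the leading characters, an ID created in a later millisecond always sorts after one created earlier, which is what lets a sort key built on the ULID return messages in chronological order.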

Data storage

We have designed an efficient data model that supports thousands of users, each potentially having tens or hundreds of conversations. Given the expected growth rate of our data, with conversations and associated messages accumulating rapidly, we implemented the DynamoDB Time-to-Live (TTL) feature. This makes sure items, including conversations and messages, expire after 90 days and are then deleted automatically in the background (typically within a few days of expiring), helping you manage storage costs and maintain optimal performance without consuming write capacity for the deletions.
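As a sketch of how the 90-day retention could be wired up: TTL works from a Number attribute holding an expiry time in epoch seconds, and the feature is enabled per table through the `UpdateTimeToLive` API. The attribute name `expires_at` and the helper names below are assumptions, not part of the schema described above.

```python
TTL_ATTRIBUTE = "expires_at"  # hypothetical attribute name
RETENTION_DAYS = 90
SECONDS_PER_DAY = 24 * 60 * 60


def expiry_epoch_seconds(created_at_epoch: int, retention_days: int = RETENTION_DAYS) -> int:
    """Expiry timestamp (epoch seconds) to store on each item."""
    return created_at_epoch + retention_days * SECONDS_PER_DAY


def ttl_settings(table_name: str) -> dict:
    """Arguments for the DynamoDB UpdateTimeToLive API."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {"Enabled": True, "AttributeName": TTL_ATTRIBUTE},
    }


def enable_ttl(table_name: str) -> None:
    """Enable TTL on the table; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the helpers above run without AWS access

    boto3.client("dynamodb").update_time_to_live(**ttl_settings(table_name))


print(expiry_epoch_seconds(1_700_000_000))
```

Each conversation and message item would then carry `expires_at` set from its creation time, so a conversation and its messages age out together.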

Data access patterns

Having established an optimal data model, let’s explore how we can implement our access patterns using Python and Boto3 with the following example code.

The following is example code for ListUserChats:
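A minimal sketch of ListUserChats, assuming the key attributes are named `PK` and `SK` and that metadata sort keys start with `CONV#`. The `table` argument is expected to be a Boto3 `Table` resource, for example `boto3.resource("dynamodb").Table("ChatApp")`; the function names are illustrative.

```python
def list_user_chats_params(username: str) -> dict:
    """Build Query arguments that match only conversation metadata items."""
    return {
        "KeyConditionExpression": "PK = :pk AND begins_with(SK, :prefix)",
        "ExpressionAttributeValues": {":pk": username, ":prefix": "CONV#"},
    }


def list_user_chats(table, username: str) -> list:
    """Return all conversation metadata items for a user, following pagination."""
    params = list_user_chats_params(username)
    items = []
    while True:
        response = table.query(**params)
        items.extend(response.get("Items", []))
        last_key = response.get("LastEvaluatedKey")
        if last_key is None:
            return items
        # A Query page is capped at 1 MB, so continue from where it stopped.
        params["ExclusiveStartKey"] = last_key
```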

The following is example code for GetChatMessages:
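A minimal sketch of GetChatMessages, assuming key attributes named `PK` and `SK` and message sort keys of the form `CHAT#<conversation_id>#<message_id>` (the layout after `CHAT#` is an assumption). It returns one page at a time so a frontend can load long conversations incrementally; `table` is expected to be a Boto3 `Table` resource.

```python
def get_chat_messages_params(username, conversation_id, limit=50, start_key=None) -> dict:
    """Build Query arguments for one page of messages in a conversation."""
    params = {
        "KeyConditionExpression": "PK = :pk AND begins_with(SK, :prefix)",
        "ExpressionAttributeValues": {
            ":pk": username,
            ":prefix": f"CHAT#{conversation_id}#",
        },
        # Ascending sort-key order; with ULID message IDs this is oldest first.
        "ScanIndexForward": True,
        "Limit": limit,
    }
    if start_key is not None:
        params["ExclusiveStartKey"] = start_key
    return params


def get_chat_messages(table, username, conversation_id, limit=50, start_key=None):
    """Return (messages, next_start_key); next_start_key is None on the last page."""
    response = table.query(
        **get_chat_messages_params(username, conversation_id, limit, start_key)
    )
    return response.get("Items", []), response.get("LastEvaluatedKey")
```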

The following is example code for CreateChat:
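A minimal sketch of CreateChat, assuming key attributes named `PK` and `SK`. The non-key attributes (`conversation_id`, `title`, `created_at`, `expires_at`) and the use of a random UUID for the conversation ID are illustrative assumptions. `table` is expected to be a Boto3 `Table` resource.

```python
import time
import uuid

RETENTION_SECONDS = 90 * 24 * 60 * 60  # 90-day TTL window


def create_chat_params(username, title, conversation_id=None, now=None) -> dict:
    """Build PutItem arguments for a new conversation metadata item."""
    conversation_id = conversation_id or uuid.uuid4().hex
    now = int(time.time()) if now is None else now
    return {
        "Item": {
            "PK": username,
            "SK": f"CONV#{conversation_id}",
            "conversation_id": conversation_id,
            "title": title,
            "created_at": now,
            "expires_at": now + RETENTION_SECONDS,
        },
        # Fail instead of silently overwriting an existing conversation.
        "ConditionExpression": "attribute_not_exists(PK)",
    }


def create_chat(table, username, title) -> str:
    """Create the conversation and return its ID."""
    params = create_chat_params(username, title)
    table.put_item(**params)
    return params["Item"]["conversation_id"]
```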

The following is example code for PutMessage:
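A minimal sketch of PutMessage, assuming key attributes named `PK` and `SK`, a message sort key of the form `CHAT#<conversation_id>#<message_id>`, and a caller-supplied ULID as `message_id`. The attribute names `sender`, `message`, and `created_at` are illustrative. `table` is expected to be a Boto3 `Table` resource.

```python
import time

VALID_SENDERS = {"user", "bot"}


def put_message_params(username, conversation_id, message_id, sender, text, now=None) -> dict:
    """Build PutItem arguments for a single message item."""
    if sender not in VALID_SENDERS:
        raise ValueError(f"sender must be one of {sorted(VALID_SENDERS)}")
    now = int(time.time()) if now is None else now
    return {
        "Item": {
            "PK": username,
            # message_id is expected to be a ULID so items sort chronologically.
            "SK": f"CHAT#{conversation_id}#{message_id}",
            "sender": sender,
            "message": text,
            "created_at": now,
        }
    }


def put_message(table, username, conversation_id, message_id, sender, text) -> None:
    """Write one message; the cost depends only on this item's size."""
    table.put_item(
        **put_message_params(username, conversation_id, message_id, sender, text)
    )
```

Because every message is a separate item, this is the write that runs at the highest estimated rate (1,000 RPS) and it stays cheap regardless of how long the conversation becomes.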

The following is example code for EditMessage:
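A minimal sketch of EditMessage, assuming key attributes named `PK` and `SK` and hypothetical `sender`, `message`, and `edited_at` attributes on message items. The condition expression enforces the user-only rule from the access pattern table. `table` is expected to be a Boto3 `Table` resource.

```python
import time


def edit_message_params(username, conversation_id, message_id, new_text, now=None) -> dict:
    """Build UpdateItem arguments that edit a message only if a user wrote it."""
    now = int(time.time()) if now is None else now
    return {
        "Key": {"PK": username, "SK": f"CHAT#{conversation_id}#{message_id}"},
        "UpdateExpression": "SET #text = :text, #edited = :now",
        # The item must already exist and must not be a bot message.
        "ConditionExpression": "attribute_exists(PK) AND #sender = :user",
        # Placeholders avoid any clash with DynamoDB reserved words.
        "ExpressionAttributeNames": {
            "#text": "message",
            "#edited": "edited_at",
            "#sender": "sender",
        },
        "ExpressionAttributeValues": {":text": new_text, ":now": now, ":user": "user"},
        "ReturnValues": "ALL_NEW",
    }


def edit_message(table, username, conversation_id, message_id, new_text) -> dict:
    """Apply the edit and return the updated item."""
    response = table.update_item(
        **edit_message_params(username, conversation_id, message_id, new_text)
    )
    return response["Attributes"]
```

If the message does not exist or was written by the bot, DynamoDB rejects the update with a `ConditionalCheckFailedException`, which the caller can translate into a not-found or forbidden response.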

The following is example code for DeleteMessage:
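A minimal sketch of DeleteMessage, assuming key attributes named `PK` and `SK` and a hypothetical `sender` attribute on message items. As with editing, a condition restricts the operation to user-authored messages. `table` is expected to be a Boto3 `Table` resource.

```python
def delete_message_params(username, conversation_id, message_id) -> dict:
    """Build DeleteItem arguments that remove a message only if a user wrote it."""
    return {
        "Key": {"PK": username, "SK": f"CHAT#{conversation_id}#{message_id}"},
        # Fails for bot messages and for items that do not exist.
        "ConditionExpression": "#sender = :user",
        "ExpressionAttributeNames": {"#sender": "sender"},
        "ExpressionAttributeValues": {":user": "user"},
    }


def delete_message(table, username, conversation_id, message_id) -> None:
    """Delete a single user message from a conversation."""
    table.delete_item(**delete_message_params(username, conversation_id, message_id))
```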

The following is example code for DeleteChat:
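A minimal sketch of DeleteChat, assuming key attributes named `PK` and `SK`, a metadata sort key of `CONV#<conversation_id>`, and message sort keys starting with `CHAT#<conversation_id>#`. It collects the keys of the metadata item and every message item, then removes them with the Boto3 `batch_writer`, which groups deletes into `BatchWriteItem` requests. `table` is expected to be a Boto3 `Table` resource.

```python
def conversation_keys(table, username, conversation_id):
    """Yield the primary key of the metadata item and of every message item."""
    yield {"PK": username, "SK": f"CONV#{conversation_id}"}
    params = {
        "KeyConditionExpression": "PK = :pk AND begins_with(SK, :prefix)",
        "ExpressionAttributeValues": {
            ":pk": username,
            ":prefix": f"CHAT#{conversation_id}#",
        },
        "ProjectionExpression": "PK, SK",  # only the keys are needed
    }
    while True:
        response = table.query(**params)
        for item in response.get("Items", []):
            yield {"PK": item["PK"], "SK": item["SK"]}
        last_key = response.get("LastEvaluatedKey")
        if last_key is None:
            return
        params["ExclusiveStartKey"] = last_key


def delete_chat(table, username, conversation_id) -> int:
    """Delete a conversation and its messages; return the number of delete requests."""
    deleted = 0
    with table.batch_writer() as batch:
        for key in conversation_keys(table, username, conversation_id):
            batch.delete_item(Key=key)
            deleted += 1
    return deleted
```

Batch deletes are not atomic, so a reader could briefly see a partially deleted conversation. Requesting the metadata item's deletion first is one way to hide the conversation from ListUserChats early, although the batch does not guarantee the order in which deletes are applied.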

Summary

Designing an optimal schema for generative AI chatbots using DynamoDB is important for providing efficient performance, scalability, and cost management. By defining access patterns upfront and employing strategies like vertical partitioning and TTL, you can effectively handle large volumes of chat history and metadata. This approach not only enhances the chatbot’s responsiveness and personalization, but also provides a seamless and scalable user experience.

Ready to take your generative AI chatbot to the next level? Start taking advantage of the power of DynamoDB today. Dive deeper into our comprehensive documentation and explore the full capabilities of NoSQL Workbench for DynamoDB to design and optimize your chatbot’s data model. Transform your customer interactions with real-time, context-aware responses by integrating DynamoDB into your generative AI solutions.

About the Author

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads using the capabilities of DynamoDB.
