In today’s data-driven world, data analysts play a crucial role across many domains. Businesses rely on data to inform strategy, improve operations, and gain a competitive edge, and data analysts make this possible by turning complex raw data into clear, useful insights that support decision-making. They handle the whole data analysis cycle: discovering and collecting relevant data, preparing it for analysis, interpreting the findings, and formulating recommendations. Whether the goal is identifying the best customer segments, increasing operational efficiency, or forecasting future trends, this work helps firms answer specific questions, find patterns, and understand the trends that drive important outcomes.

Data analysts are in high demand, with opportunities opening up in government, business, healthcare, and finance. As organizations depend more and more on data to make faster, more accurate, and better-informed decisions, the field of data analytics keeps growing. Many businesses now treat data analytics as a core function, which creates steady demand for qualified workers and a bright outlook for aspiring analysts.

This article covers what it means to be a data analyst, the necessary tools and skills, the top learning resources, and practical strategies for building a successful career in this rewarding field.

Table of contents

- What Do Data Analysts Do?

- Skills Required for Success

- Online Courses to Become Data Analysts

- Key Tools and Technologies for Data Analysis

- Building Practical Experience in Data Analytics

- Projects and Portfolio Development

- Kaggle Competitions and Other Platforms

- Networking and Community Engagement

- Job Search and Career Advancement Tips

- Websites for Interview Preparation

- Conclusion

Understanding the Role of a Data Analyst

What Do Data Analysts Do?

A data analyst’s role can vary greatly depending on how far an organization has adopted data-driven decision-making. Fundamentally, though, the job is to turn raw data into insights that inform business decisions. To answer specific business questions, this process usually involves developing and managing data systems, collecting and cleaning data, analyzing it statistically, and interpreting the findings.
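The collect, clean, and analyze sequence can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the records, the field names `region` and `revenue`, and the helper functions `clean` and `summarize` are hypothetical, and a real project would more likely use a library such as pandas.

```python
import statistics

# Hypothetical raw sales records, as they might arrive from a CSV export:
# inconsistent casing, stray whitespace, a missing value, and a duplicate row.
raw_records = [
    {"region": " North ", "revenue": "1200"},
    {"region": "south", "revenue": "950"},
    {"region": "North", "revenue": ""},     # missing revenue
    {"region": "South", "revenue": "950"},  # duplicate once casing is normalized
    {"region": "East", "revenue": "1430"},
]

def clean(records):
    """Normalize text fields, drop rows with missing revenue, and de-duplicate."""
    seen = set()
    cleaned = []
    for row in records:
        region = row["region"].strip().title()
        revenue_text = row["revenue"].strip()
        if not revenue_text:
            continue  # skip incomplete rows rather than guessing a value
        key = (region, revenue_text)
        if key in seen:
            continue  # drop exact duplicates
        seen.add(key)
        cleaned.append({"region": region, "revenue": float(revenue_text)})
    return cleaned

def summarize(records):
    """Return simple descriptive statistics for the revenue column."""
    values = [row["revenue"] for row in records]
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
    }

cleaned = clean(raw_records)
summary = summarize(cleaned)
print(summary)
```

Dropping incomplete rows is only one choice; depending on the question, an analyst might instead impute missing values and document that decision.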

Major responsibilities include creating and managing databases to ensure data integrity, identifying and obtaining relevant data sources, and applying statistical tools to uncover significant trends and patterns. Analysts frequently build comprehensive reports and dashboards that highlight these insights, helping stakeholders make well-informed decisions based on current market or operational trends.
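One common way to protect data integrity is to let the database enforce it through constraints. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database; the `customers` table and its columns are hypothetical, and production systems would typically use a server database, though the same constraint ideas carry over.

```python
import sqlite3

# Hypothetical table whose constraints make the database reject bad rows.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        id INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE,
        age INTEGER CHECK (age BETWEEN 0 AND 120)
    )
""")
conn.execute("INSERT INTO customers (email, age) VALUES (?, ?)", ("ana@example.com", 34))

bad_rows = [
    ("ana@example.com", 29),   # duplicate email violates UNIQUE
    (None, 40),                # missing email violates NOT NULL
    ("ben@example.com", 250),  # implausible age violates CHECK
]
rejected = []
for email, age in bad_rows:
    try:
        conn.execute("INSERT INTO customers (email, age) VALUES (?, ?)", (email, age))
    except sqlite3.IntegrityError as exc:
        rejected.append((email, str(exc)))  # keep the reason for later review

stored = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(stored, len(rejected))
conn.close()
```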

Data analysts also work with programmers, engineers, and company executives to identify opportunities for process improvements, recommend system changes, and maintain data governance standards. For example, for a marketing campaign, a data analyst can examine the demographics of the people the campaign reaches to check whether it is hitting its target audience, measure its effectiveness, and help decide whether to invest in similar campaigns in the future.
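The campaign example can be made concrete with a per-segment conversion rate, one simple measure of whether a campaign reaches and persuades its intended audience. The log below and the function `conversion_by_segment` are hypothetical; real campaign data would come from an ad platform or CRM export.

```python
from collections import defaultdict

# Hypothetical campaign log: one entry per person who saw the ad,
# recording their age group and whether they converted.
impressions = [
    ("18-24", True), ("18-24", False), ("18-24", False), ("18-24", True),
    ("25-34", True), ("25-34", True), ("25-34", False),
    ("35-44", False), ("35-44", False), ("35-44", True),
]

def conversion_by_segment(rows):
    """Return {segment: (reach, conversion_rate)} for each demographic segment."""
    reach = defaultdict(int)
    conversions = defaultdict(int)
    for segment, converted in rows:
        reach[segment] += 1
        conversions[segment] += int(converted)
    return {seg: (reach[seg], conversions[seg] / reach[seg]) for seg in reach}

results = conversion_by_segment(impressions)
for segment, (n, rate) in sorted(results.items()):
    print(f"{segment}: reached {n}, conversion rate {rate:.0%}")

# Segment with the highest conversion rate.
best = max(results, key=lambda seg: results[seg][1])
print("Best-performing segment:", best)
```

With samples this small the differences are not statistically meaningful; in practice an analyst would check segment sizes before recommending where to spend.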

Skills Required for Success

A successful data analyst needs strong analytical skills and technical knowledge. Proficiency in programming and query languages such as Python, R, and SQL is needed to query and manipulate data. Spreadsheet programs like Microsoft Excel are frequently used for statistical analysis, and data visualization tools like Tableau and Power BI let analysts present their findings visually. A solid mathematical and statistical foundation is required to interpret data accurately, make projections, and draw sound conclusions.
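As a small illustration of querying data with SQL from Python, the sketch below loads a hypothetical `orders` table into an in-memory SQLite database (via the standard-library `sqlite3` module) and runs a typical aggregate query. The table name, columns, and values are made up for the example.

```python
import sqlite3

# In-memory SQLite database standing in for a company data store (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 200.0), ("West", 50.0), ("South", 40.0)],
)

# Typical analyst query: order count, total, and average order value per region.
query = """
    SELECT region,
           COUNT(*)              AS n_orders,
           SUM(amount)           AS total,
           ROUND(AVG(amount), 2) AS avg_order
    FROM orders
    GROUP BY region
    ORDER BY total DESC
"""
rows = conn.execute(query).fetchall()
for region, n_orders, total, avg_order in rows:
    print(region, n_orders, total, avg_order)
conn.close()
```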

Equally crucial are soft and leadership skills, such as problem-solving and clear communication, which enable analysts to think strategically and explain their findings to non-technical stakeholders. For example, project managers rely on data analysts to monitor key project indicators, identify problems, and assess the likely effects of different remedies. Data analysts thrive in this dynamic field when they combine curiosity, attention to detail, and a commitment to staying current with industry trends.

Online Courses to Become Data Analysts

Key Tools and Technologies for Data Analysis

Tableau is well known for its user-friendly data visualization features, which let users build dynamic, interactive dashboards without writing code. Because it conveys complex information in a form stakeholders can understand, it is helpful for analysts and non-technical users alike. Tableau is a cost-effective option for businesses focused on data-driven storytelling and visualization.

Microsoft Azure Machine Learning is a cloud-based platform on which data scientists can build, train, and deploy models. Its integration with other Azure services makes it flexible for large-scale data processing and AI deployment. Users can work through its visual interface or with code-based tools, depending on their preference and technical proficiency.

For businesses looking to integrate AI and strengthen their data analysis capabilities, Microsoft Power BI is a key tool. Its advanced text analysis features let users extract key phrases and perform sentiment analysis, improving the overall quality of data insights. By converting unstructured data, such as internal documents, social media posts, and customer feedback, into structured insights, Power BI helps companies gain a thorough understanding of consumer preferences and market sentiment.

IBM Watson Analytics is a cloud-based data analysis and visualization tool that uses AI-driven insights to help users understand their data. Its automated data discovery feature lets users quickly find trends, patterns, and relationships in data. Watson Analytics is a good fit for business users who need actionable information without sophisticated analytics expertise.

Databricks provides a unified cloud-based platform for deploying enterprise-grade AI and data analytics solutions at scale. Well known for its strong foundation in Apache Spark, Databricks suits companies looking to incorporate data science and machine learning into product development. It is frequently used to drive innovation and speed up the development of data-driven applications in industries such as technology and finance.

PyTorch is a deep learning framework known for its flexibility and broad support for applications such as computer vision, reinforcement learning, and natural language processing. As an open-source framework, it is widely used in both commercial and academic settings, especially where neural networks are needed. Its large community and library ecosystem make it a popular choice among deep learning practitioners. PyTorch itself is free; costs come from the cloud provider’s compute fees, which usually start at around $0.07 per hour, making it affordable for both experimentation and production.

H2O.ai is an open-source AI and machine learning platform with a strong emphasis on in-memory processing, which greatly accelerates data analysis. Its automated machine learning features let users develop predictive models without much technical knowledge. Businesses that need quick insights and efficient handling of large datasets can benefit from H2O.ai. Because it is free, it appeals to businesses that want to experiment with capable ML models without a financial commitment.

Google Cloud Smart Analytics delivers AI-powered tools for enterprises looking to transform data into strategic assets. Leveraging Google’s expertise in data handling and AI innovation, this platform offers extensive analytics capabilities that range from marketing and business intelligence to data science. Google Cloud Smart Analytics supports organizations in building data-driven workflows and implementing AI at scale.

Polymer provides an agentless platform that uses machine learning to improve data security and simplify business intelligence. Without writing any code, users can build dashboards, embed analytics in presentations, and create data visualizations through its user-friendly interface. Polymer’s conversational AI assistant, PolyAI, generates visualizations from natural language input, which significantly increases productivity and saves analysts time.

RapidMiner is a powerful AI platform whose intuitive drag-and-drop interface makes it usable by people of all skill levels. It provides an end-to-end solution, from data preparation to model deployment, and its versatility extends to a wide range of data types, including text, images, and audio. Integration with machine learning and deep learning frameworks lets businesses extract insights and automate processes quickly. Its free version offers limited functionality but is accessible to novices and well suited to small-scale projects.

Akkio is a flexible tool for forecasting and business analytics that offers a user-friendly starting point for anyone who wants to explore their data and predict outcomes. After uploading a dataset and choosing a target variable, users can have Akkio build a neural network centered on that variable. Because it requires no coding knowledge, it is well suited to predictive analytics, particularly in marketing and sales. In Akkio’s model-building process, 80% of the dataset is used for training and the remaining 20% for validation.
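The 80/20 split described for Akkio is a standard holdout scheme, and a generic version is easy to write. This is a sketch of the general technique only, not Akkio’s implementation; the function name `train_validation_split`, the fixed seed, and the 100-row toy dataset are assumptions for illustration.

```python
import random

def train_validation_split(rows, train_fraction=0.8, seed=42):
    """Shuffle a copy of rows and split it into training and validation sets.

    Generic holdout split; not Akkio's actual code.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical dataset of 100 customer records (just IDs here).
dataset = list(range(100))
train, validation = train_validation_split(dataset)
print(len(train), len(validation))  # 80 20
```

Shuffling before splitting avoids a validation set biased by the order of the source file; the model is trained on the first part and its accuracy is measured on the held-out part it never saw.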

Alteryx’s new no-code AI studio lets users build custom analytics apps on their own company data and query them through a natural language interface that incorporates models such as OpenAI’s GPT-4. Powered by the Alteryx AiDIN engine, the platform stands out for its approachable, intuitive design for generating insights and running predictive analytics. One highlight is the Workflow Summary Tool, which converts complex workflows into concise natural-language summaries. Users can also choose specific report formats, such as PowerPoint or email, to reach their intended audience effectively.

- Sisense

Sisense is an analytics platform that helps developers and analysts create smart data products by combining AI with easy-to-use, no-code tools. By integrating intelligence into processes and products, Sisense’s AI-driven analytics help businesses make well-informed decisions and increase user engagement. More than 2,000 businesses have been using Sisense Fusion as a powerful AI and analytics platform to develop distinctive, high-value products.

- Julius AI

Julius AI automates difficult data analysis, simplifying findings and visualizations for both entry-level and seasoned analysts. Integrating easily with existing platforms, Julius accelerates data processing with intuitive interfaces and advanced predictive analytics, making it a key asset for teams trying to translate massive datasets into meaningful insights.

Luzmo is a no-code, user-friendly analytics tool built especially for SaaS platforms, enabling users to create interactive charts and dashboards easily. It suits businesses that want to improve their analytics capabilities without deep coding expertise. Luzmo’s API compatibility with well-known AI products like ChatGPT makes dashboard generation efficient and automated, saving users time while still delivering high-quality insights. The user-friendly interface makes it easier for everyone to interact with data, which also promotes teamwork and speeds up the analytics process.

- KNIME

KNIME is an open-source platform that democratizes access to data science tools, making advanced analytics and machine learning available to users of all skill levels. Its user-friendly drag-and-drop interface lets users build data workflows without complex programming knowledge, promoting a more inclusive data science environment. The platform supports more than 300 data connectors, enabling the smooth integration with a wide range of data sources that modern analytics requires.

AnswerRocket is an AI-powered data analysis assistant that makes it easier to draw insights from a variety of data sources. By letting non-technical users query their data in natural language, AnswerRocket breaks down conventional barriers to data insights. Its AI capabilities deliver proactive insights and data-driven suggestions, helping users find significant patterns and trends without in-depth analytical training.

DataLab is an AI-powered data notebook that combines generative AI with a full-featured IDE to speed up the conversion of data into meaningful insights. Through an easy-to-use chat interface, users can engage directly with their data: writing, updating, and debugging code, analyzing datasets, and producing comprehensive reports, all on one platform. DataLab’s AI Assistant further increases productivity by streamlining coding, offering context-specific recommendations, and explaining data structures. Its collaboration features support real-time teamwork, letting several people work on a project at once, exchange ideas, and handle version control easily. As users explore their data, DataLab automatically creates editable, live reports that are easy to share. Compatibility with a variety of data sources, such as CSV files, Google Sheets, Snowflake, and BigQuery, makes data import simple and puts extensive analytical capabilities in a single, user-friendly environment.

- Looker

Google Cloud’s Looker is a powerful no-code platform for business intelligence and data analysis with a wide range of integration options. It handles large datasets efficiently, letting users combine several data sources into a single, coherent view and generate numerous dashboards and reports. The platform benefits from Google’s robust support infrastructure and provides sophisticated data modeling capabilities.

Echobase is an AI-powered platform for companies looking to use data to improve productivity and collaboration. It streamlines work that calls for analytics and creativity by using AI agents trained for tasks such as content production, data analysis, and Q&A. Integration is easy: users can connect cloud storage or upload files without writing code. The platform supports teamwork through role assignments and permission management, allowing team members to work with AI agents for data searches and analysis. AWS encryption protects sensitive data while leaving users in full control of it, reflecting the platform’s emphasis on data security. Beyond analysis, Echobase provides a range of AI tools for operational and creative activities, such as narrative creators and email and paragraph generators.

BlazeSQL is an AI-powered tool that converts natural language questions into SQL queries, making it easier for people with little SQL knowledge to retrieve data. Teams can then use the automatically generated queries to extract and visualize data from their databases. Its compatibility with several SQL databases, including MySQL, PostgreSQL, Microsoft SQL Server, Snowflake, BigQuery, and Redshift, makes BlazeSQL adaptable. It prioritizes data security and privacy by storing data interactions on the user’s local device, and it is available in both desktop and cloud versions.

MonkeyLearn is a no-code AI platform that simplifies data organization and visualization. Its powerful text analysis features speed up data visualization and let customers configure classifiers and extractors that automatically categorize data by topic or intent, or extract important product aspects and user information. By using machine learning to automate these processes, it can eliminate hours of manual data processing, which makes it useful for managing customer feedback and support tickets. Another useful feature is keyword highlighting, which helps sort and categorize incoming data, such as emails or tickets, quickly.
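Keyword-based sorting of incoming tickets can be illustrated with a simple rule-based classifier. This is a deliberately simplified stand-in: MonkeyLearn uses trained machine learning models, and the categories, keyword lists, and `categorize` function here are hypothetical.

```python
# Hypothetical keyword rules; a trained ML classifier would learn these
# associations from labeled examples instead of a hand-written list.
CATEGORY_KEYWORDS = {
    "billing": ["invoice", "refund", "charge", "payment"],
    "technical": ["error", "crash", "bug", "login"],
    "shipping": ["delivery", "shipping", "tracking", "package"],
}

def categorize(text):
    """Return the category with the most keyword hits, or 'other' if none match."""
    words = [word.strip(".,!?") for word in text.lower().split()]
    scores = {
        category: sum(word in keywords for word in words)
        for category, keywords in CATEGORY_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "other"

tickets = [
    "I was charged twice, please send a refund.",
    "The app shows an error and then crashes on login.",
    "Where is my package? The tracking page is blank.",
    "Thanks for the great service!",
]
for ticket in tickets:
    print(categorize(ticket), "|", ticket)
```

Exact word matching misses variants such as "charged" or "crashes", which is one reason tools in this space rely on learned models rather than fixed keyword lists.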

Google Sheets is a versatile spreadsheet application with integrated machine learning capabilities that support data analysis and visualization. For individuals and small teams seeking a flexible platform for data work, it offers real-time collaboration, data visualization, and ML-powered analysis. It is free for personal use, and business users can choose from paid plans under Google Workspace (formerly G Suite).

ThoughtSpot is an AI-powered analytics tool that lets users ask sophisticated business questions in natural language and receive insightful, AI-generated answers. With features such as interactive visualizations, data modeling support, AI-guided search, and real-time data monitoring, it suits analysts and business users who need quick, clear insights. ThoughtSpot is a cloud-based solution with flexible pricing to accommodate different requirements.

Talend is a comprehensive platform for data integration, monitoring, and administration that lets users process and analyze data from a variety of big data sources, such as Hadoop, Spark, and Hive. Its key features, including strong integration capabilities, data monitoring, and support for big data environments, make it an effective tool for companies that prioritize secure and compliant data management. Talend offers both commercial and open-source versions, with scalable, flexible pricing to suit a range of requirements.

Building Practical Experience in Data Analytics

Internships and Entry-Level Positions

Landing an internship or entry-level job is a crucial first step toward real-world data analysis experience. A strong CV that emphasizes relevant education, certifications, and technical skills such as Excel, SQL, Python, or Tableau improves the odds. Each application should be tailored to the company and position by highlighting the specific skills the employer is looking for.

Personal projects or case studies, whether self-initiated or completed as part of coursework, can be featured on the resume and discussed in interviews. In a competitive market, showing a willingness to learn and a proactive approach to solving real-world problems helps a candidate stand out.

Projects and Portfolio Development

To stand out as a data analyst, it is good practice to build a portfolio of personal work. The portfolio should include projects that highlight technical and analytical abilities, such as data cleaning, exploratory data analysis, statistical modeling, and data visualization. GitHub is an excellent place to display this work publicly. Besides giving prospective employers access to the code and problem-solving approach, it shows that one can manage a project, document the work, and follow best practices. The portfolio should be easy to browse, with a concise summary of each project explaining the problem and any insights or solutions discovered.
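A typical exploratory step in a portfolio project is checking how strongly two variables move together. The sketch computes a Pearson correlation coefficient by hand so the formula stays visible; the monthly ad-spend and sales figures are hypothetical, and in practice one might use `statistics.correlation` (Python 3.10+) or pandas instead.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of products of deviations; the 1/n factors cancel in the ratio.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical monthly figures (in thousands) for a small portfolio project.
ad_spend = [10, 20, 30, 40, 50]
sales = [110, 150, 210, 240, 300]

r = pearson(ad_spend, sales)
print(f"Pearson r between ad spend and sales: {r:.3f}")
```

A high r shows association only; it does not prove that ad spend causes sales, a distinction worth stating explicitly in a portfolio write-up.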

Kaggle Competitions and Other Platforms

Kaggle, an online community of data scientists and machine learning practitioners, hosts competitions in which data analytics expertise is applied to practical problems. Taking part in Kaggle competitions means working with a variety of datasets on business-related problems, which hones modeling and data manipulation skills.

Networking and Community Engagement

Joining Data Communities and Meetups

Networking is an effective way to learn, grow, and connect with other data analytics professionals. Attending meetups and joining data-focused networks can deepen knowledge, build familiarity with industry trends, and open doors to career opportunities. LinkedIn groups focused on data science and analytics are an excellent place to start: members share resources, hold discussions, and occasionally post job openings or collaborative projects. Similarly, local data science meetups offer a chance to network with analysts, swap experiences, and learn how data analytics is applied in other sectors.

Webinars are another easily accessible option for networking and skill development. Many organizations host webinars with expert presenters on topics such as machine learning algorithms and data visualization techniques. Besides offering useful information, these sessions let attendees interact with experts through group chats and Q&A sessions.

LinkedIn and GitHub for Professional Networking

Every data analyst needs a professional online presence, and LinkedIn and GitHub are two important platforms for it. A LinkedIn profile should be comprehensive, highlighting experience, projects, credentials, and skills. A strong headline and summary can make the profile stand out, especially if they mention specific data tools or skills relevant to the desired roles.

GitHub is a useful portfolio tool for demonstrating coding and project management skills. Contributing to open-source projects, uploading personal projects regularly, and keeping repositories well documented and organized are good ways to showcase skills. This not only demonstrates technical ability but also makes it easy for industry experts and recruiters to review the work. Because they offer concrete proof of skills and a commitment to lifelong learning, GitHub projects make good conversation starters at networking events and strengthen job applications.

Job Search and Career Advancement Tips

Preparing for Interviews

Data analyst interviews often consist of multiple stages that evaluate a candidate’s technical proficiency, critical thinking, and ability to communicate insights. The process usually includes:

- Initial Screening: A recruiter or hiring manager typically speaks with the candidate by phone or video call to learn more about their experience, skills, and motivation. This is often also a chance for the candidate to ask about the position and the company.

- Technical Tests: To evaluate problem-solving abilities, many data analyst positions call for a technical test that may consist of data manipulation exercises, short coding challenges, or projects involving SQL, Excel, or Python.

- Business Scenarios and Case Studies: Case studies assess the ability to evaluate a business problem, interpret information, and offer practical solutions. In these tasks, the candidate might be asked to describe how they would collect, examine, and interpret data to support a business decision in a hypothetical situation.

- Situational and Behavioral Interviews: These concentrate on soft skills, including problem-solving, communication, and teamwork.
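Technical tests often include a SQL question of the kind commonly practiced for data analyst interviews. Here is a sketch of one frequent pattern, joining two tables and filtering groups with `HAVING`, run against an in-memory SQLite database through Python’s `sqlite3` module; the schema, names, and question wording are invented for illustration.

```python
import sqlite3

# Practice-style question (hypothetical schema): "List customers who placed
# at least two orders, with their total spend, highest spender first."
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers (id, name) VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO orders (customer_id, amount) VALUES
        (1, 30.0), (1, 45.0), (2, 200.0), (3, 20.0), (3, 25.0), (3, 60.0);
""")

answer = conn.execute("""
    SELECT c.name, COUNT(o.id) AS n_orders, SUM(o.amount) AS total_spend
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    HAVING COUNT(o.id) >= 2      -- filter on the aggregate, not on single rows
    ORDER BY total_spend DESC
""").fetchall()
print(answer)
conn.close()
```

Interviewers often follow up by asking why the filter belongs in `HAVING` rather than `WHERE`: `WHERE` filters individual rows before grouping, while `HAVING` filters the groups after aggregation.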

Websites for Interview Preparation

- DataCamp: Offers interactive courses in SQL, Python, and R, along with practice assessments to prepare for data analyst interviews.

- Pramp: Facilitates mock interviews with peers and includes data analysis problem sets, which are ideal for practicing interview-style questions in real time.

- AlgoExpert: A comprehensive platform with curated problems, video explanations, and mock interview tools for technical interview preparation.

- StrataScratch: Provides real-world SQL interview questions used by top tech companies, making it ideal for honing SQL skills.

- Interview Query: Specializes in data science and data analytics interview preparation, with practice questions and mock interviews.

- Glassdoor: Provides access to company-specific interview questions for data analyst roles.

- Codecademy: Provides a dedicated interview preparation kit for data analyst roles.

- Simplilearn: Includes the most frequently asked data analyst interview questions, guiding candidates through the interview process.

- TechCanvass: Includes a compilation of top data analytics interview questions and answers, categorized for easy learning.

- Exponent: Helps candidates practice through mock interviews.

Conclusion

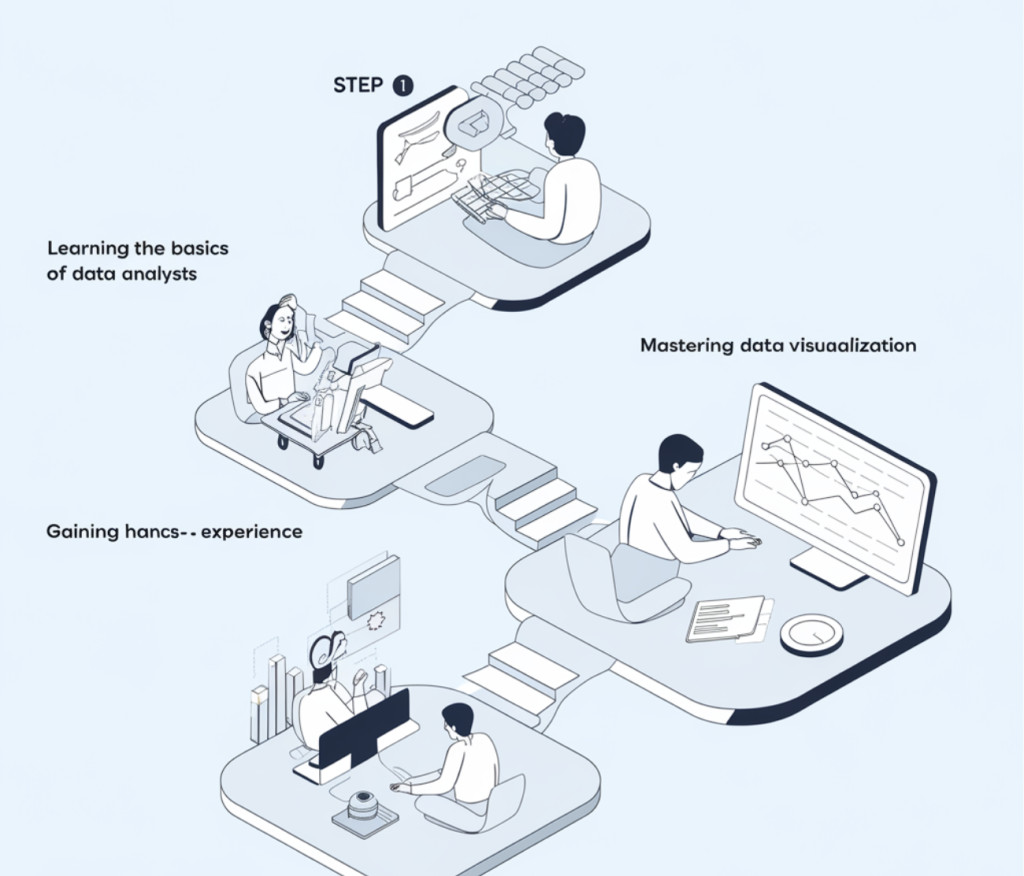

In conclusion, the path to becoming a data analyst is full of opportunities to make a real impact. Building a firm grasp of data analytics principles, gaining real-world experience through projects or internships, sharpening technical skills, and actively networking with professionals in the field can all help secure a data analyst job. With dedication and a strategic approach, one can be well on the way to a rewarding career as a data analyst.

The post How to Become a Data Analyst? Step by Step Guide appeared first on MarkTechPost.
