Tactile sensing plays a crucial role in robotics, helping machines understand and interact with their environment effectively. However, the current state of vision-based tactile sensors poses significant challenges. The sensors vary widely in shape, lighting, and surface markings, which makes it difficult to build a universal solution. Traditional models are typically designed for a specific task or sensor, so scaling them across different applications is inefficient. Moreover, obtaining labeled data for critical properties like force and slip is both time-consuming and resource-intensive, further limiting the widespread use of tactile sensing technology.

Meta AI Releases Sparsh: The First General-Purpose Encoder for Vision-Based Tactile Sensing

In response to these challenges, Meta AI has introduced Sparsh, the first general-purpose encoder for vision-based tactile sensing. Named after the Sanskrit word for “touch,” Sparsh represents a shift from sensor-specific models to a more flexible, scalable approach. Sparsh leverages recent advancements in self-supervised learning (SSL) to create touch representations applicable across a wide range of vision-based tactile sensors. Unlike earlier approaches that depend on task-specific labeled data, Sparsh is trained on over 460,000 unlabeled tactile images gathered from various tactile sensors. By avoiding the reliance on labels, Sparsh opens the door to applications beyond what traditional tactile models could offer.

Technical Details and Benefits of Sparsh

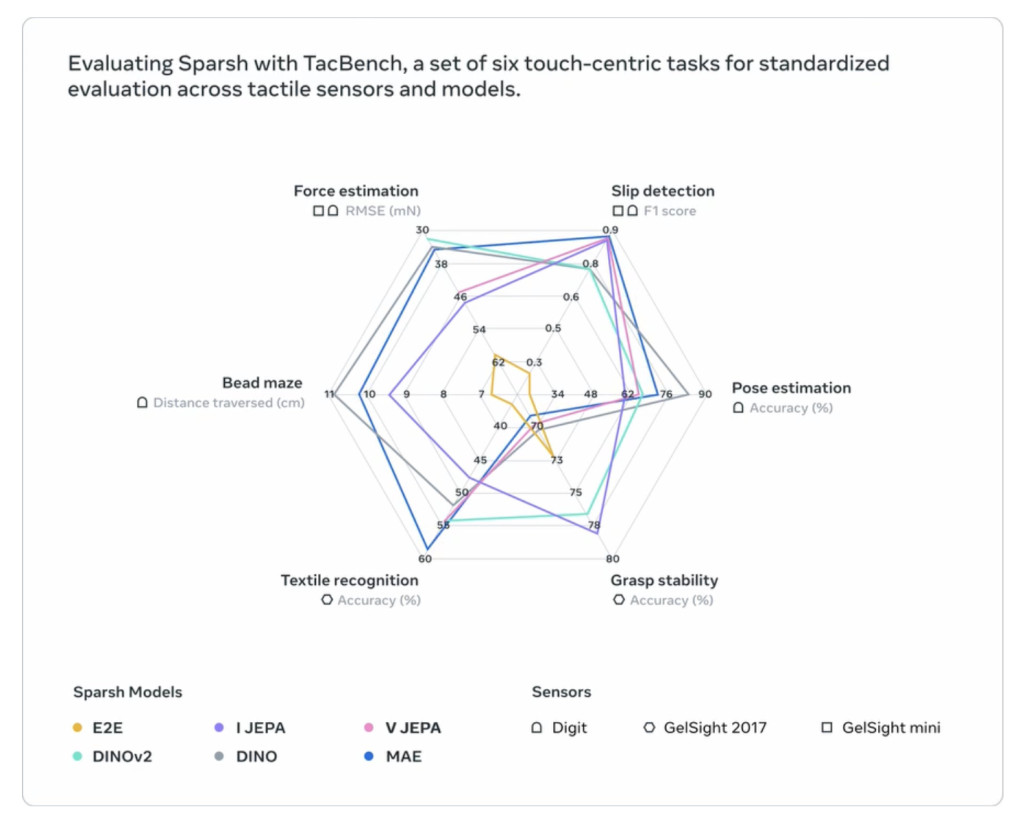

Sparsh is built upon several state-of-the-art SSL models, such as DINO and Joint-Embedding Predictive Architecture (JEPA), which are adapted to the tactile domain. This approach enables Sparsh to generalize across various types of sensors, like DIGIT and GelSight, and achieve high performance across multiple tasks. The encoder family, pre-trained on over 460,000 tactile images, serves as a backbone for downstream tasks, reducing the need for manually labeled data and enabling more efficient training. The Sparsh framework includes TacBench, a benchmark consisting of six touch-centric tasks: force estimation, slip detection, pose estimation, grasp stability, textile recognition, and dexterous manipulation. These tasks evaluate how well Sparsh models perform in comparison to traditional sensor-specific solutions, and they show significant performance gains (95% on average) while using as little as 33-50% of the labeled data required by other models.
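To make the backbone idea concrete, here is a minimal Python sketch of the general pattern: a pre-trained encoder is kept frozen, and only a small task-specific probe is trained on a handful of labeled samples. Everything in it is an illustrative assumption rather than Sparsh's actual API. `make_frozen_encoder` is a fixed random projection standing in for a pre-trained tactile encoder, the 16-value "images" and their force labels are synthetic, and the probe is a plain linear regressor.

```python
import random


def make_frozen_encoder(input_dim, embed_dim, seed=0):
    """Hypothetical stand-in for a pre-trained tactile encoder (NOT the Sparsh API).

    The weights are drawn once and never updated, mimicking a frozen backbone.
    """
    rng = random.Random(seed)
    scale = input_dim ** -0.5
    weights = [[rng.gauss(0.0, 1.0) * scale for _ in range(input_dim)]
               for _ in range(embed_dim)]

    def encode(image):
        # Fixed linear projection followed by ReLU.
        return [max(0.0, sum(w * x for w, x in zip(row, image))) for row in weights]

    return encode


def predict(w, b, feat):
    return sum(wi * fi for wi, fi in zip(w, feat)) + b


def mean_squared_error(w, b, feats, targets):
    return sum((predict(w, b, f) - y) ** 2 for f, y in zip(feats, targets)) / len(targets)


def train_linear_probe(feats, targets, epochs=200):
    """Fit a linear probe on frozen features with plain gradient descent."""
    n, dim = len(feats), len(feats[0])
    # 1 + mean squared feature norm upper-bounds the curvature of the
    # half-MSE loss, so its inverse is a conservative, stable step size.
    lr = 1.0 / (1.0 + sum(fi * fi for f in feats for fi in f) / n)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        errors = [predict(w, b, f) - y for f, y in zip(feats, targets)]
        grad_w = [sum(e * f[i] for e, f in zip(errors, feats)) / n for i in range(dim)]
        grad_b = sum(errors) / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Synthetic data: flattened 16-value "tactile images" with a made-up force
# label (the mean intensity). Real labels would come from a force sensor.
rng = random.Random(42)
images = [[rng.random() for _ in range(16)] for _ in range(40)]
forces = [sum(img) / len(img) for img in images]

encoder = make_frozen_encoder(input_dim=16, embed_dim=8)
feats = [encoder(img) for img in images]  # encoder is only used for inference

initial_mse = mean_squared_error([0.0] * 8, 0.0, feats, forces)
probe_w, probe_b = train_linear_probe(feats, forces)
final_mse = mean_squared_error(probe_w, probe_b, feats, forces)
print(f"probe MSE before training: {initial_mse:.4f}, after: {final_mse:.4f}")
```

Because only the probe's weights and bias are updated, the labeled set can stay small; the representation itself would come from self-supervised pre-training on unlabeled data.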

Importance of Sparsh in Robotics and AI

The implications of Sparsh are significant, particularly for robotics, where tactile sensing plays an essential role in improving physical interaction and dexterity. By overcoming the limitations of traditional models that need labeled data, Sparsh paves the way for more advanced applications, including in-hand manipulation and dexterous planning. Evaluations show that Sparsh outperforms end-to-end task-specific models by over 95% in benchmarked scenarios. This means that robots equipped with Sparsh-powered tactile sensors can better understand their physical environment, even with minimal labeled data. Additionally, Sparsh has proven to be highly effective at various tasks, including slip detection (achieving the highest F1 score among tested models) and textile recognition, offering a robust solution for real-world robotic manipulation tasks.
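For reference, the F1 score used to compare slip detectors is the harmonic mean of precision and recall. The short Python sketch below computes it for binary slip labels; the labels and predictions are made-up values for illustration, not results from the Sparsh evaluation.

```python
def f1_score(y_true, y_pred):
    """Binary F1 score, where 1 means "slip" and 0 means "no slip"."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        # No true positives: precision and recall are both zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Illustrative ground truth and detector output for eight contact windows.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
predictions = [1, 1, 0, 0, 1, 0, 1, 0]

slip_f1 = f1_score(labels, predictions)
print(f"slip detection F1: {slip_f1:.2f}")
```

F1 is a common choice for slip detection because it penalizes both missed slips and false alarms, which plain accuracy can hide when slip events are rare.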

Conclusion

Meta's introduction of Sparsh marks an important step forward in advancing physical intelligence through AI. By releasing this family of general-purpose touch encoders, Meta aims to empower the research community to build scalable solutions for robotics and AI. Sparsh's reliance on self-supervised learning allows it to sidestep the expensive and laborious process of collecting labeled data, thereby providing a more efficient path toward creating sophisticated tactile applications. Its ability to generalize across tasks and sensors, as shown by its superior performance in the TacBench benchmark, underscores its transformative potential. As Sparsh becomes more widely adopted, we may see advancements in various fields, from industrial robots to household automation, where physical intelligence and tactile precision are vital for effective performance.

Check out the Paper, GitHub Page, and Models on HuggingFace. All credit for this research goes to the researchers of this project.


The post Meta AI Releases Sparsh: The First General-Purpose Encoder for Vision-Based Tactile Sensing appeared first on MarkTechPost.
