The Evidence Lower Bound (ELBO) is a key objective for training generative models such as Variational Autoencoders (VAEs). It has a counterpart in neuroscience: the Free Energy Principle (FEP), a theory of brain function. This shared objective hints at a potential unified theory of machine learning and neuroscience. However, both the ELBO and the FEP lack prescriptive specificity, partly because of the standard Gaussian assumptions in these models, which do not match the behavior of neural circuits. Recent studies propose using Poisson distributions in ELBO-based models, as in Poisson VAEs (P-VAEs), to obtain more biologically plausible, sparse representations, though challenges with amortized inference remain.
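As a small illustration of what an ELBO term looks like under Poisson assumptions: when both the prior and the approximate posterior over spike counts are Poisson, the KL term has the closed form `KL = r*log(r/r0) - r + r0`, where `r` is the posterior rate and `r0` the prior rate. The plain-Python sketch below checks that formula against a brute-force sum over counts. The function names are our own, and this covers only the KL term, not a full P-VAE objective.

```python
import math


def poisson_kl(rate_q: float, rate_p: float) -> float:
    """Closed-form KL(Poisson(rate_q) || Poisson(rate_p)); both rates must be > 0."""
    return rate_q * math.log(rate_q / rate_p) - rate_q + rate_p


def poisson_kl_bruteforce(rate_q: float, rate_p: float, max_count: int = 200) -> float:
    """Same KL by summing q(k) * log(q(k) / p(k)) over counts k = 0..max_count."""
    total = 0.0
    for k in range(max_count + 1):
        # Poisson log-pmf: k * log(rate) - rate - log(k!)
        log_q = k * math.log(rate_q) - rate_q - math.lgamma(k + 1)
        log_p = k * math.log(rate_p) - rate_p - math.lgamma(k + 1)
        total += math.exp(log_q) * (log_q - log_p)
    return total


if __name__ == "__main__":
    prior_rate = 2.0  # hypothetical prior firing rate
    for posterior_rate in (0.5, 2.0, 5.0):
        print(posterior_rate,
              round(poisson_kl(posterior_rate, prior_rate), 6),
              round(poisson_kl_bruteforce(posterior_rate, prior_rate), 6))
```

With equal rates the KL is zero, and it grows as the posterior rate moves away from the prior rate in either direction.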

Generative models represent data distributions using latent variables, but computing the posterior over those latents is often intractable. Variational inference addresses this by fitting an approximate posterior: maximizing the ELBO is equivalent to minimizing the KL divergence between this approximation and the true posterior. The ELBO is linked to the Free Energy Principle in neuroscience and, through it, to predictive coding theories, although Gaussian assumptions limit its use in biological models. Recent work on P-VAEs introduced a Poisson-based reparameterization to improve biological alignment. P-VAEs produce sparse, biologically plausible representations, but a gap between amortized and iterative inference limits their performance.

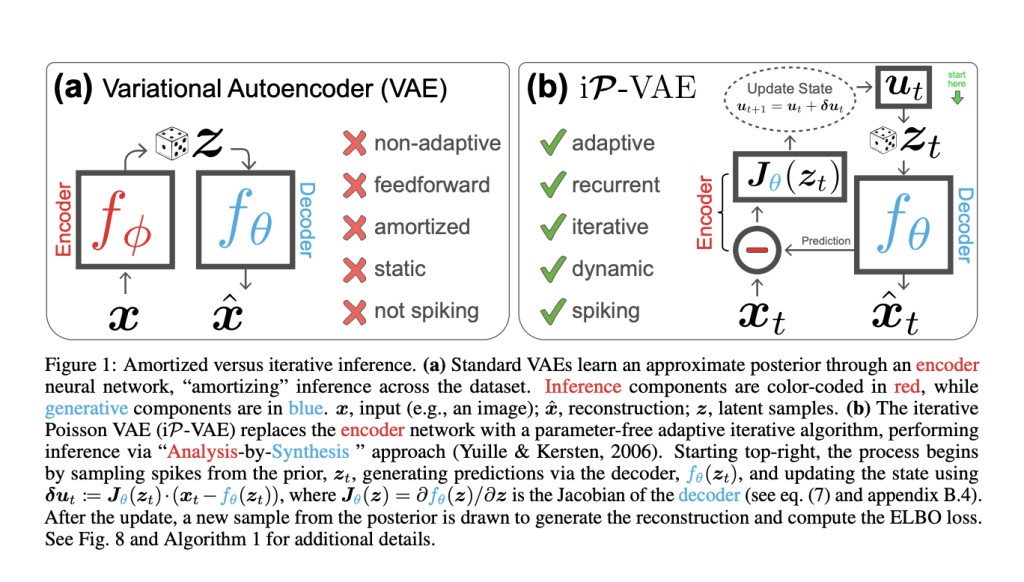

Researchers at the Redwood Center for Theoretical Neuroscience and UC Berkeley developed the iterative Poisson VAE (iP-VAE), which extends the Poisson VAE with iterative inference. The model maps more closely onto biological neurons than earlier predictive coding models based on Gaussian distributions. iP-VAE performs Bayesian posterior inference through its membrane potential dynamics, which resemble a spiking version of the Locally Competitive Algorithm (LCA) for sparse coding. Compared with alternative VAE models, it shows better convergence, reconstruction performance, efficiency, and generalization to out-of-distribution samples, making it a promising NeuroAI architecture that balances performance with energy and parameter efficiency.
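For readers unfamiliar with the Locally Competitive Algorithm: it is a classic, non-spiking dynamical system for sparse coding in which each unit's potential integrates its match to the input and is inhibited by active units whose dictionary atoms overlap with its own; the unit's output is a thresholded version of that potential. The plain-Python sketch below implements a non-negative, Euler-discretized LCA. The parameter values (`lam`, `tau`, `n_steps`) and names are our illustrative choices, and this is the classical algorithm iP-VAE is compared to, not iP-VAE's own spiking dynamics.

```python
import math


def dot(p, q):
    """Inner product of two equal-length vectors."""
    return sum(pi * qi for pi, qi in zip(p, q))


def normalize(atom):
    """Scale a dictionary atom to unit Euclidean norm (assumed by the update)."""
    norm = math.sqrt(dot(atom, atom))
    return [v / norm for v in atom]


def lca(x, atoms, lam=0.1, tau=10.0, n_steps=300):
    """Non-negative Locally Competitive Algorithm, Euler-discretized.

    Unit i has potential u_i driven by b_i = <phi_i, x> and inhibited by other
    active units in proportion to their overlap <phi_i, phi_j>.
    Activity is a_i = max(u_i - lam, 0).
    """
    phi = [normalize(a) for a in atoms]
    n = len(phi)
    drive = [dot(p, x) for p in phi]                       # b = Phi^T x
    gram = [[dot(phi[i], phi[j]) for j in range(n)] for i in range(n)]
    u = [0.0] * n
    for _ in range(n_steps):
        act = [max(ui - lam, 0.0) for ui in u]             # thresholded output
        # Synchronous update: leak toward the drive, minus lateral inhibition.
        u = [u[i] + (drive[i] - u[i]
                     - sum(gram[i][j] * act[j] for j in range(n) if j != i)) / tau
             for i in range(n)]
    return [max(ui - lam, 0.0) for ui in u]


if __name__ == "__main__":
    atoms = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]]  # overcomplete dictionary
    print(lca([1.0, 1.0, 0.0], atoms))
```

At a fixed point, every active unit satisfies `phi_i^T (x - Phi a) = lam` and every silent unit satisfies `phi_i^T (x - Phi a) <= lam`, which are the optimality conditions of a non-negative sparse coding (lasso) objective; this is why the dynamics settle on sparse codes.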

The study introduces the iP-VAE by deriving the ELBO for sequences modeled with Poisson distributions. The architecture implements iterative Bayesian posterior inference through membrane potential dynamics, addressing limitations of traditional predictive coding. It assumes Markovian dependencies in sequential data and defines priors and posteriors that are updated iteratively. The model's updates are expressed in terms of log rates, which play the role of membrane potentials in spiking neural networks. This approach allows for effective Bayesian updates, parallels the behavior of biological neurons, and provides a foundation for future neuro-inspired machine learning models.
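The toy loop below illustrates the bookkeeping described above under loudly stated assumptions: a linear decoder `phi`, one Poisson spike-count draw per step, and an update `delta_u = step * phi^T (x - phi z)` driven by the reconstruction error. Those choices, the function names, and the clipping bounds `U_MIN`/`U_MAX` are ours for illustration; the paper derives its own update from the ELBO, so this is a sketch, not iP-VAE itself. What it does show is how working in log rates turns a multiplicative change in firing rate into an additive change in a membrane-potential-like variable, with each step's posterior reused as the next step's prior.

```python
import math
import random

U_MIN, U_MAX = -8.0, 3.0  # numerical safeguard on log rates (our choice)


def sample_poisson(rate: float, rng: random.Random) -> int:
    """Spike count in a unit time bin: number of Exp(rate) arrivals before t = 1."""
    count = 0
    t = rng.expovariate(rate)
    while t <= 1.0:
        count += 1
        t += rng.expovariate(rate)
    return count


def iterative_poisson_inference(x, phi, prior_log_rate=-1.0, step=0.05,
                                n_iters=50, seed=0):
    """Hypothetical iP-VAE-style loop over log rates (membrane potentials).

    x: input vector; phi: list of decoder atoms, each the same length as x.
    Returns the final log rates and the spike counts emitted at every step.
    """
    rng = random.Random(seed)
    u = [prior_log_rate] * len(phi)  # initial prior, in log-rate space
    history = []
    for _ in range(n_iters):
        # Emit spikes from the current rates exp(u).
        spikes = [sample_poisson(math.exp(ui), rng) for ui in u]
        recon = [sum(s * atom[d] for s, atom in zip(spikes, phi))
                 for d in range(len(x))]
        error = [xd - rd for xd, rd in zip(x, recon)]
        # The posterior of this step becomes the prior of the next (Markov assumption).
        # Adding delta_u to u multiplies the rate exp(u) by exp(delta_u).
        u = [min(max(ui + step * dot_err, U_MIN), U_MAX)
             for ui, dot_err in ((ui, sum(a * e for a, e in zip(atom, error)))
                                 for ui, atom in zip(u, phi))]
        history.append(spikes)
    return u, history


if __name__ == "__main__":
    final_u, spikes = iterative_poisson_inference([3.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
    print([round(math.exp(v), 2) for v in final_u])  # final firing rates
```

Expanding `phi^T (x - phi z)` gives a feedforward drive `phi^T x` minus lateral inhibition `phi^T phi z` between overlapping atoms, the same drive-minus-inhibition structure as the LCA dynamics, here driven by spike counts rather than continuous activities.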

The study conducted empirical analyses comparing iP-VAE with various alternative iterative VAE models. The experiments evaluated the performance and stability of the inference dynamics, the model's ability to generalize to longer sequences, and its robustness to out-of-distribution (OOD) samples, particularly on MNIST. The iP-VAE generalized strongly, surpassing traditional models in reconstruction quality and stability when tested on OOD perturbations and similar datasets. The model also learned a compositional feature set that enhanced its generalization across domains, allowing it to adapt effectively to new visual information while maintaining high performance.

In conclusion, the study presents the iP-VAE, a spiking neural network designed to maximize the ELBO and perform Bayesian posterior updates through membrane potential dynamics. The iP-VAE adapts robustly and outperforms amortized and hybrid iterative VAEs while requiring fewer parameters. Its design avoids common issues associated with predictive coding, and its neurons communicate through spikes. The model's theoretical grounding and empirical results point to potential applications on neuromorphic hardware. Future research could explore hierarchical models and nonstationary sequences to further extend the model's capabilities.

The post iP-VAE: A Spiking Neural Network for Iterative Bayesian Inference and ELBO Maximization appeared first on MarkTechPost.