Customers migrating Oracle databases to Amazon Relational Database Service (Amazon RDS) for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition might encounter the challenge of scheduling jobs that require precise sub-minute scheduling to avoid workflow disruptions and maintain business operations. Oracle provides a scheduler called DBMS_JOB to handle these use cases, and you can use the pg_dbms_job open source extension, which provides similar functionality, to simplify migrations.

In this post, we demonstrate how you can use Trusted Language Extensions (TLEs) for PostgreSQL to install and use pg_dbms_job on Amazon Aurora and Amazon RDS. pg_dbms_jobs allows you to manage scheduled sub-minute jobs. This extension consists of a SQL script to create all the objects related to its operation and a daemon that must be attached to the database where jobs are defined.

pg_tle is a package that includes the TLEs for PostgreSQL that you can use to build high performance PostgreSQL extensions and safely run them on your Amazon RDS for PostgreSQL or Aurora PostgreSQL-compatible database instance.

Solution overview

For customers running critical jobs, database schedulers are essential to ensure jobs run on time and to avoid any adverse business impacts. This is particularly important in use cases that involve business workflows with interdependent jobs that must be run immediately or at a sub-minute frequency to meet defined SLAs.

pg_dbms_job provides similar functionality to the features of Oracle’s DBMS_JOB module, offering similar capabilities through an external process running on Amazon Elastic Compute Cloud (Amazon EC2). This approach allows users to schedule and manage jobs effectively, even though pg_dbms_job isn’t natively available in RDS for PostgreSQL or Aurora PostgreSQL.

In this solution, we demonstrate how pg_tle can be used to integrate the pg_dbms_job extension within these environments. We provide a step-by-step approach to set up and configure the extension, followed by examples of its use cases.

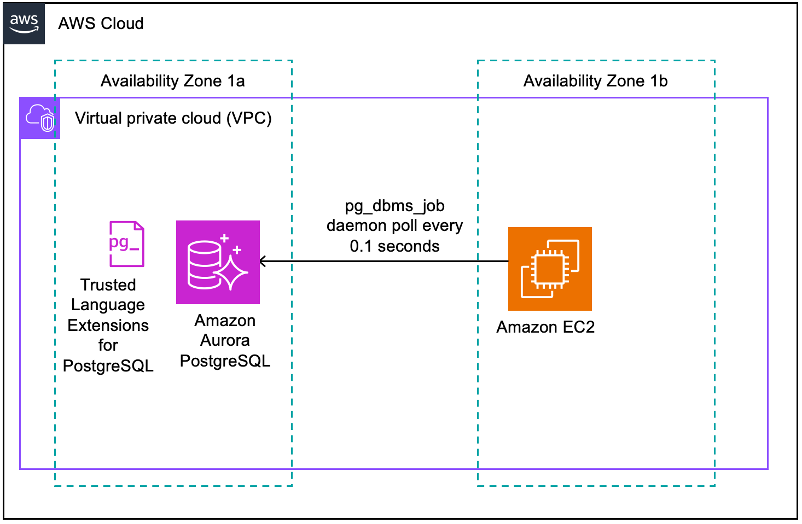

The following diagram shows the solution architecture and the services we use.

Prerequisites

To deploy this solution, you must deploy the following AWS services:

An Aurora PostgreSQL or Amazon RDS for PostgreSQL instance that supports pg_tle. See Extension versions for Amazon Aurora PostgreSQL and Extension versions for Amazon RDS for PostgreSQL.

An Amazon EC2 instance with connectivity to your database instance.

The following are the main components and their role in the solution:

Aurora PostgreSQL – pg_tle is supported on Amazon RDS for PostgreSQL and Aurora PostgreSQL version 13.12 or later. For more information, see Working with Trusted Language Extensions for PostgreSQL.

Amazon EC2 – Amazon EC2 hosts the binaries of pg_dbms_job where the daemon is running. The daemon polls the database every 0.1 seconds to check if any new asynchronous jobs are created. When there is no notification from the daemon, the scheduler polls (job_queue_interval = 5 seconds) the tables where job definitions are stored.

pg_tle – TLE for PostgreSQL

pg_dbms_job – An open source extension, binaries are installed on the EC2 instance.

pg_dbms_job compared to Oracle’s dbms_job

pg_dbms_job uses the same subprograms and APIs as in Oracle and has similar functionality. The following table shows the comparison between Oracle’s dbms_job and PostgreSQL pg_dbms_job.

Subprogram

Oracle (dbms_job)

PostgreSQL (pg_dbms_jobs)

Description

BROKEN

Yes

Yes

Disables job run

CHANGE

Yes

Yes

Alters any of the user-definable parameters associated with a job

INSTANCE

Yes

No

Assigns a job to be run by an instance (Oracle RAC-specific)

INTERVAL

Yes

Yes

Alters the interval between runs for a specified job

NEXT_DATE

Yes

Yes

Alters the next runtime for a specified job

REMOVE

Yes

Yes

Removes a specified job from the job queue

RUN

Yes

Yes

Forces a specified job to run

SUBMIT

Yes

Yes

Submits a new job to the job queue

USER_EXPORT

Yes

No

Recreates a given job for export, or recreates a given job for export with instance affinity (Oracle RAC-specific)

WHAT

Yes

Yes

Alters the job description for a specified job

Table1: comparison of subprograms

Tables that store information about scheduled synchronous and asynchronous jobs are created in a similar format as in Oracle:

all_scheduled_jobs – Any jobs that are scheduled to run with a past date run immediately. You can find information about all_scheduled_jobs in the preceding reference table.

all_async_jobs – Asynchronous jobs are created without a run date. You can find information about all_async_jobs in the preceding reference table.

all_jobs – This view reports all jobs to be run by running a union between all_scheduled_jobs and all_async_jobs.

The following are the high-level steps to deploy and manage this solution:

Set up pg_tle in Amazon Aurora PostgreSQL-compatible database.

Create pg_dbms_job as a TLE extension for Amazon Aurora PostgreSQL-compatible

Set up pg_dbms_job.

Configure pg_dbms_job parameters

Table maintenance

Set up pg_tle in your Amazon Aurora PostgreSQL-compatible database

Follow the guidelines provided in the AWS documentation to set up TLE in Amazon RDS or Aurora PostgreSQL database.

Create pg_dbms_job as a TLE extension for Amazon Aurora PostgreSQL-compatible

Install the pre-requisite binaries to support pg_dbms_job installation.

Clone the pg_tle extension library into your location file system using the following code, this step is required because you will create a TLE extension using sh. However, you can also use the pgtle.install_extension function to obtain similar results.

Clone the pg_dbms_job extension library using the following code:

change the directory on Amazon EC2 where pg_tle was downloaded and install pg_dbms_job as a TLE extension for the Aurora PostgreSQL database. Run the sh using the following parameters and enter the database password when prompted:

You can find the status of the installation by running sh (see the following code) or running the function pgtle.available_extensions().

For more information about creating a local extension using pg_tle, see Managing Trusted Language Extensions for PostgreSQL.

Set up pg_dbms_job

For this post, you create a separate schema which contains all the helper functions to create and manage the scheduler jobs described in Table1. You can run the latest version from the GitHub repo. For this post, we use pg_dbms_job–1.5.0.sql:

Connect to your database instance.

Create a database schema and run the pg_dbms_job–1.5.0.sql.

Set up an admin role and grant privileges on dbms_job to the admin role. This scenario uses the role jobadmin:

Configure pg_dbms_job parameters

You can find all the required pg_dbms_job configurations in the GitHub repo. You can customize the pg_dbms_job.conf file located under pg_dbms_job/etc/.

If you have multiple databases and have one scheduler running, you can create one .conf file for each database using the pattern <databasename>_ pg_dbms_job.conf. In this scenario, the file is named apgdb14_pg_dbms_job.conf.

The following are some key parameters that you might want to modify (they should be evaluated based on your environment’s needs):

job_queue_interval – Poll interval of the jobs queue. Default 5 seconds.

job_queue_processes – Maximum number of jobs processed at the same time. Default 1,000.

Pidfile – Path to the PID file.

Logfile – Log file name pattern. Can include strftime()

Host – Hostname or endpoint of the database.

Port – Port number of the database.

Database – Database where pg_dbms_job was installed.

User – User name to log in to the database.

Passwd – Password to log in to the database.

The following is the default pg_dbms_job.conf file.

Start the scheduler with the following code:

Validate if the process has started successfully using the following command:

Check for the PID in /tmp/pg_dbms_job.pid:

You can configure monitoring for this specific process by following the instructions in Detecting and remediating process issues on EC2 instances using Amazon CloudWatch and AWS Systems Manager.

Check for log files in /tmp/pg_dbms_job.log:

You’ve completed the initial setup of the pg_dbms_job extension. Now you can test the features of dbms_job.

Test the solution

In this section, you test the subprograms of pg_dbms_job and demonstrate the use cases with five examples.

Create a table cust_order and a function insfunc2 to test the subprograms in the subsequent sections:

To validate the function, use the following code to insert a test record in the cust_order table:

Example 1

In this example, you create a scheduled job with a delayed run of 10 seconds. After the first run, the job will run every 5 seconds. The following is the snippet of code that demonstrates the use case to schedule jobs with a sub-minute frequency.

Example 2

In this example, you change the schedule of the job created in Example 1 to run the next day.

Example 3

In this example, you will mark the job created in Example 1 as broken. This addresses a use case where a job has failed. This gives you an opportunity to stop the job, by preventing repeated failure and allowing manual intervention to resolve the issues.

Example 4

In this example, you remove the job from the job queue, such as in a use case where you want to retire the job.

Example 5

In this example, you create a job that will run immediately after it’s submitted, often called asynchronous jobs. This addresses a use case where customers create interdependent jobs or chained jobs with a precise scheduling requirement and should be run immediately and only once.

The preceding job run is successful, and it should insert a record in the cust_order table:

Table maintenance

Based on the level of activity and how busy the scheduler is, you might need to monitor for bloat on the queue tables, because higher bloat can potentially slow down the entire job scheduler. It’s imperative to perform maintenance on these tables in addition to other PostgreSQL tables. It’s recommended that auto vacuum is tuned to optimize the performance. See Working with the PostgreSQL autovacuum on Amazon RDS for PostgreSQL for more information.

For more details on maintenance, review the limitations.

By default, the scheduler log file is stored in the /tmp directory, but you can change this location by changing the value of the logfile parameter in the .conf file. For troubleshooting purposes, you can toggle the debug value to 1 and enable detailed logging. The log file is the central location to check during troubleshooting if pg_dbms_job has issues.

If the number of jobs exceeds the set job_queue_process, the log file shows a warning message: “max job queue size is reached, waiting the end of another jobâ€.

The log files are rotated every week by default. If your use case requires longer retention, you can archive the log files to a different location.

Clean up

When you’re done testing, complete the following steps to clean up your environment:

Unschedule all the jobs in the queue, using the remove API.

Drop the tables and functions that have been created.

Stop the scheduler running on Amazon EC2:

Drop the schema dbms_job in the database.

Uninstall the extension pg_dbms_job running in the database:

Drop extension

Remove the pg_dbms_job binaries from the directory.

If you’re no longer using the database, delete the Aurora database cluster (or, for Amazon RDS for PostgreSQL, the database instance) and the EC2 instance.

Conclusion

Managing critical jobs with precision and reliability is vital for businesses that depend on timely completion of interdependent tasks to meet strict SLAs. This post shows how you can use Trusted Language Extensions for PostgreSQL (pg_tle) to add PostgreSQL extensions to your Aurora PostgreSQL and RDS for PostgreSQL instances.

By following the steps in this post, you can ensure that your business workflows are run promptly and efficiently, minimizing risks and maintaining high performance.

About the Authors

Rajesh Kumar Sabankar is a Senior Database Specialty Architect with Amazon Web Services. He works with internal Amazon customers to build secure, scalable, cost efficient and resilient architectures in the AWS Cloud and helps customers perform migrations at scale.

Bhanu Ganesh Gudivada is a Lead Database Consultant with the Professional Services team at AWS based out of Hyderabad, India, and specializes in database migrations. He helps enable customers to build highly available, cost-effective database solutions and migrate their commercial engines to the AWS Cloud. He’s curious about learning and implementing new technologies around databases and orchestrating migrations through automation.

Samujjwal Roy is a Senior Practice Manager, Database Migration Leader with the Professional Services team at Amazon Web Services. He has been with Amazon for over 19 years and has led migration projects for internal and external Amazon customers to move their on-premises database environments to AWS Cloud database solutions.

Source: Read More