A Large Language Model (LLM) is an advanced type of artificial intelligence designed to understand and generate human-like text. It’s trained on vast amounts of data, enabling it to perform various natural language processing tasks, such as answering questions, summarizing content, and engaging in conversation.

In education, LLMs are increasingly deployed as chatbots that enrich learning experiences. They can offer personalized tutoring, answer students’ questions instantly, support language learning, and break down complex topics. By emulating human-like interaction, these chatbots can make learning more accessible and engaging, letting students learn at their own pace and addressing their individual needs.

However, evaluating educational chatbots powered by LLMs is challenging due to their open-ended, conversational nature. Unlike traditional models with predefined correct responses, educational chatbots are assessed on their ability to engage students, use supportive language, and avoid harmful content. The evaluation focuses on how well these chatbots align with specific educational goals, like guiding problem-solving without directly giving answers. Flexible, automated tools are essential for efficiently assessing and improving these chatbots, ensuring they meet their intended educational objectives.

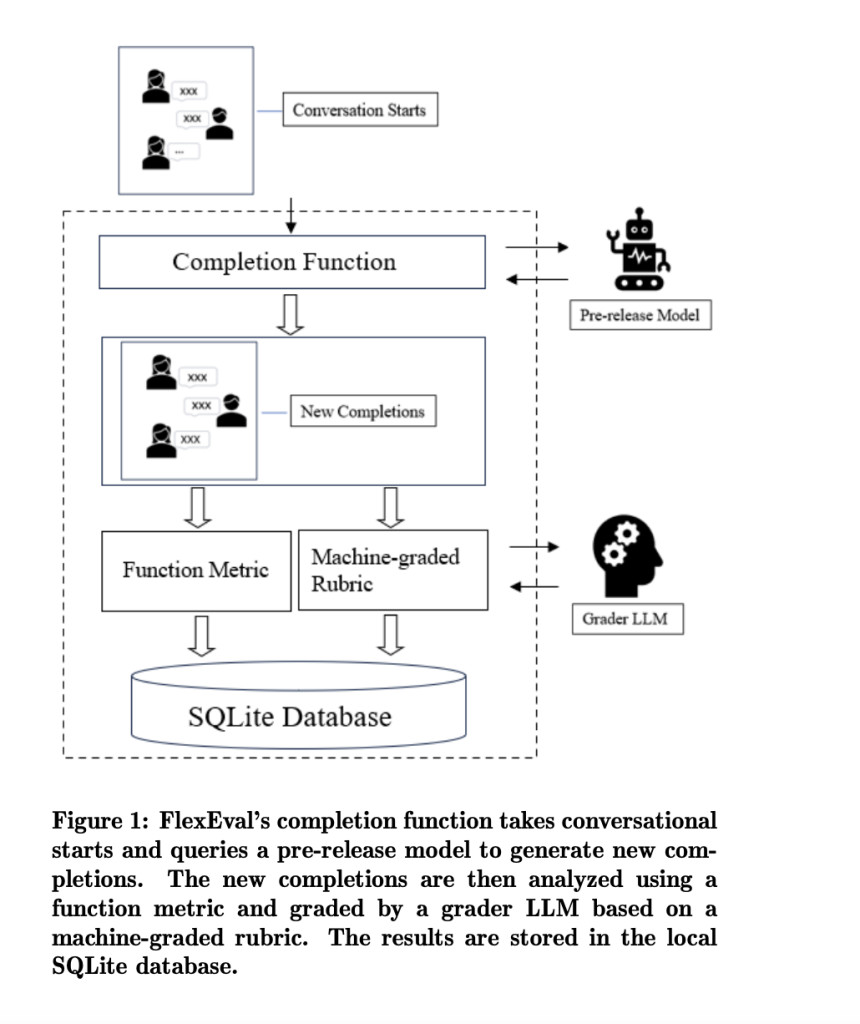

To address these challenges, a recent paper introduces FlexEval, an open-source tool designed to simplify and customize the evaluation of LLM-based systems. FlexEval lets users rerun conversations that led to undesirable behavior, apply custom metrics, and evaluate both new and historical interactions. It provides a user-friendly interface for creating and applying rubrics, integrates with various LLMs, and protects sensitive data by running evaluations locally. By streamlining the process, FlexEval makes evaluating conversational systems in educational settings more manageable and more flexible.

Concretely, FlexEval is designed to reduce the complexity of automated testing and to give developers more visibility into system behavior before and after product releases. Its configuration lives in editable files in a single directory: `evals.yaml` for test suite specifications, `function_metrics.py` for custom Python metrics, `rubric_metrics.yaml` for machine-graded rubrics, and `completion_functions.py` for defining completion functions. FlexEval can evaluate both new and historical conversations and stores results locally in an SQLite database. It integrates with various LLMs and can be configured to fit users’ needs, so systems can be evaluated without exposing sensitive educational data.
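
To make the idea of a custom Python metric more concrete, here is a minimal sketch of the kind of function one might place in a metrics file, plus a way to persist per-turn scores in SQLite. It is not FlexEval’s actual API: the metric `question_count`, the helper `store_results`, and the `metric_results` table schema are all hypothetical, chosen only to illustrate a plain Python metric and local storage with the standard `sqlite3` module.

```python
import sqlite3


def question_count(turn: str) -> int:
    """Hypothetical function metric: number of questions in a tutor turn.

    A rough proxy for whether the tutor prompts the student instead of
    handing over the answer. Not one of FlexEval's shipped metrics.
    """
    return turn.count("?")


def store_results(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Write (turn_id, metric_name, value) rows to a hypothetical results table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS metric_results ("
        "turn_id INTEGER, metric TEXT, value REAL)"
    )
    conn.executemany("INSERT INTO metric_results VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    tutor_turns = [
        "What do you get if you subtract 3 from both sides?",
        "The answer is x = 4.",
    ]
    # In-memory database for the sketch; a real setup would use a local file.
    conn = sqlite3.connect(":memory:")
    rows = [(i, "question_count", float(question_count(t))) for i, t in enumerate(tutor_turns)]
    store_results(conn, rows)
    for row in conn.execute("SELECT turn_id, value FROM metric_results ORDER BY turn_id"):
        print(row)  # one (turn_id, value) tuple per tutor turn
```

Counting question marks is deliberately crude; a rubric graded by an LLM would better capture whether a tutor guides rather than tells, which is why FlexEval supports both kinds of metric.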

To demonstrate FlexEval’s usefulness, the authors ran two example evaluations. The first tested model safety using the Bot Adversarial Dialogue (BAD) dataset, checking whether pre-release models agreed with or produced harmful statements. Outputs were scored with the OpenAI Moderation API and with a rubric designed to detect the Yeasayer Effect, that is, a model agreeing with harmful statements. The second evaluation used historical conversations between students and a math tutor from the NCTE dataset, where FlexEval classified tutor utterances as on or off task using LLM-graded rubrics. Metrics such as harassment scores and F1 scores were computed, demonstrating FlexEval’s utility for model evaluation.
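
F1 scores for an on/off-task classifier compare predicted labels with reference labels. The Python sketch below shows that computation, assuming human annotations serve as the reference and each tutor utterance carries a boolean label (`True` for on task). The helper name `f1_score` and the labels in the example are hypothetical and made up for illustration; they are not data from the paper or the NCTE dataset.

```python
def f1_score(gold: list[bool], predicted: list[bool], positive: bool = True) -> float:
    """F1 for a binary label, treating `positive` as the positive class."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive and p == positive for g, p in pairs)  # true positives
    fp = sum(g != positive and p == positive for g, p in pairs)  # false positives
    fn = sum(g == positive and p != positive for g, p in pairs)  # false negatives
    if tp == 0:
        return 0.0  # precision and recall are both 0 (or undefined)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical labels: human reference vs. LLM-rubric output (True = on task).
    human = [True, True, False, True, False]
    llm = [True, False, False, True, True]
    print(round(f1_score(human, llm), 3))                  # F1 for "on task"
    print(round(f1_score(human, llm, positive=False), 3))  # F1 for "off task"
```

Reporting F1 for each class separately matters when off-task utterances are rare, since a classifier that labels everything "on task" can still score well on the majority class.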

To conclude, this article presented FlexEval, a tool recently proposed in a new paper. FlexEval addresses the challenges of evaluating LLM-based systems by simplifying the process and increasing visibility into model behavior. It offers a flexible, customizable solution that protects sensitive data and integrates easily with other tools. As LLM-powered products spread in educational settings, tools like FlexEval can help ensure these systems reliably serve their intended purpose. Future work aims to further improve ease of use and broaden the tool’s applications.

The post FlexEval: An Open-Source AI Tool for Chatbot Performance Evaluation and Dialogue Analysis appeared first on MarkTechPost.