With the growth of generative artificial intelligence (AI), more organizations are running proofs of concept or small-scale production applications. However, as these organizations move into large-scale production deployments, costs and performance become primary concerns. To address these concerns, customers typically turn to traditional caches to control costs on read-heavy workloads. In generative AI, however, part of the difficulty is that applications such as chatbots accept natural language requests as input, and the same request can be phrased in many different ways. This makes those requests hard to cache with exact-match keys.

With the launch of vector search for Amazon MemoryDB, you can use MemoryDB, an in-memory database with Multi-AZ durability, as a persistent semantic caching layer. Semantic caching can improve the performance of generative AI applications by storing responses based on the semantic meaning or context within the queries.

Specifically, you can store the vector embeddings of requests and the generated responses in MemoryDB, and then search for similar vectors instead of exact matches. Because a cache read takes single-digit milliseconds while generating an answer takes seconds, this approach can reduce response latency. It can also decrease compute costs and the number of requests to additional vector databases and foundation models (FMs), helping make generative AI applications viable from both a cost and a performance standpoint.

Furthermore, you can use this functionality with Knowledge Bases for Amazon Bedrock. Implementing the Retrieval Augmented Generation (RAG) technique, the knowledge base searches data to find the most useful information, and then uses it to answer natural language questions.

In this post, we present the concepts needed to use a persistent semantic cache in MemoryDB with Knowledge Bases for Amazon Bedrock, and the steps to create a chatbot application that uses the cache. We use MemoryDB as the caching layer for this use case because it delivers the fastest vector search performance at the highest recall rates among popular vector databases on AWS. We use Knowledge Bases for Amazon Bedrock as a vector database because it implements and maintains the RAG functionality for our application without requiring us to write additional code. You can use a different vector database if needed for your application, including MemoryDB.

With this working example, you can get started in your semantic caching journey and adapt it to your specific use cases.

Persistent semantic caches

Durable, in-memory databases can be used as persistent semantic caches, storing vector embeddings (numerical representations that capture semantic relationships in unstructured data, such as text) and retrieving them in just milliseconds. Instead of searching for an exact match, these databases support queries that use the vector space to retrieve similar items. For example, when a user asks a chatbot a question, the text of the question can be transformed into a numerical representation (an embedding) and used to search for similar questions that have been answered before. When a similar question is retrieved, its answer is also available to the application and can be reused without performing lookups in knowledge bases or making requests to FMs. This has two main benefits:

Latency is decreased because calls to FMs and knowledge bases are reduced

The processing time to serve a request is decreased, lowering compute cost—especially for serverless architectures that use AWS Lambda functions
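To make the difference between exact-match and semantic lookups concrete, here is a minimal, self-contained sketch. The vectors are hypothetical three-dimensional stand-ins for real embeddings, `ToySemanticCache` is an illustrative in-memory class rather than a MemoryDB API, and we assume cosine distance (1 minus cosine similarity) as the similarity measure.

```python
import math

# Hypothetical toy vectors standing in for real embeddings, which are much longer.
TOY_EMBEDDINGS = {
    "What is the operational excellence pillar?": [0.90, 0.10, 0.05],
    "Tell me more about the operational excellence pillar": [0.85, 0.15, 0.10],
    "How do I size a Lambda function?": [0.05, 0.20, 0.95],
}


def cosine_distance(a, b):
    """1 - cosine similarity: 0 means same direction, larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


class ToySemanticCache:
    """In-memory stand-in for a semantic cache of (embedding, answer) pairs."""

    def __init__(self, radius):
        self.radius = radius  # maximum distance that still counts as a cache hit
        self.entries = []

    def put(self, embedding, answer):
        self.entries.append((embedding, answer))

    def get(self, embedding):
        """Return the answer of the closest stored question within the radius, else None."""
        best = None
        for stored, answer in self.entries:
            d = cosine_distance(embedding, stored)
            if d <= self.radius and (best is None or d < best[0]):
                best = (d, answer)
        return None if best is None else best[1]


question = "What is the operational excellence pillar?"
exact_cache = {question: "cached answer"}
semantic_cache = ToySemanticCache(radius=0.4)
semantic_cache.put(TOY_EMBEDDINGS[question], "cached answer")

rephrased = "Tell me more about the operational excellence pillar"
unrelated = "How do I size a Lambda function?"
print(exact_cache.get(rephrased))                           # None
print(semantic_cache.get(TOY_EMBEDDINGS[rephrased]))        # cached answer
print(semantic_cache.get(TOY_EMBEDDINGS[unrelated]))        # None
```

The rephrased question misses the exact-match dictionary but hits the semantic cache, while the unrelated question misses both.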

In this post, we deploy an application to showcase these performance and cost improvements.

Solution overview

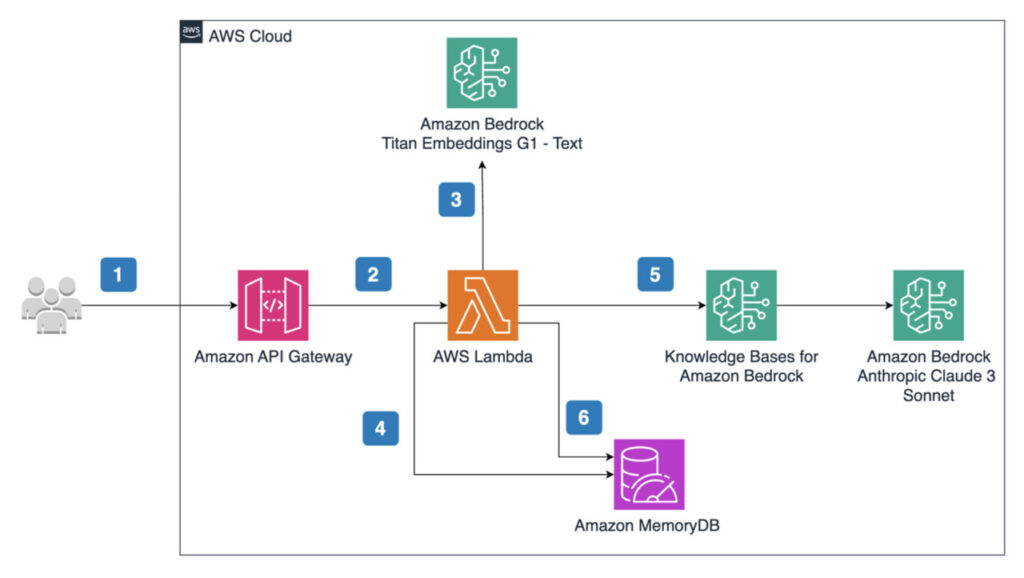

Our application will respond to questions from the AWS Well-Architected Framework guide, and will use a persistent semantic cache in MemoryDB. The following diagram illustrates this architecture.

For any question similar to one that has been answered before, the application can find the answer in the cache and return it directly to the user, without searching documents or invoking the FM. The workflow includes the following steps:

Users ask a question from their device using the Amazon API Gateway URL

When API Gateway gets a request, a Lambda function is invoked

The Lambda function converts the question into an embedding using the Amazon Titan Embeddings G1 – Text model. This is needed to search for the question in the cache

The Lambda function uses the embedding generated in the previous step to search the persistent semantic cache, powered by MemoryDB. If a similar question is found, the answer to that question is returned to the user

If a similar question isn’t found, the Lambda function uses Knowledge Bases for Amazon Bedrock to search for articles related to the question and to invoke the Anthropic Claude 3 Sonnet model on Amazon Bedrock to generate an answer

Finally, the Lambda function saves the generated answer in the MemoryDB persistent cache to be reused in subsequent similar queries, and returns the answer to the user

In the following steps, we demonstrate how to create and use the infrastructure that is required for the chatbot to work. This includes the following services:

Amazon API Gateway – A fully managed service that makes it straightforward for developers to create, publish, maintain, monitor, and secure APIs at any scale.

Amazon Bedrock – A fully managed service that offers a choice of high-performing FMs from leading AI companies. This example uses Amazon Titan Embeddings G1 – Text and Anthropic Claude 3 Sonnet, but you could use other models available in Amazon Bedrock.

Knowledge Bases for Amazon Bedrock – A fully managed service that provides FMs contextual information from data sources using RAG. Our knowledge base is used with default settings.

AWS Lambda – A serverless compute service for running code without having to provision or manage servers.

Amazon MemoryDB – A Redis OSS-compatible, durable, in-memory database service that delivers ultra-fast performance.

Amazon Simple Storage Service (Amazon S3) – An object storage service offering industry-leading scalability, data availability, security, and performance. We use Amazon S3 to store the documents that will be ingested into Knowledge Bases for Amazon Bedrock.
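For the embedding step, the following sketch shows one way to call Amazon Titan Embeddings G1 – Text through the `invoke_model` operation of a boto3 `bedrock-runtime` client. It also packs the resulting vector as FLOAT32 bytes, which is the binary format we assume the MemoryDB vector index expects. The helper names are hypothetical. A fake client stands in for `boto3.client("bedrock-runtime", region_name="us-east-1")` so that the example runs offline.

```python
import io
import json
import struct

# Model ID of Amazon Titan Embeddings G1 - Text on Amazon Bedrock.
TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"


def embed_question(bedrock_runtime, question: str) -> list[float]:
    """Return the Titan embedding of `question` via a bedrock-runtime client."""
    response = bedrock_runtime.invoke_model(
        modelId=TITAN_EMBED_MODEL_ID,
        body=json.dumps({"inputText": question}),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def to_float32_bytes(vector: list[float]) -> bytes:
    """Pack a vector as little-endian FLOAT32 values (assumed index format)."""
    return struct.pack(f"<{len(vector)}f", *vector)


class FakeBedrockRuntime:
    """Offline stand-in that mimics the shape of an invoke_model response."""

    def invoke_model(self, **kwargs):
        text = json.loads(kwargs["body"])["inputText"]
        payload = {"embedding": [0.1, 0.2, 0.3], "inputTextTokenCount": len(text.split())}
        return {"body": io.BytesIO(json.dumps(payload).encode("utf-8"))}


embedding = embed_question(FakeBedrockRuntime(), "What is the operational excellence pillar?")
blob = to_float32_bytes(embedding)
print(embedding, len(blob))  # [0.1, 0.2, 0.3] 12
```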

This example application covers a simple use case: returning a cached answer when a semantically similar question is asked. In practice, however, many factors besides the question itself can feed into a personalized response. In these use cases, you may not want to return the same cached answer for every semantically similar question. To address this, MemoryDB supports pre-filtering in vector search queries. For example, if you have domain-specific answers, you can tag your cached responses with their domain (for example, a geographic location or an age group). You can then pre-filter the vector space to the domain the user is associated with, so that only cached answers relevant to that user are returned for a semantically similar question.
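As an illustration of pre-filtering, the following sketch restricts candidates to a domain tag before measuring vector similarity. `CachedAnswer`, `filtered_lookup`, and the `domain` tag are hypothetical. In MemoryDB, you would express the filter as part of the vector search query rather than in application code.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class CachedAnswer:
    embedding: list[float]
    answer: str
    domain: str  # hypothetical tag, such as a geographic region


def cosine_distance(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))


def filtered_lookup(entries, query, domain, radius=0.4) -> Optional[str]:
    """Keep only entries tagged with `domain`, then return the closest answer within `radius`."""
    candidates = [e for e in entries if e.domain == domain]    # pre-filter by tag
    hits = [(cosine_distance(query, e.embedding), e.answer) for e in candidates]
    hits = [h for h in hits if h[0] <= radius]                  # similarity check
    return min(hits)[1] if hits else None


entries = [
    CachedAnswer([1.0, 0.0], "answer for EU users", "eu"),
    CachedAnswer([1.0, 0.0], "answer for US users", "us"),
]
query = [0.9, 0.1]  # semantically close to both cached questions
print(filtered_lookup(entries, query, "eu"))    # answer for EU users
print(filtered_lookup(entries, query, "us"))    # answer for US users
print(filtered_lookup(entries, query, "apac"))  # None
```

Both cached questions are equally similar to the query, so the domain tag alone decides which answer is returned.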

Prerequisites

The following are needed in order to proceed with this post:

An AWS account

Terraform

The AWS Command Line Interface (AWS CLI)

Amazon Bedrock model access enabled for Anthropic Claude 3 Sonnet and Amazon Titan Embeddings G1 – Text in the us-east-1 Region

jq and git

curl and time commands (installed by default on macOS and Linux systems)

Python 3.11 with the pip package manager

Considerations

Consider the following before continuing with this post:

We follow the best practice of running cache systems in a private subnet. For this reason, the Lambda function is deployed in the same virtual private cloud (VPC) as the MemoryDB cluster.

The RANGE query is used to find semantically similar questions in the cache, with the radius parameter set to 0.4. The radius establishes how similar a new question must be to a cached one to produce a cache hit (retrieve a result): the lower the value, the more similar it must be. The value is usually set through experimentation and depends on the variability of the questions, the diversity of the knowledge base, and the desired balance between cache hits, cost, and accuracy. The code that makes this query can be found in the Lambda function included in the GitHub repo.
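Because the radius is usually tuned by experimentation, one simple approach is to label a sample of question pairs and compare candidate radii. The following sketch uses hypothetical distances and labels (not measured data) to show the trade-off. A larger radius produces more cache hits, but also more hits where the cached answer doesn't fit the new question.

```python
def evaluate_radius(samples, radius):
    """Return (hit_rate, wrong_hit_rate) for a candidate radius.

    samples: (cosine_distance, answer_fits) pairs. cosine_distance is the distance
    from a new question to its nearest cached question. answer_fits says whether
    the cached answer was judged correct for the new question.
    """
    hits = [fits for distance, fits in samples if distance <= radius]
    hit_rate = len(hits) / len(samples)
    wrong_hit_rate = hits.count(False) / len(hits) if hits else 0.0
    return hit_rate, wrong_hit_rate


# Hypothetical labeled sample, not measured data.
SAMPLES = [
    (0.05, True), (0.12, True), (0.22, True), (0.31, True),
    (0.38, False), (0.45, True), (0.52, False), (0.70, False),
]

for radius in (0.2, 0.4, 0.6):
    hit_rate, wrong_hit_rate = evaluate_radius(SAMPLES, radius)
    print(f"radius={radius}: hit rate {hit_rate:.1%}, wrong hits {wrong_hit_rate:.1%}")
```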

For simplicity, a NAT gateway is used to access Amazon Bedrock APIs. To avoid networking charges related to the NAT gateway or data transfer costs, consider using AWS PrivateLink.

AWS X-Ray tracing is enabled for the Lambda function and provides latency details for each API call performed in the code.

For simplicity, the deployed API enforces authentication using API keys. When user-level authentication is required, we recommend using an API Gateway Lambda authorizer or an Amazon Cognito authorizer.

The code is meant to be used in a development environment. Before migrating this solution to production, we recommend following the AWS Well-Architected Framework.

Deploy the solution

Complete the following steps to deploy the solution:

Clone the MemoryDB examples GitHub repo:

Initialize Terraform and apply the plan:

This creates the infrastructure needed for the application, such as the VPC, subnets, MemoryDB cluster, S3 bucket, Lambda function, and API gateway.

Get the Terraform outputs:

Upload the document to the S3 bucket and start the ingestion job:

Query the ingestion job status:

It might take several minutes until it shows a COMPLETE status. When the ingestion job is complete, you’re ready to run API calls against the chatbot.
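If you prefer to poll the ingestion job from Python rather than from the command line, the following sketch calls `get_ingestion_job` on a boto3 `bedrock-agent` client until the job reaches a terminal status. The knowledge base, data source, and job IDs are placeholders for the values from the Terraform outputs and the ingestion step. A scripted fake client replaces the real one so the example runs offline.

```python
import time

TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def wait_for_ingestion(bedrock_agent, kb_id, ds_id, job_id,
                       poll_seconds=15.0, timeout_seconds=1800.0, sleep=time.sleep):
    """Poll get_ingestion_job until the job reaches a terminal status; return that status."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        job = bedrock_agent.get_ingestion_job(
            knowledgeBaseId=kb_id, dataSourceId=ds_id, ingestionJobId=job_id
        )["ingestionJob"]
        if job["status"] in TERMINAL_STATUSES:
            return job["status"]
        if time.monotonic() >= deadline:
            raise TimeoutError(f"ingestion job {job_id} is still {job['status']}")
        sleep(poll_seconds)


class FakeBedrockAgent:
    """Offline stand-in that returns a scripted sequence of job statuses."""

    def __init__(self, statuses):
        self._statuses = iter(statuses)

    def get_ingestion_job(self, **kwargs):
        return {"ingestionJob": {"status": next(self._statuses)}}


fake = FakeBedrockAgent(["STARTING", "IN_PROGRESS", "COMPLETE"])
status = wait_for_ingestion(fake, "KB_ID", "DS_ID", "JOB_ID", sleep=lambda s: None)
print(status)  # COMPLETE
```

With a real deployment, you would pass `boto3.client("bedrock-agent", region_name="us-east-1")` and the real IDs, and keep the default `sleep`.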

Retrieve the API ID and key. These will be used to send requests to the application.

Now you can run the API call. The time command provides metrics on how long the application takes to respond.

Change the time output format to make it easier to read:

Send the request:

The first call can take several seconds. Subsequent calls with the same question take less time (about half a second end to end in our tests, with the cache read itself taking only milliseconds) because the answer is stored in the persistent cache after it is first generated.
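The request can also be sent from Python. The following sketch is hypothetical: the endpoint URL, HTTP method, and JSON payload shape are assumptions, because the exact request format is defined by the API in the GitHub repo. The only fixed detail is that API Gateway API keys are passed in the `x-api-key` header. `timed` plays the role of the time command.

```python
import json
import time
import urllib.request


def build_chat_request(api_url: str, api_key: str, question: str) -> urllib.request.Request:
    """Build a POST request carrying the question and the API Gateway API key."""
    body = json.dumps({"question": question}).encode("utf-8")  # hypothetical payload shape
    return urllib.request.Request(
        api_url,
        data=body,
        headers={"x-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def timed(send, request):
    """Call send(request) and return (result, elapsed_seconds), like the time command."""
    start = time.perf_counter()
    result = send(request)
    return result, time.perf_counter() - start


# Hypothetical URL; substitute the API ID and stage from your deployment.
req = build_chat_request(
    "https://API_ID.execute-api.us-east-1.amazonaws.com/STAGE/chat",
    "API_KEY",
    "What is the operational excellence pillar?",
)
# Against a real deployment: timed(lambda r: urllib.request.urlopen(r).read(), req)
result, elapsed = timed(lambda r: b"offline stub", req)
print(req.get_method(), elapsed >= 0)
```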

For example, the following output shows the first time that the API responds to the question “What is the operational excellence pillar?” and it takes 5.021 seconds:

You can further explore details about the latency of this call on the X-Ray trace console. The following graph shows that most of the time is spent in answering the question with Knowledge Bases for Amazon Bedrock using Anthropic Claude 3 Sonnet (4.30 seconds), whereas adding the answer to the cache only takes 0.005 seconds (or 5 milliseconds).

If you run the same API call again, the latency is decreased to just 0.508 seconds:

For this request, the X-Ray trace shows that most of the time is spent generating the embedding of the question (0.325 seconds), whereas reading from the cache takes only 0.004 seconds (4 milliseconds).

The answer to a similar question, like “Tell me more about the operational excellence pillar,” should be found in the cache too, because it’s semantically similar to the question that was asked before:

In this case, it took 0.589 seconds, even though that exact question hasn’t been asked before:

Results

Now we can calculate how much a persistent semantic cache can save in latency and costs at scale for this chatbot application according to different cache hit rates.

The results table assumes the following:

The chatbot receives 100,000 questions per day.

Answers that are found in the cache (cache hit) take 0.6 seconds (600 milliseconds) to be returned to the user. This accounts for the entire run of the API call, with the cache read taking only single-digit milliseconds.

Answers that are not found in the cache (cache miss) and that need to be generated by Anthropic Claude 3 Sonnet take 6 seconds (6,000 milliseconds) to be returned to the user. This accounts for the entire run of the API call, with the addition of the response to the cache taking only single-digit milliseconds.

Each question requires 1,900 input tokens and 250 output tokens to be answered by Anthropic Claude 3 Sonnet, at $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens.

We use a MemoryDB cluster with three db.r7g.xlarge instances at $0.617 per hour, 100% utilization, and 17 GB of data written per day at $0.20 per GB. The cache has been warmed up in advance.

For simplicity, the costs of other services are not included in the calculation. There would be additional savings, such as lower Lambda costs, because the function runs for less time when there is a cache hit.

| Cache Hit Ratio | Latency | Total Input Tokens per Day (Anthropic Claude 3 Sonnet) | Total Output Tokens per Day (Anthropic Claude 3 Sonnet) | Answer Generation Cost per Day | MemoryDB Cost per Day | Generation Cost per Month (Includes MemoryDB) | Savings per Month |
|---|---|---|---|---|---|---|---|
| No cache | 100,000 questions answered in 6 seconds | 190,000,000 | 25,000,000 | $945 | N/A | $28,350.00 | $0 |
| 10% | 10,000 questions answered in 0.6 seconds; 90,000 questions answered in 6 seconds | 171,000,000 | 22,500,000 | $850.50 | $48.44 | $26,968.23 | $1,381.77 |
| 25% | 25,000 questions answered in 0.6 seconds; 75,000 questions answered in 6 seconds | 142,500,000 | 18,750,000 | $708.75 | $48.44 | $22,715.73 | $5,634.27 |
| 50% | 50,000 questions answered in 0.6 seconds; 50,000 questions answered in 6 seconds | 95,000,000 | 12,500,000 | $472.50 | $48.44 | $15,628.23 | $12,721.77 |
| 75% | 75,000 questions answered in 0.6 seconds; 25,000 questions answered in 6 seconds | 47,500,000 | 6,250,000 | $236.25 | $48.44 | $8,540.73 | $19,809.27 |
| 90% | 90,000 questions answered in 0.6 seconds; 10,000 questions answered in 6 seconds | 19,000,000 | 2,500,000 | $94.50 | $48.44 | $4,288.23 | $24,061.77 |

The table shows that even with a small cache hit ratio of 10%, the savings surpass the cost of the MemoryDB cluster.
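The rows in the table can be reproduced with a short calculation. The following sketch encodes the stated assumptions and takes the MemoryDB cost per day directly from the table. It assumes a 30-day month, which is what the table’s monthly figures imply. It also computes the average end-to-end latency for each cache hit ratio, which the table expresses only as counts of fast and slow answers.

```python
QUESTIONS_PER_DAY = 100_000
INPUT_TOKENS, OUTPUT_TOKENS = 1_900, 250     # tokens per generated answer
INPUT_PRICE, OUTPUT_PRICE = 0.003, 0.015     # USD per 1,000 tokens, Claude 3 Sonnet
HIT_SECONDS, MISS_SECONDS = 0.6, 6.0         # end-to-end latency per request
MEMORYDB_PER_DAY = 48.44                     # USD per day, as listed in the table
DAYS_PER_MONTH = 30                          # implied by $945 x 30 = $28,350

COST_PER_MISS = INPUT_TOKENS / 1000 * INPUT_PRICE + OUTPUT_TOKENS / 1000 * OUTPUT_PRICE
BASELINE_MONTHLY = QUESTIONS_PER_DAY * COST_PER_MISS * DAYS_PER_MONTH  # no cache


def scenario(hit_ratio: float) -> dict:
    """Daily tokens, costs, savings, and average latency for a given cache hit ratio."""
    misses = round(QUESTIONS_PER_DAY * (1 - hit_ratio))  # only misses invoke the FM
    generation_per_day = misses * COST_PER_MISS
    memorydb_per_day = MEMORYDB_PER_DAY if hit_ratio > 0 else 0.0
    monthly_cost = (generation_per_day + memorydb_per_day) * DAYS_PER_MONTH
    return {
        "input_tokens": misses * INPUT_TOKENS,
        "output_tokens": misses * OUTPUT_TOKENS,
        "generation_per_day": generation_per_day,
        "monthly_cost": monthly_cost,
        "monthly_savings": BASELINE_MONTHLY - monthly_cost,
        "avg_latency_s": hit_ratio * HIT_SECONDS + (1 - hit_ratio) * MISS_SECONDS,
    }


for ratio in (0.0, 0.10, 0.25, 0.50, 0.75, 0.90):
    row = scenario(ratio)
    print(
        f"{ratio:>4.0%}  avg latency {row['avg_latency_s']:.2f} s  "
        f"monthly ${row['monthly_cost']:,.2f}  savings ${row['monthly_savings']:,.2f}"
    )
```

The results match the table to within a few cents, because the table’s monthly figures carry a slightly more precise MemoryDB daily cost than the rounded $48.44.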

Clean up

To avoid incurring additional costs, clean up all the resources you created previously with Terraform:

Conclusion

In this post, we covered the basic concepts of a persistent semantic cache powered by MemoryDB and the deployment of a simple chatbot that uses Knowledge Bases for Amazon Bedrock to answer questions and save them in the durable semantic cache for their reuse. After we deployed the solution, we tested the API endpoint with the same and semantically similar questions to showcase how latency decreases when the cache is used. Finally, we ran a cost calculation according to the cache hit ratio that showcased considerable savings while reducing the latency of responses to users.

To learn more and get started building generative AI applications on AWS, refer to Vector search overview and Amazon Bedrock.

About the authors

Santiago Flores Kanter is a Senior Solutions Architect specialized in machine learning and serverless at AWS, supporting and designing cloud solutions for digital-based customers, based in Denver, Colorado.

Sanjit Misra is a Senior Technical Product Manager on the Amazon ElastiCache and Amazon MemoryDB team, focused on generative AI and machine learning, based in Seattle, WA. For over 15 years, he has worked in product and engineering roles related to data, analytics, and AI/ML.

Lakshmi Peri is a Sr Specialist Solutions Architect specialized in NoSQL databases focused on generative AI and machine learning. She has more than a decade of experience working with various NoSQL databases and architecting highly scalable applications with distributed technologies.
