Large language models (LLMs) have given rise to Role-Playing Agents (RPAs), which are designed to simulate specific characters and interact with users or other characters. Unlike AI productivity assistants, RPAs aim to provide emotional value and support sociological studies, with applications ranging from emotional companions to digital replicas and social simulations. Their main objective is to offer immersive, human-like interactions. However, current role-playing research relies mostly on the textual modality, which falls short of how humans perceive the real world. Human understanding combines multiple modalities, especially visual and textual, so multimodal capabilities are crucial for RPAs to deliver more realistic interactions.

Efforts to create RPAs have mostly focused on LLMs trained on high-quality, character-specific dialogues. Recent works have built datasets of such dialogues to develop RPAs that can offer emotional value to humans or aid sociological studies. However, these studies are limited to text-based approaches. Evaluating RPAs is also challenging, and several methods have been proposed, including multiple-choice questions, reward models, and human assessments. Meanwhile, large multimodal models (LMMs) have emerged as advanced AI systems that combine different types of data, especially text and images. Both closed-source and open-source LMMs have been developed, and they are used in healthcare, document analysis, and GUI navigation. However, the use of LMMs for role-playing has yet to be explored.

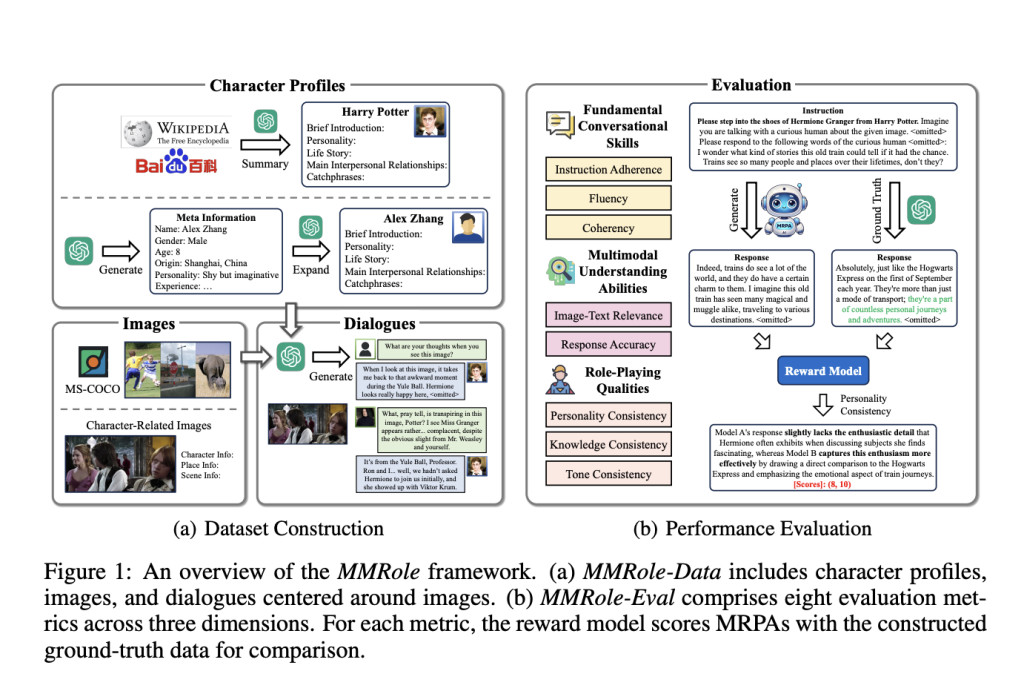

Researchers from the Gaoling School of Artificial Intelligence at Renmin University of China and the College of Information and Electrical Engineering at China Agricultural University have proposed a novel concept called Multimodal Role-Playing Agents (MRPAs). These agents are designed to simulate specific characters and engage in image-based conversations with humans or other characters. The researchers also developed MMRole, a framework for creating and evaluating MRPAs that has two main components: a large-scale, high-quality dataset and a robust evaluation method. The dataset, MMRole-Data, contains character profiles, images, and image-based conversations for various character types.
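As a rough illustration of what one dataset entry might hold, the sketch below models a character profile, an image, and an image-based conversation as plain Python dataclasses. The class names, field names, and example values are hypothetical and chosen only for illustration; they are not the actual MMRole-Data schema.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One utterance in an image-based conversation."""
    speaker: str
    text: str


@dataclass
class MRPASample:
    """Hypothetical record: one character, one image, one dialogue."""
    character_name: str
    character_profile: str
    image_path: str
    turns: list[Turn] = field(default_factory=list)

    def dialogue_history(self) -> str:
        """Flatten the turns into plain text, one utterance per line."""
        return "\n".join(f"{t.speaker}: {t.text}" for t in self.turns)


# Made-up example values, not taken from the dataset.
sample = MRPASample(
    character_name="Example Character",
    character_profile="A cheerful explorer who loves maps.",
    image_path="images/example.jpg",
    turns=[
        Turn("user", "What do you see in this picture?"),
        Turn("Example Character", "A trail I would love to follow!"),
    ],
)
print(sample.dialogue_history())
```

Keeping the profile, image reference, and turns in one record makes it easy to assemble the text that accompanies the image when prompting a multimodal model.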

The MMRole framework uses a reward model to evaluate MRPAs by comparing their responses against ground-truth data across eight metrics. For each metric, the reward model assigns a pair of scores, and the final score is calculated as their ratio. The MMRole-Data dataset contains 85 characters, over 11K images, and more than 14K dialogues, and it is split so that generalization can be tested. The dialogues fall into three types, each with a different turn structure. To create the specialized MRPA, called MMRole-Agent, the QWen-VL-Chat model is fine-tuned on the MMRole-Data training set. Fine-tuning uses 8 A100 GPUs, a learning rate, three training epochs, and a maximum model length of 3072 to accommodate detailed character profiles and dialogue history.
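The ratio-based scoring can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's actual implementation: it assumes each score pair holds one score for the evaluated agent and one for the ground-truth response, that the ratio is agent over ground truth, and that an overall score is the unweighted mean of per-metric ratios. The metric names are limited to ones mentioned in this article (the full list of eight is not given here), and all numbers are invented.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class ScorePair:
    """Scores a reward model gives for one metric (assumed layout)."""
    agent: float         # score for the evaluated MRPA's response
    ground_truth: float  # score for the reference response


def metric_ratio(pair: ScorePair) -> float:
    """Final score for one metric: agent score divided by ground-truth score."""
    if pair.ground_truth <= 0:
        raise ValueError("ground-truth score must be positive")
    return pair.agent / pair.ground_truth


def overall_score(pairs: dict[str, ScorePair]) -> float:
    """Assumption: aggregate metrics with an unweighted mean of ratios."""
    return mean(metric_ratio(p) for p in pairs.values())


# Invented scores for three of the metrics named in the article.
example = {
    "fluency": ScorePair(agent=9.0, ground_truth=9.0),
    "personality_consistency": ScorePair(agent=6.0, ground_truth=8.0),
    "tone_consistency": ScorePair(agent=7.0, ground_truth=8.0),
}
ratios = {name: metric_ratio(p) for name, p in example.items()}
print(ratios)
print(overall_score(example))
```

Under these assumptions, a ratio near 1.0 means the agent's response was rated about as highly as the ground truth, which is one way to read a score such as 0.994.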

The evaluation results highlight several performance insights. Among MRPAs with over 100 billion parameters, Claude 3 Opus performs best. LLaVA-NeXT-34B leads among models with tens of billions of parameters, while MMRole-Agent stands out among models with billions of parameters, reaching a score of 0.994 and significantly improving over its base model, QWen-VL-Chat. These results indicate that training methods and data quality are crucial for enhancing LMMs. Moreover, MMRole-Agent generalizes well, performing strongly on both in-distribution and out-of-distribution test sets. Although all MRPAs are strongly fluent, they still struggle with multimodal understanding and with role-playing qualities such as personality and tone consistency.

In conclusion, the researchers have introduced the concept of Multimodal Role-Playing Agents (MRPAs), which extend traditional Role-Playing Agents with multimodal understanding. They also developed MMRole-Data, a dataset for creating and testing MRPAs. The results show that MMRole-Agent, the first specialized MRPA, performs better and generalizes more effectively than existing models. However, the results also show that multimodal understanding and role-playing quality remain challenging in the development of MRPAs. Further progress in multimodal AI interactions could lead to more realistic and engaging role-playing experiences in various applications.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post MMRole: A New Artificial Intelligence AI Framework for Developing and Evaluating Multimodal Role-Playing Agents appeared first on MarkTechPost.
