Large Language Models (LLMs) such as OpenAI's ChatGPT and GPT-4 are advancing rapidly and transforming Natural Language Processing (NLP) and Natural Language Generation (NLG), paving the way for a wide range of Artificial Intelligence (AI) applications. Despite these improvements, LLMs still struggle in fields such as finance, law, and medicine that demand specialized expertise.

A team of researchers from the University of Oxford has developed an AI framework called MedGraphRAG to improve the performance of LLMs in the medical field. The framework produces evidence-based results, which are essential for making LLMs safer and more reliable when handling sensitive medical data.

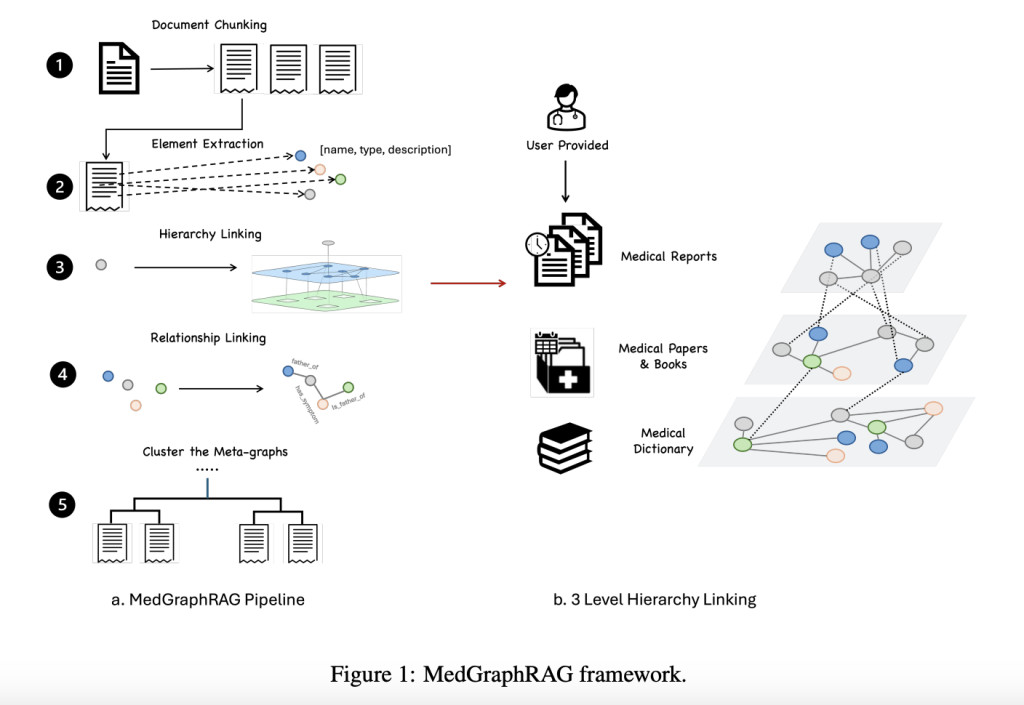

Hybrid static-semantic document chunking is the document processing approach that forms the basis of the MedGraphRAG system. It captures context better than standard techniques: rather than simply dividing documents into fixed-size pieces, it takes the semantic content into account when placing chunk boundaries. This step is crucial in domains such as medicine, where accurate information retrieval and response generation depend on a thorough grasp of context.
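To make the idea concrete, here is a minimal sketch of a hybrid chunker. It is not the authors' implementation. It assumes the static step is a split on blank lines and the semantic step is a similarity check between neighbouring pieces; the `jaccard` word-overlap score is a toy stand-in for whatever semantic judgment the real system uses, and `hybrid_chunk`, `threshold`, and `max_words` are hypothetical names chosen for illustration.

```python
import re


def jaccard(a: str, b: str) -> float:
    """Toy stand-in for a semantic similarity check (plain word overlap)."""
    words_a = set(re.findall(r"[a-z]+", a.lower()))
    words_b = set(re.findall(r"[a-z]+", b.lower()))
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def hybrid_chunk(text: str, threshold: float = 0.2, max_words: int = 200) -> list[str]:
    """Split statically on blank lines, then merge neighbours that share a topic."""
    # Static step (assumption): paragraphs are separated by blank lines.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]

    chunks: list[str] = []
    for para in paragraphs:
        if chunks:
            merged_len = len(chunks[-1].split()) + len(para.split())
            # Semantic step (assumption): extend the current chunk only while
            # the topic looks the same and the chunk stays under the size cap.
            if jaccard(chunks[-1], para) >= threshold and merged_len <= max_words:
                chunks[-1] = chunks[-1] + "\n\n" + para
                continue
        chunks.append(para)
    return chunks
```

In a real pipeline the overlap score would likely be replaced by an embedding comparison or an LLM judgment; the size cap keeps chunks within a model's context budget even when the topic does not change.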

Once the documents have been chunked, the next step is to extract important entities from the text. These entities might be medical terms, conditions, therapies, or any other pertinent medical data. A three-tier hierarchical graph structure is then built from the extracted entities. This graph links the entities to foundational medical knowledge drawn from reliable medical dictionaries and articles. Organizing the graph into tiers ensures that the different levels of medical knowledge are properly connected, which enables more accurate and dependable information retrieval.
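The tiered linking described above could be represented roughly as follows. This is a sketch under stated assumptions, not the framework's actual data model: the article does not say which tier holds what, so the numbering here (1 for entities from the source documents, 2 for reference articles, 3 for dictionary definitions) is a guess, and `Entity`, `TieredGraph`, `link`, and `grounding` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Entity:
    name: str
    kind: str  # e.g. "condition", "therapy", "definition"
    tier: int  # assumed: 1 = source document, 2 = reference article, 3 = dictionary


@dataclass
class TieredGraph:
    # Maps an entity to the entities one tier up that support it.
    edges: dict[Entity, set[Entity]] = field(default_factory=dict)

    def link(self, lower: Entity, upper: Entity) -> None:
        """Connect an entity to supporting knowledge in the next tier up."""
        if upper.tier != lower.tier + 1:
            raise ValueError("links must go to the next tier up")
        self.edges.setdefault(lower, set()).add(upper)

    def grounding(self, entity: Entity) -> list[Entity]:
        """Follow links upward and return everything that backs this entity."""
        found: list[Entity] = []
        stack = [entity]
        while stack:
            parents = sorted(self.edges.get(stack.pop(), ()), key=lambda e: e.name)
            for parent in parents:
                found.append(parent)
                stack.append(parent)
        return found
```

With a structure like this, an answer that mentions a document-level entity can cite the article and dictionary entries returned by `grounding`, which is the kind of traceability the article attributes to the tiered design.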

The connections among these entities form meta-graphs, which are sets of related entities with similar semantic properties. The meta-graphs are then merged into a single global graph. This global graph serves as a comprehensive knowledge base from which the LLM can retrieve information and generate responses precisely. Because the graph links a wide range of interrelated data points, the model can retrieve and synthesize information across them, producing more accurate and contextually relevant replies.
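A simple way to picture the merge step is as a union of adjacency maps. The sketch below assumes each meta-graph is a mapping from an entity name to its related entity names, and that entities are matched by case-insensitive name; both are illustrative assumptions, since the article does not describe how the framework actually matches or merges entities. `MetaGraph` and `merge_meta_graphs` are hypothetical names.

```python
from collections import defaultdict

# Assumed shape: entity name -> names of related entities.
MetaGraph = dict[str, set[str]]


def merge_meta_graphs(meta_graphs: list[MetaGraph]) -> MetaGraph:
    """Union several meta-graphs into one undirected global graph."""
    global_graph: defaultdict[str, set[str]] = defaultdict(set)
    for graph in meta_graphs:
        for entity, neighbours in graph.items():
            key = entity.strip().lower()  # naive name normalization (assumption)
            for neighbour in neighbours:
                other = neighbour.strip().lower()
                # Store the relation in both directions so either end can be
                # used as a starting point during retrieval.
                global_graph[key].add(other)
                global_graph[other].add(key)
    return dict(global_graph)
```

Entities that appear in more than one meta-graph become the bridges of the global graph: after merging, their neighbour sets contain relations contributed by every meta-graph that mentioned them.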

U-retrieve is the technique that powers MedGraphRAG’s retrieval procedure. It is meant to strike a balance between global awareness, meaning the model’s comprehension of the broader context, and the efficiency of indexing and retrieving pertinent data. Even for intricate medical questions, U-retrieve is designed to let the LLM traverse the hierarchical graph quickly and accurately to locate the most pertinent information.
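The article does not spell out how U-retrieve works internally. One plausible reading of the name, offered here purely as an illustrative assumption, is a U-shaped traversal: descend through summarized levels of the graph toward the most relevant detail, then climb back up while collecting context. The sketch below shows that shape with hypothetical names (`Node`, `u_shaped_lookup`) and a toy tag-overlap score; it should not be taken as the authors' algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    tags: set[str]                # keywords summarizing this part of the graph
    text: str = ""                # evidence or summary text stored at this node
    children: list["Node"] = field(default_factory=list)


def u_shaped_lookup(root: Node, query_tags: set[str]) -> list[str]:
    """Descend toward the best-matching leaf, then gather text on the way back up."""
    # Downward pass: at each level follow the child whose tags overlap the query most.
    path = [root]
    node = root
    while node.children:
        node = max(node.children, key=lambda child: len(child.tags & query_tags))
        path.append(node)
    # Upward pass: most specific evidence first, broader context after it.
    return [n.text for n in reversed(path) if n.text]
```

Following a single branch keeps the lookup cheap, while the upward pass restores some of the broader context; that is one way to trade off the efficiency and global awareness the article mentions.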

The researchers evaluated MedGraphRAG extensively. According to the study, its hierarchical graph construction technique consistently outperformed state-of-the-art models on a variety of medical Q&A benchmarks. The research also confirmed that the answers MedGraphRAG produced included references to the source documentation, which boosts the LLM’s reliability and credibility in real-world medical settings.

The team has summarized their primary contributions as follows.

A comprehensive pipeline has been presented that utilizes graph-based Retrieval-Augmented Generation (RAG), which is specifically designed for the medical domain.

A novel method for hierarchical graph construction and data retrieval has been introduced, which enables Large Language Models to use holistic private medical data to produce evidence-based responses efficiently.

The method has proven stable and effective in validation experiments on common medical benchmarks, reliably achieving state-of-the-art (SOTA) performance across multiple model versions.

In conclusion, MedGraphRAG is a big step forward for the use of LLMs in the medical industry. This framework increases the safety and dependability of LLMs in handling sensitive medical data while also improving the accuracy of the responses they generate. It emphasizes evidence-based results and utilizes an advanced graph-based retrieval system.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post MedGraphRAG: An AI Framework for Improving the Performance of LLMs in the Medical Field through Graph Retrieval Augmented Generation (RAG) appeared first on MarkTechPost.
