In Replace Amazon QLDB with Amazon Aurora PostgreSQL for audit use cases, we described why Amazon Aurora PostgreSQL-Compatible Edition is an excellent alternative to Amazon QLDB for common ledger database use cases, where keeping a reliable and trustworthy audit of data changes is a core requirement.

In this post, we demonstrate a process for migrating an Amazon QLDB ledger into Amazon Aurora PostgreSQL using the US Department of Motor Vehicles (DMV) sample ledger from the tutorial in the Amazon QLDB Developer Guide as an example. You may use this solution as a foundation for your own migration, altering it as necessary for your schema and migration strategy.

Migration decisions

When performing a database migration, you must decide what data will be migrated. For some applications, migrating the entire database with all historical data is desired. For others, migrating only recent data—the last 12 months, for example—and archiving older data is the best choice. However, others may decide to migrate recent data to accelerate application cutover, migrating older data to the new database later. Few migrations today are performed all at once, requiring the target database to be kept up to date with the source database for a period of time until cutover.

The solution demonstrated in this post performs a base migration of the entire source ledger into the target database, then copies ongoing changes in the source database to the target until cutover. The solution is modular, allowing you to tailor it to your particular migration strategy.

You must also decide how to model the data in the target database and transform the ledger data to fit that model during migration. The Amazon QLDB document data model supports complex, structured documents that may contain nested elements. Tables in Amazon QLDB may contain objects with different document structures. Mapping the ledger’s flexible document model to a more rigid relational database schema can be challenging. Furthermore, the structure of a document may change over time. The migration process can’t assume that every revision of a given document has the same structure. Given these challenges, you may choose to migrate the data into Amazon Aurora PostgreSQL as JSON, which greatly simplifies the migration, but it may not be the most efficient option for accessing your data in the relational database. The alternative is to normalize the ledger data during migration, converting the document model to a relational model and accounting for any changes to the document model that occurred over time. This approach is more complex, requires more coding, and is prone to unexpected conversion errors that disrupt the migration process, but it may be a better choice, depending on how the data is accessed and used in your relational database management service.

In this solution, we demonstrate both approaches. The ledger data from the vehicle registration sample application is normalized into a relational model, but the revision metadata from the ledger is stored in Amazon Aurora PostgreSQL as a JSONB type.

Solution overview

The migration is performed in two phases: full load and ongoing replication. The full load performs an efficient bulk load of data from the source database into the target database. The source database may continue serving traffic until the application cuts over to use the target database, so it may contain data changes that were not migrated by the full load. The ongoing replication or change data capture (CDC) phase continually captures changes and migrates them to the target database, keeping it up to date with the source until the application is pointed to the target database.

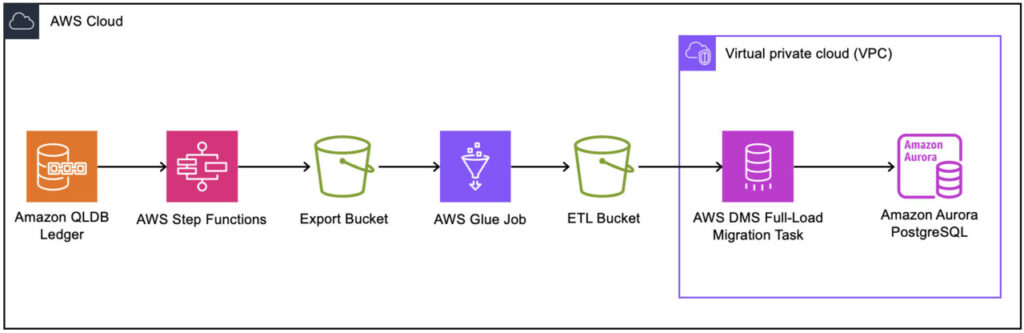

In the full-load phase, the Amazon QLDB ledger is exported into an Amazon Simple Storage Service (Amazon S3) bucket by an AWS Step Functions state machine. The export contains data from all transactions as of the moment the export was initiated. An AWS Glue job extracts the ledger document revisions from the exported files and transforms them into CSV format, which AWS Database Migration Service (AWS DMS) can use. The AWS Glue job writes the transformed CSV files to Amazon S3. An AWS DMS task reads the CSV files from Amazon S3 and loads their data into an Aurora PostgreSQL database. Although this post demonstrates migrating data into Amazon Aurora PostgreSQL, AWS DMS supports a variety of target endpoints, so the process demonstrated here can be adapted to migrate to other databases.

The following diagram illustrates the solution architecture.

The ongoing replication phase captures new updates to the ledger and migrates them to the target Aurora PostgreSQL database in near real time. The process relies on the Amazon QLDB streaming feature. The solution identifies the last ledger block in the export so that the stream can begin with the first block committed after the export began. As new transactions are committed to the ledger, Amazon QLDB sends those changes to Amazon Kinesis Data Streams. An AWS Lambda function consumes the events from the Kinesis data stream and writes their data to the target database, as shown in the following diagram.

Prerequisites

The migration solution is deployed using several AWS CloudFormation templates that set up the infrastructure and deploy the code used in the migration. The CloudFormation templates and related files are available in a GitHub repo and must be downloaded to your workstation. Download the project code with the following command:

The project contains the following files:

setup.yml – Deploys and populates the vehicle-registration ledger, the target Aurora PostgreSQL database, and related VPC networking

ledger-export.yml – Deploys components to export data from the ledger

ledger-full-migration.yml – Deploys the AWS Glue and AWS DMS components to extract, transform, and load (ETL) the exported ledger data into the target database

ledger-cdc-migration.yml – Sets up the Kinesis data stream for Amazon QLDB streaming and the stream consumer that writes ledger changes to the target database

dmv-postload-ddl.sql – Contains SQL statements to create indexes in the target database after the full-load phase of the migration is complete

Create the source and target databases

For this demonstration, we create an Amazon QLDB ledger to act as our source and an Aurora PostgreSQL cluster to act as our target, along with a VPC and related networking, AWS Secrets Manager secrets to hold database credentials, S3 buckets, AWS Identity and Access Management (IAM) roles, and other components required to launch an Aurora cluster. The setup populates the ledger database with data, creates the database and schema on the Aurora PostgreSQL cluster, and creates a database user for the migration.

This solution relies on the RDS Data API, which is not available in all regions. Consult this table to verify that your region supports the Data API.

If you’re using this solution as a foundation for your own migration, you can skip this section if you already have an Amazon QLDB ledger and Aurora PostgreSQL cluster setup.

Complete the following steps to deploy the components using AWS CloudFormation:

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Choose Create stack, then choose With new resources (standard).

Choose Upload a template file.

Choose Choose file and choose the setup.yml file from the GitHub project on your computer.

Choose Next.

On the Specify stack details page, enter ledger-migrate-setup for Stack name.

Leave all of the input parameters at their defaults, unless the CIDR for the VPC conflicts with other VPCs in your environment. In that case, modify the VPCCIDR, DatabaseSubnetCIDRs, and PublicSubnetCIDRs parameters so they don’t conflict. You may also need to adjust the default AuroraDBInstanceClass parameter. Not all instance types are available in all regions.

Choose Next.

On the Configure stack options page, choose Next.

On the Review and create page, select the check box to acknowledge the possible creation of IAM resources, then choose Submit.

When the stack is in the CREATE_COMPLETE stage, choose the Outputs tab to view the stack outputs. These values will be used as inputs for subsequent steps in the migration process.

Export data from Amazon QLDB

The first step in the migration is to export data from the source ledger to an S3 bucket. The export is composed of many files, and each file contains one or more ledger blocks in JSON format (see Journal export output in QLDB for details). Exporting even a medium-sized ledger may take several hours. To reduce the export time, multiple exports of a single ledger can run in parallel, with each export processing a portion of the ledger. Amazon QLDB supports up to two concurrent exports by default. If you have very large ledgers (hundreds of gigabytes), contact AWS Support to request an increase to that limit.

In this solution, we use Step Functions to perform the export. The state machine accepts the desired number of concurrent exports as a parameter. It fetches a ledger digest to obtain the last block number in the journal and uses that to divide the ledger into equal parts, dividing work evenly across exports. The state machine starts the export jobs and loops until they are all complete. When all of the export jobs have completed, the state machine fetches digests and proof hashes for the last block in each export and stores them in Amazon S3 so that the exports can be cryptographically verified after the source ledger has been deleted (this process is not demonstrated in this post).

To run the export, we first need to deploy the required components using AWS CloudFormation. Complete the following steps:

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Choose Create stack, then choose With new resources (standard).

Choose Upload a template file.

Choose Choose file and choose the ledger-export.yml file from the GitHub project on your computer.

Choose Next.

On the Specify stack details page, enter ledger-export for Stack name.

Leave the value of the LedgerName parameter set to its default value (“vehicle-registrationâ€).

Choose Next.

On the Configure stack options page, choose Next.

On the Review and create page, select the check box to acknowledge the possible creation of IAM resources, then choose Submit.

When the stack is in the CREATE_COMPLETE stage, open the Step Functions console and choose State machines in the navigation pane.

Choose the LedgerExporter state machine.

The state machine accepts a JSON object as input.

Enter the following snippet into the JSON editor. If you increased the concurrent export limit through AWS Support, change the value of ExportCount to the new limit.

Choose Start execution.

The state machine will run for about 10 minutes for the vehicle-registration ledger, but will run longer for bigger ledgers. Upon completion, the execution status will be Succeeded. The Graph view section of the run details page shows a visual depiction of the steps in the state machine.

Choose the Export node, copy the export IDs from the Output section, and save them in a text editor. You’ll need them in subsequent steps.

Choose the Digest node and copy the values of LastBlockNum and LastBlockTimestamp into a text editor for later.

The export is complete. The process created an S3 bucket called ledger-export-[ACCOUNT ID] with a folder called dmv that contains the exported ledger data in JSON format. The name of the folder was set in the BucketPrefix parameter in the input to the state machine.

Extract and transform data

With the ledger export complete, the next step is to extract the ledger data from the exported JSON files and transform it into CSV files for efficient loading into Amazon Aurora PostgreSQL. The solution creates an AWS Glue job to perform the extraction and transformation. AWS Glue uses Apache Spark to distribute the export dataset across multiple compute nodes for concurrent processing, reducing the time required to process the data from large ledgers. The AWS Glue job reads the exported data from the S3 bucket created in the export step and writes its output into a new ETL bucket created by the process.

The AWS Glue job is built to convert the tables from the k ledger into the schema we’ve designed for the target database, flattening the structure of ledger documents into the rows and columns of a relational database. To use this process to migrate your ledger, you need to modify portions of the AWS Glue job’s PySpark code to accommodate your data model.

To perform the extraction and transformation, deploy the required components using AWS CloudFormation:

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Choose Create stack, then choose With new resources (standard).

Choose Upload a template file.

Choose Choose file and choose the ledger-full-migration.yml file from the GitHub project on your computer.

Choose Next.

This template will deploy the components needed for this phase of the migration and the next.

On the Specify stack details page, enter ledger-full-migrage for Stack name.

Unlike the export stack, this template requires input parameters.

Provide the following input parameters:

For LedgerName, enter the value of the LedgerName output parameter from the ledger-migrate-setup stack.

For ExportIds, enter the export IDs copied from the state function run, formatted as a comma-delimited list.

For ExportBasePrefix, enter dmv/.

For GlueWorkerType, enter G.2X.

For NumberOfGlueWorkers, enter 2.

For ReplicationInstanceSubnets, enter the subnets from the DatabaseSubnets parameter from the ledger-migrate-setup stack.

For ReplicationInstanceClass, enter dms.r6i.large.

For SecurityGroups choose the security groups from the DatabaseSecurityGroups parameter from the ledger-migrate-setup stack.

For TargetDatabaseSecretName, enter the value of the MigrateDatabaseUserSecretName output parameter from the ledger-migrate-setup stack.

For TargetDatabaseName, enter the value of the TargetDatabaseName output parameter from the ledger-migrate-setup stack.

Choose Next.

On the Configure stack options page, choose Next.

On the Review and create page, select the check box to acknowledge the possible creation of IAM resources, then choose Submit.

When the CloudFormation stack deployment is complete, navigate to the AWS Glue console and choose ETL jobs in the navigation pane.

Select the ledger-dmv-migrate job and choose Run job.

Choose the name of the job to open the job details page.

Choose the Runs tab to view the status of the job.

When the job is complete, its status will be Succeeded.

The CloudFormation template created an S3 bucket called ledger-etl-[AccountId]. The AWS Glue job writes its output to a folder called dmv in that bucket. You can open the Amazon S3 console, navigate to the ledger-etl-[AccountId] bucket, and open the dmv folder. You’ll see a list of folders, one for each of the tables in the vehicle-registration ledger. These folders contain the latest revision of every non-deleted document in the table. There will be an additional folder for each ledger table containing the contents of the audit table that will be written to the target Aurora PostgreSQL database. The audit tables contain the complete revision history of all documents in their respective ledger tables.

Feel free to explore the folders and their contents. The folder table_counts stores a single file containing the name of each table from the ledger with the respective number of document revisions in the table. This can be useful for verifying that all records were migrated into the target database.

Full data migration

The full migration uses AWS DMS to read the CSV output of the AWS Glue job in the previous step and load it into Amazon Aurora PostgreSQL. AWS DMS truncates tables in the target database before loading data. Although we use Amazon Aurora PostgreSQL as our target database in this post, AWS DMS can migrate the AWS Glue job output to many other databases.

Complete the following steps to start the migration:

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Select the dmv-full-migration task, and on the Actions drop-down, choose Restart/Resume.

Choose Restart/Resume in the Possible data loss on target database alert.

The migration task will begin running. Its status will reflect the progress of the job. When the job is complete, its status will be Load complete. At that point, all of the data exported from the vehicle-registration ledger in Amazon QLDB will be migrated to the Aurora PostgreSQL database.Next, you create indexes and constraints in the Aurora PostgreSQL database.

On the Amazon RDS console, connect to the ledger database just as you did in earlier sections.

Copy all of the lines from the dmv-postload-ddl.sql file from the GitHub project, paste them into the RDS query editor, and choose Run.

Using the RDS query editor, run some of the following queries to explore the migrated data:

The ql_audit column in each table is a PostgreSQL JSONB type. This column contains revision metadata from the Amazon QLDB ledger.

Run the following query to see how to access individual fields in the JSON object:

Ongoing change data replication

The full migration process migrates all data exported from the ledger. However, the ledger may continue to be used after the export until applications that use the ledger are modified to use the Aurora PostgreSQL database. The next step of the migration is to capture ongoing changes as they happen and replicate them to the Aurora PostgreSQL database in near real time. The solution uses the Amazon QLDB streaming feature to send ledger changes to a Kinesis data stream in near real time. A Lambda function consumes ledger events from the data stream and writes them to the Aurora PostgreSQL database using the RDS Data API. The following diagram illustrates this workflow.

Complete the following steps to deploy the required components for change data replication using AWS CloudFormation:

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Choose Create stack, then choose With new resources (standard).

Choose Upload a template file.

Choose Choose file and choose the ledger-cdc-migration.yml file from the GitHub project on your computer.

Choose Next.

On the Specify stack details page, enter ledger-cdc-migrate for Stack name.

Enter the following stack parameters:

For AuroraClusterArn, enter the value of the AuroraClusterArn output parameter from the ledger-migrate-setup stack.

For AuroraDatabaseName, enter the value of the TargetDatabaseName output parameter from the ledger-migrate-setup stack.

For DatabaseUserSecretArn, enter the value of the MigrateDatabaseUserSecretARN output parameter from the ledger-migrate-setup stack.

For KinesisShardCount, enter 1.

For LastFullLoadBlock, enter the value of LastBlockNum taken from the export state machine.

For LedgerName, enter the value of the LedgerName output parameter from the ledger-migrate-setup stack.

For LedgerStreamStartTime, enter the value of LastBlockTimestamp taken from the export state machine.

Choose Next.

On the Configure stack options page, choose Next.

On the Review and create page, select the check box to acknowledge the possible creation of IAM resources, then choose Submit.

When the CloudFormation stack deployment is complete, navigate to the AWS Glue console and choose ETL jobs in the navigation pane.

When the stack deployment is complete, ongoing replication is active.

To see the replication in action, navigate to the PartiQL editor on the Amazon QLDB console.

Choose the vehicle-registration ledger on the Choose a ledger dropdown menu and enter the following query:

Navigate to the RDS query editor and run the following query against the target database:

The audit table contains two records for Melvin Parker. The original version contains the first name, spelled “MelVIN,†a version of 0, and an operation of I for INSERT. The updated version has the corrected first name “Melvin,†a version of 1, and an operation of U for UPDATE.

Now run the following query:

The live table contains the latest revision of Melvin Parker’s record. Note the first name and the version number.

Clean up

To delete the AWS infrastructure you created in this post, open the AWS CloudFormation console and delete the following stacks in order: ledger-cdc-migrate, ledger-full-migrate, ledger-export, and setup.yml.

The stacks leave behind two S3 buckets: ledger-export-[AccountId] and ledger-etl-[AccountId]. This is to prevent accidental deletion of critical Amazon QLDB ledger data if the solution is adapted to migrate a production ledger. For the vehicle-registration ledger migration, delete the contents of each bucket, then delete the buckets.

Adapt the solution for your ledger

You can adapt this migration solution to use with your own ledger. You’ll need to modify the schema definition in the PrepareTargetDatabase resource in the setup.yml template file in the project source to create your custom schema. You’ll also need to modify the dmv-postload-ddl.sql file to create primary and secondary indexes for your custom schema.

In the ledger-dmv-migrate AWS Glue job’s PySpark code, lines 141–146 define conversion functions and output columns for the tables in the source ledger in a Python dict called table_converters. The conversion functions flatten a single document revision from the ledger into columns that can be output as CSV. Modify the definition of table_converters and the related conversion functions for the data models of the source and target databases. The source code for the AWS Glue job is defined in the PutGlueJobCodeToS3 resource in the ledger-full-migration.yml file in the GitHub project.

The Lambda function that consumes ledger updates from the Kinesis data stream contains identical logic that must also be modified. The code to modify is defined in the StreamConsumerFunction resource in the ledger-cdc-migration.yml file in the GitHub project.

Finally, the SourceEndpoint resource in the ledger-full-migration.yml file defines an AWS DMS source endpoint that defines the structure of the CSV files produced by the AWS Glue job. This definition must be modified to match the new structure. See Defining external tables for Amazon S3 as a source for AWS DMS for more information on the AWS DMS table definition format.

Before you perform the migration, back up the target database. The migration solution truncates tables in the target database and doesn’t roll back the entire migration for errors.

Summary

In this post, we presented a solution for migrating Amazon QLDB ledgers into Amazon Aurora PostgreSQL using the vehicle registration sample database from the tutorial in the Amazon QLDB Developer Guide. You can adapt this solution to migrate your own ledger, and its modular design allows you to adapt it to your migration strategy.

For more information about this solution or help planning your migration, contact your AWS representative.

About the Author

Dan Blaner is a Principal Solutions Architect specializing in ledger and relational databases. He enjoys learning, figuring stuff out, and helping other people figure stuff out. In his free time, he plays the bass and enjoys making bad music with good friends.

Source: Read More