Large language models (LLMs) store rules and knowledge in their parameters, yet they show clear limitations when reasoning implicitly over that parametric memory. Research has shown that even highly capable models such as GPT-4 struggle to apply and integrate internalized facts reliably. For instance, they frequently compare the properties of two entities incorrectly even when they know both entities. These deficits have important consequences: they make it harder to induce structured, condensed representations of rules and facts, which makes changes difficult to propagate and leads to redundant knowledge storage, ultimately impairing the model’s capacity to generalize knowledge systematically.

In a recent study, researchers from Ohio State University and Carnegie Mellon University examined whether deep learning models such as transformers can learn to reason implicitly over parametric knowledge. The study focuses on two types of reasoning: comparison, which assesses the similarities or differences between items, and composition, which combines several pieces of information.
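To make the two reasoning types concrete, here is a minimal sketch written as explicit lookups. The entities, relations, and ages are hypothetical and invented for illustration; they are not taken from the study. An implicit reasoner would have to produce the same answers from its parameters alone, without the intermediate steps being written out.

```python
# Hypothetical atomic facts, invented for illustration (not the study's data).
relations = {
    ("Alice", "mother"): "Beth",
    ("Beth", "employer"): "Acme",
}
ages = {"Alice": 30, "Beth": 58}


def compose(head, first_relation, second_relation):
    """Composition: follow one relation, then a second one from the bridge entity."""
    bridge = relations[(head, first_relation)]
    return relations[(bridge, second_relation)]


def compare(entity_a, entity_b, attribute):
    """Comparison: look up the same attribute for both entities and relate the values."""
    a, b = attribute[entity_a], attribute[entity_b]
    if a < b:
        return "<"
    if a > b:
        return ">"
    return "="


# "Who employs Alice's mother?" combines two stored facts.
employer_of_mother = compose("Alice", "mother", "employer")
# "Is Alice younger or older than Beth?" compares two stored attribute values.
age_relation = compare("Alice", "Beth", ages)
```

Here `employer_of_mother` is `"Acme"` and `age_relation` is `"<"`. Neither answer is stored directly; each has to be derived from two separate facts.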

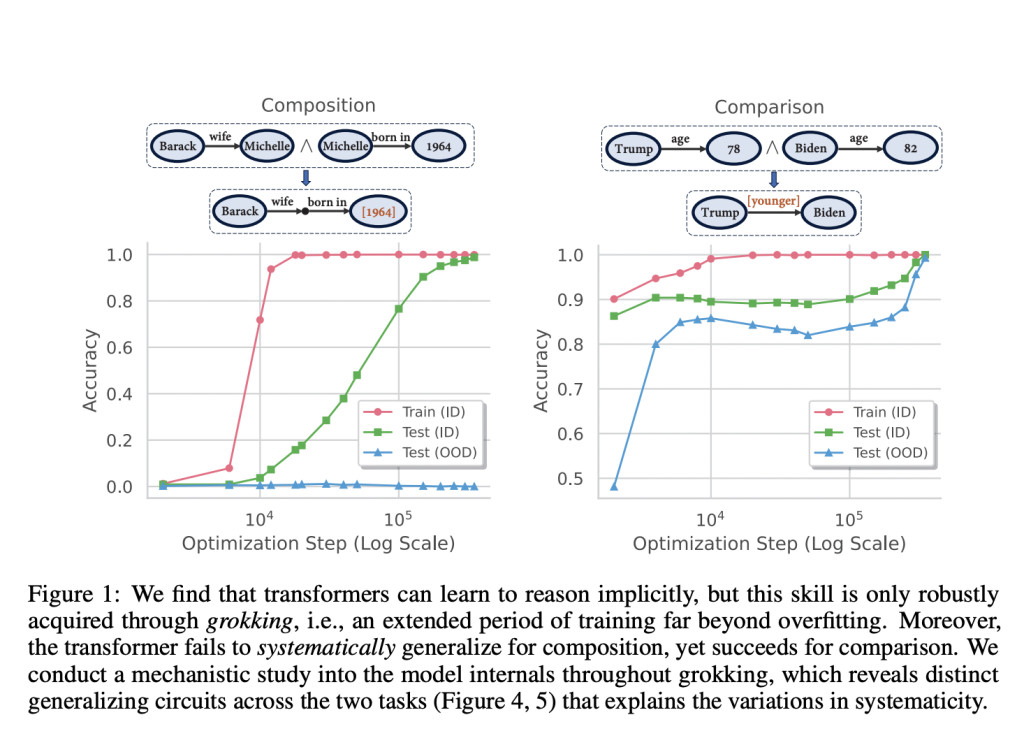

The team found that transformers can learn implicit reasoning, but only robustly through a process called grokking. Grokking refers to continuing training far past the point of overfitting, after which the model learns the underlying patterns rather than merely memorizing the training data.
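One rough way to see grokking in training logs is to measure how long held-out accuracy lags behind training accuracy. The sketch below assumes accuracy is logged at regular intervals; the function names, the 0.99 threshold, and the curves are all hypothetical, and the numbers are synthetic rather than results from the study.

```python
def first_step_reaching(accuracies, threshold=0.99):
    """Return the index of the first logged step with accuracy >= threshold, or None."""
    for step, accuracy in enumerate(accuracies):
        if accuracy >= threshold:
            return step
    return None


def grokking_delay(train_acc, val_acc, threshold=0.99):
    """Logged steps between fitting the training set and generalizing to held-out data."""
    fit_step = first_step_reaching(train_acc, threshold)
    generalize_step = first_step_reaching(val_acc, threshold)
    if fit_step is None or generalize_step is None:
        return None  # one of the curves never reached the threshold
    return generalize_step - fit_step


# Synthetic curves, made up purely to show the shape of a grokking run:
# training accuracy saturates early, held-out accuracy catches up much later.
train_acc = [0.40, 0.90, 1.00, 1.00, 1.00, 1.00, 1.00]
val_acc = [0.10, 0.15, 0.20, 0.20, 0.35, 0.80, 0.99]

delay = grokking_delay(train_acc, val_acc)
```

With these made-up curves the delay is 4 logged steps. A run stopped as soon as the training set is fit would report poor held-out accuracy and miss the later improvement.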

How far this generalization extends depends on the type of reasoning. Transformers struggle to generalize on composition tasks when confronted with out-of-distribution examples (data that deviate greatly from the training data), but they generalize well on comparison tasks.
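The sketch below shows one possible way to build in-distribution and out-of-distribution test sets for two-hop composition. It is an assumption for illustration and the article does not confirm the study's exact protocol: a held-out group of atomic facts is shown to the model only as individual facts and never inside a composed training example. All names, fractions, and the toy knowledge base are hypothetical.

```python
import random


def two_hop(facts):
    """Compose atomic facts (h, r1, b) and (b, r2, t) into inferred facts (h, r1, r2, t)."""
    by_head = {}
    for head, relation, tail in facts:
        by_head.setdefault(head, []).append((relation, tail))
    return {
        (head, r1, r2, tail)
        for head, r1, bridge in facts
        for r2, tail in by_head.get(bridge, [])
    }


def build_splits(atomic, ood_fraction=0.3, train_fraction=0.8, seed=0):
    rng = random.Random(seed)
    shuffled = sorted(atomic)
    rng.shuffle(shuffled)
    n_ood = int(len(shuffled) * ood_fraction)
    ood_atomic, id_atomic = set(shuffled[:n_ood]), set(shuffled[n_ood:])

    # Inferred facts built from in-distribution atomic facts only.
    id_inferred = sorted(two_hop(id_atomic))
    rng.shuffle(id_inferred)
    n_train = int(len(id_inferred) * train_fraction)

    return {
        # Every atomic fact is seen in training, including the held-out group.
        "train_atomic": id_atomic | ood_atomic,
        "train_inferred": set(id_inferred[:n_train]),
        # ID test: unseen compositions of facts that do appear in other compositions.
        "test_id": set(id_inferred[n_train:]),
        # OOD test: compositions of facts never used in any training composition.
        "test_ood": two_hop(ood_atomic),
    }


# Toy knowledge base: 20 entities, 2 relations, exactly one tail per (head, relation).
atomic = {
    (entity, relation, (7 * entity + offset + 1) % 20)
    for entity in range(20)
    for offset, relation in enumerate(["r0", "r1"])
}
splits = build_splits(atomic)
```

In this setup the out-of-distribution examples are still answerable from facts the model has seen, so failing on them points to a lack of systematic generalization and not to missing knowledge.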

The team carried out an in-depth analysis of the models’ internal workings during training to determine why this occurs. The main findings are as follows.

The Mechanism of Grokking: The team traced how the generalizing circuit, the component of the model that applies learned rules to novel circumstances, emerges and develops over the course of training. The effectiveness of this circuit at generalizing, as opposed to merely memorizing, is essential to the model’s ability to perform implicit reasoning.

Systematicity and Circuit Configuration: The team discovered a close relationship between the generalizing circuit’s configuration and the model’s capacity for systematic generalization. The model’s reasoning ability is largely determined by how atomic knowledge and rules are arranged and accessed within it.

According to the research, implicit reasoning in transformers depends largely on how the training process is set up and how the training data is organized. The findings also suggest that the transformer architecture could be improved by adding mechanisms that promote cross-layer knowledge sharing, which could strengthen the model’s reasoning capabilities.

The study also demonstrates that parametric memory, the model’s capacity to store and apply knowledge within its parameters, works well for complex reasoning tasks. State-of-the-art models such as GPT-4-Turbo and Gemini-1.5-Pro, which rely on non-parametric memory, performed poorly on a particularly difficult reasoning task with a large search space, regardless of how they were prompted or how their retrieval was augmented.

In contrast, a fully grokked transformer using parametric memory reached near-perfect accuracy on the same task. This shows that parametric memory holds considerable promise for enabling sophisticated reasoning in language models.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Research from Ohio State University and CMU Discusses Implicit Reasoning in Transformers And Achieving Generalization Through Grokking appeared first on MarkTechPost.
