Materials science focuses on studying and developing materials with specific properties and applications. Researchers in this field aim to understand the structure, properties, and performance of materials to innovate and improve existing technologies and create new materials for various applications. This discipline combines chemistry, physics, and engineering principles to address challenges and improve materials used in aerospace, automotive, electronics, and healthcare.

One significant challenge in materials science is integrating the vast amounts of visual and textual data in the scientific literature to improve material analysis and design. Traditional methods often fail to combine these data types effectively, which limits the ability to generate comprehensive insights and solutions. The core difficulty lies in extracting relevant information from images and correlating it with the accompanying text, a step that is essential for advancing research and applications in this field.

Existing work includes standalone computer vision techniques for image classification and natural language processing techniques for text analysis. Because these methods handle visual and textual data separately, they are limited in their ability to generate comprehensive insights. Current models such as Idefics-2 and Phi-3-Vision can process both images and text but struggle to integrate them effectively. They often fail to provide nuanced, contextually relevant analyses or to exploit the combined potential of multimodal data, which limits their performance in complex materials science applications.

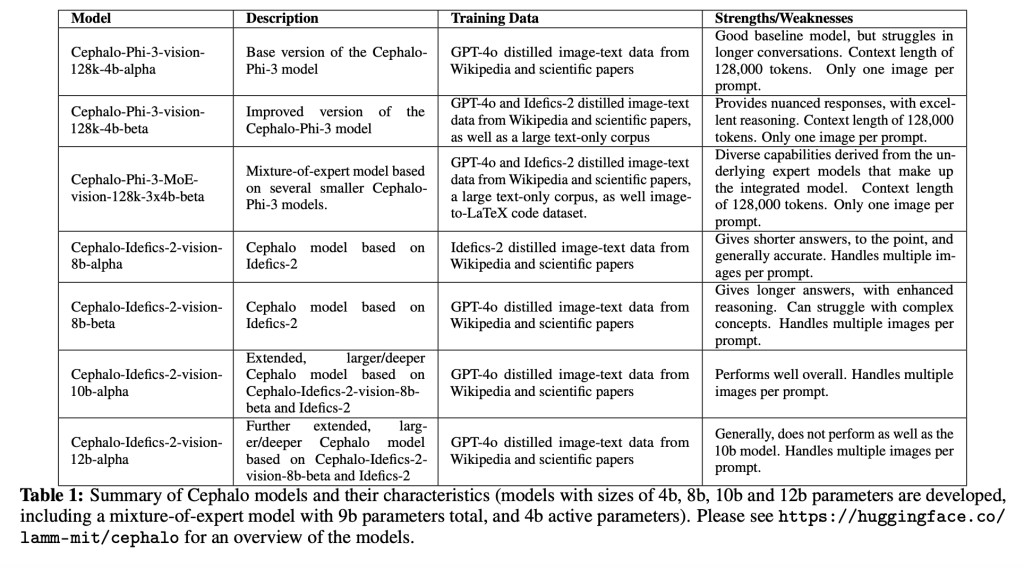

Researchers from the Massachusetts Institute of Technology (MIT) have introduced Cephalo, a series of multimodal vision large language models (V-LLMs) designed specifically for materials science applications. Cephalo aims to bridge the gap between visual perception and language comprehension in the analysis and design of bio-inspired materials. By integrating visual and linguistic data, it supports richer understanding and interaction in both human-AI and multi-agent AI frameworks.

Cephalo uses an algorithm that detects images and their corresponding textual descriptions in scientific documents and separates them out. A vision encoder and an autoregressive transformer then integrate the two modalities, enabling the model to interpret complex visual scenes, generate accurate language descriptions, and answer queries effectively. The model is trained on integrated image and text data drawn from thousands of scientific papers and science-focused Wikipedia pages, which equips it to handle complex data and provide insightful analysis.
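The article does not describe how Cephalo's extraction algorithm works internally. As a rough illustration of the general idea only, the Python sketch below pairs each extracted image with the nearest caption-like text block beneath it on the same page. The `Block` schema, the caption regular expression, and the "nearest caption below" heuristic are all hypothetical assumptions, not the researchers' actual method.

```python
import re
from dataclasses import dataclass


@dataclass
class Block:
    """One layout block extracted from a page (hypothetical schema)."""
    kind: str      # "image" or "text"
    page: int      # page number the block appears on
    y: float       # vertical position of the block's top edge (grows downward)
    content: str   # image file path for images, raw text for text blocks


# Assumed heuristic: a caption is a text block starting with "Fig. N" or "Figure N".
CAPTION_RE = re.compile(r"^(fig\.|figure)\s*\d+", re.IGNORECASE)


def pair_images_with_captions(blocks):
    """Pair each image with the closest unused caption below it on the same page."""
    pairs = []
    used = set()  # indices of captions already assigned to an image
    for img in (b for b in blocks if b.kind == "image"):
        candidates = [
            (i, b) for i, b in enumerate(blocks)
            if b.kind == "text" and b.page == img.page and b.y > img.y
            and i not in used and CAPTION_RE.match(b.content.strip())
        ]
        if not candidates:
            continue  # no caption found; skip the image rather than guess
        i, caption = min(candidates, key=lambda ib: ib[1].y - img.y)
        used.add(i)
        pairs.append((img.content, caption.content.strip()))
    return pairs


blocks = [
    Block("image", 1, 100.0, "fig1.png"),
    Block("text", 1, 300.0, "Figure 1. Fracture surface of a layered composite."),
    Block("text", 1, 350.0, "Body text paragraph."),
    Block("image", 2, 50.0, "fig2.png"),
    Block("text", 2, 260.0, "Fig. 2 Microstructure image."),
]
print(pair_images_with_captions(blocks))
```

Skipping images without a matching caption keeps the resulting image-text pairs clean at the cost of recall; a real pipeline would likely need more robust layout analysis.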

Cephalo can analyze diverse subjects, including biological materials, engineering structures, and protein biophysics. For instance, it can generate precise image-to-text and text-to-image translations, producing high-quality, contextually relevant training data. This capability improves understanding and interaction within human-AI and multi-agent AI frameworks. The researchers tested Cephalo in various use cases, including fracture mechanics, protein structures, and bio-inspired design, demonstrating its versatility and effectiveness.
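To show what image-to-text training data can look like in practice, the sketch below packages (image, description) pairs as chat-style records and serializes them as JSON Lines. The prompt text, the `image`/`messages` field names, and the record layout are hypothetical choices for illustration; the article does not specify the format Cephalo actually uses.

```python
import json

# Hypothetical instruction prompt; real fine-tuning pipelines define their own.
DEFAULT_PROMPT = "Describe this image in detail."


def to_image_to_text_records(pairs, prompt=DEFAULT_PROMPT):
    """Convert (image_path, description) pairs into chat-style image-to-text records."""
    return [
        {
            "image": image_path,
            "messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": description},
            ],
        }
        for image_path, description in pairs
    ]


def to_jsonl(records):
    """Serialize records as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


pairs = [("fig1.png", "Figure 1. Crack front in a layered composite.")]
print(to_jsonl(to_image_to_text_records(pairs)))
```

The text-to-image direction would simply swap the roles, with the description as input and the image as the target.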

The Cephalo models range from 4 billion to 12 billion parameters, accommodating different computational budgets and applications. Across use cases such as biological materials, fracture and engineering analysis, and bio-inspired design, Cephalo interpreted complex visual scenes and generated precise language descriptions, improving the understanding of material phenomena such as failure and fracture. Integrating vision and language in this way enables more accurate and detailed analysis, supporting the development of innovative solutions in materials science.
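To make the 4 to 12 billion parameter range concrete, here is a back-of-the-envelope Python estimate of the memory the weights alone occupy at common numeric precisions. It assumes round 4B and 12B parameter counts and a fixed number of bytes per parameter, and it ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for billions in (4, 12):
    row = ", ".join(
        f"{p}: {weight_memory_gb(billions * 1e9, p):.0f} GB" for p in BYTES_PER_PARAM
    )
    print(f"{billions}B params -> {row}")
```

At bf16, for example, the smallest model's weights need roughly 8 GB and the largest roughly 24 GB, which is why offering several sizes matters for deployment on different hardware.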

Furthermore, the models performed particularly well in specific applications. When analyzing biological materials, for instance, Cephalo generated detailed descriptions of microstructures, which are crucial for understanding material properties and performance. In fracture analysis, the model’s ability to depict crack propagation accurately and suggest ways to improve material toughness was particularly notable. These results highlight Cephalo’s potential to advance materials research and provide practical solutions to real-world challenges.

In conclusion, this research addresses the problem of integrating visual and textual data in materials science and offers a solution in the form of the Cephalo models. Developed at MIT, these models enhance the ability to analyze and design materials by leveraging AI techniques to provide comprehensive and accurate insights. Combining vision and language in a single model represents a significant advance in the field, supporting the development of bio-inspired materials and other materials science applications.

Check out the Paper and Model Card. All credit for this research goes to the researchers of this project.

The post Cephalo: A Series of Open-Source Multimodal Vision Large Language Models (V-LLMs) Specifically in the Context of Bio-Inspired Design appeared first on MarkTechPost.
