Autoregressive image generation models have traditionally relied on vector-quantized representations, which come with significant drawbacks. Vector quantization is computationally intensive and often degrades image reconstruction quality. This reliance limits the models’ flexibility and efficiency and makes it difficult to accurately capture the complex distributions of continuous image data. Overcoming these limitations is key to improving the performance and applicability of autoregressive models for image generation.

Current methods handle continuous image data by converting it into discrete tokens with vector quantization. Techniques such as Vector Quantized Variational Autoencoders (VQ-VAE) encode images into a discrete latent space, which is then modeled autoregressively. This approach has clear limitations. Quantization adds computational cost and introduces reconstruction errors that degrade image quality. In addition, the discrete tokenizers restrict how accurately the model can capture the distribution of image data, which lowers the fidelity of the generated images.
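
To make the source of the reconstruction error concrete, here is a minimal NumPy sketch of the quantization step itself: each continuous latent vector is replaced by its nearest entry in a codebook, and only the integer index is passed on to the autoregressive model. The sizes and the random codebook are hypothetical stand-ins (a real tokenizer such as VQ-VAE learns its codebook during training); the point is only that the snapped vectors differ from the originals, and that difference is lost before any generation happens.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: 256 continuous latent vectors of dimension 8
# (e.g. the output of an image encoder) and a random 64-entry codebook.
latents = rng.normal(size=(256, 8))
codebook = rng.normal(size=(64, 8))


def vector_quantize(z, codebook):
    """Replace each vector in z by its nearest codebook entry (L2 distance)."""
    # Pairwise squared distances, shape (num_vectors, codebook_size).
    d = ((z[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    indices = d.argmin(axis=1)  # discrete token ids seen by the AR model
    return indices, codebook[indices]


indices, quantized = vector_quantize(latents, codebook)
# Quantization error: information discarded before any autoregressive modeling.
mse = float(np.mean((latents - quantized) ** 2))
```

However large the codebook, `mse` is zero only if every latent happens to coincide with a codebook entry, which is why discrete tokenizers cap reconstruction quality.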

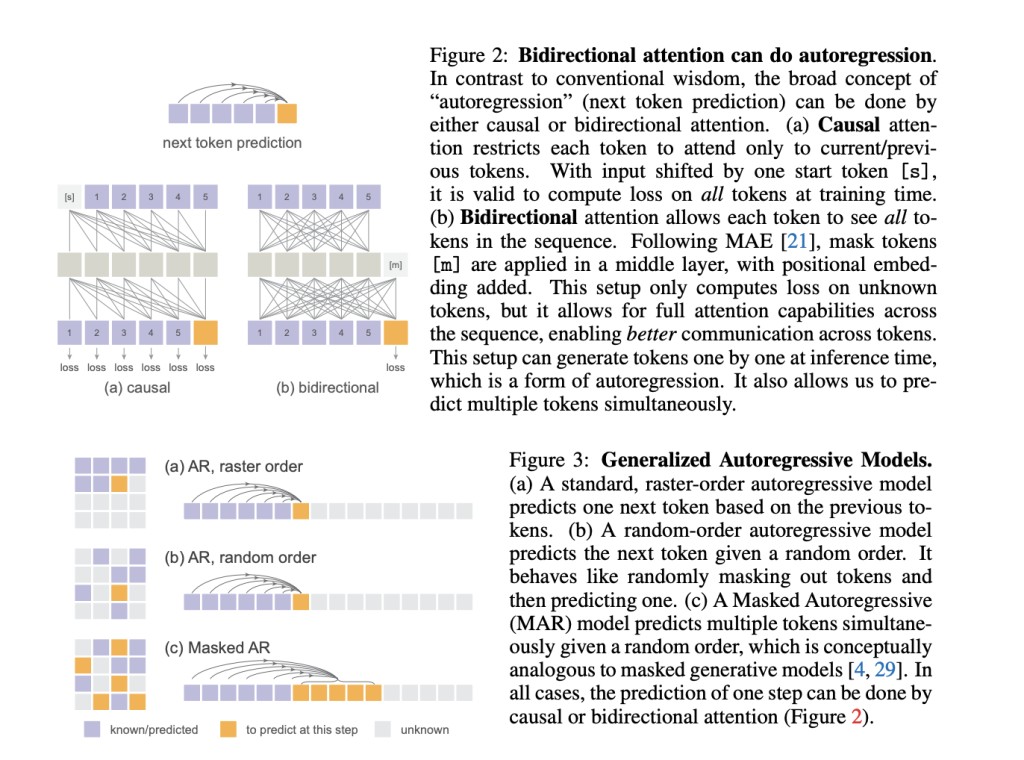

A team of researchers from MIT CSAIL, Google DeepMind, and Tsinghua University has developed a technique that eliminates the need for vector quantization. Instead of converting data into discrete tokens, the method uses a diffusion process to model the probability distribution of each token in a continuous-valued space. A Diffusion Loss function takes the place of the usual categorical cross-entropy, so the model predicts continuous tokens directly and the data is never quantized. The core contribution is applying diffusion models to predict tokens autoregressively in a continuous space, which improves both the quality and the efficiency of autoregressive image generation.

The technique uses a diffusion process to predict a continuous-valued vector for each token. Starting from a noisy version of the target token, the process iteratively refines it with a small denoising network conditioned on a vector that the autoregressive model computes from the previous tokens. This denoising network, a Multi-Layer Perceptron (MLP), is trained jointly with the autoregressive model through backpropagation of the Diffusion Loss, which measures the discrepancy between the predicted noise and the noise actually added to the token. The method is evaluated on ImageNet, where it improves the performance of both standard autoregressive and masked autoregressive model variants.
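
The training objective and the sampling loop described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the sizes, the linear noise schedule, the one-hidden-layer MLP and its crude timestep feature are all hypothetical choices, and the network is left untrained. `diffusion_loss` noises a clean token x at a random step t, asks the MLP to predict the added noise ε from the noisy token x_t, the step t, and a condition vector z (which in the real model comes from the autoregressive backbone), and returns the squared error between ε and the prediction ε_θ(x_t | t, z). `sample_token` runs a standard DDPM-style reverse process that starts from Gaussian noise and denoises step by step.

```python
import numpy as np

# Hypothetical sizes chosen only for illustration.
TOKEN_DIM = 16  # dimension of one continuous token x
COND_DIM = 32   # dimension of the condition vector z from the AR backbone
HIDDEN = 64     # hidden width of the denoising MLP
T = 1000        # number of diffusion steps (assumed)

# Assumed linear beta schedule and its cumulative products.
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def init_mlp(rng):
    """Random (untrained) weights for a one-hidden-layer denoising MLP."""
    in_dim = TOKEN_DIM + COND_DIM + 1  # noisy token + condition z + timestep
    return {
        "w1": rng.normal(0.0, 0.02, (in_dim, HIDDEN)),
        "b1": np.zeros(HIDDEN),
        "w2": rng.normal(0.0, 0.02, (HIDDEN, TOKEN_DIM)),
        "b2": np.zeros(TOKEN_DIM),
    }


def predict_noise(params, x_t, t, z):
    """eps_theta(x_t | t, z): predict the noise contained in x_t."""
    t_feat = (t / T)[:, None]  # crude scalar timestep feature, one per row
    h = np.concatenate([x_t, z, t_feat], axis=1) @ params["w1"] + params["b1"]
    h = np.maximum(h, 0.0)  # ReLU
    return h @ params["w2"] + params["b2"]


def diffusion_loss(params, x0, z, rng):
    """Mean squared error between the added noise and the predicted noise."""
    t = rng.integers(0, T, size=x0.shape[0])          # one random step per token
    eps = rng.normal(size=x0.shape)                   # true noise
    ab = alpha_bars[t][:, None]
    x_t = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps  # forward noising
    eps_pred = predict_noise(params, x_t, t, z)
    return float(np.mean((eps - eps_pred) ** 2))


def sample_token(params, z, rng):
    """DDPM-style reverse process: start from noise, denoise step by step."""
    x = rng.normal(size=(z.shape[0], TOKEN_DIM))
    for t in reversed(range(T)):
        eps_pred = predict_noise(params, x, np.full(z.shape[0], t), z)
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
        if t > 0:  # no noise is added at the final step
            x = x + np.sqrt(betas[t]) * rng.normal(size=x.shape)
    return x


rng = np.random.default_rng(0)
params = init_mlp(rng)
x0 = rng.normal(size=(8, TOKEN_DIM))  # stand-in for real continuous tokens
z = rng.normal(size=(8, COND_DIM))    # stand-in for the backbone's outputs
loss = diffusion_loss(params, x0, z, rng)
tokens = sample_token(params, z, rng)
```

In the full model, the gradient of this loss also flows back through the MLP into z, which is how the denoiser and the autoregressive backbone are trained together; here random arrays stand in for both the tokens and z, so the sampled `tokens` are not meaningful images.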

The results show clear gains in image generation quality on two standard metrics: the Fréchet Inception Distance (FID, lower is better) and the Inception Score (IS, higher is better). Models trained with Diffusion Loss consistently achieve lower FID and higher IS than counterparts trained with the conventional cross-entropy loss. The masked autoregressive (MAR) models with Diffusion Loss reach an FID of 1.55 and an IS of 303.7, a substantial improvement over previous methods. The gains hold across the model variants tested, and the approach is also fast, generating an image in under 0.3 seconds.
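
Because the results hinge on FID, a short sketch of how it is computed may help. FID fits a Gaussian to feature vectors of real images and another to features of generated images, then measures the Fréchet distance ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}) between them. In practice the features are 2048-dimensional activations from an Inception-v3 network; in this NumPy sketch the feature arrays are random stand-ins, and the trace of the matrix square root is obtained from eigenvalues rather than a dedicated `sqrtm` routine. It illustrates the formula only and is not a replacement for a reference FID implementation.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((sigma1 @ sigma2)^(1/2)) is the sum of the square roots of the
    # eigenvalues of sigma1 @ sigma2; for covariance matrices these are real
    # and non-negative, so tiny negative or imaginary parts are numerical noise.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)


def fid_from_features(feats_real, feats_gen):
    """Fit a Gaussian to each feature set (rows = images) and compare them."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_g = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)


rng = np.random.default_rng(0)
real = rng.normal(size=(1000, 8))          # stand-in for real-image features
close = rng.normal(size=(1000, 8))         # same distribution as `real`
far = rng.normal(loc=1.0, size=(1000, 8))  # mean shifted by 1 in every dimension
fid_close = fid_from_features(real, close)
fid_far = fid_from_features(real, far)
```

The shifted set scores far worse than the matching one, which mirrors how a lower FID indicates generated-image features whose statistics sit closer to those of real images.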

In conclusion, this diffusion-based technique removes the dependency on vector quantization in autoregressive image generation. By modeling continuous-valued tokens directly, the researchers improve both the quality and the efficiency of autoregressive models. The same strategy may also apply to other continuous-valued domains beyond images.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Eliminating Vector Quantization: Diffusion-Based Autoregressive AI Models for Image Generation appeared first on MarkTechPost.
