Llama 3 has significantly outperformed GPT-3.5 and even surpassed GPT-4 in several benchmarks, showcasing its strength in efficiency and task-specific performance despite having fewer parameters. However, GPT-4o emerged with advanced multimodal capabilities, reclaiming the top position. Llama 3, utilizing innovations like Grouped-Query Attention, excels in translation and dialogue generation, while GPT-4 demonstrates superior reasoning and problem-solving skills. GPT-4o further enhances these abilities, solidifying its dominance with improved neural architecture and multimodal proficiency.

This study presents Llama3-V, a multimodal model built on Llama3 and trained for under $500. It incorporates visual information by converting each input image into patch embeddings with the SigLIP model. A projection block made of self-attention blocks then aligns these embeddings with the textual tokens, placing visual and textual embeddings in the same embedding space. The visual tokens are prepended to the textual tokens, and Llama3 processes the joint representation, which lets it understand and integrate visual data.
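
The data flow described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the Llama3-V code: a single linear patch embedding stands in for the full SigLIP encoder, the projection block is reduced to two single-head self-attention blocks followed by a linear map (LayerNorm and MLP omitted), and all sizes, random weights, and helper names (`patchify`, `self_attention_block`, `init`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping patches, one flattened row per patch."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be a multiple of the patch size"
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)                 # (patch rows, patch cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)        # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention_block(x, wq, wk, wv):
    """Single-head self-attention plus a residual connection (LayerNorm and MLP omitted)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return x + softmax(scores) @ v


def init(shape):
    """Hypothetical small random initialization for the illustrative weights."""
    return rng.standard_normal(shape) * 0.1


# Hypothetical sizes chosen small for illustration (not Llama3-V's real dimensions)
patch, d_vision, d_text = 8, 32, 64
image = rng.random((32, 32, 3))
text_emb = rng.standard_normal((5, d_text))        # stand-in for 5 Llama3 token embeddings

# 1. Stand-in for SigLIP: flatten patches and apply a linear patch embedding
patches = patchify(image, patch)                    # (16, 192)
patch_emb = patches @ init((patch * patch * 3, d_vision))

# 2. Projection block: two self-attention blocks, then a map to the text width
x = patch_emb
for _ in range(2):
    wq, wk, wv = (init((d_vision, d_vision)) for _ in range(3))
    x = self_attention_block(x, wq, wk, wv)
visual_tokens = x @ init((d_vision, d_text))        # (16, 64)

# 3. Prepend the visual tokens to the text tokens to form the joint input
joint = np.concatenate([visual_tokens, text_emb], axis=0)
print(joint.shape)                                  # (21, 64)
```

The only property that matters for the language model is the last line: after projection, visual tokens have the same width as text embeddings, so the two sequences can be concatenated and fed to Llama3 as one sequence.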

SigLIP is an image embedding model trained with a pairwise sigmoid loss that treats each image-text pair independently, unlike CLIP’s contrastive loss, which applies softmax normalization across the batch. SigLIP’s vision encoder divides an image into non-overlapping patches, projects each patch into an embedding space, and applies self-attention to extract higher-level features. To align SigLIP’s image embeddings with Llama3’s textual embeddings, a projection module with two self-attention blocks is used. The resulting visual tokens are prepended to the textual tokens to form a joint input for Llama3.
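
To make the difference between the two losses concrete, here is a sketch of both objectives over a batch of n image-text pairs. It follows the formulation in the SigLIP paper, in which pair (i, j) gets the logit t·x_i·y_j + b and the label +1 if i = j, otherwise -1. The defaults t = 10 and b = -10 mirror the paper's initialization (both are learned during training), and the function names are illustrative.

```python
import numpy as np


def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def sigmoid_loss(img_emb, txt_emb, t=10.0, b=-10.0):
    """SigLIP-style loss: every (image, text) pair is an independent binary classification."""
    zi, zt = l2_normalize(img_emb), l2_normalize(txt_emb)
    logits = t * zi @ zt.T + b                       # (n, n) score for every pair
    labels = 2 * np.eye(len(zi)) - 1                 # +1 for matching pairs, -1 otherwise
    log_sig = -np.logaddexp(0.0, -labels * logits)   # stable log(sigmoid(labels * logits))
    return -log_sig.sum() / len(zi)


def softmax_contrastive_loss(img_emb, txt_emb, t=10.0):
    """CLIP-style loss: softmax normalizes each row (image->text) and column (text->image)."""
    zi, zt = l2_normalize(img_emb), l2_normalize(txt_emb)
    logits = t * zi @ zt.T
    diag = np.arange(len(zi))
    log_p_i2t = logits - np.logaddexp.reduce(logits, axis=1, keepdims=True)
    log_p_t2i = logits - np.logaddexp.reduce(logits, axis=0, keepdims=True)
    return -(log_p_i2t[diag, diag].mean() + log_p_t2i[diag, diag].mean()) / 2


aligned = np.eye(4)          # four perfectly matched image/text pairs
shuffled = aligned[::-1]     # the same texts in the wrong order
print(sigmoid_loss(aligned, aligned) < sigmoid_loss(aligned, shuffled))   # True
```

Each term of the sigmoid loss depends only on its own pair's logit, so no normalization over the whole batch is needed. The softmax version, by contrast, needs every row and column of the similarity matrix before any single pair's probability is known.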

To optimize computational resources, two major strategies were employed. First, a caching mechanism precomputes the SigLIP image embeddings, which increases GPU utilization and allows larger batch sizes without out-of-memory errors; separating the SigLIP and Llama3 processing stages is what makes this possible. Second, because SigLIP is comparatively small, it can run inference on MacBooks using MPS/MLX optimizations, reaching a throughput of 32 images/second. Together, these optimizations save training and inference time by managing resources efficiently and maximizing GPU usage.
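
Here is a minimal sketch of the caching idea. It assumes an on-disk store of NumPy arrays keyed by a hash of each image ID; `EmbeddingCache` and `fake_encode` are hypothetical names, and `fake_encode` only stands in for a SigLIP forward pass. The vision encoder runs once per image in a precompute stage, and every later epoch reads the stored vectors instead of keeping the encoder in GPU memory alongside Llama3.

```python
import hashlib
import tempfile
from pathlib import Path

import numpy as np


class EmbeddingCache:
    """Hypothetical on-disk cache: encode each image once, reuse the vector every epoch."""

    def __init__(self, cache_dir, encode_fn):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.encode_fn = encode_fn          # stand-in for a SigLIP forward pass
        self.encoder_calls = 0

    def _path(self, image_id):
        key = hashlib.sha1(image_id.encode("utf-8")).hexdigest()
        return self.cache_dir / f"{key}.npy"

    def get(self, image_id, image):
        """Return the cached embedding, computing and saving it on a miss."""
        path = self._path(image_id)
        if path.exists():
            return np.load(path)
        emb = self.encode_fn(image)
        self.encoder_calls += 1
        np.save(path, emb)
        return emb

    def precompute(self, dataset):
        """Stage 1: run the vision encoder over the whole dataset before LM training."""
        for image_id, image in dataset:
            self.get(image_id, image)


def fake_encode(image):
    """Hypothetical encoder stand-in: average the image rows into one vector."""
    return image.mean(axis=0)


dataset = [(f"img{i}", np.full((4, 8), float(i))) for i in range(3)]
with tempfile.TemporaryDirectory() as tmp:
    cache = EmbeddingCache(tmp, fake_encode)
    cache.precompute(dataset)                      # encoder runs once per image
    for epoch in range(2):                         # later epochs only read from disk
        batch = np.stack([cache.get(i, img) for i, img in dataset])
print(cache.encoder_calls, batch.shape)            # 3 (3, 8)
```

As a rough scale check, and assuming the quoted 32 images/second is sustained, embedding the 600,000 pretraining images once would take 600,000 / 32 = 18,750 seconds, or about 5.2 hours. This cost is paid once rather than every epoch.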

Precomputing image embeddings via SigLIP involves loading the SigLIP model, preprocessing images, and obtaining vector representations. High-resolution images are split into patches for efficient encoding. Sigmoid activation is applied to logits to extract embeddings, which are then projected into a joint multimodal space using a learned weight matrix. These projected embeddings, or “latents,” are prepended to text tokens for pretraining Llama3. Pretraining uses 600,000 image-text pairs, updating only the projection matrix. Supervised finetuning enhances performance using 1M examples, focusing on the vision and projection matrices.
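
The pretraining rule of updating only the projection matrix can be sketched as a toy under stated assumptions. The image embeddings are treated as already cached, so the vision encoder never runs. A squared-error target stands in for Llama3's next-token loss, which in the real setup would backpropagate through the frozen language model into the projection. Names such as `params`, `trainable`, and `loss_and_grads` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; cached image embeddings play the role of precomputed SigLIP outputs
n, d_vision, d_text = 64, 32, 16
image_emb = rng.standard_normal((n, d_vision))
target = image_emb @ rng.standard_normal((d_vision, d_text))   # stand-in for the LM training signal

params = {
    "vision_encoder": rng.standard_normal((8, 8)),   # frozen; present only to show it never changes
    "projection": np.zeros((d_vision, d_text)),        # the only trainable weights in this stage
}
trainable = {"projection"}


def loss_and_grads(params):
    """Mean squared error of the projected embeddings, with gradients for trainable weights only."""
    err = image_emb @ params["projection"] - target
    loss = np.mean(err ** 2)
    grads = {"projection": 2 * image_emb.T @ err / err.size}
    return loss, grads


frozen_before = params["vision_encoder"].copy()
lr = 1.0
losses = []
for step in range(200):
    loss, grads = loss_and_grads(params)
    losses.append(loss)
    for name in trainable:                 # frozen tensors are simply skipped
        params[name] -= lr * grads[name]

print(losses[0] > losses[-1], np.array_equal(frozen_before, params["vision_encoder"]))  # True True
```

Keeping the trainable set explicit makes the stage boundary easy to change: a later stage would swap which names appear in `trainable` rather than rewrite the update loop.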

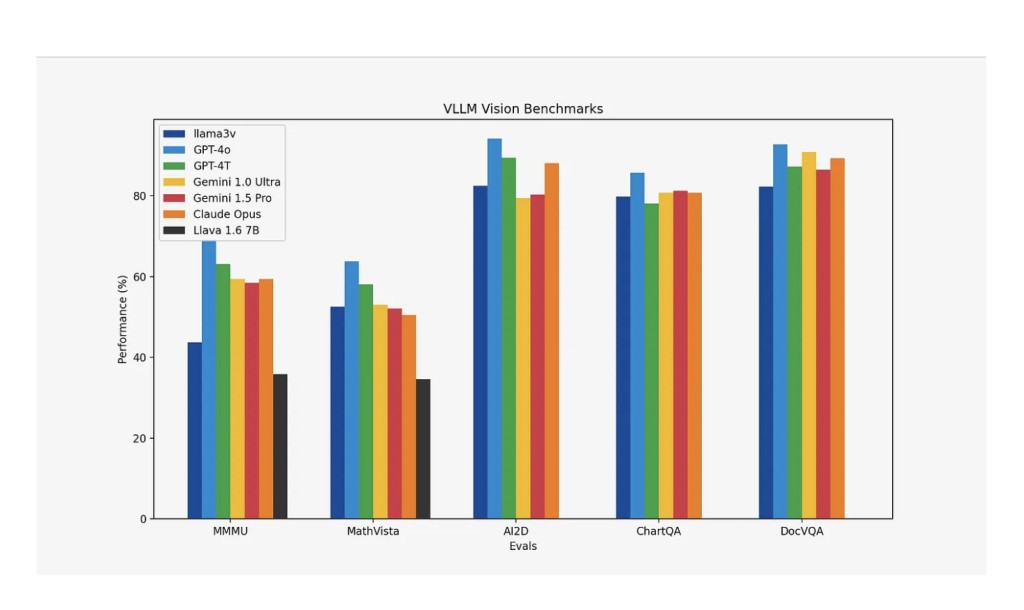

Llama3-V achieves a 10–20% performance boost over Llava, the leading model for multimodal understanding. It also performs comparably to much larger closed-source models across most metrics, except for MMMU, demonstrating its efficiency and competitiveness despite its much smaller size.

To recapitulate, Llama3-V advances multimodal AI by outperforming Llava by 10–20% and rivaling larger closed-source models on most metrics. Integrating SigLIP for efficient image embedding and applying targeted computational optimizations let Llama3-V maximize GPU utilization and keep training costs low, while pretraining followed by supervised finetuning builds its multimodal capabilities. Its cost-effective training makes it a competitive and efficient state-of-the-art model for multimodal understanding.

The post Llama3-V: A SOTA Open-Source VLM Model Comparable performance to GPT4-V, Gemini Ultra, Claude Opus with a 100x Smaller Model appeared first on MarkTechPost.
