Speech recognition technology focuses on converting spoken language into text. It involves acoustic modeling, language modeling, and decoding, with the goal of producing accurate transcriptions. Machine learning algorithms and large datasets have driven significant advances in this field, yielding more accurate and efficient speech recognition systems. These systems are crucial for applications such as virtual assistants, transcription services, and accessibility tools.

A major challenge in speech recognition is correcting the errors that automatic speech recognition (ASR) systems produce. Traditional language models (LMs) integrated with ASR systems are trained on text alone and are often unaware of the specific errors those systems make, which leads to suboptimal performance. Building error correction models that fix these errors accurately without extensive supervised training data remains a critical open problem. The problem is especially pressing given the growing reliance on ASR in everyday technology and communication tools.
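To make the limitation concrete, here is a minimal sketch of the conventional setup, assuming n-best rescoring with a log-linear combination of acoustic and LM scores (a standard technique, not the method from the paper). The toy unigram LM and the names `make_unigram_lm` and `rescore_nbest` are hypothetical illustrations. The point is that the LM only judges how plausible each sentence is as text; it never sees which mistakes the ASR system tends to make.

```python
import math
from typing import Callable, Dict, List, Tuple


def make_unigram_lm(counts: Dict[str, int]) -> Callable[[str], float]:
    """Toy text-only LM: add-one-smoothed unigram log-probability of a sentence."""
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability mass for unseen words

    def logprob(text: str) -> float:
        return sum(
            math.log((counts.get(word, 0) + 1) / (total + vocab_size))
            for word in text.split()
        )

    return logprob


def rescore_nbest(
    nbest: List[Tuple[str, float]],
    lm_logprob: Callable[[str], float],
    lm_weight: float = 0.5,
) -> str:
    """Return the hypothesis maximizing acoustic log-prob + lm_weight * LM log-prob."""
    best_text, _ = max(
        nbest, key=lambda item: item[1] + lm_weight * lm_logprob(item[0])
    )
    return best_text
```

Here `nbest` is a list of `(hypothesis, acoustic_log_prob)` pairs from a hypothetical decoder. The LM can only reorder hypotheses the decoder already proposed, and it scores them without any model of ASR error patterns. An error correction model such as the DLM instead reads the noisy hypothesis itself and is trained to rewrite it.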

Prior work includes integrating LMs with neural acoustic models using sequence discriminative criteria and merging text-only LM features into ASR models. Error correction models take a different route: they post-process ASR outputs, improving transcription accuracy by converting noisy hypotheses into clean text. Transformer-based error correction models have advanced further, helped in particular by WER-based metrics and noise augmentation strategies. Recent work also explores large language models (LLMs) such as ChatGPT, using their powerful linguistic representations to improve transcription accuracy.

Researchers from Apple have introduced the Denoising LM (DLM), a scaled-up error correction model trained on vast amounts of synthetic data generated by text-to-speech (TTS) systems. This approach significantly outperforms previous error correction attempts and achieves state-of-the-art ASR performance. By relying on synthetic data, the DLM addresses the data scarcity that has limited earlier error correction models.

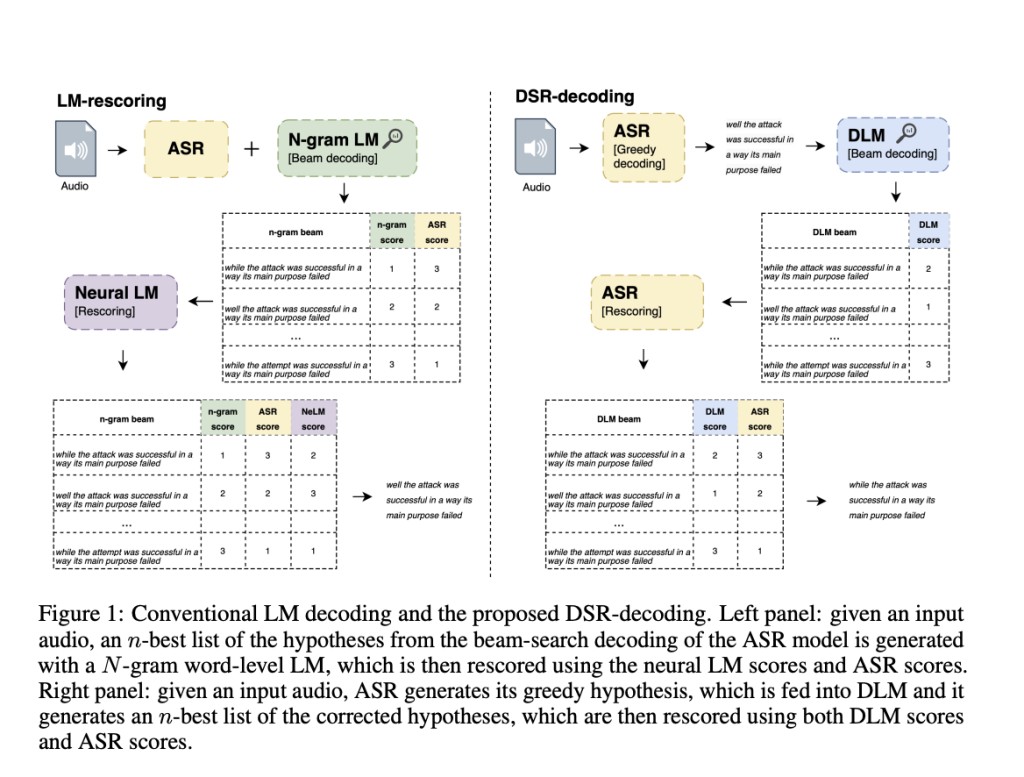

The DLM is trained as follows. Text drawn from a large language model training corpus is synthesized into audio by TTS systems, and that audio is fed into an ASR system to produce noisy hypotheses. Each hypothesis is paired with its original text to form a training example, so the DLM learns to map noisy transcriptions back to clean text. Key elements of the approach include scaled-up models and data, multi-speaker TTS systems, multiple noise augmentation strategies, and novel decoding techniques. Because the synthetic data exposes the model to a wide variety of ASR errors, the approach is highly versatile and scalable.
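The data pipeline described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: `tts` and `asr` are hypothetical callables standing in for real multi-speaker TTS and ASR systems, and the random character substitution in `substitute_chars` is just one illustrative text-level noise augmentation, assumed here rather than taken from the paper's exact recipe.

```python
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical interfaces: a TTS function maps (text, speaker_id) -> audio samples,
# and an ASR function maps audio samples -> a hypothesis string.
TTS = Callable[[str, int], List[float]]
ASR = Callable[[List[float]], str]


def substitute_chars(text: str, prob: float, rng: random.Random) -> str:
    """Illustrative text noise: replace each non-space character with a random
    symbol with probability `prob`; spaces are left intact."""
    alphabet = "abcdefghijklmnopqrstuvwxyz'"
    return "".join(
        rng.choice(alphabet) if ch != " " and rng.random() < prob else ch
        for ch in text
    )


def build_dlm_pairs(
    texts: Sequence[str],
    tts: TTS,
    asr: ASR,
    num_speakers: int,
    char_noise: float = 0.1,
    seed: int = 0,
) -> List[Tuple[str, str]]:
    """Return (noisy_hypothesis, clean_text) pairs for training an error corrector."""
    rng = random.Random(seed)
    pairs: List[Tuple[str, str]] = []
    for text in texts:
        speaker = rng.randrange(num_speakers)  # multi-speaker TTS: pick a voice per sentence
        audio = tts(text, speaker)             # synthesize speech from the clean text
        hypothesis = asr(audio)                # transcribe it to get a noisy hypothesis
        hypothesis = substitute_chars(hypothesis, char_noise, rng)  # extra text-level noise
        pairs.append((hypothesis, text))       # model input = noisy, target = clean
    return pairs
```

Any sequence-to-sequence model could then be trained on these pairs, taking the hypothesis as input and the clean text as the target. Keeping the TTS and ASR systems behind plain callables makes it easy to swap in different voices or ASR architectures, which mirrors the article's point that more varied synthetic errors make the corrector more versatile.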

The DLM achieved a 1.5% word error rate (WER) on the LibriSpeech test-clean set and 3.3% on test-other. These results match or surpass those of conventional LMs and even some self-supervised methods that use external audio data, suggesting that the DLM could replace traditional LMs in ASR systems. The model also maintained high performance when applied to different ASR architectures. This universality is a key advantage, since it means the DLM can be integrated into a wide range of ASR applications.
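For reference, WER counts the substitutions, deletions, and insertions needed to turn the hypothesis into the reference, divided by the number of reference words; a 1.5% WER therefore means roughly 1.5 word errors per 100 reference words. A minimal sketch using word-level Levenshtein distance (the function name `word_error_rate` is our own, and real evaluations usually also normalize case and punctuation first):

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words
    # and the first j hypothesis words (one rolling row of the DP table)
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, `word_error_rate("the cat sat on the mat", "the cat sit on mat")` returns 2/6 (one substitution, one deletion). Because insertions are counted, WER can exceed 100%.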

To conclude, the research shows that the DLM corrects ASR errors effectively by training on synthetic data. The method improves accuracy while scaling across different ASR systems, marking a significant advance in speech recognition and promising more accurate and reliable ASR in the future. The researchers believe the DLM's success calls for rethinking how large text corpora can be leveraged to further improve ASR accuracy. By focusing on error correction rather than language modeling alone, the DLM sets a new standard for future research and development in the field.

The post From Noisy Hypotheses to Clean Text: How Denoising LM (DLM) Improves Speech Recognition Accuracy appeared first on MarkTechPost.