Proof assistants like Lean ensure high accuracy in mathematical proofs, addressing the growing complexity of modern mathematics that often leads to errors. Formal languages like Lean, Isabelle, and Coq create computer-verifiable proofs but require significant effort and expertise. Automated theorem proving is increasingly important, with new methods focusing on search algorithms to explore potential solutions. Despite LLM improvements, these methods need more training data. Advances in autoformalization offer some relief, but the datasets remain too small to leverage LLM capabilities fully.

Researchers from DeepSeek, Sun Yat-sen University, the University of Edinburgh, and MBZUAI have developed a method to generate extensive LFourfour proof data from high-school and undergraduate math competition problems. By translating these problems into formal statements, filtering low-quality ones, and generating proofs, they created an 8 million statement dataset. Fine-tuning the DeepSeekMath 7B model on this data, they achieved 46.3% accuracy in whole-proof generation on the Lean 4 miniF2F test, surpassing GPT-4’s 23.0%. Their model also solved 5 out of 148 FIMO benchmark problems, outperforming GPT-4. This work advances theorem proving by leveraging large-scale synthetic data.

Automated theorem proving (ATP) has been a key AI research area since its inception. It has evolved from efficient first-order provers like E and Vampire to handling complex theorems in modern proof assistants such as Lean, Isabelle, and Coq. Recent advances in deep learning and model-guided search have revitalized ATP, combining neural models with tree search algorithms and reinforcement learning. These methods, though powerful, are resource-intensive. Autoformalization, converting natural language into formal statements, addresses limited training data. Recent efforts synthesize larger formal proof datasets using LLMs to improve neural provers’ performance on complex mathematical problems significantly.

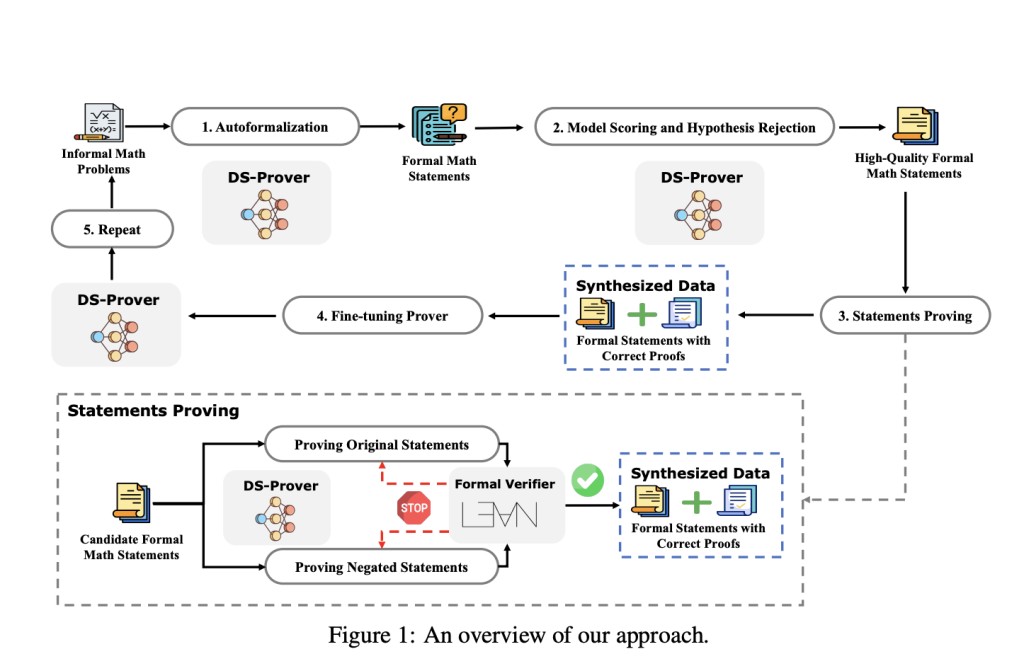

The approach comprises four main stages. Formal mathematical statements are initially generated from a large collection of informal math problems. These auto-formalized statements undergo filtering through model scoring and hypothesis rejection to select high-quality ones. The DeepSeek-Prover model then attempts to prove these statements with correctness verified by the Lean 4 formal verifier, resulting in validated formal statements and proofs. This data is used to fine-tune the DeepSeek-Prover, and the process repeats until improvements become marginal. To enhance proof efficiency, both original statements and their negations are proved concurrently, swiftly discarding invalid statements.Â

DeepSeek-Prover, based on the DeepSeekMath-Base 7B model, was fine-tuned with a global batch size of 512 and a constant learning rate of 1 × 10^−4, including 6,000 warmup steps using synthetic data. Its performance was compared against GPT-3.5, GPT-4, and several advanced methods like GPT-f, Proof Artifact Co-Training, ReProver, Llemma, and COPRA. Evaluations on the miniF2F and FIMO benchmarks revealed that DeepSeek-Prover outperformed others, achieving 60.2% on miniF2F-valid and 52.0% on miniF2F-test, significantly higher than GPT-4’s 25.41% and 22.95%. The FIMO benchmark successfully proved five theorems with varying attempts, surpassing GPT-4, which failed to establish any.

In conclusion, the study devised a method for generating extensive synthetic proof data from high-school and undergraduate-level math competition problems. By translating natural language problems into formal statements, filtering out low-quality data, and using iterative proof generation, 8 million proof data points were created, significantly enhancing the DeepSeekMath 7B model’s performance in ATP. The model surpasses GPT-4 and other benchmark methods like miniF2F and FIMO. The open-sourced dataset and model aim to advance ATP research and improve large language models’ capabilities in formal mathematical reasoning, with plans to broaden the range of addressed mathematical problems in future work.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 42k+ ML SubReddit

The post Revolutionizing Theorem Proving: How Synthetic Proof Data Transforms LLM Capabilities appeared first on MarkTechPost.

Source: Read MoreÂ