Local image feature matching techniques help identify fine-grained visual similarities between two images. Although this area has seen considerable progress, these advances do not account for the generalization capability of image-matching models. Many models that specialize in specific visual domains with abundant training data perform worse on out-of-domain data, in some cases even worse than traditional methods. Because high-quality correspondence annotations are expensive to collect, it is unrealistic to assume that a large amount of data will be available for every image domain. It is therefore important to develop architectural improvements that help learnable matching methods generalize.

Before deep learning became popular, many studies focused on developing generalizable local feature models. For example, SIFT, SURF, and ORB are widely used for image matching across various image domains. Among learnable approaches, sparse learnable matching methods such as SuperGlue use SuperPoint to detect keypoints and apply an attention mechanism to propagate keypoint features within and across images (intra- and inter-image propagation). Dense image matching methods, in contrast, learn both the image descriptors and the matching module and match the entire input images pixel by pixel.
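To make the intra- and inter-image propagation idea concrete, here is a minimal NumPy sketch of attention-based message passing between the keypoint descriptors of two images. It is a deliberate simplification, not SuperGlue's implementation: `attention` and `propagate` are hypothetical names, and the real model adds learned projections, multi-head attention, MLPs, keypoint position encodings, and a final optimal-transport matching step, none of which appear here.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(queries, keys, values):
    # Single-head scaled dot-product attention: each query row receives a
    # weighted average of the value rows.
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)
    return weights @ values


def propagate(desc_a, desc_b, num_layers=2):
    """Alternate intra-image (self) and inter-image (cross) message passing.

    Hypothetical simplification: no learned weights, just residual updates.
    """
    for _ in range(num_layers):
        # Intra-image: each keypoint gathers context from its own image.
        desc_a = desc_a + attention(desc_a, desc_a, desc_a)
        desc_b = desc_b + attention(desc_b, desc_b, desc_b)
        # Inter-image: each keypoint gathers context from the other image.
        # Both updates use the descriptors from before this cross step.
        desc_a, desc_b = (desc_a + attention(desc_a, desc_b, desc_b),
                          desc_b + attention(desc_b, desc_a, desc_a))
    return desc_a, desc_b


rng = np.random.default_rng(0)
desc_a = rng.normal(size=(5, 32))  # 5 keypoints in image A, 32-D descriptors
desc_b = rng.normal(size=(7, 32))  # 7 keypoints in image B
out_a, out_b = propagate(desc_a, desc_b)
print(out_a.shape, out_b.shape)  # (5, 32) (7, 32)
```

Each keypoint's descriptor ends up mixing information from its own image and from the other image, which is what lets a sparse matcher disambiguate keypoints that look similar in isolation.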

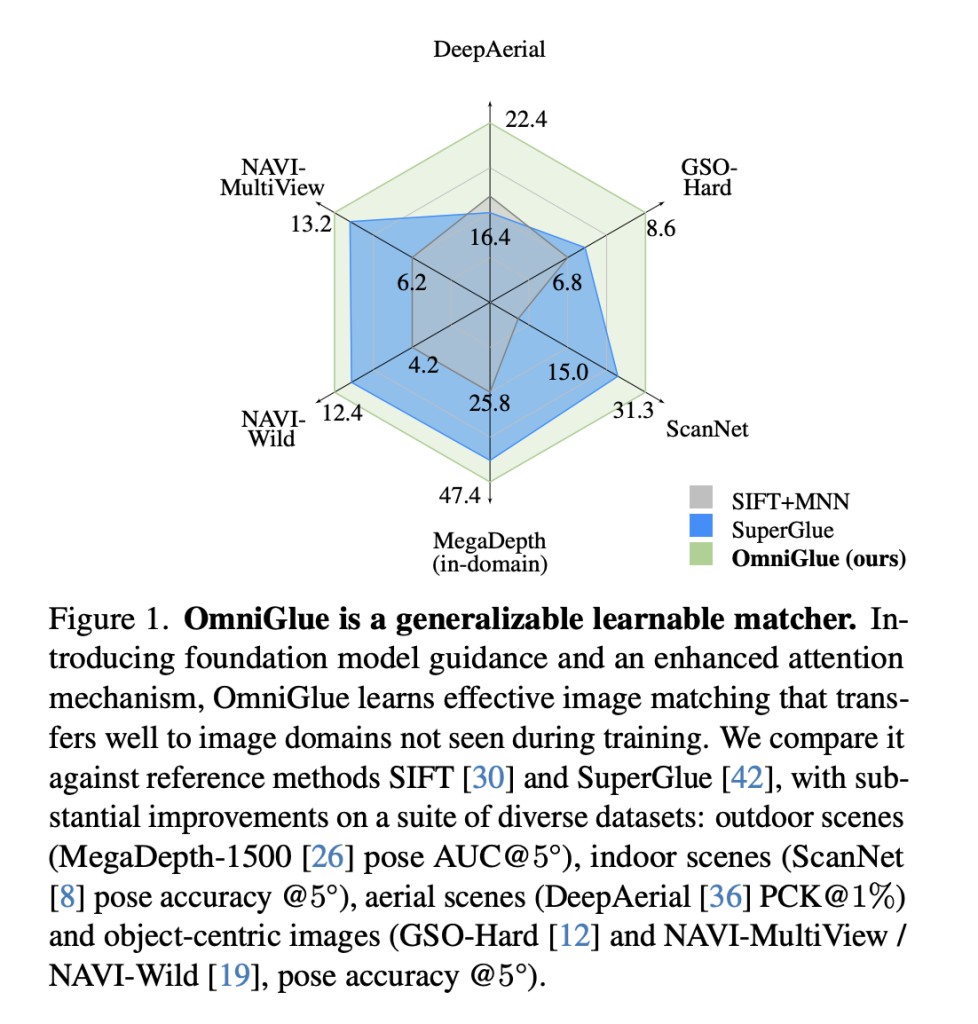

Researchers from the University of Texas at Austin and Google Research proposed OmniGlue, the first learnable image matcher designed with generalization as a core principle. To improve the generalization of the matching layers, the researchers introduced two techniques: foundation model guidance and keypoint-position attention guidance. Together, these techniques improve generalization to out-of-distribution domains while leaving performance on the source domain unaffected. For foundation model guidance, OmniGlue uses the DINO foundation model to guide the inter-image feature propagation process, because DINO performs well across diverse images.
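The two guidance ideas can be illustrated with a short NumPy sketch. This is a simplified reading rather than OmniGlue's implementation, and every name below (`guidance_mask`, `masked_cross_attention`, `position_guided_self_attention`, the `top_k` parameter, the linear positional projection) is hypothetical. The sketch assumes that foundation model guidance lets each keypoint attend only to the keypoints in the other image whose DINO features are most similar, and that position guidance means keypoint positions influence the attention weights (queries and keys) without being mixed into the propagated descriptors (values). Random arrays stand in for real descriptors and DINO features.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax; entries equal to -inf get zero weight.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def guidance_mask(fm_a, fm_b, top_k):
    """Boolean mask [N_a, N_b]: True where keypoint i in A may attend to j in B.

    Assumption: keep, for each keypoint in A, the top_k keypoints in B with
    the highest cosine similarity between foundation-model (e.g. DINO) features.
    """
    sim = l2_normalize(fm_a) @ l2_normalize(fm_b).T
    top = np.argsort(-sim, axis=1)[:, :top_k]
    mask = np.zeros(sim.shape, dtype=bool)
    np.put_along_axis(mask, top, True, axis=1)
    return mask


def masked_cross_attention(desc_a, desc_b, mask):
    # Inter-image attention restricted to the pairs allowed by the mask.
    logits = desc_a @ desc_b.T / np.sqrt(desc_a.shape[-1])
    logits = np.where(mask, logits, -np.inf)
    return softmax(logits, axis=-1) @ desc_b


def position_guided_self_attention(desc, pos_enc):
    # Hypothetical: positions are added to queries and keys only, so they
    # shape the attention weights but are not copied into the outputs.
    q = k = desc + pos_enc
    weights = softmax(q @ k.T / np.sqrt(desc.shape[-1]), axis=-1)
    return desc + weights @ desc


rng = np.random.default_rng(0)
desc_a, desc_b = rng.normal(size=(6, 16)), rng.normal(size=(8, 16))
fm_a, fm_b = rng.normal(size=(6, 64)), rng.normal(size=(8, 64))  # stand-ins for DINO features
xy_a = rng.uniform(0, 640, size=(6, 2))  # keypoint pixel coordinates in image A
proj = rng.normal(size=(2, 16)) / 640.0  # hypothetical positional projection

mask = guidance_mask(fm_a, fm_b, top_k=3)
desc_a = position_guided_self_attention(desc_a, xy_a @ proj)
msg_a = masked_cross_attention(desc_a, desc_b, mask)
print(mask.sum(axis=1))  # [3 3 3 3 3 3]
```

Under this reading, pruning inter-image pairs with a domain-general foundation model limits which keypoints exchange information, and keeping positions out of the values is one plausible way to reduce reliance on learned, image-position-specific patterns.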

In their experiments, the researchers compared OmniGlue against three groups of baselines: (a) SIFT and SuperPoint, which provide domain-agnostic local visual descriptors for keypoints and produce matches using either nearest neighbor plus ratio test (NN/ratio) or mutual nearest neighbor (MNN) matching; (b) sparse matchers such as SuperGlue, which uses attention layers to propagate intra- and inter-image keypoint information over descriptors obtained from SuperPoint and is the most direct reference for OmniGlue, along with LightGlue, which improves on SuperGlue's performance and speed; and (c) semi-dense matchers such as LoFTR and PDCNet, which are included to contextualize the performance of sparse matching.
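For the descriptor baselines in (a), the two matching rules can be sketched as follows. This minimal NumPy version assumes Euclidean descriptor distances and a ratio threshold of 0.8 (a common choice, not necessarily the one used in the paper); `nn_ratio_matches` and `mutual_nn_matches` are hypothetical helper names, and the toy 2-D descriptors are made up for illustration.

```python
import numpy as np


def pairwise_distances(desc_a, desc_b):
    # Euclidean distance matrix of shape [N_a, N_b].
    diff = desc_a[:, None, :] - desc_b[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def nn_ratio_matches(desc_a, desc_b, ratio=0.8):
    """Nearest neighbor + ratio test: keep (i, j) only when the best distance
    is clearly smaller than the second-best one (requires N_b >= 2)."""
    dist = pairwise_distances(desc_a, desc_b)
    order = np.argsort(dist, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc_a))
    keep = dist[rows, best] < ratio * dist[rows, second]
    return [(int(i), int(best[i])) for i in rows[keep]]


def mutual_nn_matches(desc_a, desc_b):
    """Mutual nearest neighbors: keep (i, j) only if j is i's nearest neighbor
    in B and i is j's nearest neighbor in A."""
    dist = pairwise_distances(desc_a, desc_b)
    nn_ab = dist.argmin(axis=1)
    nn_ba = dist.argmin(axis=0)
    return [(i, int(j)) for i, j in enumerate(nn_ab) if nn_ba[j] == i]


desc_a = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
desc_b = np.array([[0.1, 0.0], [5.0, 5.2], [9.77, 0.0], [10.2, 0.0]])
print(nn_ratio_matches(desc_a, desc_b))   # [(0, 0), (1, 1)]
print(mutual_nn_matches(desc_a, desc_b))  # [(0, 0), (1, 1), (2, 3)]
```

The third keypoint in image A has two close candidates in image B, so the ratio test discards it as ambiguous, while MNN still accepts it because the two points are each other's nearest neighbors.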

The results show that OmniGlue outperforms its base method, SuperGlue, on in-domain data while also generalizing better. SuperGlue depends heavily on learned patterns related to image positions and cannot handle image-warping distortions: even a minor distribution shift from SH100 to SH200 reduces its precision and recall by 20%. OmniGlue, in contrast, shows strong generalization, outperforming SuperGlue by 12% in precision and 14% in recall. Moreover, OmniGlue achieves a 12.3% relative gain over SuperGlue on MegaDepth-500 and a 15% improvement in recall when transferring from SH200 to MegaDepth.
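The precision, recall, and relative-gain figures above can be read using the generic definitions sketched below. The paper's exact evaluation protocol (for example, how a predicted match is judged correct from its geometric error) is not reproduced here; the helper names and the toy values are hypothetical and are not results from the paper.

```python
def match_precision_recall(predicted, ground_truth):
    """Precision: fraction of predicted matches that are correct.
    Recall: fraction of ground-truth matches that were recovered."""
    predicted, ground_truth = set(predicted), set(ground_truth)
    correct = len(predicted & ground_truth)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(ground_truth) if ground_truth else 0.0
    return precision, recall


def relative_gain(new, baseline):
    # (new - baseline) / baseline; e.g. 0.123 corresponds to a 12.3% relative gain.
    return (new - baseline) / baseline


# Toy matches as (keypoint index in A, keypoint index in B) pairs.
pred = [(0, 0), (1, 1), (2, 5), (3, 3)]
gt = [(0, 0), (1, 1), (3, 3), (4, 4), (5, 2)]
p, r = match_precision_recall(pred, gt)
print(p, r)  # 0.75 0.6
print(round(relative_gain(0.45, 0.40), 3))  # 0.125
```

A relative gain is measured against the baseline's own score, so the same absolute improvement counts for more when the baseline is weaker.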

In conclusion, researchers from the University of Texas at Austin and Google Research introduced OmniGlue, the first learnable image matcher designed with generalization as a core principle. OmniGlue shows strong generalization capabilities and outperforms its base method, SuperGlue. Moreover, it can be easily adapted to a target domain by fine-tuning on a small amount of data collected from that domain. Future work includes exploring how to use unannotated data in target domains to further improve generalization. Better architectural designs and data could also help in developing a foundational matching model.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post OmniGlue: The First Learnable Image Matcher Designed with Generalization as a Core Principle appeared first on MarkTechPost.
