Digital pathology converts traditional glass slides into digital images for viewing, analysis, and storage. Advances in imaging technology and software drive this transformation, which has significant implications for medical diagnostics, research, and education. The current generative AI revolution, together with the parallel digital transformation of biomedicine, offers a chance to accelerate progress in precision health by an order of magnitude. Within multimodal generative AI, digital pathology can be combined with other multimodal, longitudinal patient data to generate evidence at the population level.

A typical gigapixel slide may be thousands of times wider and longer than an ordinary natural image, so digital pathology poses distinct computational challenges. Conventional vision transformers cannot handle inputs of this size, because the cost of self-attention grows quadratically with input length. As a result, earlier digital pathology work often ignores the complex interdependencies between image tiles on a slide, losing slide-level context that is crucial for applications such as tumor microenvironment modeling.
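
To make the scaling problem concrete, the following minimal sketch counts the tiles on a slide and the query-key pairs that one dense self-attention layer would have to score. The slide dimensions (100,000 × 100,000 pixels) and the 16-pixel patch size are illustrative assumptions, not figures from the GigaPath work.

```python
import math


def tile_count(width_px: int, height_px: int, tile_px: int = 256) -> int:
    """Number of tile_px x tile_px tiles needed to cover a slide (edges padded)."""
    return math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)


def dense_attention_pairs(num_tokens: int) -> int:
    """Query-key pairs scored by one full self-attention layer."""
    return num_tokens * num_tokens


# Hypothetical slide size, chosen only for illustration.
slide_tiles = tile_count(100_000, 100_000)

# Tokens inside a single 256 x 256 tile, assuming 16 x 16 pixel patches
# (a common ViT setting, not necessarily the one GigaPath uses).
tile_tokens = (256 // 16) ** 2

print("tiles per slide:", slide_tiles)
print("attention pairs within one tile:", dense_attention_pairs(tile_tokens))
print("attention pairs across all tiles:", dense_attention_pairs(slide_tiles))
```

Under these assumptions, dense attention over the tile sequence of one slide scores several hundred thousand times more pairs than attention inside a single tile, which is why slide-level context is usually dropped.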

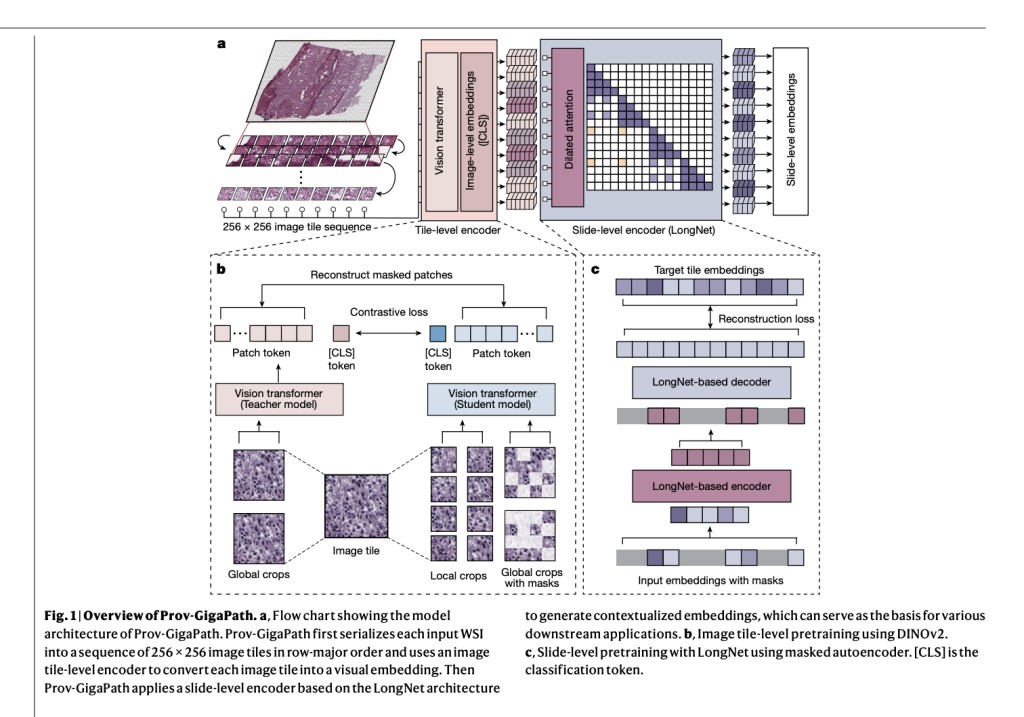

GigaPath is a vision transformer from Microsoft that makes whole-slide modeling feasible by using dilated self-attention to keep computation manageable. In collaboration with Providence Health System and the University of Washington, Microsoft developed Prov-GigaPath, an open-access whole-slide pathology foundation model. It was pretrained on one billion 256 × 256 pathology image tiles from more than 170,000 whole slides, using real-world data from Providence. All computation was carried out within Providence’s private tenant, with approval from the Providence Institutional Review Board (IRB).

GigaPath uses two-stage curriculum learning: pretraining at the tile level with DINOv2, followed by pretraining at the slide level with a masked autoencoder and LongNet. DINOv2 is a self-supervised method that combines a masked reconstruction loss with a contrastive loss to train student and teacher vision transformers. Because self-attention is computationally expensive, however, it can only be applied to small images such as 256 × 256 tiles. For slide-level modeling, the researchers adapted LongNet’s dilated attention to digital pathology. To handle the long sequence of image tiles for an entire slide, they introduce a series of increasing segment sizes and split the tile sequence into segments of each size. For longer segments they apply sparser attention, with sparsity proportional to segment length, which offsets the quadratic growth in cost. The largest segment covers the whole slide, but with sparsely subsampled self-attention. This keeps computation tractable while still systematically capturing long-range dependencies.
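
The segment-and-subsample idea can be sketched in a few lines of Python. This is a simplified illustration of dilated attention, not the LongNet or GigaPath implementation: it only enumerates which tile positions attend to each other and counts the resulting query-key pairs. The sequence length and the `schedule` of (segment length, dilation) pairs are hypothetical, and details such as per-head offsets are omitted.

```python
def dilated_attention_groups(seq_len, segment_len, dilation):
    """Split positions 0..seq_len-1 into segments of segment_len and keep
    every dilation-th position in each segment. Positions in the same
    returned group attend densely to one another."""
    groups = []
    for start in range(0, seq_len, segment_len):
        end = min(start + segment_len, seq_len)
        groups.append(list(range(start, end, dilation)))
    return groups


def attention_pairs(groups):
    """Total query-key pairs when each group uses dense attention."""
    return sum(len(group) ** 2 for group in groups)


seq_len = 4096  # hypothetical number of tiles on one slide

# Hypothetical schedule: segment length and dilation grow together, so each
# segment always keeps 64 positions no matter how long it is.
schedule = [(64, 1), (256, 4), (1024, 16), (4096, 64)]

dilated_cost = sum(
    attention_pairs(dilated_attention_groups(seq_len, segment_len, dilation))
    for segment_len, dilation in schedule
)
dense_cost = seq_len ** 2

print("dense attention pairs:  ", dense_cost)
print("dilated attention pairs:", dilated_cost)
```

In this toy schedule the last entry is a single segment spanning the whole sequence with every 64th tile kept, which mirrors the description of the largest segment covering the whole slide with subsampled attention. The short segments preserve local detail, and the total number of scored pairs stays far below the dense count.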

Drawing on both Providence and TCGA data, the team built a digital pathology benchmark with nine cancer subtyping tasks and seventeen pathomics tasks. With large-scale pretraining and whole-slide modeling, Prov-GigaPath outperformed the second-best model by a significant margin on 18 of the 26 tasks. These results point to the model’s versatility across a wide range of digital pathology applications.

The objective of cancer subtyping is to classify specific cancer subtypes from pathology slides. Pathomics tasks aim to predict, from the slide image alone, whether a tumor carries particular therapeutically important genetic alterations. This could reveal significant associations between genetic pathways and tissue morphology that are too subtle for the human eye to detect.

In addition, in some experiments the team looks for universal signals of a gene mutation across all cancer types and very different tumor morphologies, a setting called the pan-cancer scenario. Under these difficult conditions, Prov-GigaPath achieved state-of-the-art performance on 17 of 18 tasks, significantly surpassing the second-best model on 12 of the 18. For instance, in the pan-cancer 5-gene study, Prov-GigaPath achieved a 6.5% improvement in AUROC and an 18.7% improvement in AUPRC compared to the top competing approaches.

To further evaluate the generalizability of Prov-GigaPath, the researchers ran another head-to-head comparison on TCGA data, where it again outperformed all other approaches. These results provide evidence that the learned embeddings are biologically meaningful and that Prov-GigaPath can extract genetically linked pan-cancer and subtype-specific morphological features at the whole-slide level. This paves the way for using real-world data in future research on the intricate biology of the tumor microenvironment.

Incorporating pathology reports further demonstrates GigaPath’s capability on vision-language tasks. The researchers use report semantics to align the pathology slide representation, continuing pretraining on slide-report pairs. The resulting model can then be applied to downstream prediction tasks such as zero-shot subtyping, which require no supervised fine-tuning. Contrastive learning is performed on the slide-report pairs, with Prov-GigaPath as the whole-slide image encoder and PubMedBERT as the text encoder. This is considerably harder than conventional vision-language pretraining, because no fine-grained alignment information is available between individual image tiles and text snippets. On benchmark vision-language tasks, including zero-shot cancer subtyping and gene mutation prediction, Prov-GigaPath significantly outperforms three leading pathology models, highlighting its promise for whole-slide vision-language modeling.
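
As a rough illustration of contrastive alignment and zero-shot prediction, the sketch below implements a symmetric, CLIP-style contrastive loss over paired embeddings and a nearest-prompt classifier. It is an assumption that the loss takes this exact form; the article only states that contrastive learning is applied to slide-report pairs. The toy vectors stand in for the outputs of the slide and text encoders, and the temperature of 0.07 and the label names are placeholders.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_entropy(logits, target):
    """Negative log-softmax of the target entry, computed stably."""
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(l - top) for l in logits))
    return log_sum - logits[target]


def contrastive_loss(slide_embs, report_embs, temperature=0.07):
    """Symmetric contrastive loss: slide i should match report i and vice versa."""
    slides = [normalize(s) for s in slide_embs]
    reports = [normalize(r) for r in report_embs]
    n = len(slides)
    sim = [[dot(s, r) / temperature for r in reports] for s in slides]
    slide_to_report = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    report_to_slide = sum(
        cross_entropy([sim[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (slide_to_report + report_to_slide) / 2


def zero_shot_label(slide_emb, prompt_embs):
    """Pick the label whose text-prompt embedding is closest to the slide."""
    slide = normalize(slide_emb)
    scores = {label: dot(slide, normalize(e)) for label, e in prompt_embs.items()}
    return max(scores, key=scores.get)


# Toy 2-D embeddings standing in for encoder outputs (hypothetical values).
slide_embs = [[1.0, 0.1], [0.1, 1.0]]
report_embs = [[0.9, 0.2], [0.2, 0.9]]

print("loss, matched pairs: ", contrastive_loss(slide_embs, report_embs))
print("loss, shuffled pairs:", contrastive_loss(slide_embs, report_embs[::-1]))

prompts = {"subtype_a": [1.0, 0.0], "subtype_b": [0.0, 1.0]}
print("zero-shot label:", zero_shot_label([0.8, 0.3], prompts))
```

The loss is low when each slide embedding is closest to its own report and high when the pairs are shuffled. Once the two encoders share an embedding space, a slide can be classified without fine-tuning by comparing it against text prompts that describe each candidate subtype.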

According to the researchers, this is the first digital pathology foundation model to undergo large-scale pretraining on real-world data. Prov-GigaPath achieves state-of-the-art performance on standard cancer classification, pathomics, and vision-language tasks. This opens new avenues for improving patient care and accelerating clinical discovery, and it demonstrates the importance of whole-slide modeling on large-scale real-world data. The researchers note that extensive work remains before the promise of a multimodal conversational assistant can be fully realized, particularly in integrating state-of-the-art multimodal frameworks like LLaVA-Med.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

The post Microsoft Research Introduces Gigapath: A Novel Vision Transformer For Digital Pathology appeared first on MarkTechPost.
