Machine learning interpretability is a critical area of research for understanding how complex models reach their decisions. These models are often seen as “black boxes,” making it difficult to discern how specific features influence their predictions. Techniques such as feature attribution and interaction indices have been developed to shed light on these contributions, thereby enhancing the transparency and trustworthiness of AI systems. Interpreting these models accurately is essential for debugging and improving them and for ensuring they operate fairly and without unintended biases.

A significant challenge in this field is allocating credit fairly among a model’s features. Traditional methods like the Shapley value provide a robust framework for feature attribution but fall short when it comes to capturing higher-order interactions among features. Higher-order interactions refer to the joint effect of multiple features on a model’s output, which is crucial for a comprehensive understanding of complex systems. Without accounting for these interactions, interpretability methods can miss important synergies or redundancies between features, leading to incomplete or misleading explanations.
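To make this limitation concrete, here is a minimal Python sketch (our own toy illustration, not code from the paper) that computes exact Shapley values by averaging each player’s marginal contribution over all orderings. The two hypothetical three-player games `synergy` and `additive` behave very differently: one pays out only when features 0 and 1 are present together, the other rewards each of them independently. Yet both receive identical Shapley values.

```python
import itertools
from math import factorial

def shapley_values(v, n):
    """Exact Shapley values: average marginal contribution over all n! orderings."""
    phi = [0.0] * n
    for order in itertools.permutations(range(n)):
        coalition = frozenset()
        for i in order:
            # Marginal contribution of player i given the players already present
            phi[i] += v(coalition | {i}) - v(coalition)
            coalition = coalition | {i}
    return [p / factorial(n) for p in phi]

# Hypothetical game 1: payoff 1 only when players 0 and 1 are both present (pure synergy)
def synergy(S):
    return 1.0 if {0, 1} <= S else 0.0

# Hypothetical game 2: players 0 and 1 each contribute 0.5 on their own (purely additive)
def additive(S):
    return 0.5 * (0 in S) + 0.5 * (1 in S)

print(shapley_values(synergy, 3))   # [0.5, 0.5, 0.0]
print(shapley_values(additive, 3))  # [0.5, 0.5, 0.0]
```

Because per-feature attributions cannot tell these two cases apart, an interaction index is needed to expose the synergy. Enumerating all n! orderings is only feasible for very small n, which is why practical tools approximate this average.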

Current tools such as SHAP (SHapley Additive exPlanations) use the Shapley value to quantify the contribution of individual features. These tools have made significant strides in improving model interpretability. However, they primarily produce first-order (single-feature) attributions and often fail to capture the nuanced interplay between multiple features. While estimators like KernelSHAP have improved computational efficiency and applicability, they still do not fully address the complexity of higher-order interactions in machine learning models. These limitations call for more advanced methods capable of capturing such interactions.
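KernelSHAP recasts Shapley value estimation as a weighted least squares problem: a linear model over binary coalition indicators is fitted to coalition payoffs, weighted by the Shapley kernel, subject to the constraints that the intercept equals v(∅) and the coefficients sum to v(N) − v(∅). The Python sketch below is a minimal version under simplifying assumptions: it enumerates every coalition (so it only suits a handful of features) and enforces the sum constraint by eliminating the last coefficient. The function name `kernel_shap_exact` and the toy game are hypothetical.

```python
import itertools
from math import comb
import numpy as np

def shapley_kernel(n, s):
    """Shapley kernel weight for a coalition of size s (0 < s < n)."""
    return (n - 1) / (comb(n, s) * s * (n - s))

def kernel_shap_exact(v, n):
    """Shapley values via KernelSHAP-style WLS over all proper, non-empty coalitions (n >= 2)."""
    empty, full = v(frozenset()), v(frozenset(range(n)))
    total = full - empty
    rows, targets, weights = [], [], []
    for s in range(1, n):
        for S in itertools.combinations(range(n), s):
            z = np.zeros(n)
            z[list(S)] = 1.0
            # Substitute phi_last = total - sum(other phis) to enforce efficiency exactly
            rows.append(z[:-1] - z[-1])
            targets.append(v(frozenset(S)) - empty - z[-1] * total)
            weights.append(shapley_kernel(n, s))
    X, y, w = np.array(rows), np.array(targets), np.array(weights)
    sw = np.sqrt(w)  # weighted least squares = ordinary least squares on sqrt-weighted rows
    phi_rest, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return np.append(phi_rest, total - phi_rest.sum())

# Hypothetical toy game: additive weights 1, 2, 3 plus a bonus of 2 when features 0 and 1 co-occur
base = [1.0, 2.0, 3.0]
def game(S):
    return sum(base[i] for i in S) + (2.0 if {0, 1} <= S else 0.0)

print(kernel_shap_exact(game, 3))  # ≈ [2, 3, 3]: the bonus is split evenly between features 0 and 1
```

With every coalition enumerated, this weighted fit reproduces the exact Shapley values; in practice KernelSHAP samples coalitions instead of enumerating them, which is where its efficiency comes from.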

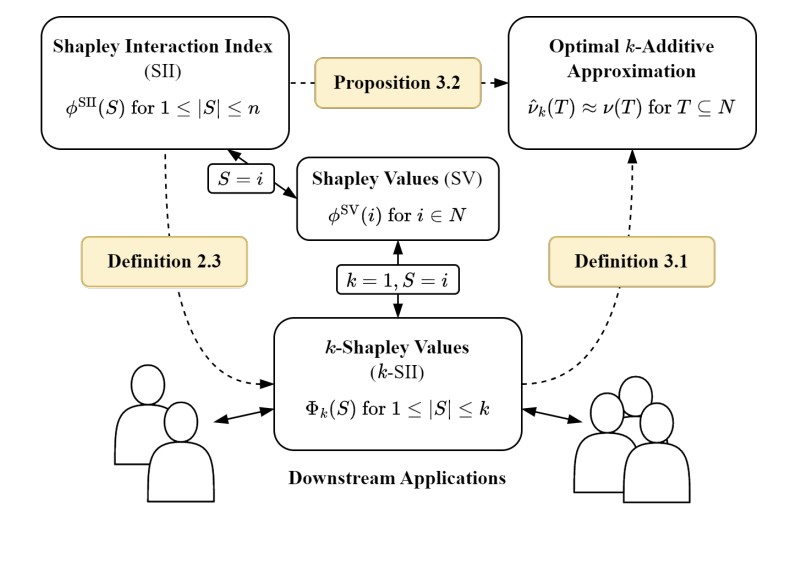

Researchers from Bielefeld University, LMU Munich, and Paderborn University have introduced a novel method called KernelSHAP-IQ to address these challenges. The method extends KernelSHAP to higher-order Shapley Interaction Indices (SII). KernelSHAP-IQ uses a weighted least squares (WLS) optimization approach to accurately capture and quantify interactions beyond the first order, providing a more detailed and precise framework for model interpretability. This advancement is significant because it accounts for complex feature interactions that are often present in sophisticated models but are missed by traditional methods.
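The Shapley Interaction Index generalizes the Shapley value from single features to sets of features. For a pair {i, j} among n players, it averages the discrete second difference Δ(T) = v(T ∪ {i, j}) − v(T ∪ {i}) − v(T ∪ {j}) + v(T) over all coalitions T of the remaining players, with weight (n − |T| − 2)! |T|! / (n − 1)!. The minimal Python sketch below computes this quantity by brute force on two hypothetical toy games; it is the exact target that estimators like KernelSHAP-IQ approximate, not the paper’s estimator itself.

```python
import itertools
from math import factorial

def pairwise_sii(v, n, i, j):
    """Exact Shapley Interaction Index of the pair {i, j} by brute-force enumeration."""
    others = [k for k in range(n) if k not in (i, j)]
    total = 0.0
    for t in range(len(others) + 1):
        # Weight of a coalition of size t drawn from the other n - 2 players
        weight = factorial(n - t - 2) * factorial(t) / factorial(n - 1)
        for T in map(frozenset, itertools.combinations(others, t)):
            # Discrete second difference: joint effect minus the two solo effects
            delta = v(T | {i, j}) - v(T | {i}) - v(T | {j}) + v(T)
            total += weight * delta
    return total

# Hypothetical toy games: a pure synergy between players 0 and 1, and a purely additive game
def synergy(S):
    return 1.0 if {0, 1} <= S else 0.0

def additive(S):
    return 0.5 * (0 in S) + 0.5 * (1 in S)

print(pairwise_sii(synergy, 3, 0, 1))   # 1.0
print(pairwise_sii(additive, 3, 0, 1))  # 0.0
```

Unlike per-feature Shapley values, the pairwise index separates the synergistic game from the additive one. Exact enumeration costs 2^(n−2) evaluations per pair, which is why sampling-based estimators matter for real models.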

KernelSHAP-IQ constructs an optimal approximation of the Shapley Interaction Index through iterative k-additive approximations: it starts with first-order interactions and incrementally adds higher-order ones, using WLS optimization to capture feature interactions accurately. The approach was tested on various datasets and models, including the California Housing regression dataset, a sentiment analysis model on IMDB reviews, and image classifiers such as ResNet18 and a Vision Transformer. By sampling subsets of features and solving WLS problems, KernelSHAP-IQ provides a detailed representation of feature interactions while remaining computationally efficient.
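The idea of a k-additive approximation can be illustrated with a one-shot weighted least squares fit of a 2-additive surrogate, v(S) ≈ b₀ + Σᵢ aᵢ zᵢ + Σᵢ<ⱼ aᵢⱼ zᵢ zⱼ, using Shapley-kernel weights. This minimal Python sketch is our own simplification, not the authors’ algorithm: it enumerates all coalitions instead of sampling them, gives the empty and full coalitions an arbitrary large finite weight (1e6), and does not reproduce the paper’s iterative order-by-order procedure or its exact weighting. Function names and the toy game are hypothetical.

```python
import itertools
from math import comb
import numpy as np

def kernel_weight(n, s, big=1e6):
    # Shapley kernel; the empty and full coalitions get a large finite weight (assumption)
    if s in (0, n):
        return big
    return (n - 1) / (comb(n, s) * s * (n - s))

def fit_2_additive(v, n):
    """WLS fit of v(S) ≈ b0 + sum_i a_i z_i + sum_{i<j} a_ij z_i z_j over all coalitions."""
    pairs = list(itertools.combinations(range(n), 2))
    rows, targets, weights = [], [], []
    for s in range(n + 1):
        for S in itertools.combinations(range(n), s):
            z = np.zeros(n)
            z[list(S)] = 1.0
            # Features: intercept, single-feature indicators, pairwise products
            rows.append([1.0, *z, *(z[i] * z[j] for i, j in pairs)])
            targets.append(v(frozenset(S)))
            weights.append(kernel_weight(n, s))
    sw = np.sqrt(np.array(weights))
    X = np.array(rows) * sw[:, None]
    coef, *_ = np.linalg.lstsq(X, np.array(targets) * sw, rcond=None)
    singles = coef[1:n + 1]
    return singles, dict(zip(pairs, coef[n + 1:]))

# Hypothetical 2-additive toy game: four main effects plus two pairwise terms
def game(S):
    return (1.0 * (0 in S) + 2.0 * (1 in S) + 3.0 * (2 in S) + 0.5 * (3 in S)
            + 1.5 * ({0, 1} <= S) - 1.0 * ({2, 3} <= S))

singles, interactions = fit_2_additive(game, 4)
print(np.round(singles, 6))                      # ≈ [1, 2, 3, 0.5]
print(interactions[(0, 1)], interactions[(2, 3)])  # ≈ 1.5 and -1.0
```

When the game really is 2-additive, as here, the fit recovers the coefficients exactly, and each pairwise coefficient coincides with that pair’s Shapley Interaction Index. For general models, the coefficients of such a plain surrogate fit need not equal the SII, which motivates a more careful construction like the one described above.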

The performance of KernelSHAP-IQ has been evaluated across various datasets and model classes, with state-of-the-art results. For instance, in experiments on the California Housing regression dataset, KernelSHAP-IQ substantially reduced the mean squared error (MSE) of its interaction-value estimates compared with baseline methods, achieving an MSE of 0.20 versus 0.39 and 0.59 for existing techniques. Furthermore, KernelSHAP-IQ identified the ten highest interaction scores with high precision in tasks involving sentiment analysis models and image classifiers. The empirical evaluations highlighted the method’s ability to capture and accurately represent higher-order interactions, which are crucial for understanding complex model behavior. Overall, KernelSHAP-IQ consistently provided more accurate and interpretable results, improving the understanding of model dynamics.
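The two evaluation criteria mentioned here can be made concrete. Below is a minimal Python sketch of the mean squared error between estimated and ground-truth interaction values, and of precision at k: the share of the k truly strongest interactions that an estimator also places in its own top k. Ranking interactions by absolute value is our assumption, and the example vectors are made up; the paper’s exact evaluation protocol may differ.

```python
import numpy as np

def mse(estimate, ground_truth):
    """Mean squared error between estimated and ground-truth interaction values."""
    estimate, ground_truth = np.asarray(estimate), np.asarray(ground_truth)
    return float(np.mean((estimate - ground_truth) ** 2))

def precision_at_k(estimate, ground_truth, k=10):
    """Fraction of the k largest ground-truth interactions (by magnitude) also in the estimate's top k."""
    top_est = set(np.argsort(-np.abs(estimate))[:k])
    top_true = set(np.argsort(-np.abs(ground_truth))[:k])
    return len(top_est & top_true) / k

# Made-up interaction values for four feature pairs
est = [0.9, 0.1, 0.8, 0.0]
truth = [1.0, 0.9, 0.1, 0.0]
print(mse(est, truth))                  # ≈ 0.285
print(precision_at_k(est, truth, k=2))  # 0.5
```

Applied to the reported California Housing figures, an MSE of 0.20 versus 0.39 and 0.59 corresponds to reductions of roughly 49% and 66%.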

In conclusion, the research introduced KernelSHAP-IQ, a method for capturing higher-order feature interactions in machine learning models using iterative k-additive approximations and weighted least squares optimization. Tested on various datasets, KernelSHAP-IQ demonstrated improved interpretability and accuracy. This work addresses a critical gap in model interpretability by effectively quantifying complex feature interactions, providing a more comprehensive understanding of model behavior. The advancements made by KernelSHAP-IQ contribute significantly to explainable AI, enabling greater transparency and trust in machine learning systems.

All credit for this research goes to the researchers of this project.

The post This AI Paper Introduces KernelSHAP-IQ: Weighted Least Square Optimization for Shapley Interactions appeared first on MarkTechPost.
