The field of AI involves the development of systems that can perform tasks that normally require human intelligence. These tasks include language translation, speech recognition, and decision-making. Researchers in this domain build models and tools that process and analyze large datasets efficiently.

A significant challenge in AI is creating models that accurately understand and generate human language. Traditional models often struggle with context and nuance, which makes communication and interaction less effective. Addressing these issues is crucial for advancing human-computer interaction and for applying AI more broadly in customer service, content creation, and automated decision-making. Improving the performance and accuracy of these models is essential for realizing AI’s full potential.

Existing methods for language modeling involve extensive training on large datasets. Transformer models, in particular, have gained widespread adoption due to their ability to manage complex language tasks effectively. These models leverage a mechanism known as attention, allowing them to weigh the importance of different parts of the input data. Despite their success, these models can be resource-intensive and require substantial fine-tuning to achieve optimal performance. This need for resources and tuning can hinder wider adoption and practical application.
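To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are made-up toy values and the function names are illustrative. It is not how Mistral-7B implements attention internally, only the basic weighting idea described above.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy input: three token positions with 2-dimensional keys and values.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)
print(output)
```

Positions whose keys align with the query receive larger weights, so their values contribute more to the output.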

In collaboration with Hugging Face, researchers from Mistral AI introduced the Mistral-7B-Instruct-v0.3 model, an instruct fine-tuned version of Mistral-7B-v0.3. The model has been fine-tuned specifically for instruction-based tasks to improve language generation and understanding. It includes an expanded vocabulary and support for new features such as function calling.

Mistral-7B-v0.3 has the following changes compared to Mistral-7B-v0.2:

Extended vocabulary to 32,768 tokens: Enhances the model’s ability to understand and generate diverse language inputs.

Supports the v3 tokenizer: Improves efficiency and accuracy in language processing.

Supports function calling: Enables the model to request calls to predefined functions, which the calling application then executes.

The Mistral-7B-Instruct-v0.3 model inherits these enhancements. Its vocabulary of 32,768 tokens is larger than that of its predecessors, which helps it understand and generate a more diverse range of language inputs, and the v3 tokenizer further improves how accurately it processes text. Function calling is the other key addition: the model can produce a structured request to call a predefined function, and the surrounding application runs that function and returns the result. This is particularly useful in dynamic interaction scenarios and when working with real-time data.
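One common way to wire up function calling is for the model to emit a structured request that the surrounding application parses and runs. The sketch below illustrates that application side only. The `get_current_weather` tool, the `TOOLS` registry, and the JSON shape of the call are all hypothetical, chosen for illustration; they are not the exact format produced by Mistral-7B-Instruct-v0.3 or by the `mistral_inference` library.

```python
import json

def get_current_weather(location: str, unit: str = "celsius") -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"22 degrees {unit} in {location}"

# Registry of functions the application is willing to run on the model's behalf.
TOOLS = {"get_current_weather": get_current_weather}

def dispatch_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"model requested an unknown tool: {name}")
    return TOOLS[name](**call["arguments"])

# Stand-in for model output; the JSON shape is an assumption for illustration.
model_output = '{"name": "get_current_weather", "arguments": {"location": "Paris"}}'
result = dispatch_tool_call(model_output)
print(result)
```

Keeping an explicit registry means the application only ever runs functions it has opted into, regardless of what name the model produces.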

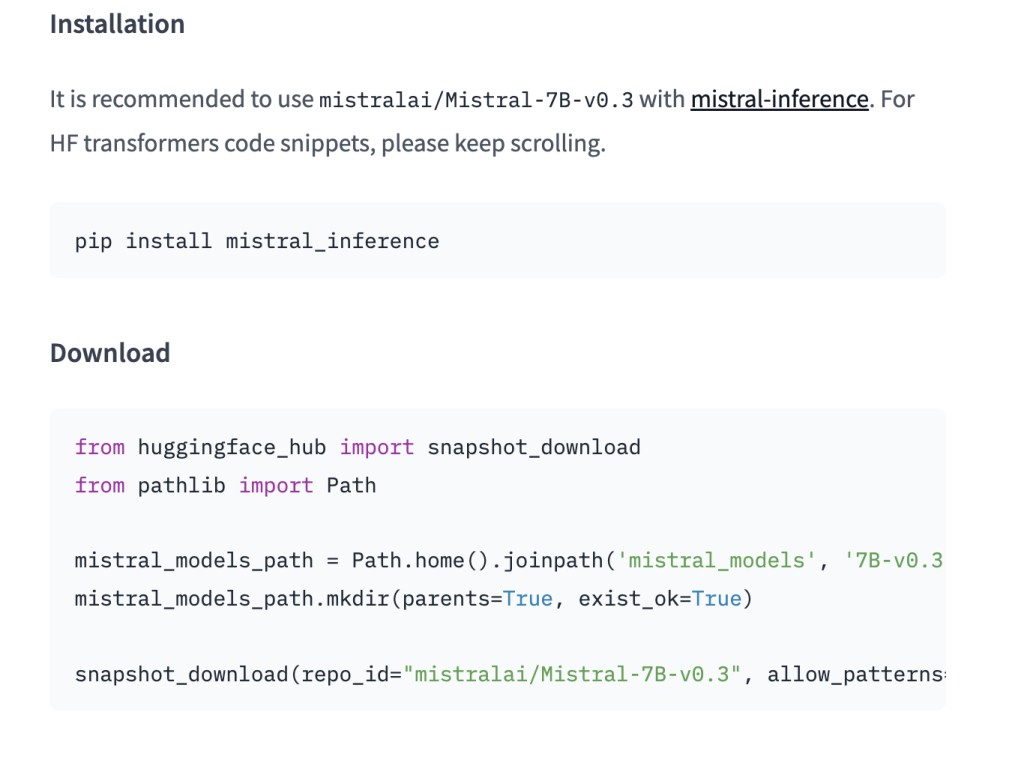

Installation

pip install mistral_inference

Download from Hugging Face

from huggingface_hub import snapshot_download

from pathlib import Path

mistral_models_path = Path.home().joinpath('mistral_models', '7B-Instruct-v0.3')

mistral_models_path.mkdir(parents=True, exist_ok=True)

snapshot_download(repo_id="mistralai/Mistral-7B-Instruct-v0.3", allow_patterns=["params.json", "consolidated.safetensors", "tokenizer.model.v3"], local_dir=mistral_models_path)

Mistral-7B-Instruct-v0.3 is reported to improve on earlier versions. It generates coherent and contextually appropriate text from user instructions, and in practical tests it handled complex language tasks better than previous models. The model has roughly 7.25 billion parameters. However, it currently lacks moderation mechanisms, which are essential for deployment in environments that require moderated outputs to avoid inappropriate or harmful content.
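As a rough guide to what a model of about 7.25 billion parameters means in practice, the sketch below estimates the memory needed just to hold the weights. The bytes-per-parameter values are general properties of the listed number formats, not figures from the release, and the estimate ignores activations, the attention cache, and framework overhead.

```python
PARAMETERS = 7.25e9  # approximate parameter count quoted above

# Bytes per parameter for common storage formats (assumption: weights only).
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}

def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

for dtype, size in BYTES_PER_PARAM.items():
    print(f"{dtype}: {weight_memory_gb(PARAMETERS, size):.2f} GB")
```

Under these assumptions, 16-bit weights need about 14.5 GB, which is why lower-precision formats are often used to fit 7B-class models on a single consumer GPU.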

In conclusion, the Mistral-7B-Instruct-v0.3 model addresses challenges in language understanding and generation through a series of targeted improvements: an expanded vocabulary, v3 tokenizer support, and function calling. Its results point to a meaningful impact on a range of AI-driven applications. Continued development and community engagement will be crucial to refining the model further, particularly in adding the moderation mechanisms needed for safe deployment.

Sources

https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.3

https://huggingface.co/mistralai/Mistral-7B-v0.3

Mistral-7B v0.3 has been released (Reddit post by u/remixer_dec in r/LocalLLaMA)

The post Mistral AI Team Releases The Mistral-7B-Instruct-v0.3: An Instruct Fine-Tuned Version of the Mistral-7B-v0.3 appeared first on MarkTechPost.
