Generative AI models, particularly Large Language Models (LLMs), have seen a surge in adoption across various industries, transforming the software development landscape. As enterprises and startups increasingly integrate LLMs into their workflows, the future of programming is set to undergo significant changes.

Historically, symbolic programming has dominated: developers write symbolic code to express the logic for a task or problem. However, the rapid adoption of LLMs has sparked interest in a new paradigm, neurosymbolic programming, which combines neural networks with traditional symbolic code to build sophisticated algorithms and applications.

LLMs take text as input and produce text as output, so prompt engineering is currently the primary way of programming them. This approach depends on constructing the right input prompts, a task that can be complex and tedious. Building prompts out of existing code constructs also tends to reduce code readability and maintainability. To address these challenges, several open-source libraries and research efforts, such as LangChain, Guidance, LMQL, and SGLang, have emerged. These tools aim to simplify prompt construction and LLM programming, but they still require developers to decide manually which type of prompt to use and what information to include.

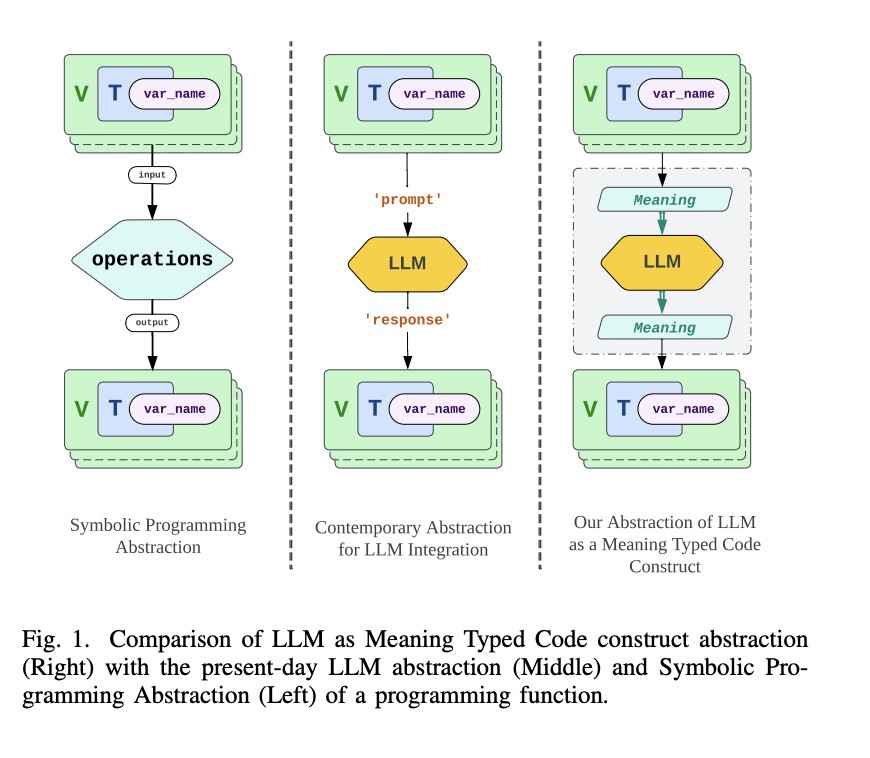

The complexity of LLM programming stems largely from the lack of a suitable abstraction for interfacing with these models. In conventional symbolic programming, operations are performed directly on variables or typed values. LLMs, however, operate on text strings, so variables must be converted into prompts and LLM outputs must be parsed back into variables. This conversion adds logic and complexity of its own, and it highlights a fundamental mismatch between LLM abstractions and conventional symbolic programming.
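To make the mismatch concrete, here is a minimal Python sketch of the manual round trip from a typed value to a prompt and back. The `Person` type, the prompt wording, and `fake_llm` (a canned stand-in for a real model call) are all assumptions made for illustration and do not come from the paper.

```python
import json
from dataclasses import dataclass


@dataclass
class Person:
    name: str
    birth_year: int


def build_prompt(name: str) -> str:
    # Variable -> text: the developer hand-writes the prompt and the output format.
    return (
        f"Give the birth year of {name}. "
        'Reply with JSON only, e.g. {"name": "...", "birth_year": 1900}.'
    )


def parse_response(text: str) -> Person:
    # Text -> variable: the developer hand-writes parsing and type coercion.
    data = json.loads(text)
    return Person(name=str(data["name"]), birth_year=int(data["birth_year"]))


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; always returns the same reply.
    return '{"name": "Ada Lovelace", "birth_year": "1815"}'


person = parse_response(fake_llm(build_prompt("Ada Lovelace")))
print(person)
```

Only the last line of logic expresses what the program wants; `build_prompt` and `parse_response` exist purely to bridge typed values and strings.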

To address this, a new approach proposes treating LLMs as native code constructs and providing syntax support at the programming language level. This approach introduces a new type of “meaning” to serve as the abstraction for LLM interactions. “Meaning” refers to the semantic purpose behind the symbolic data (strings) used as LLM inputs and outputs. The language runtime should automate the translation between conventional code constructs and meanings, termed Meaning-type Transformations (MTT), to reduce the burden on developers.

A novel language feature, Semantic Strings (semstrings), is introduced to enable developers to annotate existing code constructs with additional context. Semstrings allow for the seamless integration of LLMs by providing necessary context and information, facilitating the Automatic Meaning-type Transformation (A-MTT). This automation abstracts the complexity of prompt generation and response parsing, making it easier for developers to leverage LLMs in their code.
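The article does not show the proposed syntax, so the following is only a rough Python analogue of the idea rather than the researchers' implementation. It assumes `typing.Annotated` as a stand-in for semstrings, and the helpers `describe` and `instantiate` and the canned `fake_llm` are hypothetical names introduced here. The point is that once fields carry their own semantic annotations, a runtime can derive the prompt and parse the reply without hand-written glue.

```python
import json
from dataclasses import dataclass, fields
from typing import Annotated, Callable, get_args, get_type_hints


@dataclass
class Person:
    # The string in each Annotated[...] plays the role of a semantic string.
    name: Annotated[str, "Full name of the person"]
    birth_year: Annotated[int, "Year the person was born"]


def describe(cls) -> str:
    # Turn field names, types, and annotations into prompt text.
    hints = get_type_hints(cls, include_extras=True)
    lines = []
    for f in fields(cls):
        base, meaning = get_args(hints[f.name])[:2]
        lines.append(f"- {f.name} ({base.__name__}): {meaning}")
    return "\n".join(lines)


def instantiate(cls, llm: Callable[[str], str], **known):
    # Automated round trip: type -> prompt -> model reply -> typed object.
    hints = get_type_hints(cls, include_extras=True)
    prompt = (
        f"Create a {cls.__name__} as a JSON object with these fields:\n"
        f"{describe(cls)}\n"
        f"Known values: {json.dumps(known)}"
    )
    raw = json.loads(llm(prompt))
    # Coerce each field to its declared base type.
    values = {name: get_args(hint)[0](raw[name]) for name, hint in hints.items()}
    return cls(**values)


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; always returns the same reply.
    return '{"name": "Ada Lovelace", "birth_year": "1815"}'


print(instantiate(Person, fake_llm, name="Ada Lovelace"))
```

Compared with hand-written prompt and parsing code, the developer here only annotates the type; the generic helpers do the rest for any similarly annotated dataclass.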

Using real code examples, the researchers show how A-MTT streamlines common symbolic code operations, such as instantiating objects of custom types, calling standalone functions, and invoking class member methods. These new abstractions and language features are intended to make the integration of LLMs into conventional symbolic programming more efficient and maintainable, and to make programming with generative AI models more accessible and less cumbersome for developers.
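For the standalone function case, a comparable Python sketch might look like the following. This is an illustrative assumption and not the paper's syntax: the `by_llm` decorator and `fake_llm` are hypothetical names, and the model reply is canned. The function's docstring, arguments, and return annotation are used to build the prompt and to convert the reply back into a typed value.

```python
import functools
import inspect
import json
from typing import Callable


def by_llm(llm: Callable[[str], str]):
    # Hypothetical decorator: delegates the function body to a model call.
    def decorator(func):
        sig = inspect.signature(func)

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            bound = sig.bind(*args, **kwargs)
            prompt = (
                f"Task: {inspect.getdoc(func)}\n"
                f"Inputs: {json.dumps(dict(bound.arguments))}\n"
                f"Reply with a JSON value of type {sig.return_annotation.__name__}."
            )
            # Parse the reply and coerce it to the declared return type.
            return sig.return_annotation(json.loads(llm(prompt)))

        return wrapper

    return decorator


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; always returns the same reply.
    return "5"


@by_llm(fake_llm)
def count_vowels(text: str) -> int:
    """Count the vowels in the given text."""


print(count_vowels("generative"))
```

The decorated function has no body of its own; its signature and docstring carry all the information the wrapper needs, which is the kind of glue code the proposed language-level support aims to remove.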

Check out the Paper. All credit for this research goes to the researchers of this project.

The post The AI-Powered Code Revolution: Bridging Traditional and Neurosymbolic Programming appeared first on MarkTechPost.
