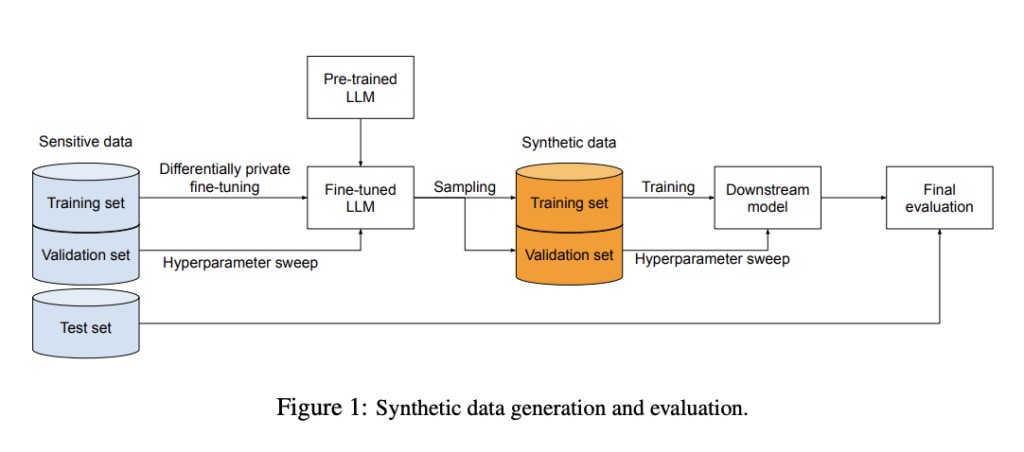

Google AI researchers describe a new approach to generating high-quality synthetic datasets that preserve user privacy, which are essential for training predictive models without compromising sensitive information. As machine learning models increasingly rely on large datasets, protecting the privacy of the individuals whose data contributes to these models becomes crucial. Differentially private synthetic data addresses this by creating new datasets that reflect the key characteristics of the original data but are entirely artificial, protecting user privacy while still enabling robust model training.

Current methods for privacy-preserving data generation train models directly with differentially private machine learning (DP-ML) algorithms, which provide strong privacy guarantees. However, on high-dimensional datasets that are used for a variety of tasks, this approach can be computationally demanding and does not always produce high-quality results. Previous work, such as “Harnessing large-language models”, has combined large language models (LLMs) with differentially private stochastic gradient descent (DP-SGD) to generate private synthetic data. That method takes an LLM trained on public data and fine-tunes it with DP-SGD on a sensitive dataset, so that the generated synthetic data does not reveal specific information about any individual in the sensitive dataset.
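
To make the DP-SGD step concrete, here is a minimal sketch of a single update in plain Python. It follows the usual textbook recipe (clip each example's gradient, sum, add Gaussian noise, average) and is not taken from the researchers' code. The function names, the toy gradients, and the hyperparameter values are all illustrative assumptions.

```python
import math
import random


def clip_gradient(grad, clip_norm):
    """Scale one example's gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update: clip per example, sum, add noise, average, step."""
    batch_size = len(per_example_grads)
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # Gaussian noise with standard deviation noise_multiplier * clip_norm
    # is added to the summed gradient, one draw per coordinate.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


# Toy usage with hypothetical values (two parameters, two examples).
rng = random.Random(0)
params = [0.0, 0.0]
per_example_grads = [[3.0, 4.0], [0.3, 0.4]]
new_params = dp_sgd_step(params, per_example_grads, lr=0.1,
                         clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

Clipping bounds how much any single example can move the parameters, and the noise is scaled to that bound. The actual privacy guarantee also depends on the sampling scheme and on privacy accounting over all training steps, which this sketch leaves out.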

Google’s researchers proposed an enhanced approach to generating differentially private synthetic data that uses parameter-efficient fine-tuning techniques, such as LoRA (Low-Rank Adaptation) and prompt fine-tuning. These techniques modify a smaller number of parameters during private training, which reduces computational overhead and can improve the quality of the synthetic data.

The first step of the approach is to train an LLM on a large corpus of public data. The LLM is then fine-tuned using DP-SGD on the sensitive dataset, with the fine-tuning process restricted to a subset of the model’s parameters. LoRA fine-tuning replaces each weight matrix W in the model with W + LR, where L and R are low-rank matrices, and trains only L and R. Prompt fine-tuning, on the other hand, inserts a “prompt tensor” at the start of the network and trains only its weights, effectively modifying only the input prompt used by the LLM.
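
The two parameter-efficient schemes can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the researchers' implementation: the matrix sizes, the rank, the zero initialization of R, and the choice to prepend the prompt tensor to the token embeddings are all assumptions made for the example, and the helper names are hypothetical.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(w, l_mat, r_mat):
    """Effective weight W + L R; W stays frozen, only L and R are trained."""
    delta = matmul(l_mat, r_mat)
    return [[wv + dv for wv, dv in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def prepend_prompt(prompt, token_embeddings):
    """Prompt tuning: place trainable prompt vectors ahead of the token embeddings."""
    return prompt + token_embeddings


rng = random.Random(0)
d_out, d_in, rank = 64, 64, 2  # illustrative sizes, not from the article

w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]         # frozen
l_mat = [[rng.gauss(0, 0.01) for _ in range(rank)] for _ in range(d_out)]  # trainable
r_mat = [[0.0] * d_in for _ in range(rank)]  # trainable; zero init is an assumption

effective = lora_weight(w, l_mat, r_mat)
full_params = d_out * d_in            # parameters touched by full fine-tuning
lora_params = rank * (d_out + d_in)   # parameters touched by LoRA

prompt = [[0.0] * d_in for _ in range(4)]    # trainable prompt tensor
tokens = [[1.0] * d_in for _ in range(10)]   # stand-in token embeddings
extended = prepend_prompt(prompt, tokens)
prompt_params = len(prompt) * d_in
```

With these toy sizes, full fine-tuning would update 4,096 values in this one matrix, while LoRA updates 256. Because R starts at zero, W + LR equals W before any training, so fine-tuning begins from the public model's behavior.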

Empirical results showed that LoRA fine-tuning, which modifies roughly 20 million parameters, outperforms both full-parameter fine-tuning and prompt-based tuning, which modifies only about 41 thousand parameters. This suggests that there is an optimal number of trainable parameters that balances the trade-off between computational efficiency and data quality. Classifiers trained on synthetic data generated by LoRA fine-tuned LLMs outperformed those trained on synthetic data from other fine-tuning methods and, in some cases, classifiers trained directly on the original sensitive data using DP-SGD. To evaluate the proposed approach, a decoder-only LLM (LaMDA-8B) was trained on public data and then privately fine-tuned on three publicly available datasets (IMDB, Yelp, and AG News), which were treated as sensitive. The generated synthetic data was used to train classifiers on tasks such as sentiment analysis and topic classification. The classifiers’ performance on held-out subsets of the original data demonstrated the efficacy of the proposed method.
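
The evaluation protocol described here (train a classifier on synthetic data, then score it on held-out real data) can be sketched as follows. The tiny word-count classifier and the example sentences are hypothetical stand-ins chosen to keep the code self-contained; they are not the classifiers or datasets used in the experiments.

```python
from collections import Counter


def train_word_counts(texts, labels):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in zip(texts, labels):
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts


def predict(counts, text):
    """Pick the label whose training texts share the most word occurrences."""
    words = text.lower().split()
    return max(sorted(counts), key=lambda label: sum(counts[label][w] for w in words))


def accuracy(counts, texts, labels):
    """Fraction of texts whose predicted label matches the true label."""
    hits = sum(predict(counts, t) == y for t, y in zip(texts, labels))
    return hits / len(texts)


# Hypothetical stand-ins: in the described setup these would be the
# LLM-generated synthetic examples and a held-out slice of the real dataset.
synthetic_texts = ["great fun movie", "loved the great acting",
                   "boring and awful plot", "awful waste of time"]
synthetic_labels = ["pos", "pos", "neg", "neg"]
heldout_texts = ["great acting", "awful plot"]
heldout_labels = ["pos", "neg"]

model = train_word_counts(synthetic_texts, synthetic_labels)  # train on synthetic only
score = accuracy(model, heldout_texts, heldout_labels)        # test on real held-out data
```

The point of the setup is that the classifier never sees the sensitive training data directly, so its held-out accuracy measures how much useful signal the synthetic data carries.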

In conclusion, Google’s approach to generating differentially private synthetic data with parameter-efficient fine-tuning outperformed existing methods in the reported experiments. By fine-tuning a smaller subset of parameters, the method reduces computational requirements and improves the quality of the synthetic data. The approach preserves privacy while maintaining high utility for training predictive models, making it a valuable tool for organizations that want to use sensitive data without compromising user privacy. The empirical results suggest its potential for broader applications in privacy-preserving machine learning.


The post Google AI Described New Machine Learning Methods for Generating Differentially Private Synthetic Data appeared first on MarkTechPost.
