Large language models (LLMs) excel at natural language tasks and instruction following, yet they struggle with non-textual data such as images and audio. Incorporating speech comprehension could vastly improve human-computer interaction. Current methods typically run automatic speech recognition (ASR) first and pass the transcript to an LLM, which discards the non-textual cues carried in the audio. A promising alternative integrates a textual LLM with a speech encoder in a single training setup, so the model can draw on both speech and text and achieve richer comprehension than text-only methods. In particular, instruction-following multimodal audio-language models are gaining traction because of their ability to generalize across tasks. While previous works like SpeechT5, Whisper, VIOLA, SpeechGPT, and SLM show promise, they are constrained to a limited range of speech tasks.

Multi-task learning involves leveraging shared representations across diverse tasks to enhance generalization and efficiency. Models like T5 and SpeechNet employ this approach for text and speech tasks, achieving significant results. However, multimodal large language models integrating audio have garnered less attention. Recent efforts like SpeechGPT and Qwen-Audio aim to bridge this gap, showcasing capabilities in various audio tasks. SpeechVerse innovatively combines multi-task learning and instruction finetuning to achieve superior performance in audio-text tasks.

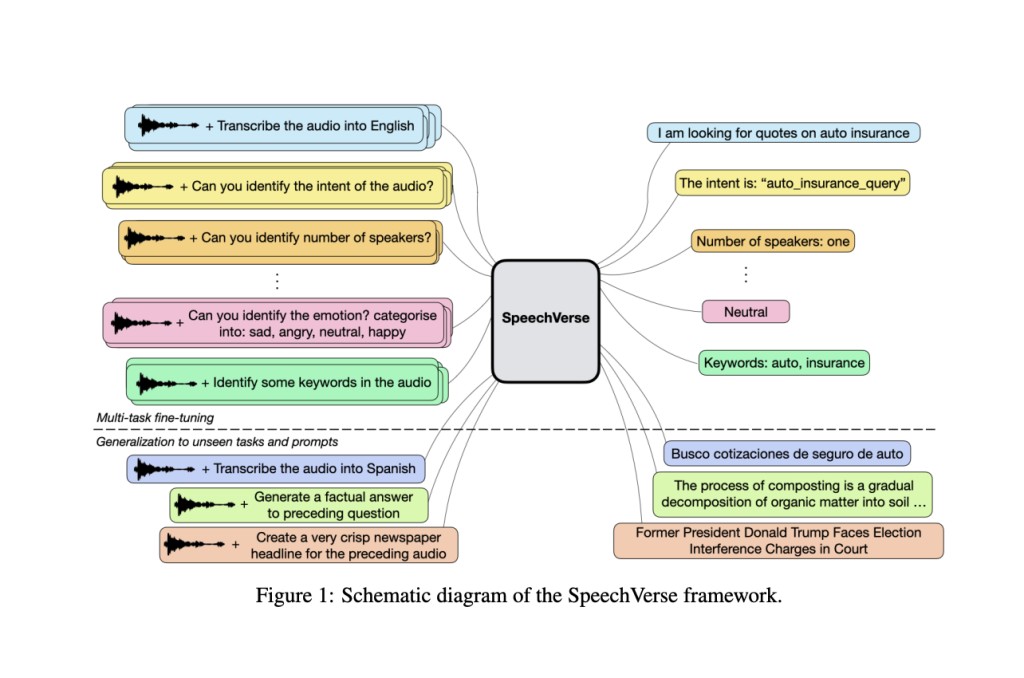

Amazon researchers introduce SpeechVerse, a multi-task framework with supervised instruction finetuning for diverse speech tasks. Unlike SpeechGPT, it utilizes continuous representations from pre-trained speech models for text-only output tasks. In comparison to Qwen-Audio, which requires hierarchical tagging and a large-scale audio encoder, SpeechVerse incorporates multi-task learning and finetuning without task-specific tagging, enabling generalization to unseen tasks through natural language instructions.

The SpeechVerse architecture comprises three components: an audio encoder, a convolutional downsampling module, and an LLM. The audio encoder, a pre-trained model, extracts semantic features from the audio and produces a unified representation. The downsampling module shortens the audio feature sequence so that it is compatible with LLM token sequences. The LLM then processes text and audio input together, combining the downsampled audio features with the token embeddings. Training uses curriculum learning with parameter-efficient finetuning, keeping the pre-trained components frozen so that diverse speech tasks can be handled efficiently.
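
The data flow described above can be illustrated with a minimal sketch in plain Python. It is not the SpeechVerse implementation: the function names `conv_downsample` and `build_llm_input` are hypothetical, the kernel is a fixed averaging filter applied per feature dimension rather than a learned convolution, and placing the audio features before the text embeddings is an assumption made only for illustration.

```python
from typing import List

Vector = List[float]


def conv_downsample(frames: List[Vector], kernel: List[float], stride: int) -> List[Vector]:
    """Strided 1-D convolution over time, applied to each feature dimension.

    Simplification: a real module would use learned weights that also mix
    feature channels; here the kernel is fixed and shared across dimensions.
    """
    k = len(kernel)
    out: List[Vector] = []
    # Slide the kernel over the time axis, jumping `stride` frames each step.
    for start in range(0, len(frames) - k + 1, stride):
        window = frames[start:start + k]
        dim = len(window[0])
        out.append([sum(kernel[i] * window[i][d] for i in range(k)) for d in range(dim)])
    return out


def build_llm_input(audio_frames: List[Vector],
                    text_embeddings: List[Vector],
                    kernel: List[float],
                    stride: int) -> List[Vector]:
    """Shorten the audio sequence, then join it with the text token embeddings."""
    downsampled = conv_downsample(audio_frames, kernel, stride)
    if downsampled and text_embeddings and len(downsampled[0]) != len(text_embeddings[0]):
        raise ValueError("audio and text embeddings must share one dimension")
    # Assumption: audio features come first, followed by the instruction tokens.
    return downsampled + text_embeddings


# Toy data: 8 audio frames and 3 text token embeddings, both 2-dimensional.
audio_frames = [[float(t), float(2 * t)] for t in range(8)]
text_embeddings = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]

llm_input = build_llm_input(audio_frames, text_embeddings, kernel=[0.5, 0.5], stride=2)
print(len(audio_frames), "audio frames ->", len(llm_input) - len(text_embeddings), "after downsampling")
```

With a stride of 2, the eight audio frames shrink to four before being joined with the three text embeddings, which is the sense in which downsampling brings the audio sequence closer to the length of a token sequence.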

The evaluation of end-to-end trained joint speech and language models (E2E-SLM) built with the SpeechVerse framework covers 11 tasks spanning various domains and datasets. ASR benchmarks indicate that the core speech understanding of SpeechVerse is effective, with the task-specific pre-trained ASR models showing promising results. On spoken language understanding (SLU) tasks, the end-to-end trained models outperform cascaded pipelines in most cases. SpeechVerse models also perform competitively with, or better than, state-of-the-art models across diverse tasks such as ASR, speech translation (ST), intent classification (IC), slot filling (SF), and emotion recognition (ER).

To recap, Amazon researchers introduce SpeechVerse, a multimodal framework that enables LLMs to execute diverse speech processing tasks through natural language instructions. By using supervised instruction finetuning and combining representations from pre-trained speech and text models, SpeechVerse exhibits strong zero-shot generalization on unseen tasks. Comparison against conventional baselines shows SpeechVerse performing better on 9 out of 11 tasks, which underscores its robust instruction-following capability. The model also holds up across out-of-domain datasets, unseen prompts, and novel tasks, highlighting the effectiveness of the proposed training approach in fostering generalizability.

The post SpeechVerse: A Multimodal AI Framework that Enables LLMs to Follow Natural Language Instructions for Performing Diverse Speech-Processing Tasks appeared first on MarkTechPost.
