The primary goal of AI is to create interactive systems capable of solving diverse problems, including those in medical AI aimed at improving patient outcomes. Large language models (LLMs) have demonstrated significant problem-solving abilities, surpassing human scores on exams like the USMLE. While LLMs can enhance healthcare accessibility, they still face limitations in real-world clinical settings due to the complexity of clinical tasks involving sequential decision-making, handling uncertainty, and compassionate patient care. Current evaluations mostly focus on static multiple-choice questions, not fully capturing the dynamic nature of clinical work.

The USMLE assesses medical students across foundational knowledge, clinical application, and independent practice skills. In contrast, the Objective Structured Clinical Examination (OSCE) evaluates practical clinical skills through simulated scenarios, offering direct observation and a comprehensive assessment. Language models in medicine are primarily evaluated using knowledge-based benchmarks like MedQA, which consists of challenging medical question-answering pairs. Recent efforts focus on refining language models’ applications in healthcare through red teaming and creating new benchmarks like EquityMedQA to address biases and improve evaluation methods. Also, advancements in clinical decision-making simulations, such as AMIE, show promise in enhancing diagnostic accuracy in medical AI.

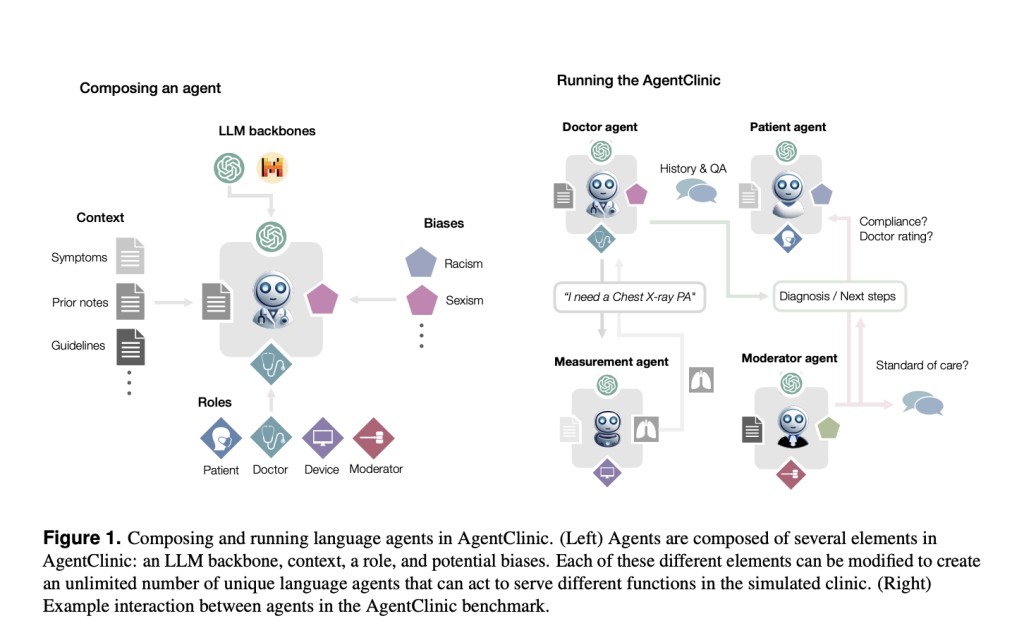

Researchers from Stanford University, Johns Hopkins University, and Hospital Israelita Albert Einstein present AgentClinic, an open-source benchmark that simulates clinical environments with language agents playing the patient, doctor, and measurement roles. It extends previous simulations by adding medical exams (e.g., temperature, blood pressure) and the ordering of medical images (e.g., MRI, X-ray) through dialogue. AgentClinic also supports 24 biases found in clinical settings.

AgentClinic introduces four language agents: patient, doctor, measurement, and moderator. Each agent has specific roles and unique information for simulating clinical interactions. The patient agent provides symptom information without knowing the diagnosis, the measurement agent offers medical readings and test results, the doctor agent evaluates the patient and requests tests, and the moderator assesses the doctor’s diagnosis. AgentClinic also includes 24 biases relevant to clinical settings. The agents are built using curated medical questions from the USMLE and NEJM case challenges to create structured scenarios for evaluation using language models like GPT-4.
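The division of roles described above can be sketched as a small simulation loop. This is a minimal illustration under stated assumptions, not AgentClinic's actual code: the class names, the `Scenario` fields, the `REQUEST TEST:` and `DIAGNOSIS:` message prefixes, the `max_turns` parameter, and the scripted doctor are all hypothetical stand-ins for what would be language-model calls in the real benchmark.

```python
from dataclasses import dataclass

TEST_PREFIX = "REQUEST TEST:"      # hypothetical marker for a test request
DIAGNOSIS_PREFIX = "DIAGNOSIS:"    # hypothetical marker for the final answer


@dataclass
class Scenario:
    """Hypothetical case record; each agent sees only part of it."""
    symptoms: dict       # visible to the patient agent
    test_results: dict   # visible to the measurement agent
    diagnosis: str       # visible only to the moderator agent


class PatientAgent:
    def __init__(self, scenario):
        self._symptoms = scenario.symptoms  # no access to the diagnosis

    def reply(self, question):
        # Stand-in for an LLM call: answer from known symptoms by keyword.
        for topic, answer in self._symptoms.items():
            if topic in question.lower():
                return answer
        return "I'm not sure."


class MeasurementAgent:
    def __init__(self, scenario):
        self._results = scenario.test_results

    def reply(self, test_name):
        return self._results.get(test_name.lower(), "Result unavailable.")


class ModeratorAgent:
    def __init__(self, scenario):
        self._diagnosis = scenario.diagnosis

    def is_correct(self, proposed):
        # Stand-in for an LLM judgment: case-insensitive exact match.
        return proposed.strip().lower() == self._diagnosis.lower()


class ScriptedDoctor:
    """Placeholder doctor that plays back fixed messages instead of an LLM."""
    def __init__(self, script):
        self._script = iter(script)

    def next_message(self, last_reply):
        # Falls back to an empty diagnosis once the script is exhausted.
        return next(self._script, DIAGNOSIS_PREFIX + " unknown")


def run_consultation(doctor, scenario, max_turns=20):
    """Run one doctor-patient dialogue; return True if the diagnosis is correct."""
    patient = PatientAgent(scenario)
    measurement = MeasurementAgent(scenario)
    moderator = ModeratorAgent(scenario)
    last_reply = ""
    for _ in range(max_turns):
        message = doctor.next_message(last_reply)
        if message.startswith(DIAGNOSIS_PREFIX):
            return moderator.is_correct(message[len(DIAGNOSIS_PREFIX):])
        if message.startswith(TEST_PREFIX):
            last_reply = measurement.reply(message[len(TEST_PREFIX):].strip())
        else:
            last_reply = patient.reply(message)
    return False  # ran out of turns without committing to a diagnosis
```

The point of the sketch is the information split: the patient object never holds the diagnosis, the measurement object only returns readings, and only the moderator can compare the doctor's final answer against the ground truth. Capping the loop with `max_turns` mirrors the idea of limiting the number of interactions per consultation.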

The accuracy of different language models (GPT-4, Mixtral-8x7B, GPT-3.5, and Llama 2 70B-chat) is evaluated on AgentClinic-MedQA, where each model acts as a doctor agent diagnosing patients through dialogue. GPT-4 achieved the highest accuracy at 52%, followed by GPT-3.5 at 38%, Mixtral-8x7B at 37%, and Llama 2 70B-chat at 9%. Comparison with MedQA accuracy showed weak predictability for AgentClinic-MedQA accuracy, similar to studies on medical residents’ performance relative to the USMLE.
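Accuracy in this setting is presumably the share of simulated cases in which the doctor agent's final diagnosis is judged correct. A minimal sketch of that calculation follows; the function name and the list-of-booleans input are assumptions made for illustration, not the benchmark's interface.

```python
def diagnostic_accuracy(verdicts):
    """Return the percentage of cases whose diagnosis was judged correct.

    `verdicts` is a hypothetical list with one boolean per simulated case.
    """
    if not verdicts:
        raise ValueError("at least one verdict is required")
    return 100.0 * sum(bool(v) for v in verdicts) / len(verdicts)
```

For example, with made-up verdicts for four cases, `diagnostic_accuracy([True, False, True, True])` returns `75.0`.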

To recapitulate, the researchers present AgentClinic, a benchmark for simulating clinical environments with 15 multimodal language agents and 107 unique language agents based on USMLE cases. These agents exhibit 24 biases, impacting diagnostic accuracy and patient-doctor interactions. GPT-4, the highest-performing model, shows reduced accuracy with cognitive biases (1.7%-2%) and with implicit biases (1.5%); the biases also affect patient follow-up willingness and confidence. Cross-communication between patient and doctor models improves accuracy. Limited or excessive interaction time decreases accuracy, with a 27% reduction at N=10 interactions and a 4%-9% reduction at N>20 interactions. GPT-4V achieves around 27% accuracy in a multimodal clinical environment based on NEJM cases.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post AgentClinic: Simulating Clinical Environments for Assessing Language Models in Healthcare appeared first on MarkTechPost.
